It feels like a new, mind-blowing AI model drops every other week. For businesses trying to figure out how to actually use this tech, it’s a lot to keep up with. The whole space is pretty much run by three major players right now: OpenAI, Anthropic, and Google. If you’re thinking about building AI into your company, especially for something as important as customer support, picking the right API is a huge decision.

This guide is here to help you sort through the noise. We’re going to compare these platforms based on what really matters for a business: how well they work, what they cost, and how much of a headache they are to set up. We'll look at what these raw models can do on their own, and then we’ll talk about a more practical way to get AI working for you.

An overview of the leading AI model APIs

First off, let's quickly clarify what we're talking about. An API (Application Programming Interface) is basically a bridge that lets developers connect their own software to these powerful AI models. So when you’re comparing the OpenAI API vs Anthropic API vs Gemini API, you're looking at the engines that power thousands of different AI tools.

What is the OpenAI API?

If you’ve ever used ChatGPT or watched a developer rave about GitHub Copilot, you’ve seen OpenAI’s work. They were the first ones to really capture the public's attention, and their API Platform is the most established and widely used. Their GPT models, like GPT-4.1 and GPT-4o, are known for being great all-rounders that are solid at reasoning, coding, and general conversation. Because they’ve been around the longest, there’s a huge community of developers, which means you can find a tutorial for just about anything.

What is the Anthropic API?

Anthropic was started by former OpenAI researchers who wanted to build AI with safety as a top priority. Their models are all named Claude, with Claude 4 Opus and Sonnet being the current heavy hitters. The Anthropic API has become popular with larger companies for a couple of good reasons: it's excellent at digesting and thinking about long documents, it's designed to be more reliable and less likely to invent facts, and its built-in safety features make it a safe bet for businesses in regulated fields.

What is the Google Gemini API?

Gemini is Google's entry into the AI race, and it was built from the start to be "natively multimodal." All that means is the Google Gemini API isn't just about text; it’s designed to understand images, video, and audio without breaking a sweat. It has a massive context window (we’ll get to that in a sec) and is tightly woven into Google's other products like Google Cloud and Workspace. It's a really strong contender for companies already using Google’s tools or those wanting to build something that goes beyond just words.

Comparing core capabilities and performance

Alright, enough with the definitions. Let's get into how these APIs actually compare on the features that will matter for your project.

Why a bigger context window isn't always better

You’ll hear the term "context window" thrown around a lot. It’s just the technical term for how much information a model can hold in its short-term memory during a single conversation. It's measured in "tokens," where about 1,000 tokens equals 750 words.

Both Google Gemini and the newest OpenAI models claim to have enormous 1,000,000+ token context windows. That sounds incredible, right? You could feed them a whole book. But there’s a catch. The model’s performance isn't always stable across that entire window. Tests have shown that accuracy can take a nosedive when you're pushing the upper limits. It's a bit like trying to listen in a crowded room: the more you try to take in at once, the harder it is to focus on what’s important.

Anthropic's Claude does things a bit differently. It offers a 200,000-token window, which is still massive: by the rule of thumb above, that's enough for a document of roughly 150,000 words. The key is that its performance is remarkably consistent from start to finish. For most business needs, like analyzing a long support thread or a legal contract, that kind of reliability is way more useful than a record-breaking but sometimes flaky context window.
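If you want a quick gut check on whether a document will fit, the rule of thumb above turns into a few lines of arithmetic. This is only a rough sketch: it assumes the 1,000-tokens-per-750-words ratio, the function names and the idea of reserving tokens for the model's reply are illustrative, and real tokenizers vary by model and language.

```python
# Rule of thumb from the text: about 1,000 tokens for every 750 words.
# This is an estimate only; actual token counts depend on the tokenizer.
TOKENS_PER_750_WORDS = 1000


def estimate_tokens(word_count: int) -> int:
    """Estimate tokens for a word count, rounding up with integer math."""
    return (word_count * TOKENS_PER_750_WORDS + 749) // 750


def fits_in_window(word_count: int, window_tokens: int, reserve_tokens: int = 0) -> bool:
    """Check whether a document fits while leaving room for the reply."""
    return estimate_tokens(word_count) + reserve_tokens <= window_tokens


if __name__ == "__main__":
    print(estimate_tokens(150_000))
    print(fits_in_window(150_000, 200_000))
    print(fits_in_window(150_000, 200_000, reserve_tokens=4_000))
```

By this estimate, a 150,000-word document comes out at about 200,000 tokens, so it only fits a 200,000-token window if nothing is set aside for the answer. In practice you would leave some headroom.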

Handling more than just text

Multimodality just means the ability to process different kinds of information, not only text. This is where Google's Gemini really stands out. It was built to handle images, audio, and even video. Imagine a customer support situation where someone could just send a photo of a broken product or a quick video of an error code. Gemini is designed for exactly that.

OpenAI’s GPT-4o has also gotten very good in this area and can handle images and audio quite well. Anthropic, while getting better, has historically focused more on perfecting text-based reasoning, so it's not usually the first choice for highly visual or audio-dependent tasks.

Function calling: Getting AI to talk to your systems

This is probably the most important feature for any real-world business use. "Function calling" or "tool use" is what lets an AI actually do things instead of just talking. It’s how an AI can check an order status in Shopify, update a ticket in Zendesk, or pull a customer's payment history from your database.

All three APIs can do this, but they each have their own style. OpenAI is good at requesting several tool calls in a single turn, which makes it fast. Claude is better at working through multi-step tasks one by one and is particularly good at explaining its thought process, which is helpful for troubleshooting.

The problem? Actually setting this up yourself is a major engineering project. You have to define every tool, handle the back-and-forth communication, manage errors, and feed the results back to the model correctly. This is a huge lift, which is why many teams use platforms like eesel AI that handle all of this complexity for you through a simple workflow builder.
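To make that list of chores concrete, here is a minimal, provider-agnostic sketch of the loop. Everything in it is an assumption for illustration: `get_order_status`, the message shapes, and `fake_model` (a stand-in for a real API call) are hypothetical, and each provider's actual request and response formats differ.

```python
import json
from typing import Callable


def get_order_status(order_id: str) -> dict:
    """Hypothetical tool; a real version would query your order system."""
    orders = {"A100": "shipped"}
    if order_id not in orders:
        raise KeyError(f"unknown order {order_id}")
    return {"order_id": order_id, "status": orders[order_id]}


# Step 1: define every tool the model is allowed to call.
TOOLS: dict[str, Callable[..., dict]] = {"get_order_status": get_order_status}


def run_tool(name: str, arguments: dict) -> str:
    """Run one requested tool call and always return a JSON string."""
    try:
        result = TOOLS[name](**arguments)
    except Exception as exc:
        # Step 3: report errors back to the model instead of crashing.
        result = {"error": f"{type(exc).__name__}: {exc}"}
    return json.dumps(result)


def run_conversation(model: Callable[[list], dict], user_message: str, max_turns: int = 5) -> str:
    """Step 2: handle the back-and-forth until the model gives a final answer."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):
        reply = model(messages)
        if reply["type"] == "text":
            return reply["content"]
        # Step 4: feed the tool result back so the model can use it.
        output = run_tool(reply["name"], reply["arguments"])
        messages.append({"role": "tool", "name": reply["name"], "content": output})
    return "Sorry, I could not complete that request."


def fake_model(messages: list) -> dict:
    """Stand-in for a real API call: requests a tool once, then answers."""
    if messages[-1]["role"] == "tool":
        status = json.loads(messages[-1]["content"]).get("status", "unknown")
        return {"type": "text", "content": f"Your order is {status}."}
    return {"type": "tool_call", "name": "get_order_status", "arguments": {"order_id": "A100"}}


if __name__ == "__main__":
    print(run_conversation(fake_model, "Where is my order A100?"))
```

Even this toy version needs a turn limit and an error path. A production setup adds authentication, retries, logging, and per-provider message formats on top.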

The business reality: Pricing and implementation

Okay, now for the two questions every business leader really cares about: "How much will this cost?" and "How hard is it to get running?"

A detailed pricing breakdown

All three platforms charge you based on how many tokens you use. You pay for the data you send to the model (input) and for the data it generates (output). It sounds straightforward, but it can make your monthly bill really unpredictable. A busy month for your support team could lead to a surprisingly large invoice.

Here’s a rough idea of how their pricing compares for their most popular models. These numbers shift, but the general cost differences usually stay the same.

| Provider | Model | Input Price (per 1M tokens) | Output Price (per 1M tokens) | Best For |
|---|---|---|---|---|
| OpenAI | GPT-4.1 | $2.00 | $8.00 | High-performance, general tasks |
| OpenAI | GPT-4.1 mini | $0.40 | $1.60 | Balanced performance and cost |
| OpenAI | GPT-4.1 nano | $0.10 | $0.40 | High-volume, low-latency tasks |
| Anthropic | Claude 4 Opus | $15.00 | $75.00 | Complex reasoning, coding |
| Anthropic | Claude 4 Sonnet | $3.00 | $15.00 | Balanced enterprise tasks |
| Anthropic | Claude 3 Haiku | $0.25 | $1.25 | Fast, high-volume tasks |
| Google | Gemini 2.5 Pro | $1.25 | $10.00 | Advanced multimodal, long context |
| Google | Gemini 2.5 Flash | $0.15 | $0.60 | Fast, cost-effective tasks |

Pricing is based on publicly available data at the time of writing.
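To see how token-based billing turns into a monthly number, here is a small estimator using the prices from the table above. It's a sketch under simple assumptions: every conversation uses the same number of input and output tokens, the dictionary keys are display names rather than official API model identifiers, and the traffic figures in the example are made up.

```python
# USD per 1M tokens as (input, output), copied from the table above.
# Keys are display names for this sketch, not official API model identifiers.
PRICES = {
    "GPT-4.1": (2.00, 8.00),
    "GPT-4.1 mini": (0.40, 1.60),
    "GPT-4.1 nano": (0.10, 0.40),
    "Claude 4 Opus": (15.00, 75.00),
    "Claude 4 Sonnet": (3.00, 15.00),
    "Claude 3 Haiku": (0.25, 1.25),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Gemini 2.5 Flash": (0.15, 0.60),
}


def monthly_cost(model: str, conversations: int, input_tokens: int, output_tokens: int) -> float:
    """Estimate a monthly bill in USD, assuming identical conversations."""
    input_price, output_price = PRICES[model]
    total_input = conversations * input_tokens
    total_output = conversations * output_tokens
    cost = (total_input * input_price + total_output * output_price) / 1_000_000
    return round(cost, 2)


if __name__ == "__main__":
    # Hypothetical month: 10,000 conversations, 1,500 tokens in, 500 tokens out.
    for name in PRICES:
        print(f"{name}: ${monthly_cost(name, 10_000, 1_500, 500):,.2f}")
```

With those made-up numbers, GPT-4.1 comes to about $70 and Claude 4 Opus to about $600 for the month. Double the conversation volume and the bill doubles too, which is exactly why a busy month can catch you off guard.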

Getting set up: The implementation challenge

When it comes to developer resources, OpenAI has a head start with a ton of documentation and community forums. If your company already runs on Google Cloud, then plugging into Gemini will feel natural. Anthropic is catching up fast with excellent, clear documentation for its API.

But here’s the reality check: for a support team that just wants to automate some of its work, none of these are simple. Integrating directly with an API means you need developers. You need people to write the code, manage the servers, tweak the prompts, and make sure nothing breaks. It’s a serious, ongoing investment of both time and money.

The smarter choice: Why you need a platform

After all that, you might be realizing that debating the fine points of the OpenAI API vs Anthropic API vs Gemini API is asking the wrong question. The real challenge isn't just picking a model; it's turning that model into a tool that actually helps your business.

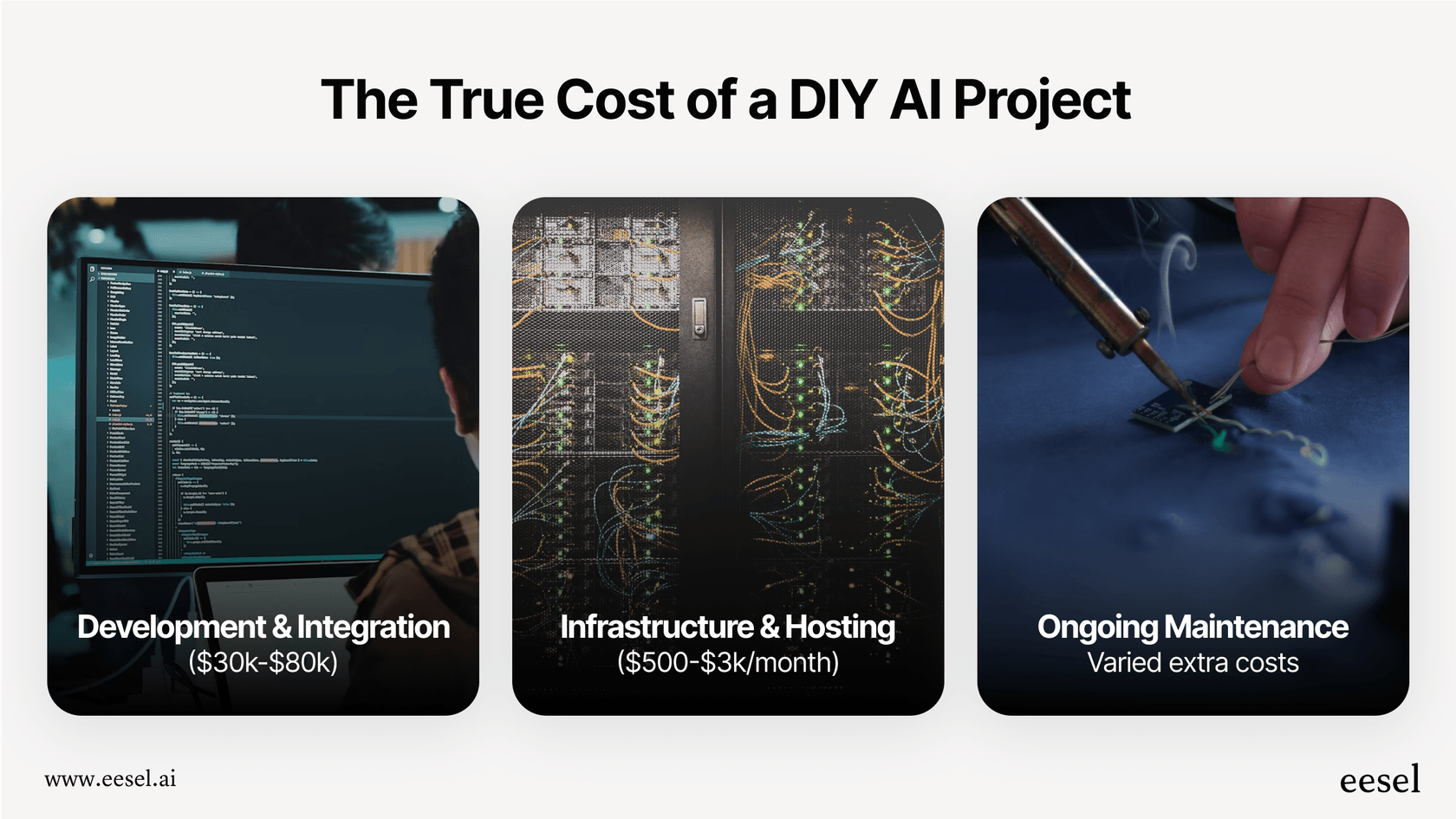

The hidden costs of building it yourself

The DIY approach sounds great in theory, but it’s packed with hidden work and risks:

- Time and people: Building a solid AI agent for your help desk isn't something you knock out in a weekend. It can easily take a few engineers months to build, test, and deploy something reliable.
- So much complexity: Suddenly, you're responsible for everything. Figuring out the right prompts, syncing knowledge from different places, building out workflows, designing an interface, and tracking results all land on your plate.
- A risky bet: How do you even know if your custom solution will work well? It's tough to test it on real-world scenarios before you set it live with your customers. You’re essentially building in the dark.
- Getting locked in: If you build your entire system around one API, you're stuck there. What happens when a competitor releases a model that's better or cheaper? Switching can be almost as much work as starting from scratch.

How eesel AI simplifies everything

This is the exact problem a platform like eesel AI was built to solve. It gives you the power of these AI models without the massive engineering headache.

- Get started in minutes, not months: You don't have to deal with long development projects. eesel AI has one-click integrations with help desks like Zendesk, Freshdesk, and Intercom. You can get everything set up on your own without needing to talk to a salesperson.
- Full control, no code required: You get the flexibility of a custom-built tool but through a simple interface. You can use the prompt editor to shape your AI's personality, build custom workflows, and connect to your tools with AI Actions, all without a developer.
- Test with confidence: This is a big one. The simulation mode in eesel AI lets you test your setup on thousands of your own past support tickets. You can see exactly how it would have replied, get a forecast of your automation rate, and adjust its performance before it ever talks to a customer. It takes all the guesswork out of the process.
- Predictable pricing: Instead of the wild ride of token-based billing, eesel AI has transparent plans. You know exactly what you’re paying each month, and there are no per-resolution fees that charge you more for being successful.

Focus on the solution, not the tool

While OpenAI, Anthropic, and Gemini all offer amazing technology, that’s what they are: technology. For most businesses, getting bogged down in a technical comparison of their APIs is a distraction from the actual goal.

If you want to resolve customer issues faster and take some of the pressure off your support team, the better question is how to do that with the least amount of time, risk, and cost. An AI platform handles the complexity of choosing and managing models so you can focus on what you're good at: designing great support workflows and taking care of your customers.

Ready to see how an AI platform can help your support team without the engineering overhead? Set up your first AI agent with eesel AI in minutes.

Frequently asked questions

The most crucial factor is not just raw model performance, but the practical implementation and reliability. Consider how easily you can integrate the API, manage ongoing development, and achieve consistent, accurate results for your specific business workflows and customer interactions.

All three platforms use a token-based pricing model, charging for both input and output, which can make monthly costs unpredictable. OpenAI offers generally competitive rates, Anthropic's Opus can be pricier for its advanced reasoning, and Google Gemini is competitive, especially with its cost-effective Flash model.

Directly implementing any of these APIs for complex business tasks, such as automating a customer support desk, requires significant engineering effort. A small team would face substantial challenges in writing code, managing prompts, handling errors, and integrating with existing systems effectively.

Google Gemini was designed for native multimodality, excelling at understanding images, video, and audio from the start. OpenAI's GPT-4o has also become very proficient in handling images and audio. Anthropic has historically focused more on advanced text-based reasoning, although its multimodal capabilities are improving.

While Gemini and newer OpenAI models claim very large context windows, their accuracy can sometimes degrade at the highest limits. Anthropic's Claude, with a 200,000-token window, offers remarkably consistent performance across its entire range, making it highly reliable for digesting long documents without losing focus.

A platform like eesel AI simplifies the entire process, abstracting away the complexities of direct API integration, prompt engineering, and infrastructure management. This significantly reduces development time, eliminates hidden costs, and allows businesses to deploy robust AI solutions quickly and with predictable pricing.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.