Trying to pick the right AI model for your business can feel like a full-time job. You've got OpenAI’s GPT-4 Turbo on one side and Meta’s Llama 3 on the other, and the technical specs can make your head spin.

For most of us, especially in customer support, the real question isn't about abstract benchmarks. It's about what will actually work, what won't break the bank, and what you can set up without hiring a team of developers. This guide is a straightforward comparison of GPT-4 Turbo vs Llama 3, focusing on what really counts for your business: how they perform in the real world, how much they actually cost, and where they can make a real difference.

What is GPT-4 Turbo?

Let's start with the one you've probably heard the most about: GPT-4 Turbo. This is the big one from OpenAI, the company that gave us ChatGPT. You can think of it as the high-end, premium engine in the AI space. It's a "proprietary" or "closed-source" model, which just means you can't peek under the hood to see how it works. But what you do see is its seriously impressive performance.

Here’s what you need to know about it:

- Top-tier performance: When it comes to complex reasoning, math problems, and understanding different languages, GPT-4 Turbo is often the model to beat. It’s particularly good at untangling tricky, nuanced issues.
- A huge memory: It has a massive context window (up to 128,000 tokens). In plain English, that’s like it being able to read and remember a 300-page book in one go. This lets it understand the full picture of long documents or complicated support threads without getting lost.
- Accessed via API: Businesses usually interact with GPT-4 Turbo through the OpenAI API. This means you need developers to integrate it into your existing tools. It’s the engine running behind the scenes of countless AI apps, but it doesn’t work on its own.
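To make the "tokens" and "API" ideas a bit more concrete, here is a minimal sketch of what a developer might do before sending a support thread to the model. It only builds the request body and never sends it. The four-characters-per-token figure is a rough rule of thumb for English text, not a real tokenizer, and the function names and system prompt are made up for illustration.

```python
import json

CONTEXT_WINDOW_TOKENS = 128_000  # the GPT-4 Turbo limit quoted above
CHARS_PER_TOKEN = 4  # assumption: rough average for English text, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will give different counts."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def build_chat_request(ticket_thread: str, model: str = "gpt-4-turbo") -> dict:
    """Build (but do not send) a chat-style request body for a support thread."""
    if estimate_tokens(ticket_thread) > CONTEXT_WINDOW_TOKENS:
        raise ValueError("Thread is probably too long for the context window")
    return {
        "model": model,
        "messages": [
            # Hypothetical instruction; you would tailor this to your own team.
            {"role": "system", "content": "Summarize this support thread for an agent."},
            {"role": "user", "content": ticket_thread},
        ],
    }


if __name__ == "__main__":
    thread = "Customer: My order arrived damaged. Agent: Sorry to hear that!"
    print(json.dumps(build_chat_request(thread), indent=2))
```

Everything after this step (authentication, sending the request, handling errors and retries) is the integration work that needs a developer.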

What is Llama 3?

On the other side of the ring is Llama 3 from Meta AI. This family of models is making waves because its weights are openly available (under Meta's own community license, which is why it's commonly described as open-source), and that’s a big deal. It gives businesses a lot more flexibility in how they use the model, including the option to run it on their own servers for greater privacy and control.

Here's what makes it stand out:

- Different sizes for different jobs: Llama 3 is available in a few different sizes, with the 8B and 70B parameter models being the most common. This is a great feature because you can choose a model that gives you the performance you need without paying for power you won't use. You don't always need the most expensive tool for the job.
- Very competitive performance: Don't let the "open-source" tag fool you. The Llama 3 70B model is a powerhouse. In many tests, it performs on par with, and sometimes even better than, previous versions of GPT-4. It's solid proof that open-source models can go toe-to-toe with proprietary ones.
- Designed for efficiency: Llama 3 was built to be powerful without being bloated. This makes it a popular choice for businesses and developers who want top-notch AI that is more affordable on a per-token basis. You generally access it through cloud providers or by hosting it yourself.

Performance and benchmarks: A head-to-head comparison

Alright, let's look at the numbers. While these tests don't tell the whole story, they give us a good baseline for how these two models "think" and where each one has a natural advantage.

General knowledge and reasoning

When it comes to broad, academic-style questions, GPT-4 Turbo usually has a small lead. On tests like MMLU (multiple-choice questions across 57 subjects, ranging from elementary to professional level), it comes out a few points ahead.

That said, Llama 3 70B is right on its heels. It often beats older GPT-4 models and is quickly closing the gap. For the kind of general knowledge questions your business will likely encounter, you probably wouldn't be able to tell the difference between them. Both are more than smart enough.

Coding and mathematical abilities

This is where the differences start to become a bit more obvious.

- Coding: On tests like HumanEval, which measures a model's ability to write code, both are very strong. GPT-4 Turbo tends to be a little better at solving complex, multi-step programming challenges. On the other hand, some developers have found that Llama 3 is fantastic at following very precise formatting instructions, which is a huge benefit when you need predictable code output.
- Math: GPT-4 Turbo is the clear winner on mathematical reasoning tests such as the MATH benchmark, where it scores well ahead of Llama 3 70B. That makes it the more dependable pick for tasks that require exact calculations and step-by-step logic. If numbers are a big part of your use case, GPT-4 Turbo is probably the more reliable choice.

A quick comparison of benchmarks

| Benchmark | Task | GPT-4 Turbo (Approx. Score) | Llama 3 70B (Approx. Score) | Key Takeaway |
|---|---|---|---|---|
| MMLU | General Knowledge | ~86% | ~82% | GPT-4 Turbo has a slight edge in broad, academic knowledge. |
| HumanEval | Code Generation | ~87% | ~82% | Both are very capable, but GPT-4 Turbo is often better for complex logic. |
| MATH | Math Problem Solving | ~73% | ~50-60% | GPT-4 Turbo is the clear winner for mathematical reasoning. |

Practical business applications and use cases

Okay, enough with the theory. How does this stuff actually help you get work done? An AI model on its own is just a brain in a jar; it’s only useful when you connect it to your specific business problems and give it the right information to work with.

Customer support automation

Both models are excellent for handling support tasks. They can summarize long ticket histories, help agents draft thoughtful replies, and answer frequently asked questions. GPT-4 Turbo's 128,000-token context window lets it take in a long, complicated conversation in one go. Llama 3 launched with a much smaller 8,192-token window, so very long threads may need to be trimmed or summarized first.

But here’s the thing they don't always tell you: these models know nothing about your business. An AI can't answer a question about your return policy if it’s never seen it. It has to be connected to your company’s knowledge to be effective.
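Here is a toy sketch of what "connecting the model to your company's knowledge" can look like: find the most relevant snippet for a question, then paste it into the prompt so the model has something to work from. The knowledge base, the function names, and the simple word-overlap scoring are all assumptions for illustration. Real systems typically use a proper search index or embeddings instead.

```python
import re

# Hypothetical knowledge-base snippets; in practice these would come from your help center.
KNOWLEDGE_BASE = {
    "returns": "Items can be returned within 30 days of delivery for a full refund.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "All products include a one-year limited warranty.",
}


def words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of simple word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 1) -> list[str]:
    """Return the top_k snippets that share the most words with the question."""
    question_words = words(question)
    ranked = sorted(
        KNOWLEDGE_BASE.values(),
        key=lambda snippet: len(question_words & words(snippet)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str) -> str:
    """Combine the retrieved company knowledge with the customer's question."""
    context = "\n".join(retrieve(question))
    return (
        "Answer using only the company information below.\n\n"
        f"Company information:\n{context}\n\n"
        f"Customer question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How many days do I have to get a refund?"))
```

The resulting prompt can go to either model. The point is that the answer about your return policy comes from your own content, not from the model's training data.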

Content creation and summarization

In this area, the two models have different strong suits. User feedback and various tests have shown that Llama 3 is particularly good at summarization. It can take a lengthy document and boil it down to a clear and accurate summary, which is great for things like internal reports or passing a ticket to another agent.

GPT-4 Turbo, however, tends to be better at generating longer-form, creative content. If you need help drafting a blog post, coming up with marketing ideas, or writing detailed product descriptions from a few notes, it's probably the better option.

Internal knowledge management

Either model can be used to build an internal Q&A bot for your employees. Just imagine your team asking about company policies, IT troubleshooting, or project updates and getting an immediate, correct answer.

The main hurdle isn't choosing the model; it's getting your team to use it. A new bot is useless if people have to open yet another app. The tool needs to live where your team already is. A platform like eesel AI's AI Internal Chat integrates right into Slack or Microsoft Teams. It learns from your internal documents and gives your team instant answers inside the chat tools they use all day.

Cost, accessibility, and customization

For most businesses, this is where the decision really gets made. The "best" model doesn't matter if it's too expensive, too difficult to set up, or too rigid to meet your needs.

The true cost of each model

- GPT-4 Turbo: You pay OpenAI for every bit of information you send and receive, based on "tokens." It’s the premium choice, and the costs can add up fast and be hard to predict, especially if you have a sudden spike in customer questions.
- Llama 3: Because it's open-source, Llama 3 is much cheaper on a per-token basis when you use it through a hosting service.

But the price per token is only part of the story. The total cost also includes the time your engineers spend on setup, integration, fixing bugs, and ongoing maintenance. This is why many businesses opt for a platform with simple, predictable pricing. For instance, eesel AI offers straightforward monthly plans that don't charge you per ticket resolved. Your bill stays the same even during your busiest months, giving you predictable costs without the hidden engineering headaches.
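To see why per-token bills can swing from month to month, here is a back-of-the-envelope sketch. The ticket volumes, token counts, and prices are placeholder numbers chosen for illustration, not quotes from any provider, so swap in your own figures and your provider's current rates.

```python
def monthly_token_cost(
    tickets: int,
    input_tokens_per_ticket: int,
    output_tokens_per_ticket: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate one month's model bill from ticket volume and per-token prices."""
    input_cost = tickets * input_tokens_per_ticket * input_price_per_million / 1_000_000
    output_cost = tickets * output_tokens_per_ticket * output_price_per_million / 1_000_000
    return input_cost + output_cost


if __name__ == "__main__":
    # Placeholder prices in USD per million tokens; check your provider's pricing page.
    input_price, output_price = 10.0, 30.0

    normal_month = monthly_token_cost(2_000, 1_500, 300, input_price, output_price)
    spike_month = monthly_token_cost(6_000, 1_500, 300, input_price, output_price)

    print(f"Normal month: ${normal_month:.2f}")
    print(f"Spike month:  ${spike_month:.2f}")
```

Because the bill scales directly with ticket volume, tripling your tickets triples the cost. And this only covers tokens; the engineering time mentioned above sits on top of it.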

Accessibility and ease of setup

Using either of these models directly requires a lot of technical skill. You need developers who can work with the OpenAI API or who can manage the complex hosting and deployment of Llama 3. That's a huge barrier for most support and IT teams who just want a solution that works.

In contrast, platforms like eesel AI are designed so that anyone can use them. You can connect your help desk, like Zendesk, with just a few clicks and get started in minutes, not months, all without writing a single line of code.

Customization: Fine-tuning vs. a workflow engine

Because Llama 3 is open-source, you can customize it deeply through a process called "fine-tuning." However, this is a very complex and expensive project that requires specialized AI experts and huge amounts of data. For the vast majority of businesses, it’s just not a realistic option.

A much more practical route is to use a platform that has a simple, no-code workflow engine. eesel AI gives you complete control from an easy-to-use dashboard. You can set your AI's personality and tone of voice, tell it which knowledge sources to use, and even create custom actions, like having it look up an order in Shopify or escalate a ticket, all without needing a developer.

The engine vs. the car

So, what's the bottom line here? The easiest way to think about the GPT-4 Turbo vs Llama 3 choice is to compare them to car engines.

- GPT-4 Turbo is the premium, high-performance engine from a luxury brand. It's incredibly powerful and reliable, but it comes with a higher price tag and requires expert mechanics (your developers) to install and maintain it.
- Llama 3 is like a versatile, highly efficient engine from a top-tier supplier. It’s much more affordable and offers amazing flexibility, but you still have to build the rest of the car around it yourself.

For most businesses, debating which engine is slightly better misses the point. The real value is in the complete vehicle: a platform that’s easy to get started with, connects to all your tools, is safe to test, and delivers results from day one.

Put the world's best AI to work in minutes

Instead of getting bogged down in a complex and expensive build-it-yourself project, you can launch a powerful, secure, and fully customized AI agent with eesel AI.

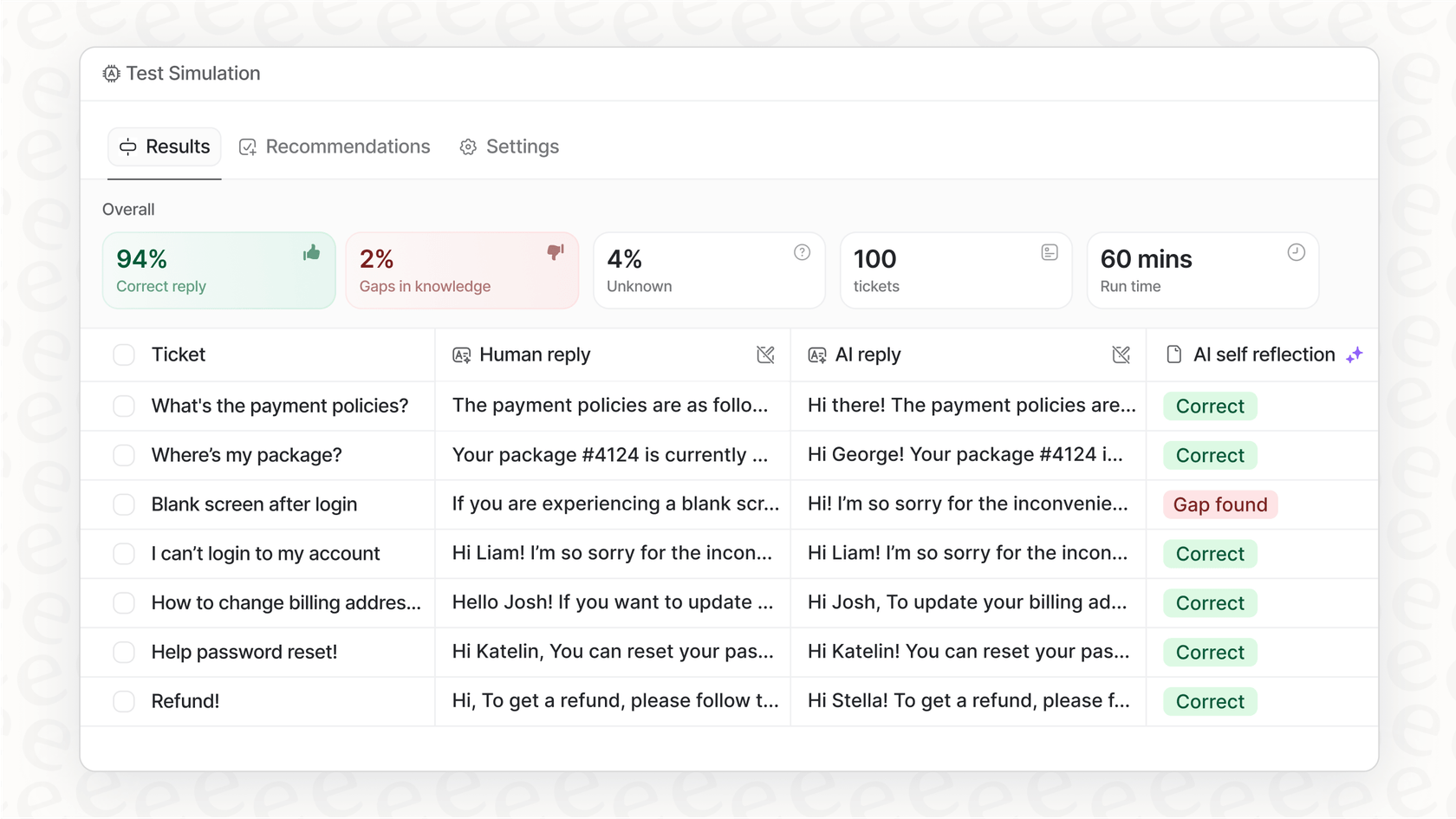

eesel AI is designed to use the best models like GPT-4, but it handles all the complicated parts for you. It connects to your tools, learns from your past support conversations to match your brand's voice, and even gives you a simulation mode so you can test everything with confidence before your customers see it.

Ready to see how simple powerful AI can be? Start your free trial of eesel AI today.

Frequently asked questions

GPT-4 Turbo is a premium, per-token model from OpenAI, leading to potentially higher and less predictable costs. Llama 3 is generally more affordable on a per-token basis, but the overall cost must factor in engineering effort for integration and maintenance. Platforms like eesel AI can offer predictable monthly pricing, eliminating per-token charges and hidden engineering costs.

GPT-4 Turbo typically shows a slight lead in broad, academic benchmarks like MMLU due to its extensive training. However, Llama 3 70B performs very competitively and is often indistinguishable from GPT-4 Turbo for the general knowledge tasks most businesses encounter. Both models are highly capable for everyday reasoning.

GPT-4 Turbo generally excels in complex mathematical reasoning and multi-step coding challenges, making it a reliable choice for number-heavy use cases. Llama 3 is also strong in coding and is particularly noted by some developers for its ability to follow precise formatting instructions for predictable code output.

The open-source nature of Llama 3 offers businesses greater flexibility, including the option to run it on their own servers for enhanced privacy and control. GPT-4 Turbo, being proprietary, provides top-tier performance through an API but limits direct insight or modification of its internal workings.

Using either GPT-4 Turbo via its API or deploying Llama 3 directly requires significant technical expertise and developer resources for setup, integration, and ongoing maintenance. In contrast, platforms like eesel AI simplify this process dramatically, allowing businesses to integrate AI solutions in minutes without writing code.

While Llama 3 offers deep customization through fine-tuning due to its open-source nature, this is a highly complex and resource-intensive undertaking for most businesses. A more practical approach is to use a platform with a no-code workflow engine, like eesel AI, which allows you to easily set the AI's personality, tone, and specific actions from a user-friendly dashboard.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.