Let's be honest: the buzz around generative AI is everywhere. But for most companies, that excitement comes to a screeching halt when it's time to actually build something. Taking a cool AI prototype and turning it into a real application that customers can rely on is a huge leap. Suddenly you’re worried about performance, reliability, and costs that can balloon without warning.

Building and scaling an AI app isn't just tricky; it requires a specialized foundation to work properly. This is the exact problem Fireworks AI was created to solve. It’s a platform designed to handle all the heavy lifting on the infrastructure side, so developers can stop wrestling with servers and focus on creating great AI products.

In this post, we’ll walk you through everything you need to know about Fireworks AI. We'll break down its core features, make sense of its pricing, and talk about who it’s really for. We’ll also get into its limitations and explore why, for some teams, a completely different type of AI tool might be a much better fit.

What is Fireworks AI?

At its heart, Fireworks AI is a platform for building and running generative AI applications on a massive library of open-source models. Think of it as the high-performance engine for an AI app. It handles all the complicated infrastructure behind the scenes so developers can focus on what their app actually does.

The company's background tells you a lot about their technical chops. It was founded by some of the original innovators from Meta AI who helped create PyTorch, one of the most popular open-source AI frameworks in the world. According to a post on the Google Cloud blog, the founders knew companies would struggle with deploying AI at scale and saw a chance to help.

Fireworks AI’s main pitch is to deliver "the fastest and most efficient gen AI inference engine to date." In plain English, they provide the raw speed and power needed to run complex AI models without making your users wait. It's important to remember that Fireworks AI is an infrastructure layer, not a finished product. Developers use its API to power their own tools, whether that’s a customer support bot, a coding assistant, or a company-wide search engine.

Key features of Fireworks AI

The Fireworks AI platform is built on three main ideas that support the entire journey of an AI model, from a simple experiment to serving millions of people.

A blazing-fast inference engine

So, what exactly is an "inference engine"? It’s the system that takes a trained AI model and runs it to generate a response, like answering a question or creating an image. The speed of that engine is everything for a real-time app. Nobody wants to use a chatbot that takes ten seconds to reply.

Fireworks AI has built its name on being fast (low latency) and being able to handle a ton of requests at once (high throughput). They process over 140 billion tokens every day and maintain a 99.99% API uptime, which means their customers' apps are consistently responsive and dependable. They pull this off with highly optimized software running on the latest hardware, like top-of-the-line NVIDIA GPUs.
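
To put those two numbers in perspective, here is a quick back-of-the-envelope calculation. It only uses the figures quoted above; the averaging over a full day and a 365-day year is our own simplification, not something Fireworks AI publishes.

```python
# Back-of-the-envelope arithmetic on the two figures quoted above.
TOKENS_PER_DAY = 140_000_000_000  # "over 140 billion tokens every day"
UPTIME = 0.9999                   # "99.99% API uptime"

SECONDS_PER_DAY = 24 * 60 * 60
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year

# Average rate only; real traffic will have peaks well above this.
tokens_per_second = TOKENS_PER_DAY / SECONDS_PER_DAY

# Downtime that a 99.99% uptime figure still leaves room for.
downtime_minutes_per_year = (1 - UPTIME) * MINUTES_PER_YEAR

print(f"{tokens_per_second:,.0f} tokens per second on average")
print(f"{downtime_minutes_per_year:.1f} minutes of downtime per year")
```

In other words, the quoted volume works out to roughly 1.6 million tokens per second on average, and 99.99% uptime leaves under an hour of downtime per year.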

A huge library of open-source models

One of the best things about Fireworks AI is its commitment to the open-source community. Instead of locking you into their own proprietary model, they give you instant access to a massive library of popular open-source models. This gives developers the freedom to pick the perfect model for their project, tweak it, and avoid getting stuck with a single vendor.

You can find well-known models like Meta's Llama 3, Google's Gemma, Mixtral 8x22B, and Qwen, all ready to use with a simple API call. This saves you the massive headache of downloading, configuring, and managing the powerful GPUs needed to run these models yourself.
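
To give a feel for what a "simple API call" might look like, here is a minimal sketch using only Python's standard library. The endpoint URL, the model ID, and the `FIREWORKS_API_KEY` environment variable name are our assumptions for illustration, not details taken from this article, so check the official documentation before relying on any of them.

```python
import json
import os
import urllib.request

# Assumptions (not from the article): the endpoint URL, the model ID, and the
# FIREWORKS_API_KEY variable name are placeholders to verify in the docs.
API_URL = "https://api.fireworks.ai/inference/v1/chat/completions"
MODEL_ID = "accounts/fireworks/models/llama-v3-8b-instruct"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 200,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the text of the first reply."""
    request = build_chat_request(prompt, os.environ["FIREWORKS_API_KEY"])
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Summarize our refund policy in two sentences.")` would send one request and return the model's reply, assuming the key is set and the response follows the chat-completion shape sketched here. Swapping models would then be a matter of changing `MODEL_ID`.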

Complete AI model lifecycle management

Fireworks AI gives you tools for every step of the building process:

- Build: Developers can go from an idea to a functioning model in just a few minutes. The platform sets up all the GPU infrastructure, so you can start tinkering with prompts and getting results right away.
- Tune: You can use a process called fine-tuning to train a base model on your own data. This helps the AI learn your company's specific lingo, brand voice, or internal knowledge, which leads to much better, more relevant answers.
- Scale: As your app gets more popular, Fireworks AI handles the scaling for you. It doesn't matter if you have ten users or ten million; the infrastructure automatically adds more resources to handle traffic spikes without you having to do a thing.

Fireworks AI pricing explained

Fireworks AI has a pretty flexible, usage-based pricing model that works for everyone from a solo developer messing around on a weekend to a big company with serious AI needs. Their pricing, which we’ve pulled from their official pricing page, is split into three main types.

Serverless inference pricing

This is a straightforward pay-as-you-go plan where you’re charged per "token" (a piece of text, roughly four characters long) that the AI processes. It's a great way to get started, build prototypes, or run apps with unpredictable traffic because you only pay for exactly what you use.

Here’s a quick look at their serverless prices for some popular models:

| Base Model Category | Price per 1M Tokens |
|---|---|
| Less than 4B parameters | $0.10 |
| 4B - 16B parameters | $0.20 |
| More than 16B parameters | $0.90 |
| MoE 56.1B - 176B parameters | $1.20 |
| Meta Llama 3.1 405B | $3.00 |
| Kimi K2 Instruct | $0.60 input, $2.50 output |

It's good to know that for some of the fancier models, the price for input tokens (what you send to the AI) might be different from the price for output tokens (what the AI sends back).
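
If you want to sanity-check a budget, the per-token math is easy to script. The sketch below uses the serverless prices listed in this section; the dictionary keys are made-up labels, and we assume that rows with a single price charge the same rate for input and output tokens. The token estimate relies on the rough "four characters per token" rule of thumb, so treat the results as ballpark figures.

```python
from decimal import Decimal

MILLION = Decimal(1_000_000)

# (input price, output price) in USD per 1M tokens, from the serverless table.
# Keys are hypothetical labels for this sketch, not official identifiers.
# Assumption: single-price rows use the same rate for input and output.
SERVERLESS_PRICES = {
    "under_4b": (Decimal("0.10"), Decimal("0.10")),
    "4b_to_16b": (Decimal("0.20"), Decimal("0.20")),
    "over_16b": (Decimal("0.90"), Decimal("0.90")),
    "moe_56b_to_176b": (Decimal("1.20"), Decimal("1.20")),
    "llama_3_1_405b": (Decimal("3.00"), Decimal("3.00")),
    "kimi_k2_instruct": (Decimal("0.60"), Decimal("2.50")),
}


def estimate_tokens(text: str) -> int:
    """Rough token count using the ~4 characters per token rule of thumb."""
    return -(-len(text) // 4)  # ceiling division


def serverless_cost(category: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimated cost in USD for the given token counts."""
    input_price, output_price = SERVERLESS_PRICES[category]
    return (input_tokens * input_price + output_tokens * output_price) / MILLION


# Example: 2M input tokens and 500K output tokens on Kimi K2 Instruct.
cost = serverless_cost("kimi_k2_instruct", input_tokens=2_000_000, output_tokens=500_000)
print(f"${cost:.2f}")  # prints $2.45
```

Using `Decimal` instead of floats keeps the currency math exact, which matters once you start summing many small per-request charges.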

Fine-tuning pricing

This pricing is for when you want to customize a model with your own data. You're charged based on the number of tokens used during the training job. After your model is fine-tuned, you pay the same rate to run it as you would for the original base model.

| Base Model Size | Price per 1M Training Tokens |
|---|---|
| Up to 16B parameters | $0.50 |
| 16.1B - 80B parameters | $3.00 |
| >300B parameters | $10.00 |
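
Estimating a fine-tuning bill is a tier lookup followed by a multiplication. Here is a hedged sketch based on the three tiers above. Two things are our assumptions: the function names are hypothetical, and we treat "tokens used during the training job" as dataset size times the number of epochs. The table lists no price between 80B and 300B parameters, so the code refuses to guess for that range.

```python
from decimal import Decimal


def training_price_per_million(params_billions: float) -> Decimal:
    """Look up the USD price per 1M training tokens for a base model size."""
    if params_billions <= 16:
        return Decimal("0.50")
    if params_billions <= 80:
        return Decimal("3.00")
    if params_billions > 300:
        return Decimal("10.00")
    # The published table has no row for this range, so we do not guess.
    raise ValueError("No listed price for models between 80B and 300B parameters")


def fine_tuning_cost(params_billions: float, training_tokens: int) -> Decimal:
    """Estimated USD cost of one training job."""
    return training_tokens * training_price_per_million(params_billions) / 1_000_000


# Example: an 8B model, a 5M-token dataset, 3 epochs.
# Assumption: billed tokens = dataset tokens x epochs.
dataset_tokens = 5_000_000
epochs = 3
job_cost = fine_tuning_cost(8, dataset_tokens * epochs)
print(f"${job_cost:.2f}")  # prints $7.50
```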

On-demand pricing

For apps that need consistent, heavy-duty performance, the on-demand option is usually the most economical. Instead of paying per token, you pay by the second for dedicated access to a GPU. This is perfect for high-volume applications because it typically gives you better performance and lower costs than the serverless plan.

| GPU Type | Price per Hour (billed per second) |
|---|---|
| A100 80 GB GPU | $2.90 |
| H100 80 GB GPU | $4.00 |
| H200 141 GB GPU | $6.00 |
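
A useful way to compare on-demand with serverless is to ask how many tokens per hour you would need to process before a dedicated GPU becomes the cheaper option. The sketch below works that out from the prices in this post. It is a simplification under one big assumption: that a single GPU can actually sustain the break-even throughput for your model, which depends on the model and workload and is not something this article states.

```python
from decimal import Decimal

# USD per hour, from the on-demand table. Keys are shorthand labels for this sketch.
GPU_PRICE_PER_HOUR = {
    "A100": Decimal("2.90"),
    "H100": Decimal("4.00"),
    "H200": Decimal("6.00"),
}


def on_demand_cost(gpu: str, seconds: int) -> Decimal:
    """Cost in USD of holding one GPU for the given number of seconds."""
    return GPU_PRICE_PER_HOUR[gpu] * seconds / 3600


def break_even_tokens_per_hour(gpu: str, serverless_price_per_million: Decimal) -> Decimal:
    """Hourly token volume at which serverless spend equals one GPU-hour."""
    return GPU_PRICE_PER_HOUR[gpu] / serverless_price_per_million * 1_000_000


# Per-second billing: 90 seconds on an H100.
print(f"${on_demand_cost('H100', 90):.2f}")  # prints $0.10

# H100 versus the $0.90 per 1M tokens serverless tier.
tokens = break_even_tokens_per_hour("H100", Decimal("0.90"))
print(f"{int(tokens):,} tokens per hour")  # prints 4,444,444 tokens per hour
```

Below that volume, serverless is the cheaper choice on paper; above it, the dedicated GPU starts to pay for itself, provided it can keep up.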

Who is Fireworks AI for? (And who it isn't for)

Fireworks AI is a seriously powerful platform, but its intense focus on infrastructure means it's designed for a very specific crowd. Knowing whether you're in that group is the key to deciding if it's the right choice for you.

The ideal user: AI developers and enterprises

The intended user for Fireworks AI is pretty clear: AI-focused startups, big companies with dedicated machine learning teams, and any developer building a custom AI application from scratch.

Their platform is made to power things like:

- Advanced chatbots and customer support AI
- Code generation and developer assistance tools
- Sophisticated internal search and document summarization
- Complex, multi-step AI agents

These users need the fine-grained control, raw performance, and scalability that a platform like Fireworks AI offers. They are comfortable working with APIs, fine-tuning models, and plugging a powerful AI engine into a larger, custom-built product.

The key limitation: Not a ready-made tool for business teams

This is probably the most important thing to grasp: Fireworks AI is an engine, not a finished car. It gives you all the horsepower you could ever want, but you have to build the rest of the vehicle yourself.

This means you need a lot of technical expertise, development time, and budget for ongoing maintenance. A customer support manager or an IT lead can't just flip a switch and have Fireworks AI solve their problems. It’s simply not built for that. It’s a developer platform, not a business application.

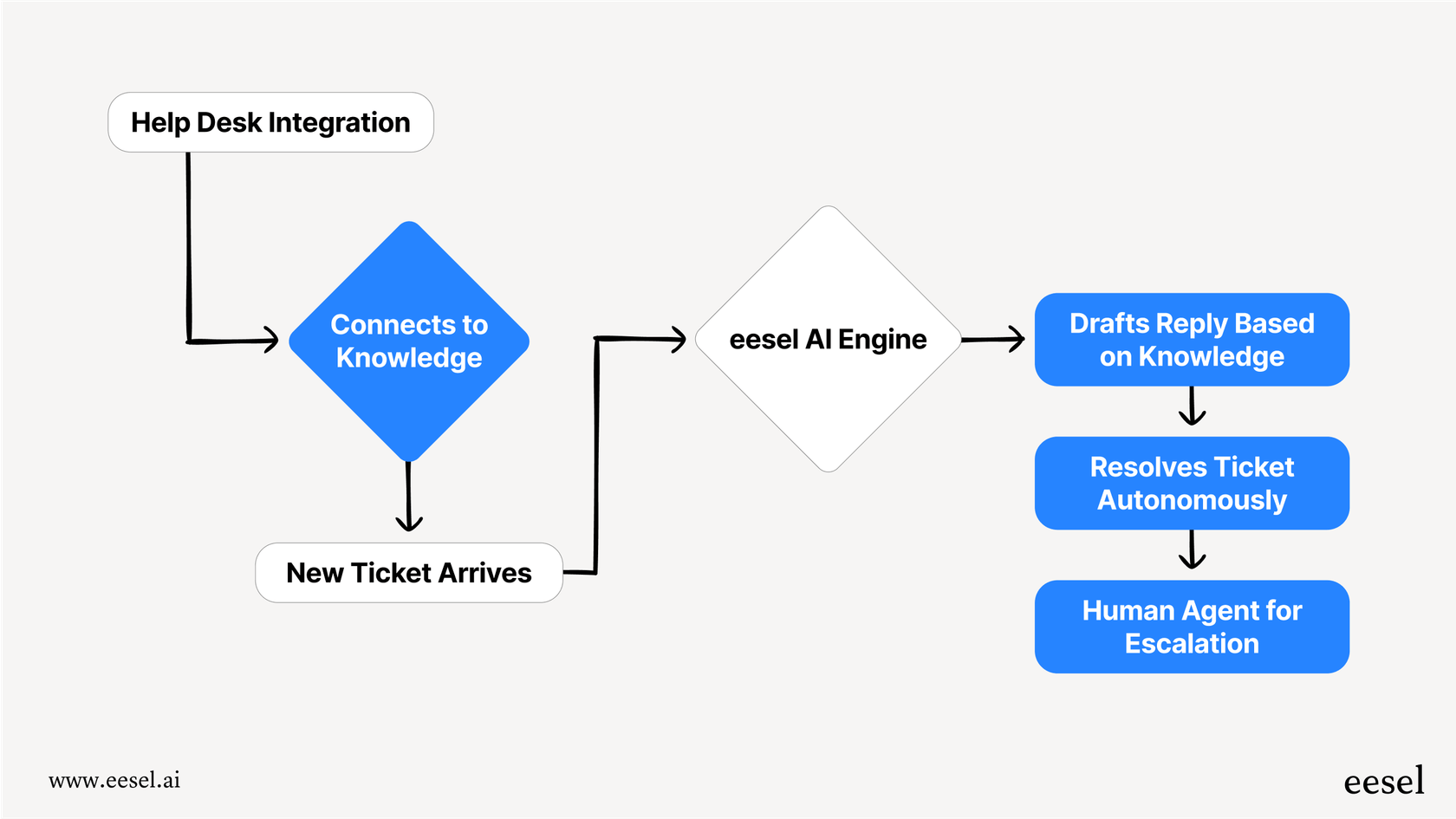

For business teams that need an AI solution right now, without hiring a squad of engineers, a ready-to-use platform like eesel AI is a much more direct route. These tools are built for a different job entirely: solving specific business problems right out of the box.

Here’s where a tool like eesel AI stands apart:

- Go live in minutes, not months. eesel AI is completely self-serve and has one-click integrations with the tools your team already relies on, like Zendesk, Freshdesk, and Slack. You can have a working AI agent handling real customer questions in the time it takes to read through API documentation.
- No need to rip and replace. Instead of forcing you to build something new, eesel AI plugs directly into your current help desk and workflows. It just makes the tools your team already knows how to use smarter.
- Designed for business users. eesel AI is made specifically for customer service and internal support. It automatically learns from your past support tickets, help center articles, and knowledge bases like Confluence or Google Docs to give accurate, relevant answers from day one.

The whole process is different. With Fireworks AI, a developer builds a custom app using an API. With eesel AI, a support manager uses a simple dashboard to connect their tools and turn on the AI.

Our final verdict on Fireworks AI

Fireworks AI is, without question, a top-tier AI infrastructure platform. It absolutely delivers on its promise of speed, scalability, and flexibility. For developers and companies building custom AI products, it’s a fantastic choice.


If you have an engineering team and a vision for a unique AI-powered tool, Fireworks AI gives you the best-in-class engine to make it happen. Its performance and model library offer everything you need to build something special.

However, it’s not a plug-and-play solution for business departments like customer support or IT. The complexity and expertise required make it the wrong tool for teams who just want to automate their work. You wouldn't buy a jet engine just to drive to the grocery store.

The takeaway is this: choose Fireworks AI if you’re building the future of AI. But for solving your business problems with AI today, look for a tool built for your specific workflow.

Get started with AI for customer support today

Picking the right AI tool really just depends on your goal. If you're building foundational AI products that will shape the market for years to come, an engine like Fireworks AI is a must-have.

But if your goal is to automate customer support, help out your agents, and give your customers a better experience right now, then you need a purpose-built solution. For that, eesel AI is designed from the ground up to give you results without writing a single line of code.

You can use its simulation mode to see exactly how many of your past tickets could have been solved automatically. You can connect all your scattered knowledge sources in a few clicks. And you can go live with a fully functional AI agent that works inside your existing help desk in minutes, not months.

Frequently asked questions

What is Fireworks AI, and what problem does it solve?

Fireworks AI is a high-performance infrastructure platform designed for building and running generative AI applications. It tackles the complexities of deploying and scaling AI models, allowing developers to focus on creating the application logic rather than managing servers.

Which models can you run on Fireworks AI?

Fireworks AI provides access to a massive library of popular open-source models, including Meta's Llama 3, Google's Gemma, Mixtral 8x22B, and Qwen. This commitment to open source gives developers flexibility in choosing and customizing models for their projects.

How does Fireworks AI achieve its speed?

Fireworks AI uses a blazing-fast inference engine built on highly optimized software and top-of-the-line NVIDIA GPUs. This architecture is designed for low latency and high throughput, enabling real-time responses and handling billions of tokens daily.

Is Fireworks AI a good fit for non-technical business teams?

No, Fireworks AI is primarily an infrastructure layer designed for AI developers and enterprise machine learning teams. It requires technical expertise to integrate via APIs and build custom applications, making it unsuitable for non-technical business users seeking out-of-the-box solutions.

How does Fireworks AI pricing work?

Fireworks AI offers flexible, usage-based pricing across three main models: serverless inference (pay-per-token), fine-tuning (pay-per-training-token), and on-demand (pay-per-second for dedicated GPU access). The best option depends on your usage volume and specific needs.

What are the main benefits of Fireworks AI for developers?

Developers benefit from significantly faster deployment, instant access to a vast open-source model library, and automatic scaling capabilities. Fireworks AI abstracts away the complexities of GPU management and infrastructure, letting teams focus purely on AI innovation.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.