Voice-powered everything seems to be the new normal, from meeting assistants that jot down notes for you to voice commands that handle your grocery list. But the tech behind it all can feel a bit like alphabet soup. You’ll often hear about OpenAI's Whisper and Text-to-Speech (TTS) APIs, and while they sound related, they actually do opposite jobs.

Figuring out the difference is pretty important for anyone looking to build apps with voice features or just make their business run a little smoother with AI. This guide will break down exactly what Whisper and TTS APIs do, how they’re different, how they play together, and help you figure out which one you actually need.

The core of Whisper vs TTS API: Speech-to-text vs text-to-speech

Before we get into a head-to-head comparison, let's get the basics down. These two technologies are really just two sides of the same coin: one is for listening, and the other is for talking.

What is OpenAI Whisper?

OpenAI Whisper is what’s known as a speech-to-text model. Its whole job is to take spoken audio and turn it into written text. Simple as that.

It was trained on a massive 680,000 hours of audio from all over the web, which is why it’s so good at understanding different accents, filtering out background noise, and even catching technical jargon. It can transcribe 98 different languages and even translate many of them into English. You can get it as an open-source model to run on your own hardware or use the paid API, which is much easier to plug into your projects.

Basically, Whisper is the "ears" of an AI system.

What is a TTS API?

A Text-to-Speech (TTS) API does the complete opposite. It takes written text and turns it into spoken audio. OpenAI has its own TTS API that can create very human-sounding voices from a block of text. These systems are designed to sound natural, with the right kind of rhythm and tone you'd expect from a person.

You can think of a TTS API as the "voice" of an AI system. It’s the technology that lets your GPS give you turn-by-turn directions, your phone read an article out loud, or an AI assistant give you a verbal answer.

How Whisper and TTS APIs work together

Here’s the main thing people get wrong in the whole "Whisper vs TTS API" debate: you don't choose one over the other. They’re collaborators. You use them at different points in a process to create a full conversation loop.

Let's say you're building a voice assistant. Here's how the two would team up:

-

You speak: You ask a question, like, "What are your business hours?"

-

Whisper listens: The system captures the audio and Whisper transcribes it into a simple text string: "What are your business hours?"

-

The app thinks: Your application (or a large language model) gets that text, figures out what you want, and finds the answer. The answer is also text: "We are open from 9 AM to 5 PM, Monday to Friday."

-

The TTS API talks: Finally, the TTS API takes that text response and turns it into an audio file of a synthesized voice speaking the words, so you can hear the answer.

As you can see, they work in sequence. They aren’t interchangeable. Audio becomes text, the text is processed, and then the text response becomes audio again.

The real challenge isn't picking between them, but getting them integrated smoothly and building all the logic in the middle. This usually takes a lot of developer time, ongoing maintenance, and you have to be careful about errors or "hallucinations" where the AI understands something or just makes up a wrong answer.

Picking the right tool for the job

When you're looking to build something with voice, you need to look at a few key things. While Whisper is a top-notch choice for speech-to-text, there are other players like Deepgram, Google, and Amazon out there.

Here are the factors you'll want to think about:

-

Accuracy: How well does the model actually understand what’s being said? This is often measured by "Word Error Rate" (WER), and a lower score is better. Whisper is known for being very accurate, but nothing is 100% perfect. A few wrong words can completely confuse your application.

-

Speed: How fast do you get a response? For a real-time conversation, you need very low latency. But if you’re just transcribing a long meeting recording after the fact, speed isn’t as critical.

-

Cost: API pricing is usually calculated per minute of audio you process. But don't forget the "hidden costs." If you go the open-source route with Whisper, you'll need powerful servers, someone to maintain them, and developer hours, which can add up fast.

-

Extra features: Do you need things like identifying different speakers, real-time transcription, or a custom vocabulary for your industry's lingo? The basic APIs might not have these.

This is where building from scratch can really become a headache. For a lot of businesses, especially in customer support, the main goal is just to answer customer questions faster. A custom voice bot is one way to do that, but it's a huge, long-term project.

A more direct route is often to use a platform that solves the actual business problem without the steep learning curve. For example, a tool like eesel AI is built to automate support by connecting directly to the helpdesk and knowledge bases you already use. It sidesteps the whole STT/TTS pipeline mess by focusing on text, where most support tickets are anyway. This lets you get up and running in minutes, not months.

| Feature | Building with Raw APIs (Whisper/TTS) | Using a Platform like eesel AI |

|---|---|---|

| Setup Time | Weeks to months of development | Minutes, with one-click integrations |

| Knowledge Source | Requires custom coding for each source | Instantly connects to helpdesks, docs, etc. |

| Maintenance | Ongoing developer time and server costs | Managed by the platform |

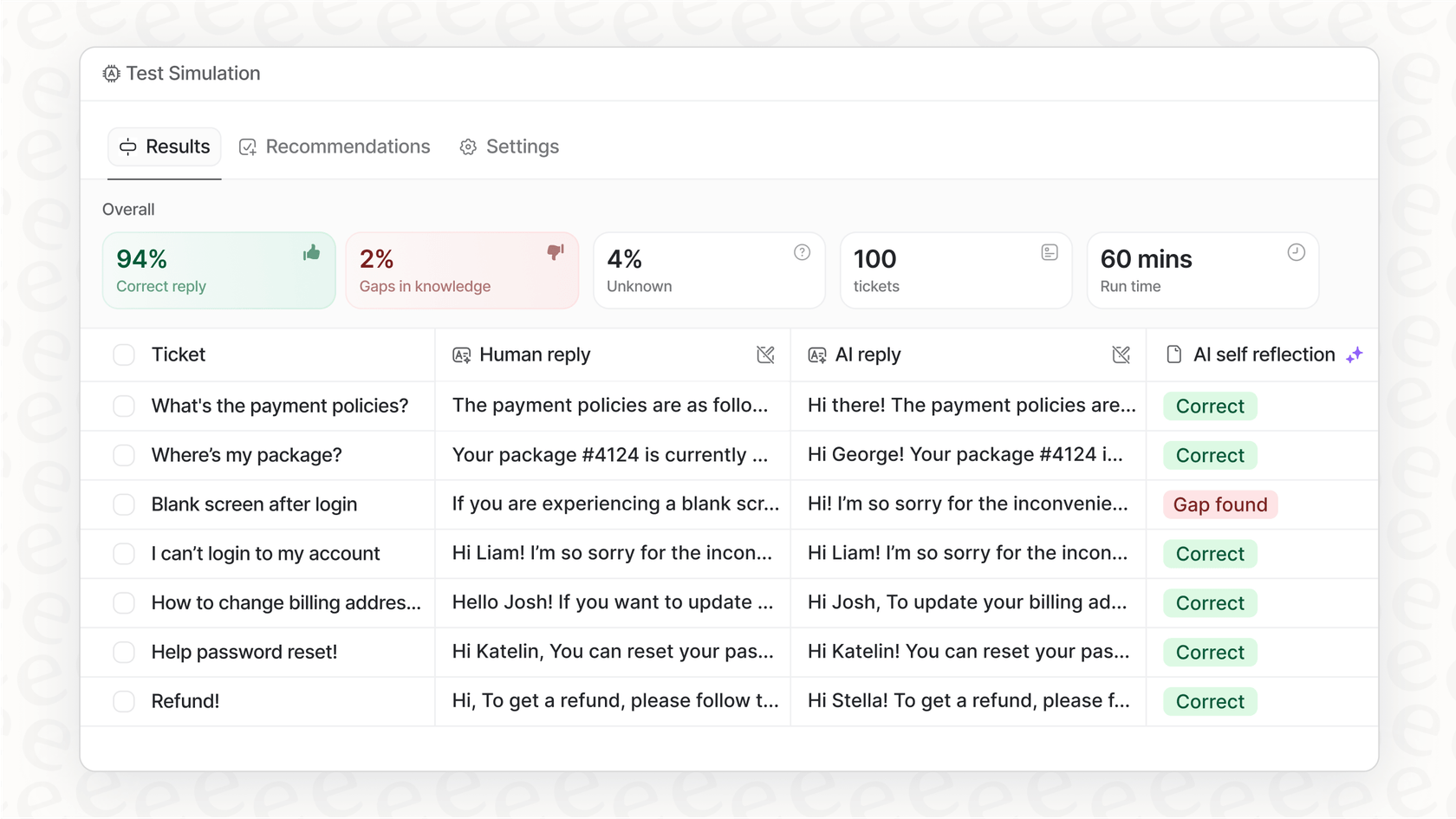

| Testing | Difficult to simulate real-world performance | Robust simulation on historical tickets |

| Core Focus | Technical implementation of voice I/O | Solving the business problem (support automation) |

Real-world examples of Whisper and TTS APIs

Whisper and TTS APIs are the engines behind a lot of tools we're already using every day:

-

Meeting Transcription: Automatically creating a written summary of calls and meetings.

-

Video Captioning: Generating subtitles for videos to make them more accessible.

-

Voice Assistants: The brains behind smart speakers and in-app voice commands.

-

Customer Support Voicebots: Automated phone systems that can actually understand and respond to people.

But sometimes, focusing on a fancy voicebot means you miss a bigger, easier win. Most customer support still happens over email and live chat. Automating these text-based channels can give you a much faster return on your investment while solving the exact same problem: getting people quick, accurate answers.

Instead of building a complicated voice system from the ground up, a platform like eesel AI lets you use AI right where your team and customers already are.

- It instantly knows your stuff: eesel AI trains on your past support tickets, help center articles, and internal docs in places like Confluence or Google Docs. It picks up your brand's voice and solutions from day one, something that would take ages to build into a custom bot.

- You can test it safely: You definitely want to know an AI works before you let it talk to your customers. eesel AI has a simulation mode that lets you test it on thousands of your past tickets, so you can see exactly how well it will perform.

- You're always in control: You get to decide which questions the AI handles and when it’s time to pass a conversation to a human. This lets you roll it out gradually and keep your customer experience great.

OpenAI Whisper and TTS API pricing

If you do decide that using the APIs directly is the right move, it's good to know what it'll cost. OpenAI's pricing is all usage-based.

-

Whisper API Pricing: The Whisper API costs $0.006 per minute of audio, rounded up to the nearest second. So, transcribing a one-hour meeting would set you back about $0.36.

-

TTS API Pricing: The Text-to-Speech API is $0.015 per 1,000 characters for the standard model and $0.030 per 1,000 characters for the higher-quality HD model.

While those rates seem low, the costs can add up if you have a lot of traffic. More importantly, that pricing doesn't include the cost of the AI model calls to figure out the answers, or the developer and server costs to keep everything running.

Focus on the solution, not just the tech

So, there you have it. Whisper (speech-to-text) and TTS (text-to-speech) are powerful tools that work together to bring voice interfaces to life. Whisper listens, and TTS talks. They’re two parts of a whole, not competitors.

But building a business solution from these raw parts is a serious project. It takes a lot of technical skill, ongoing upkeep, and a big investment in development time.

For businesses just looking to improve their customer support, there's often a much more direct path. By automating the text-based conversations where your team already spends most of its time, you can get big results without the hassle of a custom voice system.

Platforms like eesel AI offer a ready-to-go solution that plugs into your existing tools, learns from your company's knowledge, and gives you the control you need to automate support the right way.

Ready to see what an AI support platform built for the job can do? Try eesel AI for free and you can be up and running in minutes.

Frequently asked questions

The fundamental difference is their directionality: Whisper converts spoken audio into written text (speech-to-text), acting as the system's "ears." Conversely, a TTS API transforms written text into spoken audio (text-to-speech), serving as the system's "voice."

They collaborate sequentially to create a conversational loop. Whisper first transcribes user speech into text, which an application then processes to formulate a text-based response. Finally, the TTS API converts this text response back into spoken audio for the user.

They are not competitors and serve opposite, complementary functions. You typically use both in conjunction for a full two-way voice interaction, with Whisper handling input and a TTS API handling output.

Key factors include accuracy (e.g., Word Error Rate), speed (latency for real-time applications), cost (API pricing plus hidden infrastructure and development expenses), and extra features like speaker identification or custom vocabularies.

Yes, you can use them independently depending on your goal. For instance, Whisper alone is perfect for transcribing meeting recordings, while a TTS API can be used by itself to read out articles. A full conversational voice assistant, however, requires both.

They power applications like meeting transcription, video captioning, interactive voice assistants (e.g., smart speakers), and automated customer support voicebots. They form the core of any system that needs to both understand and generate human-like speech.

Share this post

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.