For a while now, the idea of having an AI coding partner right inside a Jupyter notebook has felt like the holy grail for data scientists and developers. When tools like GitHub Copilot started making waves, it just made everyone want that same power inside their notebooks even more. The engine behind those early tools was OpenAI Codex, a model that promised to turn plain English into functioning code.

This guide will walk you through the story of Codex and its journey with Jupyter, look at the modern tools that have taken its place, and make the case for why the real wins in productivity come from looking beyond the notebook to an AI that understands your team’s entire knowledge base.

What was OpenAI Codex?

OpenAI Codex was an AI system that could take natural language prompts and spit out code. It was a spin-off of GPT-3, trained on a massive amount of text and billions of lines of public code. This is what powered the first version of GitHub Copilot.

Codex was pretty versatile, handling over a dozen languages like Python, JavaScript, and Ruby. It did more than just autocomplete; it could explain what a chunk of code did, translate code between languages, and even help refactor your work.

But here's a crucial piece of the puzzle: the original OpenAI Codex models were officially retired in March 2023. While the underlying tech has moved on and its successors are now baked into OpenAI's newer models, the specific Codex API that all the early integrations were built on is gone. That one change pretty much reshaped the entire landscape for AI tools in Jupyter.

The community's push for OpenAI Codex in Jupyter

Before any official tools came along, the developer community was already hard at work trying to get AI into their favorite notebook environment. Many data scientists and ML engineers live in Jupyter, and the iterative, cell-by-cell workflow is just how they think. Having to jump over to VS Code just to use Copilot felt like a "cumbersome" and clunky interruption.

Early community-built solutions

This frustration sparked a bunch of open-source projects. Tools like "gpt-jupyterlab" and "jupyterlab-codex" popped up, letting developers send the content of a notebook cell over to the OpenAI API. The setup was usually pretty simple: "pip install" a package, drop your OpenAI API key into the JupyterLab settings, and you'd get a new button to generate code. These tools were a great example of the community building what they needed.
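To make the "send the cell to the API" step concrete, here is a minimal sketch of the kind of request such an extension might assemble. It is not the actual code of "gpt-jupyterlab" or "jupyterlab-codex". The helper name `build_codegen_request`, the system prompt, and the placeholder key are hypothetical, and it targets OpenAI's Chat Completions endpoint rather than the retired Codex completion models.

```python
import json

# Assumption: the Chat Completions endpoint is used, because the original
# Codex models that early extensions called are no longer available.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_codegen_request(cell_source: str, api_key: str, model: str = "gpt-4o") -> dict:
    """Hypothetical helper: package one notebook cell as an API request.

    Returns the URL, headers, and JSON body, but does not send anything,
    so the sketch runs without network access or a real key.
    """
    messages = [
        # Illustrative system prompt; real extensions chose their own wording.
        {"role": "system", "content": "You are a coding assistant. Reply with Python code only."},
        # The only context the model receives is the text of this one cell.
        {"role": "user", "content": cell_source},
    ]
    return {
        "url": OPENAI_CHAT_URL,
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps({"model": model, "messages": messages}),
    }


# Example: a cell containing a comment that describes the desired code.
request = build_codegen_request(
    "# Load data.csv into a DataFrame and drop rows with missing values",
    api_key="sk-placeholder",  # placeholder, not a real key
)
```

Note that the request body holds nothing except the cell text, which is why these tools had no view of anything outside the notebook.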

Challenges and limitations of early integrations

As promising as they were, these early integrations had their share of headaches that made them tough to rely on long-term:

- The setup headaches: Users frequently hit roadblocks during installation, especially on remote servers. A lot of these plugins needed a full restart of the JupyterLab server to work, and figuring out why your configuration wasn't working could eat up a good chunk of your afternoon.
- Outdated models: Most of these tools were built specifically for the original Codex models. Once those were retired, the extensions basically stopped working unless they were completely overhauled to use newer (and differently priced) OpenAI models.
- The biggest blind spot: no context: The most fundamental problem was that these tools were working in a vacuum. They could see the code in one cell, but they had zero access to the project documentation in Confluence, the specs in Google Docs, or that one key clarification your manager posted in Slack. The AI could write code, but it didn't know why it was writing it.

The modern landscape

Things have definitely matured since the days of simple Codex plugins. The focus has moved from single-model hacks to more solid, flexible frameworks that can connect to a bunch of different AI providers.

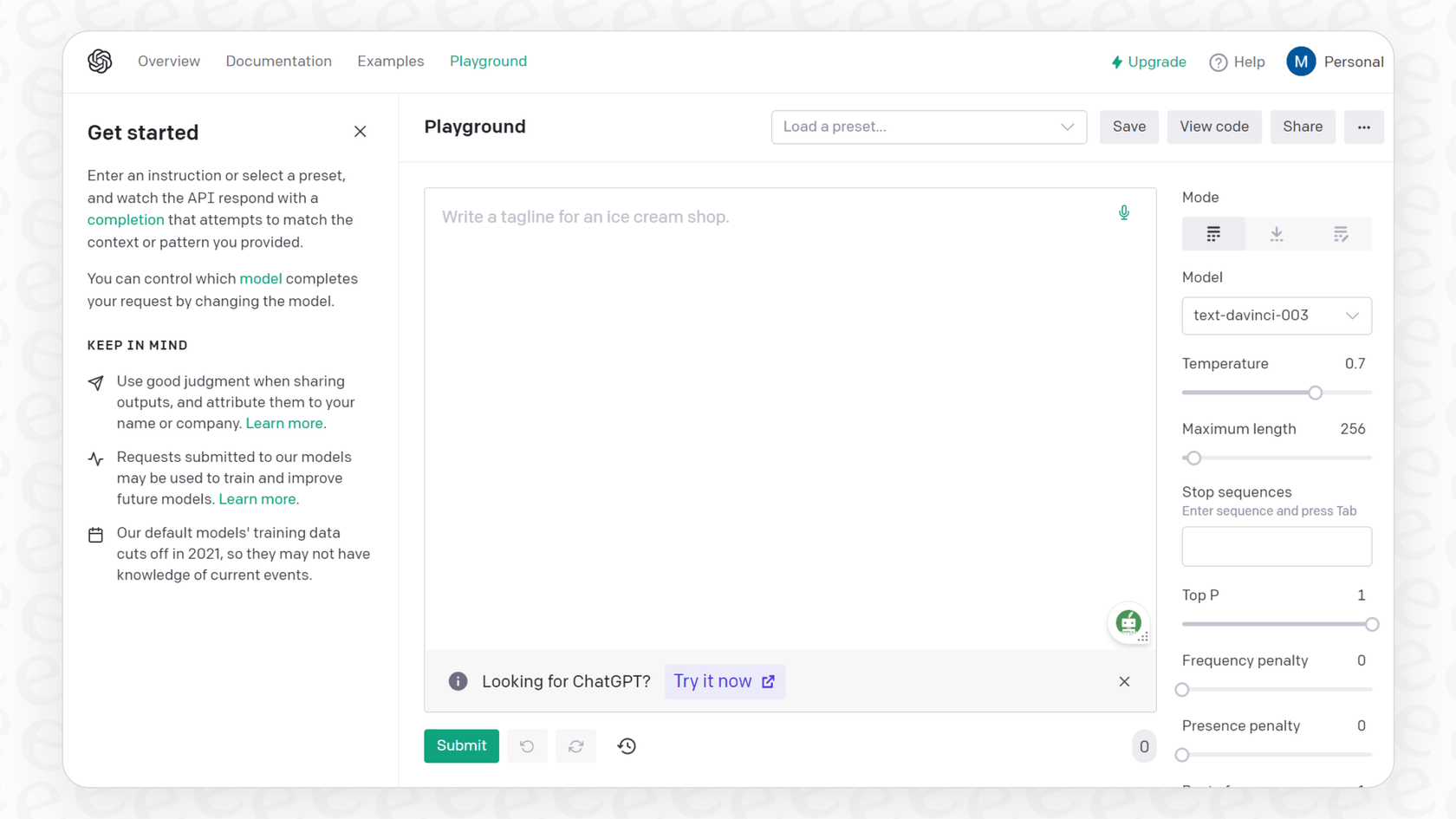

The rise of "jupyter-ai"

The main player today is "jupyter-ai", an official project from the Jupyter team. It puts generative AI right inside JupyterLab and Jupyter Notebooks. Instead of being locked into one old model, "jupyter-ai" is more of a universal adapter that connects to a whole range of modern models from providers like OpenAI, Anthropic, Cohere, and even open-source models from Hugging Face.

It comes with a built-in chat UI where you can ask questions about your code, plus handy "%%ai" "magic commands." These let you generate code, fix errors, or even create whole functions from a simple prompt inside a notebook cell.
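As a rough illustration of the magic commands, the two notebook cells below show one way they have been used. Treat this as a sketch under assumptions: the extension name `jupyter_ai_magics`, the `chatgpt` model alias, and the `-f code` format flag match the syntax of earlier "jupyter-ai" releases, and they may differ in the version you install. Running `%ai help` in a notebook shows what your version supports. The cells also assume an API key is already set in the kernel's environment (for OpenAI, `OPENAI_API_KEY`).

```text
# Cell 1: load the magics extension (once per kernel session)
%load_ext jupyter_ai_magics

# Cell 2: %%ai must be the first line of its own cell
%%ai chatgpt -f code
Write a Python function that returns the rolling mean of a pandas Series.
```

The first line of the second cell picks the model and output format; everything below it is the prompt.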

Pricing and setup considerations

While "jupyter-ai" is open-source, the powerful models it connects to aren't free. You'll need an API key from a provider like OpenAI or Anthropic, and you'll get billed for what you use, usually based on "tokens" (the bits of words the AI chews on). The days of free betas are over; getting access to models that are good enough for coding is a paid service now. For instance, OpenAI's latest models are available through their API or as part of plans like ChatGPT Team or Enterprise.

| Provider | Example Models | Best for |
|---|---|---|
| OpenAI | "gpt-4o", "gpt-3.5-turbo" | General purpose code generation, explanation |
| Anthropic | "claude-3-sonnet" | Complex reasoning, handling large contexts |
| Hugging Face | Various open-source models | Specialized tasks, running on own infrastructure |
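To see how token-based billing adds up, here is a small worked sketch. The prices are placeholders invented for the arithmetic, not any provider's real rates, and the names `TokenPrice` and `estimate_cost` are hypothetical. It assumes input and output tokens are priced separately, so check your provider's pricing page for the actual structure and numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Price in US dollars per one million tokens (placeholder values only)."""
    input_per_million: float
    output_per_million: float


def estimate_cost(prompt_tokens: int, completion_tokens: int, price: TokenPrice) -> float:
    """Estimate the cost of one request from its token counts."""
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        prompt_tokens * price.input_per_million
        + completion_tokens * price.output_per_million
    )
    return total / 1_000_000


# Hypothetical rates chosen to keep the arithmetic easy to follow.
EXAMPLE_PRICE = TokenPrice(input_per_million=2.0, output_per_million=8.0)

# One request: 1,500 tokens of prompt (cell plus instructions), 500 tokens of reply.
cost_per_request = estimate_cost(1500, 500, EXAMPLE_PRICE)

# A developer making 200 such requests in a day.
cost_per_day = 200 * cost_per_request
```

With these placeholder rates, a single request costs a fraction of a cent, but the total scales linearly with how often the team calls the model and how much notebook content goes into each prompt.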

This video from the creator of Jupyter AI demonstrates how the tool brings generative AI capabilities directly into the familiar notebook environment.

Beyond code completion: The limitations of in-notebook AI

Even with a slick tool like "jupyter-ai", there's still a big limitation: the AI is stuck inside the developer's coding environment. A good developer does more than just write code. They need to understand business goals, read technical specs, and talk with their team. An AI assistant that can't see any of that information is only solving half the problem.

Think about how much of a developer's day is spent switching contexts. You're deep in a notebook, then you have to stop, open a new tab, search Confluence for API docs, scroll through a Slack channel to find a decision someone made, or pull up a Google Doc to check a design. Every one of those little interruptions breaks your focus and slows you down.

Unifying your tools with an internal AI assistant

This is where we need to think bigger than just a coding assistant and start thinking about a knowledge-aware AI platform. While "jupyter-ai" brings a model into your notebook, a tool like eesel AI brings all of your company’s scattered knowledge to the AI.

With eesel AI's internal chat, you can set up an assistant that’s trained on your organization's own data. It connects directly with the tools your team already relies on, like Notion, SharePoint, and Microsoft Teams.

Imagine a developer is working in Jupyter and has a question about a data schema. Instead of dropping everything to go digging through documentation, they can just ask the eesel AI bot in Slack: "What are the required fields for the 'user_profiles' table?" The bot gives them an instant, accurate answer pulled straight from the team's official docs. That's what unifying your knowledge does: it cuts down on the constant context switching and lets developers get on with their work.

Getting started: Moving to a knowledge-aware workflow

The path from the first OpenAI Codex integrations with Jupyter to where we are today shows a clear pattern. We went from basic plugins to flexible in-notebook assistants. The next logical step is a fully connected, knowledge-aware AI workflow. The future of developer productivity isn't just about churning out code faster; it's about helping developers make better decisions with less hassle.

And you don't need a massive, months-long project to get there. With a platform designed to be simple, you can get going fast. For example, eesel AI is built to be radically self-serve, meaning you can go live in minutes, not months. You can connect your knowledge sources and roll out an internal AI assistant for your team without sitting through endless demos or custom implementation projects.

Build smarter, not just faster

AI-powered code completion in Jupyter is a fantastic tool that has changed how a lot of us work. But it really shines when the AI has the full picture: the context from your team’s documentation, conversations, and business goals. By connecting all of your internal knowledge, you can create an assistant that doesn't just write code, but helps your developers build the right code, faster.

Ready to give your developers an AI that actually understands your business? Try eesel AI to unify your knowledge and give your team's productivity a real boost.

Frequently asked questions

The original OpenAI Codex models, which powered early integrations, were officially retired in March 2023. While their underlying technology evolved into newer OpenAI models, the specific Codex API is no longer available, making direct "OpenAI Codex integrations with Jupyter" outdated for new projects.

The primary modern alternative is "jupyter-ai". This official Jupyter project acts as a universal adapter, connecting JupyterLab and Jupyter Notebooks to a range of current generative AI models from providers like OpenAI, Anthropic, and Hugging Face.

Early integrations often suffered from setup complexities, quickly became outdated when Codex models were retired, and critically, lacked access to broader project context like documentation or team discussions, operating in a knowledge vacuum.

Yes, while "jupyter-ai" itself is open-source, the powerful AI models it connects to typically require an API key from a provider (e.g., OpenAI, Anthropic) and incur usage-based costs, usually billed by "tokens."

To overcome context limitations, you need a knowledge-aware AI platform that unifies all your team's scattered information from tools like Confluence, Slack, and Google Docs. This allows the AI to provide answers and assistance based on your organization's entire knowledge base, extending beyond just the code in a notebook.

Moving to a knowledge-aware AI workflow significantly reduces context switching, helps developers make better decisions by providing instant access to organizational knowledge, and ultimately boosts overall productivity beyond just faster code generation.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.