Getting a beautiful design from Figma into functional, working code has always been one of those classic product team headaches. It’s a process filled with manual translation, endless back-and-forth between designers and developers, and that nagging feeling that things could be so much faster. The dream, of course, is that AI tools like OpenAI Codex could just look at a Figma file and spit out perfect code.

And to some extent, that dream is getting closer. But what does it actually take to make it happen?

This guide is a practical, no-fluff look at OpenAI Codex integrations with Figma. We'll walk through the official "how-to," but more importantly, we'll dive into the real-world messiness that the documentation usually skips over. We’ll look at what it takes to build a truly smooth workflow that doesn’t just stop at code, but carries all the way through to supporting your customers.

What are OpenAI Codex and Figma?

Before we jump into connecting them, let’s get on the same page about the two main players in this story.

What is OpenAI Codex?

You’ve probably heard of OpenAI Codex as the AI model that originally powered GitHub Copilot, the tool that feels like it’s reading your mind while you code. At its core, Codex is an AI system from OpenAI that's very good at turning natural language (plain English) into code in dozens of languages.

Now, here's a quick but important heads-up: the original models named "Codex" were actually deprecated back in March 2023. These days, the term "Codex" is often used to refer to OpenAI's broader set of developer tools, which includes a command-line interface (CLI) and extensions for code editors. These modern tools use newer, more powerful GPT models to get the job done. So, when we talk about integrating with Figma, we're talking about hooking into this current toolset.

What is Figma?

If you’re in the product or design world, you almost certainly know Figma. It’s the collaborative, cloud-based design tool that has pretty much become the industry standard for everything from early wireframes to full-blown, interactive prototypes and massive design systems.

For developers, one of the most game-changing additions has been Dev Mode. It’s a specific workspace inside Figma built to make the handoff from design to development less painful. It gives developers direct access to measurements, CSS specs, downloadable assets, and even ready-to-copy code snippets. This focus on bridging the design-dev gap makes it a perfect candidate for pairing with an AI coding assistant.

The official approach: How OpenAI Codex integrations with Figma work

So, how do you get these two powerful tools to talk to each other? The technical magic behind it all relies on a common language they can both speak.

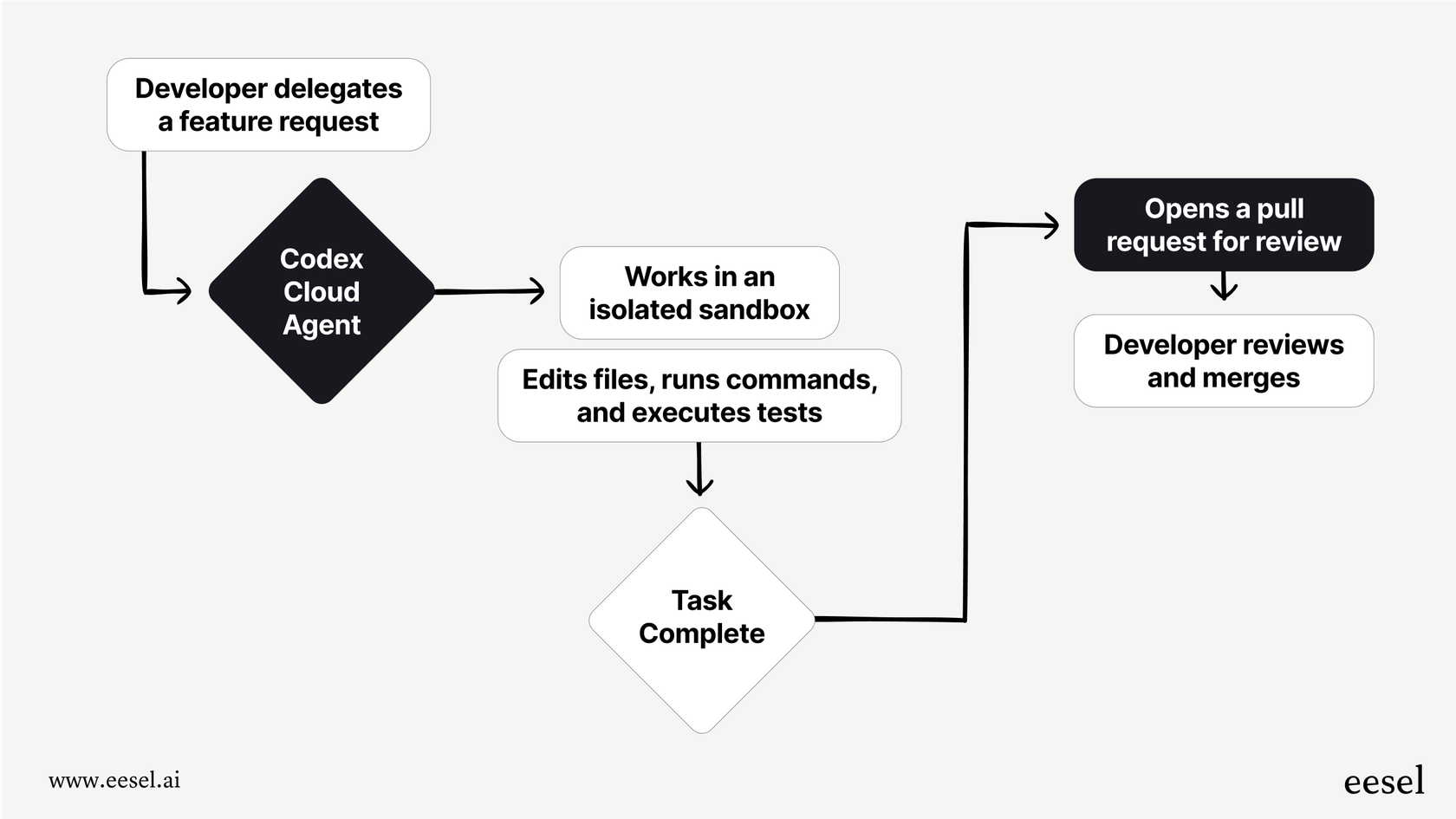

Understanding the Model Context Protocol (MCP)

The whole thing is built on something called the Model Context Protocol (MCP). It’s an open standard that basically acts as a universal adapter for AI.

Think of it like a USB port for AI models. A USB port lets you plug in all sorts of different devices (a keyboard, a mouse, a hard drive), and your computer just knows what to do with them. MCP does the same thing for AI. It provides a standard way to "plug in" different sources of information (like a Figma file, a web browser, or a database) to give an AI model fresh, real-time context. Instead of only knowing what it was trained on months ago, the AI can now see and use live information from other tools.
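
To make the "universal adapter" idea a little more concrete, here is a minimal sketch of what an MCP client sends over the wire. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are methods defined by the protocol. The tool name `example_design_tool` and its `node_id` argument are hypothetical placeholders, not real Figma tool names, and the sketch only builds the messages rather than sending them.

```python
import json
from itertools import count

# Simple counter so every request gets a unique JSON-RPC id.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict, the envelope MCP messages use."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Step 1: ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Step 2: call one of those tools.
# ASSUMPTION: the tool name and arguments below are made up for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "example_design_tool", "arguments": {"node_id": "1:2"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice your editor or CLI builds these messages for you; the point is simply that every MCP server, Figma's included, is addressed in the same standard shape.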

The role of the Figma MCP server

To make its design data available to an AI, Figma runs what's called an "MCP server." This server is the gateway that lets any MCP-compatible tool, like the Codex developer tools, pull information directly from your Figma files. It's how the AI gets to "see" your designs.

According to Figma's documentation, there are a couple of ways to connect to this server:

- Desktop MCP server: This runs locally on your machine through the Figma desktop app. It's perfect for a workflow where you might click on a component in Figma and then ask the AI to build it in your code editor.
- Remote MCP server: This is a hosted web address ("https://mcp.figma.com/mcp") that lets cloud-based tools and services connect directly without needing the desktop app running.

The end goal is pretty straightforward: give the AI direct access to your design frames, components, color variables, and layout specs. With that context, it can generate code that isn't just functional but is also perfectly aligned with your established design system.

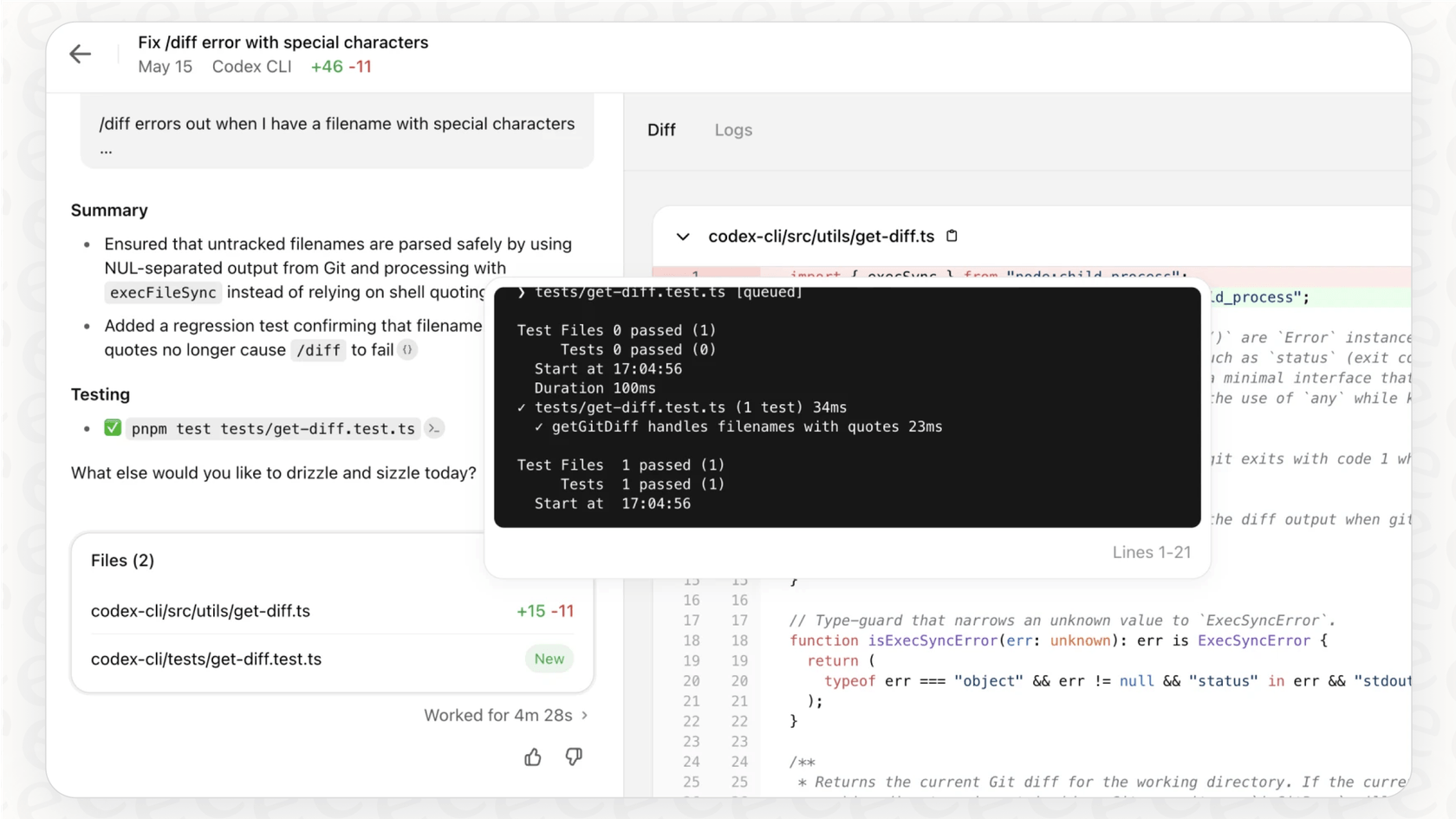

The intended workflow

On paper, the process sounds like a dream. A developer connects their Codex tool (probably running inside an editor like VS Code) to Figma's MCP server. They grab a link to a specific frame in a Figma file, paste it into their editor, and write a prompt like, "Build me a React component based on this design, and make it pixel-perfect."

Since the AI can access the design context through the MCP server, it can see the component names, pull the exact hex codes for your brand colors, use the right font sizes, and understand the spacing, all leading to incredibly accurate code. At least, that's the theory. You can find the official docs on this over at OpenAI's developer site.
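
If you're curious what the tool has to pull out of that pasted link, here is a rough sketch. It assumes the common Figma link shape of `/design/<file key>/<name>?node-id=<id>` (older links use `/file/`), and that the hyphen in the URL's `node-id` maps to a colon in the node ID. The example link, file key, and the `parse_figma_link` helper are all made up for illustration.

```python
from urllib.parse import urlparse, parse_qs


def parse_figma_link(link: str) -> dict:
    """Extract the file key and node ID from a Figma frame link.

    ASSUMPTION: links look like /design/<key>/<name>?node-id=12-345
    (or /file/<key>/... for older links).
    """
    parsed = urlparse(link)
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2 or parts[0] not in {"design", "file"}:
        raise ValueError(f"not a recognized Figma file link: {link}")
    file_key = parts[1]
    node_values = parse_qs(parsed.query).get("node-id", [])
    # URLs write the node ID with a hyphen; convert it to the colon form.
    node_id = node_values[0].replace("-", ":") if node_values else None
    return {"file_key": file_key, "node_id": node_id}


# Hypothetical link, not a real file.
example = "https://www.figma.com/design/AbC123xyz/Checkout-Flow?node-id=12-345&m=dev"
info = parse_figma_link(example)

prompt = (
    f"Build a React component from Figma node {info['node_id']} "
    f"in file {info['file_key']}."
)
print(prompt)
```

A real integration does this parsing for you, but it shows why the link matters: the file key and node ID are what tell the server exactly which frame to describe.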


The reality of OpenAI Codex integrations with Figma: Why they're complex

While the official workflow sounds clean and simple, the reality on the ground is… well, a lot messier. This setup is far from a one-click install and is really only approachable for developers who are very comfortable working under the hood.

A look at the real-world setup process

If you poke around community forums, you’ll quickly see the disconnect between the official docs and the real-world experience. A great example is this Reddit thread where developers share the hurdles they faced. The process isn't just "turn it on"; it often involves a bunch of non-obvious steps that aren't mentioned in the main guides:

- Manual configuration: You have to find and manually edit a configuration file called "config.toml" on your machine just to tell the Codex tool that the Figma server even exists.
- CLI for authentication: Even if you only want to use the VS Code extension, you first have to open your terminal and authenticate your account through the command-line interface (CLI).
- Token management: You'll also need to go into your Figma settings, generate a personal access token (basically a unique password for apps), and then figure out how to set it up as an environment variable so the tools can securely connect.
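
To give a feel for what those steps involve, here is a small preflight sketch. It prints the kind of fragment you might add to "config.toml" and checks that a token environment variable is set. Treat the details as assumptions to verify against the current Codex and Figma docs: the `[mcp_servers.figma]` table with a `url` key is our best understanding of the Codex config format, the variable name `FIGMA_ACCESS_TOKEN` is hypothetical, and how the token actually reaches the server depends on your tool and is not shown here.

```python
import os

# From the article: Figma's hosted MCP endpoint.
REMOTE_MCP_URL = "https://mcp.figma.com/mcp"

# ASSUMPTION: hypothetical variable name; use whatever your tool expects.
TOKEN_ENV_VAR = "FIGMA_ACCESS_TOKEN"


def render_figma_mcp_config(url: str) -> str:
    """Return a TOML fragment that registers a Figma MCP server.

    ASSUMPTION: the table and key names follow our understanding of
    the Codex config format; check the official docs before using.
    """
    return f'[mcp_servers.figma]\nurl = "{url}"\n'


def token_is_set(env_var: str, environ=os.environ) -> bool:
    """True if the environment variable exists and is not blank."""
    return bool(environ.get(env_var, "").strip())


print("Fragment to add to config.toml:")
print(render_figma_mcp_config(REMOTE_MCP_URL))

if token_is_set(TOKEN_ENV_VAR):
    print(f"{TOKEN_ENV_VAR} is set.")
else:
    print(f"{TOKEN_ENV_VAR} is missing; export it before starting your editor.")
```

Note that the script never prints the token itself, which is the whole reason for keeping it in an environment variable rather than pasting it into a config file.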

This is a long way from the simple, user-friendly experience you might expect. It requires a solid comfort level with the command line, editing config files, and handling API keys, which immediately puts it out of reach for most designers, product managers, and even some developers.

The limitations of a code-only approach

Let's say you push through the tricky setup and get it working. That’s a huge win! But it only solves one, very specific part of the product development puzzle: turning a static design into initial code. It doesn't do anything to help with everything that comes after.

- Ongoing support: The feature is live, and customers start using it. What happens when they have questions? Your support team is now on the front lines, but they don't have a direct line into the design or development context.
- Internal knowledge: How do you get your internal teams up to speed? Your customer support agents, sales engineers, and marketing team all need to understand how the new feature works, but they aren't going to be digging through code or Figma files to find out.
- Accessibility: This entire workflow is built by developers, for developers. It completely leaves out product managers, support leads, and designers who could get a ton of value from AI automation but don't spend their days inside a code editor.

A better way: Unifying knowledge beyond the design file

An efficient workflow needs an AI that understands the entire product lifecycle, not just a single Figma file. While the Codex integration is laser-focused on turning a design into code, a platform like eesel AI is built to automate the support and internal knowledge management that happens after the feature is built.

Instead of just connecting to a design tool, eesel AI plugs into your company's entire brain:

- Help desks: It can learn from years of past conversations in Zendesk, Freshdesk, and Intercom.
- Internal wikis: It instantly absorbs all your documentation from places like Confluence, Google Docs, and Notion.
- Chat tools: You can deploy helpful AI assistants directly where your teams (and customers) already are, like in Slack or Microsoft Teams.

This is where the difference becomes really clear. The complex, developer-heavy setup for Codex and Figma is the polar opposite of eesel AI’s self-serve platform, where a non-technical support manager can build, test, and launch a powerful AI agent in minutes. No coding, no config files.

Pricing and availability

Okay, let's talk about what it costs to get access to these tools. Understanding the pricing and plan requirements is pretty important for deciding if this integration is even a good fit for your team.

OpenAI Codex pricing

This one is a bit fuzzy. OpenAI doesn't have a public, standalone price for the Codex developer tool itself. Access is usually bundled in with other services. For example, it's part of what makes GitHub Copilot work (which has its own monthly subscription), or its usage is billed against your general API credits if you're using the underlying models directly.

Figma pricing

For Figma, things are much clearer. To use the MCP server in any meaningful way, you’re going to need a paid plan with a Dev or Full seat. Users on the free plan are limited to just six tool calls per month, which is basically nothing if you're trying to do real development work.

Here’s a quick breakdown of the relevant Figma plans:

| Plan | Price (per editor/month, annual billing) | Key Features for Devs |
|---|---|---|
| Professional | $12 | Full design and prototyping features. |
| Organization | $45 | Design systems, advanced security, and admin controls. |
| Enterprise | $75 | Scalable security, dedicated support, and controls. |
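
For budgeting, the per-editor prices above translate into yearly totals with some quick arithmetic. This sketch uses only the figures from the table; the team size of five editors is an arbitrary example, and it assumes every seat is billed at the listed editor price, which may not match how Dev seats are priced on your plan.

```python
# Prices from the table above: USD per editor per month, annual billing.
PRICE_PER_EDITOR_MONTH = {
    "Professional": 12,
    "Organization": 45,
    "Enterprise": 75,
}


def annual_cost(plan: str, editors: int) -> int:
    """Yearly cost in USD: monthly price x number of editors x 12 months."""
    return PRICE_PER_EDITOR_MONTH[plan] * editors * 12


# ASSUMPTION: a five-person team, purely as an example.
for plan in PRICE_PER_EDITOR_MONTH:
    print(f"{plan}: ${annual_cost(plan, 5):,} per year for 5 editors")
```

For five editors, that works out to $720, $2,700, and $4,500 per year respectively.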

The takeaway here is that connecting Figma to an AI coding assistant is effectively a paid feature: to use it for real work, you need a Dev or Full seat on a paid plan.

From design-to-code to design-to-support

OpenAI Codex integrations with Figma represent a fascinating, powerful, but very technical frontier in AI-assisted development. For developers willing to roll up their sleeves, the direct MCP connection is a promising tool that can definitely speed up the initial coding process. However, it's still complex to set up and only addresses a small slice of the much bigger product lifecycle challenge.

The future of this space isn't just about generating code faster. It's about building a connected ecosystem where knowledge flows seamlessly from a design file, to the developer's editor, and finally, to the customer support team who helps real people use the final product. For teams that want to automate that entire chain without dedicating developer time to the task, a different approach is needed.

The smarter path to workflow automation

Instead of spending days wrestling with command-line tools and obscure ".toml" files, what if you could automate customer support for your new feature in the time it takes to drink a coffee?

eesel AI offers a radically simpler, self-serve platform that does just that. It connects all of your scattered knowledge sources to power AI agents that can handle frontline support questions, assist your human agents with complex issues, and automatically triage incoming tickets. Best of all, it has a powerful simulation mode, which lets you test your AI on thousands of your own historical support tickets. You can see exactly what its resolution rate would be before you ever turn it on for customers. It’s all about confident, risk-free automation.

Ready to automate more than just code? Try eesel AI for free and see how easy it is to build an AI agent that understands your entire business.

Frequently asked questions

OpenAI Codex integrations with Figma aim to automate the process of converting design files into functional code. They tackle the common challenge of manual translation and back-and-forth between designers and developers, speeding up initial code generation from Figma designs.

Technically, OpenAI Codex integrations with Figma utilize the Model Context Protocol (MCP). Figma provides an MCP server that allows Codex developer tools to access design data directly from Figma files. This gives the AI real-time context from designs to generate corresponding code.

In reality, setting up OpenAI Codex integrations with Figma involves significant manual configuration. Users often need to edit configuration files, use a command-line interface for authentication, and manage personal access tokens from Figma settings. It requires comfort with advanced developer tools.

The primary limitation is that OpenAI Codex integrations with Figma are focused solely on generating initial code from designs. They don't address broader aspects of the product lifecycle, such as ongoing customer support, internal knowledge management, or accessibility for non-developers.

For OpenAI's Codex tools, pricing is typically tied to API usage credits or bundled with services like GitHub Copilot. For Figma, using the MCP server for these integrations requires a paid plan with a Dev or Full seat, as the free plan offers very limited tool calls.

Although the original "Codex" models were deprecated, current OpenAI Codex integrations with Figma leverage OpenAI's broader set of developer tools. These modern tools now utilize newer, more powerful GPT models to convert natural language into code, ensuring the functionality remains relevant.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.