Let's be real: AI is everywhere in the dev world. The idea of plugging it into something like Bitbucket to speed things up isn't science fiction anymore. It’s a real way to get more done, write better code, and maybe even ship a little faster. But trying to figure out how to actually do it can feel like wading through a swamp of marketing buzzwords.

This guide is here to cut through all that noise. We’re going to look at what OpenAI Codex integrations with Bitbucket are, what you can actually do with them, the different ways to set them up, and the hurdles you'll almost definitely run into.

By the end, you'll have a much clearer picture of how to connect these tools in a way that solves actual problems for your development team.

What are OpenAI Codex integrations with Bitbucket?

Before we get into the "how," we need to be on the same page about the "what." The AI space moves incredibly fast, so let's get our definitions straight. It’ll make everything else click into place.

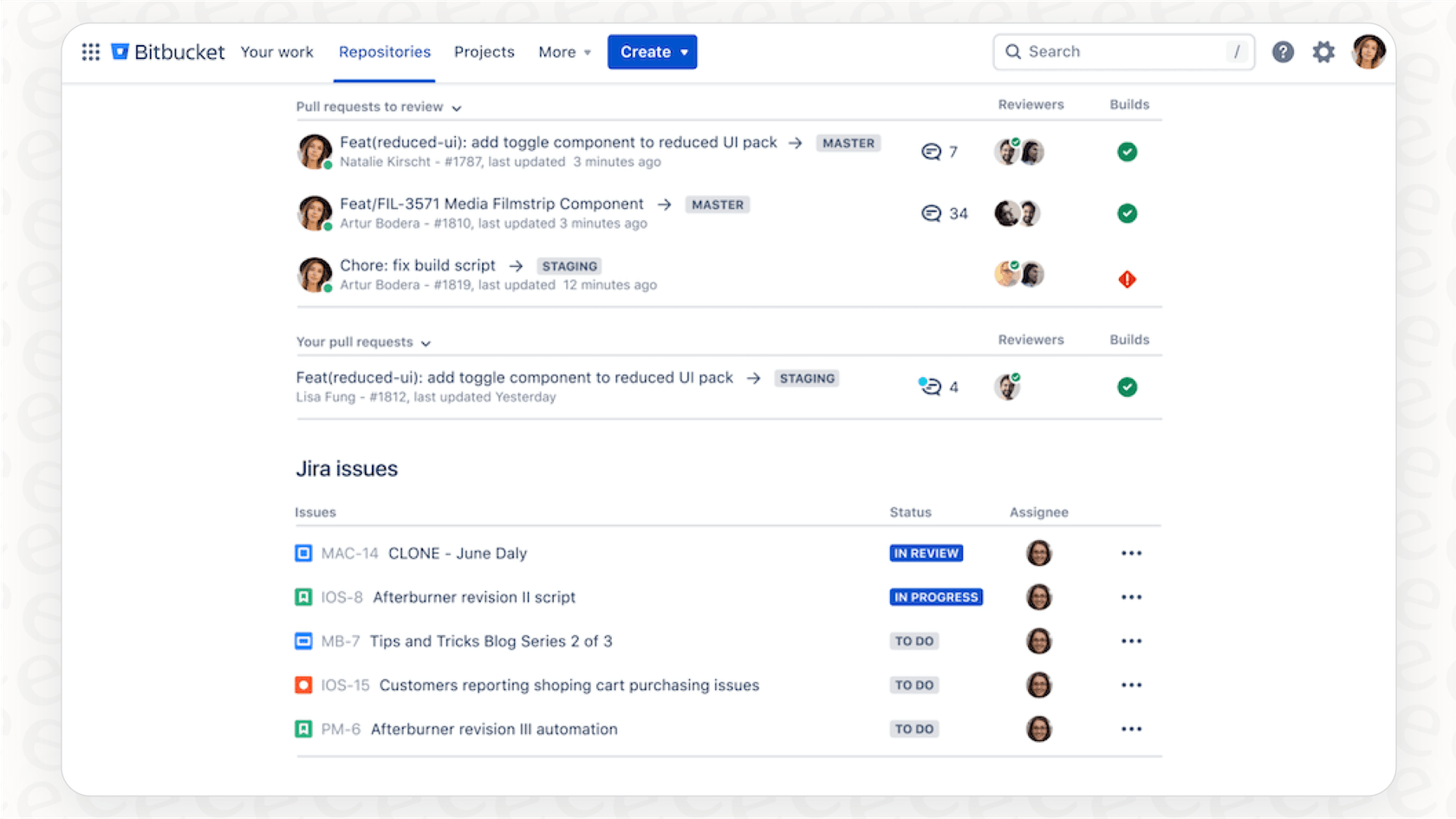

What is Bitbucket?

You probably know this one, but just in case: Bitbucket is Atlassian's Git platform for hosting and collaborating on code. It's a lot like competitors such as GitHub and GitLab, but its superpower is how neatly it fits into the Atlassian family, especially Jira. Teams often pick it for its built-in CI/CD (Bitbucket Pipelines) and its focus on enterprise-grade features and security.

What is OpenAI Codex? A necessary clarification

Okay, here’s where things get a bit tricky. If you’ve been keeping an eye on AI for a while, you’ll remember the original OpenAI Codex model. It was the tech behind the early versions of GitHub Copilot and felt like magic at the time. Well, that specific model was officially retired back in March 2023.

So, what does that mean for us today?

- OpenAI now uses the "Codex" name for a newer, more ambitious software engineering agent that can tackle entire coding projects.

- The capabilities of the original Codex have been completely folded into and surpassed by newer large language models (LLMs), like the GPT-4 series.

So for the rest of this guide, when we say "OpenAI Codex integrations," what we’re really talking about is using OpenAI's latest and greatest language models to do coding work inside your Bitbucket workflow.

What can you actually do with OpenAI Codex integrations with Bitbucket?

The whole point of setting up these integrations is to get rid of the boring, repetitive parts of a developer's day. When you get it right, connecting an AI to your repository is like having an extra junior developer on your team who works 24/7 and never gets tired. Here are a few of the most popular things people are doing:

- Automated code reviews: Picture an AI that does a first pass on every single pull request. It could automatically drop comments pointing out syntax goofs, suggesting tweaks based on your team's style guide, or flagging code that smells a bit off. This doesn't mean your senior devs are out of a job; it just means they don't have to waste time on the small stuff and can focus on the big-picture architecture.

-

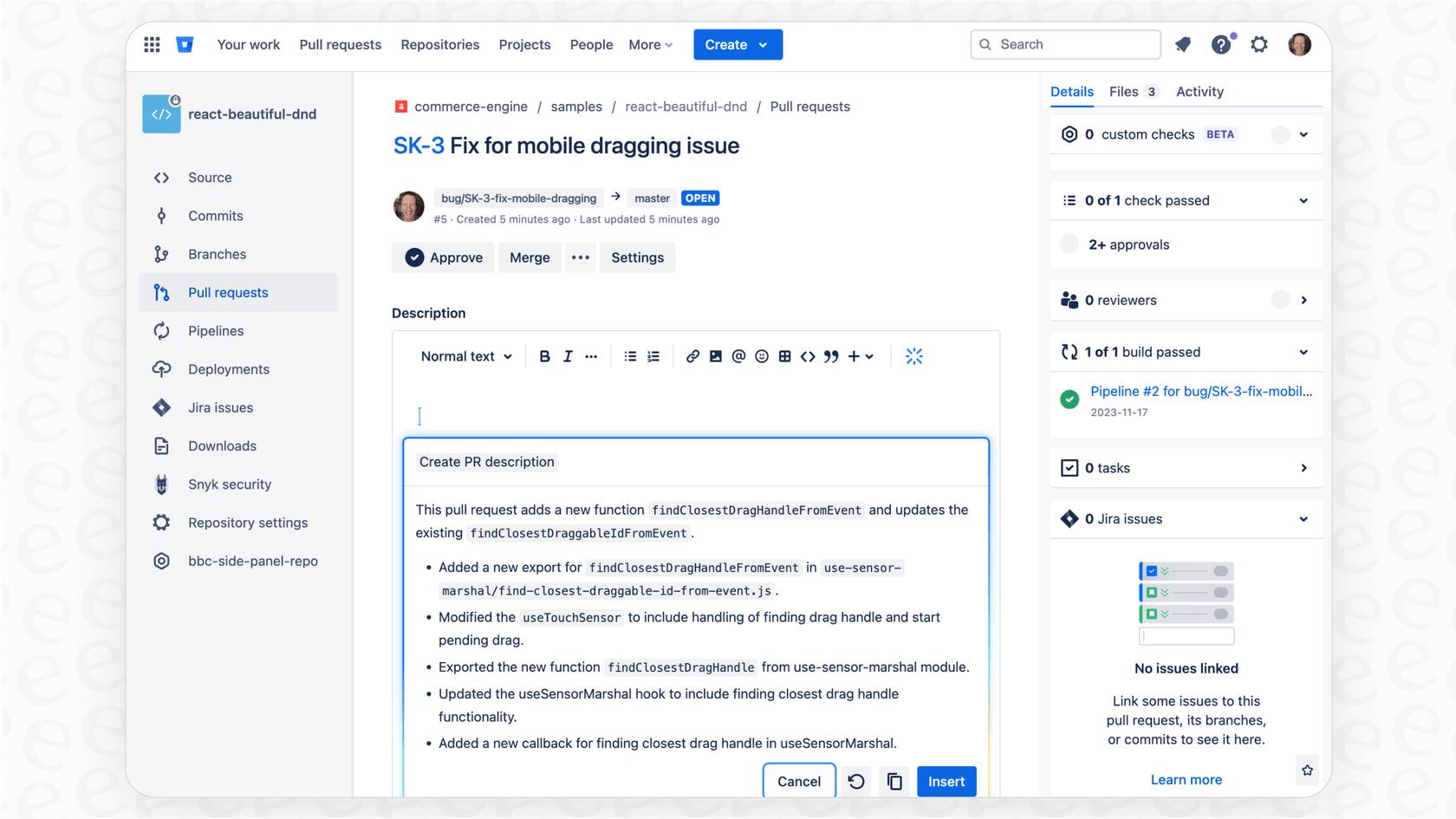

AI-generated pull request summaries: We’ve all been there, staring at a PR with a description that just says “bug fix.” An AI can read the commit messages and code changes to draft a clear summary of what’s going on. This saves the developer a few minutes and gives reviewers the context they need to get started right away.

- Intelligent code Q&A: This one is a huge deal. It lets developers ask plain English questions about the code and get immediate answers. Things like, “What changed in the payments API in this PR?” or “Show me where the user auth logic lives.” It's like having a teammate with a perfect photographic memory of the entire codebase.

- Automated documentation: Let's face it, docs are almost always out of date. An integration can automatically update your READMEs, internal wikis, or API documentation whenever the code it describes changes. This is a massive step toward finally solving the stale documentation problem.
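To make the pull request summary idea a bit more concrete, here's a minimal sketch of how the prompt for it might be assembled. Everything in it is an assumption for illustration: the function name `build_summary_messages`, the `MAX_DIFF_CHARS` limit, and the prompt wording are hypothetical, and the output is simply a list of chat-style messages you would then send to whichever model you use.

```python
from typing import Dict, List

# Hypothetical limit; pick a value that fits the context window of your model.
MAX_DIFF_CHARS = 12_000


def build_summary_messages(title: str, commit_messages: List[str], diff: str,
                           max_diff_chars: int = MAX_DIFF_CHARS) -> List[Dict[str, str]]:
    """Build chat messages asking a model to draft a pull request summary."""
    # Large diffs are cut off so the request stays within a predictable size.
    truncated = len(diff) > max_diff_chars
    diff_text = diff[:max_diff_chars]
    if truncated:
        diff_text += "\n[diff truncated]"

    commits = "\n".join(f"- {message}" for message in commit_messages) or "- (none)"
    user_prompt = (
        f"Pull request title: {title}\n\n"
        f"Commit messages:\n{commits}\n\n"
        f"Diff:\n{diff_text}\n\n"
        "Write a short summary of what changed and why, for a reviewer."
    )
    return [
        {"role": "system", "content": "You summarize pull requests for code reviewers."},
        {"role": "user", "content": user_prompt},
    ]
```

Keeping prompt construction in a pure function like this makes it easy to unit test and tweak without touching any API code.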

Current methods for OpenAI Codex integrations with Bitbucket

So, how do you actually wire all this up? You've got a few options, each with its own balance of ease, power, and price.

Atlassian's native AI features

Atlassian is building its own AI tools, like Atlassian Intelligence and Rovo, right into Bitbucket. These are handy for simple things, like generating a PR description with a single click.

The catch is that you’re playing in Atlassian’s sandbox. These tools are convenient, but they don't always have the full power or flexibility of using OpenAI's models directly. If you have a specific, custom workflow in mind or need to do some heavy-duty code analysis, you’ll probably find the built-in features a bit limiting.

Third-party integration platforms

A bunch of no-code and low-code platforms have popped up to act as the glue between different apps, like Albato, Latenode, and Autonoly. They're pretty good for creating simple "if this, then that" workflows. For example, you could easily set up a rule: "When a new pull request is opened in Bitbucket, send the code to OpenAI and post its summary as a comment."

Workflow: New pull request in Bitbucket → third-party platform → send code to OpenAI API → generate summary → post summary as comment in Bitbucket.

The downside is that these platforms can get pricey, and their pre-built triggers often don't have enough context about your repository to provide truly deep insights. A simple API call can summarize a file change, but it can't tell you why that change goes against a decision your team made six months ago.
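For a sense of what that "new PR in, summary comment out" rule boils down to under the hood, here's a rough sketch of the same three steps in plain Python. It assumes Bitbucket Cloud's REST API 2.0 (the pull request `diff` and `comments` endpoints), OpenAI's Chat Completions endpoint, and a Bitbucket access token sent as a Bearer token. The function names, the `Http` transport type, and the prompt are hypothetical, and the model name is left as a parameter for you to fill in.

```python
import json
import urllib.request
from typing import Callable, Dict, Optional

BITBUCKET_API = "https://api.bitbucket.org/2.0"
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"

# An HTTP function takes (method, url, headers, body) and returns the response text.
Http = Callable[[str, str, Dict[str, str], Optional[bytes]], str]


def urllib_http(method: str, url: str, headers: Dict[str, str], body: Optional[bytes]) -> str:
    """Default transport built on the standard library."""
    request = urllib.request.Request(url, data=body, headers=headers, method=method)
    with urllib.request.urlopen(request, timeout=60) as response:
        return response.read().decode("utf-8")


def summarize_pull_request(http: Http, workspace: str, repo_slug: str, pr_id: int,
                           bitbucket_token: str, openai_key: str, model: str) -> str:
    """Fetch a PR diff, ask the model for a summary, and post it as a PR comment."""
    pr_url = f"{BITBUCKET_API}/repositories/{workspace}/{repo_slug}/pullrequests/{pr_id}"
    bb_headers = {"Authorization": f"Bearer {bitbucket_token}"}

    # 1. New pull request in Bitbucket: read its diff.
    diff = http("GET", f"{pr_url}/diff", bb_headers, None)

    # 2. Send the code to the OpenAI API and generate a summary.
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Summarize this pull request diff for reviewers."},
            {"role": "user", "content": diff},
        ],
    }
    ai_headers = {"Authorization": f"Bearer {openai_key}", "Content-Type": "application/json"}
    reply = json.loads(http("POST", OPENAI_CHAT_URL, ai_headers, json.dumps(payload).encode()))
    summary = reply["choices"][0]["message"]["content"]

    # 3. Post the summary back as a comment on the pull request.
    comment = {"content": {"raw": summary}}
    http("POST", f"{pr_url}/comments", {**bb_headers, "Content-Type": "application/json"},
         json.dumps(comment).encode())
    return summary
```

Passing the transport in as `http` is a design choice for this sketch: you can use `urllib_http` for real calls and swap in a fake function when testing. Notice that the model only ever sees the raw diff, which is exactly the limited-context problem described above.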

Custom scripts with Bitbucket Pipelines

This is the roll-up-your-sleeves, most powerful option. You can write a script (say, in Python) that runs as a step in a Bitbucket Pipeline whenever a trigger fires, such as a push to a branch or an update to a pull request. The script can then call the OpenAI API with whatever instructions you want and act on the response, for example by posting a comment on the pull request.

This video demonstrates how to automate code reviews with GenAI in Bitbucket Pipelines, which can save time, improve security, and ensure consistent coding standards.

The upside is complete control. You can tweak your prompts, build out complex logic, and create a workflow that’s a perfect fit for your team. The obvious downside is that it takes a developer's time and effort to build and maintain. It's easily the most work-intensive option, and these custom scripts can sometimes be fragile.
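One way to make a custom script a little less fragile is to keep the pipeline entry point small and forgiving. The sketch below is only an illustration of that idea. It relies on variables Bitbucket Pipelines provides (`BITBUCKET_WORKSPACE`, `BITBUCKET_REPO_SLUG`, and `BITBUCKET_PR_ID`, which is only set on pull request builds), while `OPENAI_API_KEY` is an assumed name for a secured repository variable, and `PipelineContext`, `load_context`, and `run` are hypothetical names.

```python
import sys
from dataclasses import dataclass
from typing import Callable, Mapping, Optional


@dataclass(frozen=True)
class PipelineContext:
    workspace: str
    repo_slug: str
    pr_id: int
    openai_key: str


def load_context(env: Mapping[str, str]) -> Optional[PipelineContext]:
    """Return the PR context, or None when this build is not for a pull request."""
    pr_id = env.get("BITBUCKET_PR_ID")  # set by Pipelines only on pull request builds
    if not pr_id:
        return None
    # A missing variable raises KeyError here, so misconfiguration fails loudly.
    return PipelineContext(
        workspace=env["BITBUCKET_WORKSPACE"],
        repo_slug=env["BITBUCKET_REPO_SLUG"],
        pr_id=int(pr_id),
        # OPENAI_API_KEY is an assumed name for a secured repository variable.
        openai_key=env["OPENAI_API_KEY"],
    )


def run(env: Mapping[str, str], review: Callable[[PipelineContext], None]) -> int:
    """Run the review step and return a process exit code."""
    context = load_context(env)
    if context is None:
        print("Not a pull request build; skipping AI review.")
        return 0
    try:
        review(context)
    except Exception as error:  # fail soft: an AI outage should not block the build
        print(f"AI review failed, continuing: {error}", file=sys.stderr)
    return 0
```

In a pipeline step you would call something like `sys.exit(run(os.environ, my_review_function))`, where `my_review_function` is your own code that talks to the model. Returning 0 even when the review fails is a deliberate choice here, so a flaky API call becomes a warning in the logs rather than a red build.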

The hidden challenges (and how to solve them)

Getting two APIs to talk to each other is usually the easy part. The hard part, where most teams get tripped up, is making the integration genuinely useful. Here are the real problems you'll hit and how to think about them.

The context gap: An AI with no company knowledge

This is, without a doubt, the biggest problem you'll face. A standard call to the OpenAI API has no clue about your company's coding standards, architectural decisions, or project history. It gives generic advice that might be technically fine but totally wrong for your specific codebase. It might suggest using a library you've banned or recommend a pattern that your team lead actively dislikes.

This is where you need more than just a smart AI; you need an intelligence layer that understands your organization. While OpenAI provides the horsepower, a platform like eesel AI provides that critical context. It connects to all your company’s knowledge, not just the code. We're talking about design docs in Confluence, project history in Jira Service Management, and even technical debates in Slack. By pulling all of this together, eesel AI helps you build an assistant that gives answers that are actually relevant to how your team works.
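To see what "giving the AI context" can mean at its very simplest, independent of any particular platform, here's a small sketch that injects a handful of written team rules into the prompt. It is not how eesel AI or any other product works internally; the function `build_review_messages` and the example `TEAM_GUIDELINES` are made up for illustration.

```python
from typing import Dict, List


def build_review_messages(diff: str, guidelines: List[str]) -> List[Dict[str, str]]:
    """Build chat messages that ask for a review constrained by team guidelines."""
    # Number the rules so the model can cite which one a comment is based on.
    rules = "\n".join(f"{number}. {rule}" for number, rule in enumerate(guidelines, start=1))
    system_prompt = (
        "You are reviewing a pull request for our team.\n"
        "Follow these team guidelines, and cite the guideline number when you flag an issue:\n"
        f"{rules}\n"
        "If none of the guidelines apply, say so instead of inventing rules."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Review this diff:\n{diff}"},
    ]


# Hypothetical guidelines; in practice these might come from a file kept in the repository.
TEAM_GUIDELINES = [
    "Do not add new dependencies on the 'requests' library; use the shared HTTP client.",
    "Database access goes through the repository layer, never directly from handlers.",
]
```

A hand-maintained list like this only covers what someone remembered to write down, which is why the harder version of the problem involves pulling context from docs, tickets, and chat history.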

The setup headache: Simple tools vs. complex needs

You often get stuck in a frustrating spot. The no-code tools are simple to start with but aren't flexible enough for real engineering work. The custom scripts are powerful but can turn into a huge maintenance burden. There isn't a great middle ground for building sophisticated AI workflows without needing a team to manage them.

This is where eesel AI's "go live in minutes" approach comes in handy. It’s a self-serve platform that aims to give you the power of a custom-built solution without all the engineering headaches. With simple integrations for your existing tools, you can set up powerful AI agents through a dashboard, giving you both ease of use and deep customization.

The trust deficit: How do you roll out AI safely?

This is the big question: how can you trust an AI to start commenting on your team's pull requests without a ton of testing? Just letting an AI loose on your core workflow is a huge risk. What if it gives terrible advice, confuses people, or just creates more noise?

Most tools just kind of gloss over this problem, but eesel AI makes it a priority. It has a simulation mode that lets you test your AI agent on thousands of your past PRs or support tickets in a safe, sandboxed environment. You can see exactly how it would have behaved, get forecasts on its performance, and tweak it until it's perfect, all before it interacts with a single developer. This lets you roll out AI with confidence, not just hope.
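If you're rolling your own integration, you can approximate the same idea with an offline replay: run your review logic over diffs from old pull requests and look at what it would have said, without posting anything. The sketch below is a bare-bones version of that and is not a description of eesel AI's simulation mode. The names `ReplayReport`, `replay_reviews`, and `fake_review` are hypothetical, and `fake_review` is a stand-in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ReplayReport:
    prs_replayed: int
    prs_with_comments: int
    total_comments: int
    comments_by_pr: Dict[int, List[str]]


def replay_reviews(past_diffs: Dict[int, str],
                   review: Callable[[str], List[str]]) -> ReplayReport:
    """Run a review function over historical PR diffs without posting anything."""
    comments_by_pr = {pr_id: review(diff) for pr_id, diff in past_diffs.items()}
    return ReplayReport(
        prs_replayed=len(comments_by_pr),
        prs_with_comments=sum(1 for comments in comments_by_pr.values() if comments),
        total_comments=sum(len(comments) for comments in comments_by_pr.values()),
        comments_by_pr=comments_by_pr,
    )


def fake_review(diff: str) -> List[str]:
    """Stand-in for a model call: flags leftover debug prints."""
    return ["Remove debug print."] if "print(" in diff else []
```

Reading through `comments_by_pr` for a sample of past PRs gives you a feel for how noisy the agent would be before anyone on the team sees a single comment.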

Moving from a simple connection to real intelligence

Hooking up OpenAI to Bitbucket can be a huge help for automating away the boring stuff, improving code quality, and helping your team move faster. As we’ve covered, you have a few ways to get it done, from built-in features to fully custom scripts.

But a great integration is about more than just wiring up two APIs. The real magic happens when you can give the AI the context it needs to understand your team’s unique knowledge. The biggest wins come when you have a powerful workflow that’s still simple to manage, and a way to test and deploy it without taking big risks.

Solving these challenges around context, complexity, and control is exactly what modern AI platforms are designed for, turning a basic API call into an intelligent system that truly works for your team.

Unify your engineering knowledge with eesel AI

Ready to build an AI assistant that actually understands your team? One that can answer tough technical questions by tapping into your team's collective brain?

Connect your development tools like Confluence, Jira Service Management, and Slack with eesel AI. Give your team an internal AI that provides instant, accurate answers grounded in your company's own knowledge. You can get it running in minutes, not months.

Frequently asked questions

What do "OpenAI Codex integrations with Bitbucket" refer to today?

Today, when we discuss OpenAI Codex integrations with Bitbucket, we're talking about using OpenAI's latest and most capable language models, like the GPT-4 series, for coding-related tasks within your Bitbucket workflows. The capabilities of the original Codex model have been superseded and are now integrated into these newer, more powerful LLMs.

What are the main benefits of these integrations?

These integrations can significantly boost efficiency through automated code reviews that flag issues, AI-generated pull request summaries for quicker understanding, and intelligent code Q&A for instant answers about the codebase. They can also automate documentation updates, reducing manual effort.

What are the ways to set up an integration?

You can integrate using Atlassian's native AI features (like Atlassian Intelligence), leverage third-party integration platforms for simpler workflows, or build custom scripts that run within Bitbucket Pipelines for maximum control and flexibility. Each method offers different levels of ease and power.

What is the "context gap"?

The "context gap" refers to the AI's lack of specific company knowledge, such as coding standards, architectural decisions, or project history. Without this context, the AI might provide generic advice that isn't suitable for your unique codebase or team practices, requiring an intelligence layer that understands your organization.

How can teams build trust before rolling out AI?

To build trust, teams should utilize simulation modes that allow testing AI agents on past data in a safe, sandboxed environment. This helps you observe behavior, forecast performance, and refine the AI before it directly interacts with live development workflows, mitigating risks.

What are the limitations of no-code tools?

Simpler, no-code tools might be easy to start with but often lack the deep context about your repository or the flexibility for complex engineering workflows. Their pre-built triggers may not provide enough nuanced information for truly insightful or customized AI assistance.

Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.