AI is definitely shaking up software development. The idea is to automate the boring stuff so engineers can focus on the hard problems. OpenAI Codex is a big name in this space, promising an AI agent that can understand your code, write new features, and even handle pull requests. Hooking it up to GitHub sounds like a dream, right? An AI assistant that can grab a ticket, fix a bug, and ask for a review.

But what’s it really like to use? This guide gives you the full picture of OpenAI Codex integrations with GitHub. We'll get into its features, the setup process, how much it costs, and the common headaches teams are facing. By the end, you'll have a much clearer idea of what you’re getting into.

What are OpenAI Codex integrations with GitHub?

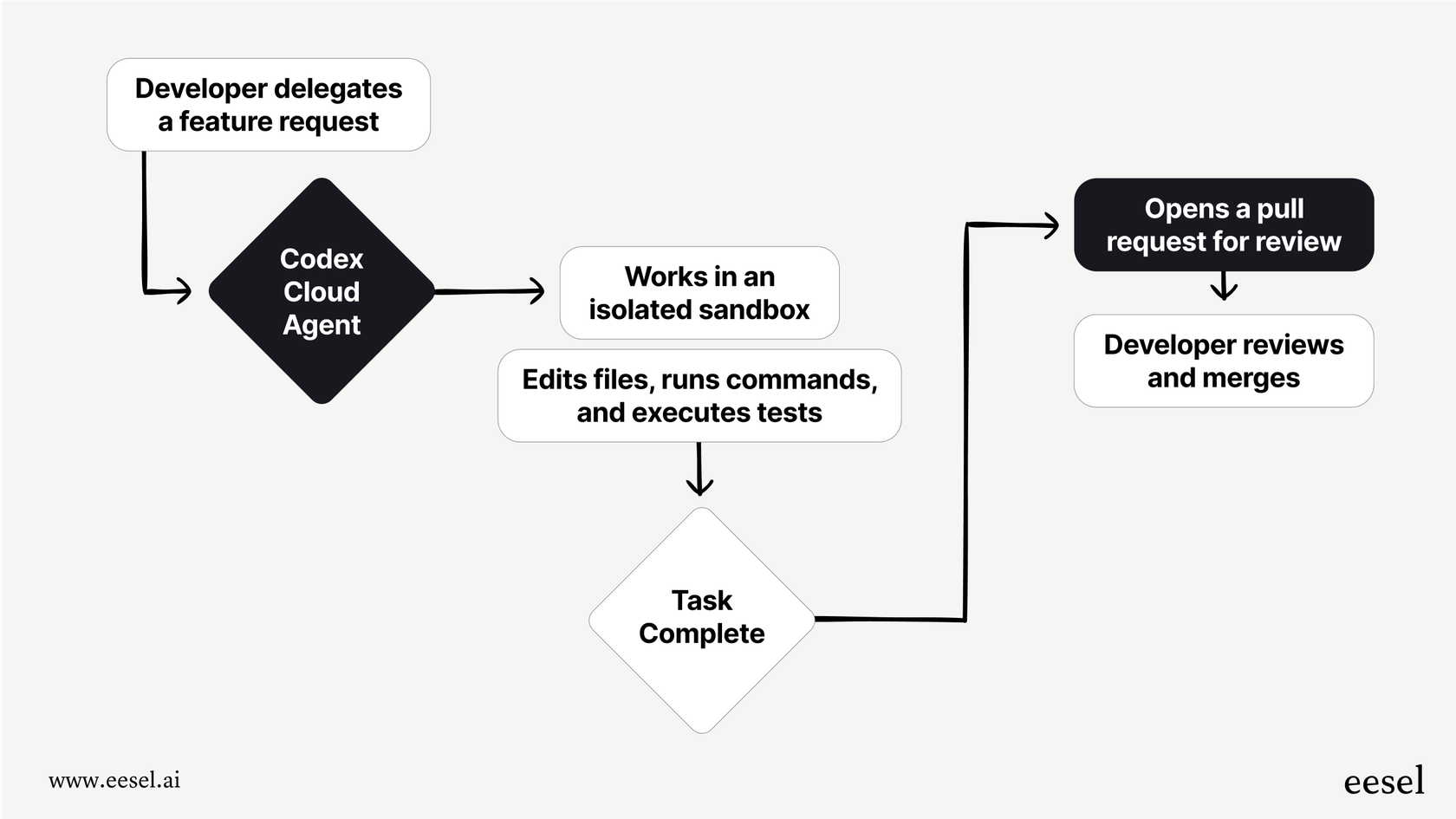

Think of OpenAI Codex as an AI software engineer that works in the cloud. It’s built on "codex-1", a version of OpenAI's o3 model optimized for software engineering. This isn't just a fancy autocomplete. Codex gets its own little sandbox environment with your code loaded in, so it can read files, run tests, and build features from the ground up.

The integration with GitHub is what makes it all tick. When you connect your GitHub account, you give Codex permission to access certain repositories. Then, you can start handing off tasks, like:

- Fixing a bug based on an issue you wrote.
- Cleaning up messy code to make it easier to read.
- Answering questions about how a piece of your codebase works.
- Drafting and submitting a pull request for a human to look over.

This turns Codex from a general coding tool into an agent that actually understands your project's context. You can give it a job and let it run in the background, almost like a new, very quiet teammate.

How OpenAI Codex integrations with GitHub work

Getting Codex up and running means connecting it to your dev environment. You can do this through a web UI or a command-line tool, depending on what you prefer.

Setting up OpenAI Codex integrations with GitHub in ChatGPT

Most people start by using Codex through the ChatGPT interface. When you head to the Codex section, it'll ask you to connect your GitHub account.

-

Authorization: You’ll get sent over to GitHub to approve the "ChatGPT connector" app. Here, you can pick which repos (public or private) you want Codex to have access to.

-

Giving it a task: Once you’re connected, you can tell it what to do in plain English. You can either "Ask" it questions or use the "Code" mode to have it write or change code.

-

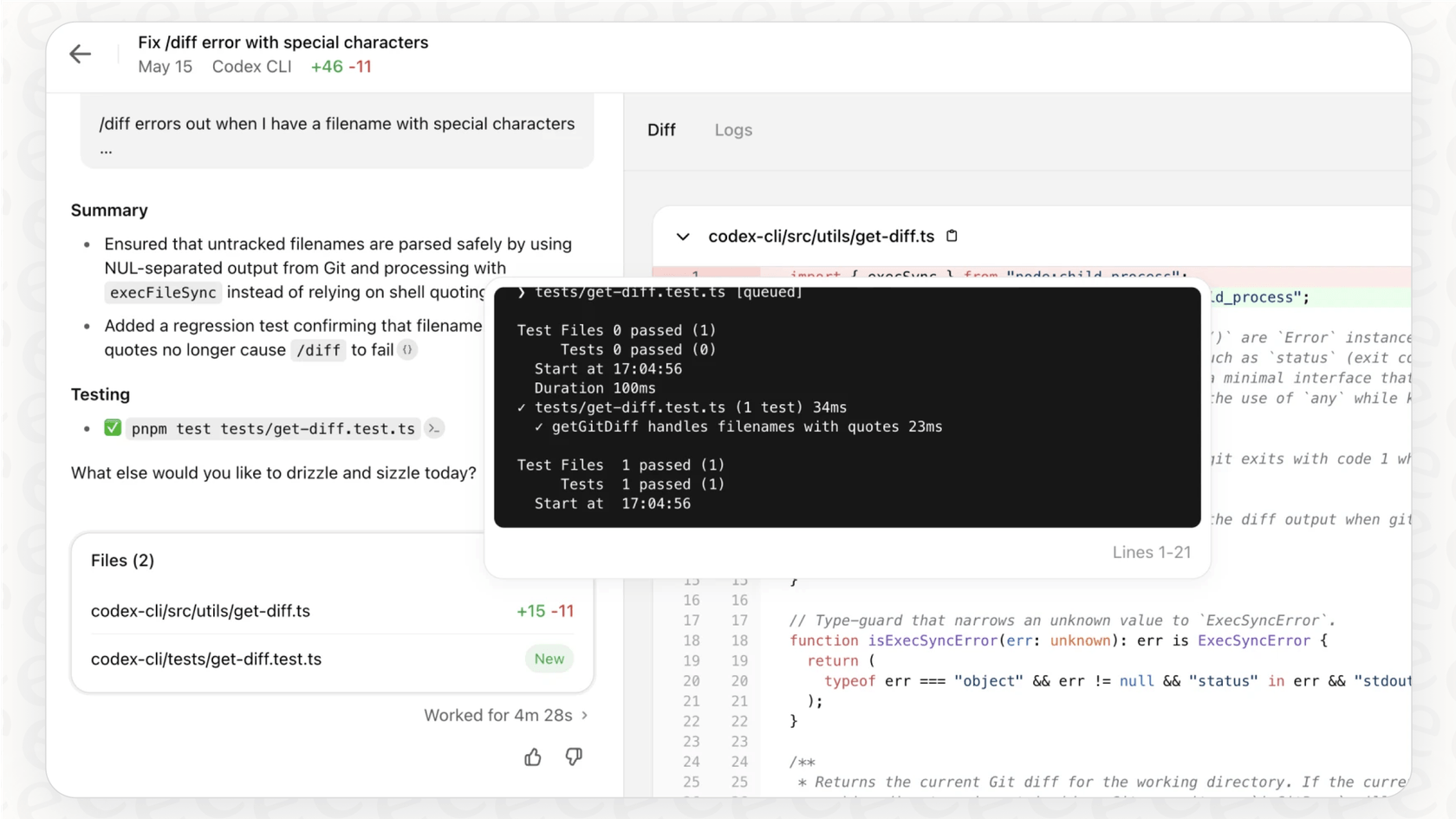

Watching it work: For every task, Codex creates an isolated cloud container, clones your repo, and gets to work. You can see its progress in real-time as it moves through files, runs commands, and makes changes.

-

The pull request: When it’s done, Codex commits the changes and can open a pull request in your GitHub repo for your team to review.

Sounds simple enough, but a lot of developers have run into trouble with the initial setup. Some have gotten stuck in an endless authentication loop. The usual fix is to go into ChatGPT's settings, disconnect the GitHub app, and then try connecting it again. It's a bit clunky and shows there are still some rough edges.

Using the command-line interface (CLI)

If you're more comfortable in the terminal, OpenAI has a Codex CLI. It’s a small, open-source tool that brings the same power to your local machine.

- Authentication: You just sign in with your ChatGPT account, and it handles the API key setup for you.

- Local context: The CLI works with your local files, so it doesn't need to clone anything into a separate environment. It has the context right away.

- Git integration: It plays nicely with your existing Git setup. You can easily see diffs, stage changes, and commit the code the AI generates.

Using AGENTS.md

To give Codex a better idea of how your project works, you can add an "AGENTS.md" file to the root of your repository. It's like a "README.md" but for the AI. You can put instructions in there, like:

- Commands to run to set up the environment.
- How to kick off your test suite.
- Any style guides or best practices it should follow.

This is a neat idea, but it means you have to create and maintain a special config file that isn't part of your normal CI scripts or Dockerfiles. It’s just one more thing to keep track of.
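To make that concrete, here is one way the three kinds of instructions listed above could be laid out. This is a minimal Python sketch, assuming a Python project: AGENTS.md is free-form Markdown, so the section headings and the commands used here (`pip install -r requirements.txt`, `pytest -q`) are hypothetical placeholders, not a schema Codex requires.

```python
# Hypothetical project details: replace these with your own commands and rules.
SETUP_COMMANDS = ["python -m venv .venv", "pip install -r requirements.txt"]
TEST_COMMAND = "pytest -q"
STYLE_RULES = ["Follow PEP 8.", "Add or update tests for every behaviour change."]


def render_agents_md(setup: list[str], test: str, style: list[str]) -> str:
    """Build the text of an AGENTS.md file covering setup, tests, and style."""
    lines = ["# AGENTS.md", "", "## Environment setup", ""]
    lines += [f"- `{cmd}`" for cmd in setup]
    lines += ["", "## Running tests", "", f"- `{test}`", "", "## Style and practices", ""]
    lines += [f"- {rule}" for rule in style]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print to stdout so the caller decides where the file goes.
    print(render_agents_md(SETUP_COMMANDS, TEST_COMMAND, STYLE_RULES), end="")
```

Saved under a hypothetical name such as `render_agents_md.py`, it could be run as `python render_agents_md.py > AGENTS.md`. Keeping the commands in one Python list is just one way to share them with a CI script and limit the duplication described above; nothing in Codex depends on it.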


Key features and pricing

Codex is trying to be more than just a coding sidekick; it's meant to be an actual software engineering agent. Its features are built to handle jobs that take multiple steps and a bit of back-and-forth.

What it can do

- Run tasks on its own: You can give it a high-level goal (like, "Fix the login bug") and it will work on it by itself for up to 30 minutes.

- Answer questions about your code: You can ask it complicated questions about your architecture, and it will dig through the files to find an answer, sometimes even spitting out diagrams.

- Test and debug: It can run your tests, see what fails, and try to fix the code until everything passes.

- Refactor and document: It's pretty handy for cleanup tasks like splitting up big files, improving documentation, or adding tests to get your coverage up.
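The "test and debug" behaviour described above boils down to a bounded retry loop. Codex's internals are not public, so this is only a minimal Python sketch of that general pattern, not OpenAI's implementation: `run_tests` and `propose_fix` are hypothetical callbacks standing in for a test runner and the agent's edit step, and the 30-minute default simply mirrors the task limit mentioned above.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SuiteResult:
    """Outcome of one test run: overall status plus the names of failing tests."""
    passed: bool
    failures: list[str] = field(default_factory=list)


def fix_until_green(
    run_tests: Callable[[], SuiteResult],
    propose_fix: Callable[[list[str]], None],
    max_fixes: int = 5,
    time_budget_s: float = 30 * 60,
) -> bool:
    """Run the suite, hand failures to a fixer, and repeat until green or out of budget.

    Returns True if the suite passes, False if the fix or time budget runs out first.
    """
    deadline = time.monotonic() + time_budget_s
    fixes_used = 0
    while True:
        result = run_tests()
        if result.passed:
            return True
        if fixes_used >= max_fixes or time.monotonic() >= deadline:
            return False
        # Hypothetical agent step: edit the code based on what failed.
        propose_fix(result.failures)
        fixes_used += 1


if __name__ == "__main__":
    # Toy stand-ins: two "bugs", and each fix removes one.
    bugs = {"remaining": 2}

    def fake_tests() -> SuiteResult:
        failing = [f"test_{i}" for i in range(bugs["remaining"])]
        return SuiteResult(passed=not failing, failures=failing)

    def fake_fix(failures: list[str]) -> None:
        bugs["remaining"] -= 1

    print(fix_until_green(fake_tests, fake_fix))
```

The fix cap matters as much as the time budget: without it, an agent that keeps proposing ineffective fixes would simply spin until the deadline.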

The pricing breakdown

You can't just buy OpenAI Codex by itself. It comes bundled with the paid ChatGPT subscriptions, so you're looking at a monthly per-user fee.

Here's the official pricing for the ChatGPT plans you'll need:

| Plan | Price | Key features for Codex access |
|---|---|---|
| Plus | $20/month | Limited access to Codex agent features. |
| Pro | $200/month | Full access to Codex, including unlimited messages and expanded agent capabilities. |
| Business | Custom | Everything in Pro, plus company knowledge connectors (GitHub, Slack, etc.) and admin controls. |
| Enterprise | Custom | Everything in Business, plus enterprise-grade security, priority support, and custom data retention. |
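Because access is billed per user per month, budgeting for a team is straightforward multiplication. A trivial Python sketch; the 10-seat team is a hypothetical example, and prices should be checked against OpenAI's current plan page since they change.

```python
def team_cost(seats: int, price_per_seat: float, months: int = 1) -> float:
    """Total subscription cost for a team billed per user per month."""
    if seats < 0 or months < 0:
        raise ValueError("seats and months must be non-negative")
    return seats * price_per_seat * months


if __name__ == "__main__":
    # Hypothetical 10-person team on the $20/month Plus plan.
    print(team_cost(10, 20))      # 200 per month
    print(team_cost(10, 20, 12))  # 2400 per year
```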

Common challenges and limitations

While an AI developer sounds amazing, using OpenAI Codex with GitHub today comes with some real frustrations that early users have been quick to point out.

1. The 'GitHub or nothing' limitation

This is probably the biggest drawback. The integration is exclusive to GitHub. If your team uses Azure DevOps, Bitbucket, or GitLab, you're out of luck. Codex has no native support for these platforms, which locks out a huge chunk of development teams, especially in larger companies. Forcing everyone onto a single vendor is a tough sell for teams that have their tools set up just the way they like.

That's a stark contrast with AI agents built for other parts of the business. Take customer support AI, for example. A tool like eesel AI is built to be flexible because company knowledge is scattered everywhere. It connects to dozens of apps right away, from help desks like Zendesk and Jira Service Management to wikis like Confluence and Google Docs.

2. Messy pull requests and branches

The way Codex handles branches and PRs can be, as one user put it, a "nightmare." Here are some of the main complaints:

- Weird branch names: Codex often creates branches with random names like "d92ewq-codex/...". This makes it a real pain to figure out which branch belongs to which task.

- Unpredictable updates: If you ask for a follow-up, it’s a toss-up whether it will update the existing branch or just create a new one. You can end up with a tangled mess of pull requests.

- Vague PR descriptions: The pull requests it generates often lack good descriptions or context, which means developers have to waste time digging in to understand what the AI actually changed.
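Until the naming settles down, a small script can at least show which tasks have ended up split across several branches. A minimal Python sketch, assuming branch names follow the `<prefix>-codex/<slug>` shape suggested by the "d92ewq-codex/..." example above; that shape, and the idea that the part after `codex/` identifies the task, are assumptions rather than documented behaviour.

```python
import re
from collections import defaultdict

# Assumption: Codex branches look like "<random-prefix>-codex/<task-slug>".
# The real naming scheme may differ; adjust the pattern to what you see.
CODEX_BRANCH = re.compile(r"^(?P<prefix>[a-z0-9]+)-codex/(?P<slug>.+)$")


def group_codex_branches(branch_names: list[str]) -> dict[str, list[str]]:
    """Group Codex-style branches by task slug; non-matching names are ignored."""
    groups: dict[str, list[str]] = defaultdict(list)
    for raw in branch_names:
        # Tolerate the leading "* " that `git branch` puts on the current branch.
        name = raw.strip().lstrip("* ").strip()
        match = CODEX_BRANCH.match(name)
        if match:
            groups[match.group("slug")].append(name)
    return dict(groups)


def duplicated_tasks(branch_names: list[str]) -> dict[str, list[str]]:
    """Return only the slugs that appear on more than one branch."""
    return {
        slug: names
        for slug, names in group_codex_branches(branch_names).items()
        if len(names) > 1
    }


if __name__ == "__main__":
    # Hypothetical branch list for illustration.
    sample = ["main", "a1b2c3-codex/fix-login", "* d92ewq-codex/fix-login", "zz9-codex/add-tests"]
    for slug, names in duplicated_tasks(sample).items():
        print(slug, names)
```

In a real repository the list could come from `git branch --format='%(refname:short)'`, one branch per line.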

3. The 'lazy' performance

Another common gripe is that Codex can be "lazy." You can give it a complex task, and it will start off strong, only to quit halfway through or complete just a tiny piece of the work. It might ignore specific instructions or forget to run tests like you asked, forcing you to constantly check in and give it more prompts to get the job done. It’s not quite the "set it and forget it" assistant you might be hoping for.

4. Awkward environment setup

Relying on a special "AGENTS.md" file for setup is another sore spot. Most teams have already put a lot of effort into defining their environments with standard tools like Dockerfiles or CI/CD scripts in GitHub Actions or Jenkins. Having to copy that logic into a separate, proprietary file is just extra work and one more thing that can break.

This kind of setup complexity is exactly what modern AI platforms in other fields try to avoid. In customer support automation, for example, a tool like eesel AI is all about getting you live in minutes. With one-click connections and a neat simulation mode, you can test your AI on past support tickets without any risk before you let it talk to actual customers. It’s all about making the rollout smooth and stress-free.

The verdict on OpenAI Codex integrations with GitHub

OpenAI Codex integrations with GitHub give us a pretty cool glimpse into where software development is headed. Having an AI agent that can understand, write, and test code is a big deal. For smaller, well-defined tasks, like writing unit tests, refactoring a function, or fixing a simple bug, Codex can seriously speed things up.

But the platform is still young, and the day-to-day headaches are real. The fact that it only works with GitHub is a major limitation, and the confusing workflows and inconsistent performance mean it's not the seamless AI teammate you might have in mind. It takes some patience and a lot of careful prompting to get it right.

As the AI models get smarter and the tools get better, Codex will probably become a standard part of every developer's workflow. For now, it’s a powerful but flawed tool that makes the most sense for teams that live and breathe GitHub.

Your business knowledge deserves a smarter AI

Codex is busy with code, but what about all the other knowledge locked up in your company? Your support tickets, internal wikis, and help center articles are packed with information. That's the stuff an AI can use to resolve customer issues, answer employee questions, and automate all those repetitive support tasks.

That’s exactly what eesel AI is built for. It connects to the tools you already use (Zendesk, Slack, Confluence, and over 100 more) to provide accurate, automated support. We focused on making it simple, so you can go live in minutes and use our simulation mode to see your return on investment before you even start.

Learn how eesel AI can automate your support workflows today.

Frequently asked questions

What are OpenAI Codex integrations with GitHub?

OpenAI Codex integrations with GitHub allow an AI agent, built on OpenAI's "codex-1" model, to interact directly with your GitHub repositories. It can understand code, write new features, fix bugs, answer questions about your codebase, and even draft pull requests.

How do you set up OpenAI Codex with GitHub?

Setup can be done via the ChatGPT interface by authorizing a "ChatGPT connector" app to access your GitHub repos, or through a command-line interface (CLI) that works with your local files. An optional "AGENTS.md" file can also be used to define environment specifics.

How much does OpenAI Codex cost?

Access to Codex is typically bundled with paid ChatGPT subscriptions (Plus, Pro, Business, Enterprise). For CLI users, the "codex-mini-latest" model has its own API pricing based on input and output tokens, with some free credits provided on Plus/Pro plans.

What is the biggest limitation of OpenAI Codex integrations with GitHub?

The most significant limitation is that OpenAI Codex integrations with GitHub are exclusive to GitHub. Teams using other platforms like Azure DevOps, Bitbucket, or GitLab currently have no native support for integrating Codex.

What problems do developers run into with Codex branches and pull requests?

Developers often face issues like random or unclear branch names, unpredictable updates (where new branches are created instead of updating existing ones), and vague pull request descriptions. This can lead to a messy Git history and extra review effort.

Is Codex really "lazy" with complex tasks?

Yes, some users describe Codex as "lazy," meaning it might start a complex task but stop halfway, complete only a small portion, or ignore specific instructions like running tests. This often requires constant oversight and additional prompting to ensure task completion.


Article by Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.