Choosing the right Large Language Model (LLM) API for your project feels like a huge commitment, because, well, it is. The API you decide to build on will directly affect your product's performance, your monthly bill, and what your users think of it.

Right now, the two names on everyone's mind are OpenAI and Anthropic. They're both at the top of their game, but they approach AI from pretty different angles, each with its own technical strengths and philosophy.

This guide is here to help you sort through the options. We’ll give you a straightforward comparison of the OpenAI API vs. the Anthropic API, covering everything from developer experience to performance and, of course, pricing. We'll also explore why, for a lot of companies (especially in customer service), the question is shifting from "which API should we build with?" to "what's the fastest way to get this working?"

What is the OpenAI API?

OpenAI is the company that really got the whole generative AI party started. They're famous for creating powerful and versatile models that have captured everyone’s attention.

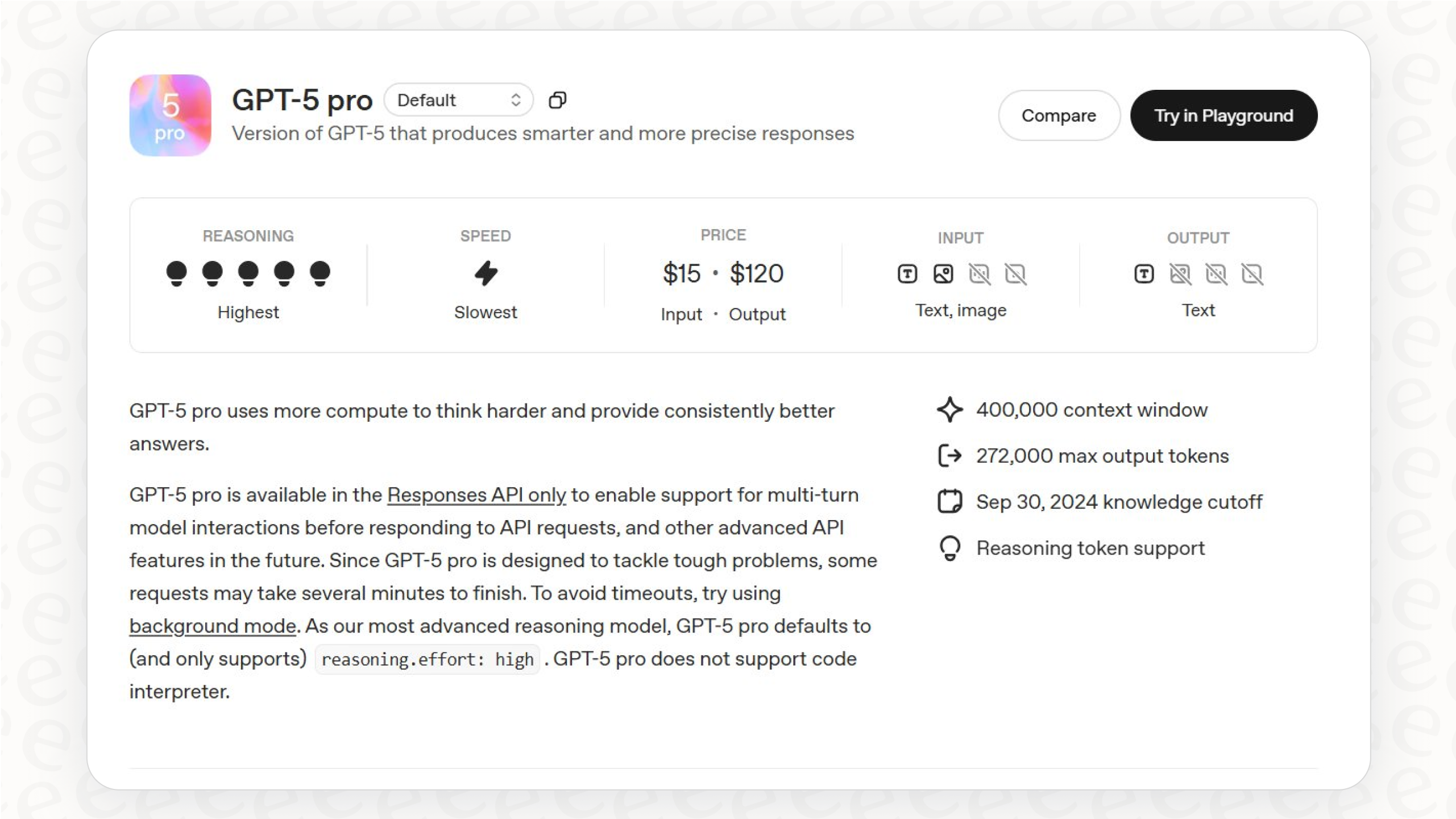

Their top models, the GPT series (including the latest, GPT-4o), are known for being great all-rounders. They're solid at general reasoning and creative writing, and they're getting better at handling text, images, and audio together. The OpenAI API is designed to be accessible to as many developers as possible, with tools for building anything from simple content generators to complex agents that can take real actions. For many, it's the default choice: a flexible, powerful, and road-tested foundation.

What is the Anthropic API?

Anthropic was founded in 2021 by a group of former OpenAI researchers who wanted to focus heavily on AI safety. Their mission is to build AI systems that are reliable, predictable, and easier to steer, which is a slightly different goal from simply chasing raw power.

Their main model family is Claude, with Claude 3.5 Sonnet being the current star. It’s known for its large context window (200K tokens, enough to take in very long documents in a single request), strong performance on business-focused tasks, and safety built in from the start. Anthropic trains its models with a method it calls "Constitutional AI," in which the model learns to follow a written set of ethical principles. This makes the Anthropic API a solid pick for businesses in regulated fields, or anywhere you absolutely need the AI to behave predictably.

A detailed OpenAI API vs Anthropic API comparison

While both platforms have top-tier models, the actual experience of building with their APIs can be completely different. Let's get into the details that you'll actually notice as a developer or team lead.

Developer experience and API features

Spend a little time on developer forums, and you'll see a clear picture: both APIs are powerful, but they have quirks that can make or break a project. The small details in their design really matter.

How they structure messages

- OpenAI: The API is known for being very flexible. You can add system messages anywhere in a conversation and even send back-to-back messages from the same role (like two user messages in a row). This might sound small, but it's a big help for simulating complex chats or building workflows where the AI needs to use several tools before giving its final answer.

- Anthropic: Things are much stricter here. The API enforces an alternating "user" -> "assistant" -> "user" pattern and accepts a single system prompt, passed as a separate top-level parameter rather than as a message. That makes the API predictable, but it can be a real pain for more dynamic apps, like one that needs to inject new context partway through a conversation.
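To make the structural difference concrete, here is a minimal sketch, assuming you keep conversation history in OpenAI's chat format (a list of `{"role", "content"}` dicts with plain-text content) and want to send it to Anthropic. It collects every system message into the single top-level `system` field and merges back-to-back messages from the same role so the turns alternate. The helper name `to_anthropic_payload` is hypothetical, and the sketch only builds the request body; it does not call either API.

```python
from typing import Any


def to_anthropic_payload(
    messages: list[dict[str, str]], model: str, max_tokens: int = 1024
) -> dict[str, Any]:
    """Convert an OpenAI-style message list into an Anthropic Messages request body.

    Hypothetical helper: assumes every message has plain string content.
    """
    system_parts: list[str] = []
    turns: list[dict[str, str]] = []

    for msg in messages:
        role, content = msg["role"], msg["content"]
        if role == "system":
            # Anthropic takes one top-level system prompt, so every system
            # message, wherever it appeared, is folded into that one field.
            system_parts.append(content)
        elif turns and turns[-1]["role"] == role:
            # Merge consecutive same-role messages so user/assistant alternate.
            turns[-1]["content"] += "\n\n" + content
        else:
            # Copy into a new dict so the caller's history is never mutated.
            turns.append({"role": role, "content": content})

    payload: dict[str, Any] = {"model": model, "max_tokens": max_tokens, "messages": turns}
    if system_parts:
        payload["system"] = "\n\n".join(system_parts)
    return payload
```

Note the trade-off the conversion forces: a system message that arrived mid-conversation (say, "the customer is on the premium plan") ends up at the top of the prompt, so the model loses the information about when that context was introduced.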

How they handle tools (function calling)

- OpenAI: This is a real strength for OpenAI. Its function calling is solid and supports calling multiple tools at once, so the model can decide it needs several things, like looking up an order and checking a help article, and request them all in one response. That's more efficient and makes the agent feel less robotic.

- Anthropic: Tool use feels clunkier and more one-at-a-time. Developers report that getting the model to use multiple tools means guiding it through a rigid back-and-forth exchange, which adds latency, costs more tokens, and complicates development. Enabling tools also carries a surprising amount of token overhead on its own.
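As a rough illustration, here is a sketch using plain dicts that mirror the JSON each API exchanges (the official SDKs return typed objects instead). It shows the same tool described in each provider's schema format, then executes a batch of OpenAI tool calls returned in one response, producing one `tool` message per call keyed by its `tool_call_id`. The two tool functions and the `TOOLS` registry are hypothetical stand-ins for real order and help-center lookups.

```python
import json
from typing import Any, Callable


# Hypothetical support tools standing in for real backend lookups.
def look_up_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"


def search_help_center(query: str) -> str:
    return f"Top article for '{query}': Shipping times"


TOOLS: dict[str, Callable[..., str]] = {
    "look_up_order": look_up_order,
    "search_help_center": search_help_center,
}


def openai_tool(name: str, description: str, schema: dict[str, Any]) -> dict[str, Any]:
    """Tool definition in OpenAI's Chat Completions shape (JSON Schema under `parameters`)."""
    return {
        "type": "function",
        "function": {"name": name, "description": description, "parameters": schema},
    }


def anthropic_tool(name: str, description: str, schema: dict[str, Any]) -> dict[str, Any]:
    """The same tool in Anthropic's Messages shape (JSON Schema under `input_schema`)."""
    return {"name": name, "description": description, "input_schema": schema}


def run_openai_tool_calls(tool_calls: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Run every tool call from a single assistant turn; return one `tool` message per call."""
    results = []
    for call in tool_calls:
        fn = TOOLS[call["function"]["name"]]
        # OpenAI delivers the arguments as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"])
        results.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return results
```

Because all the results go back together in the next request, the model can write its final answer after a single extra round trip rather than one round trip per tool.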

Working with images and audio

Both companies are making big moves in vision, letting their models understand images. That said, OpenAI's ecosystem feels a bit more complete right now: with Whisper for speech-to-text and DALL·E for image generation under one roof, it offers a broader multimedia toolkit out of the box.
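Both APIs accept images inside an ordinary user message, but they package them differently. The sketch below builds the message for each provider from raw image bytes, assuming a base64 upload rather than a hosted image URL; the helper names are hypothetical and nothing is sent over the network.

```python
import base64
from typing import Any


def _b64(image_bytes: bytes) -> str:
    """Base64-encode raw bytes into an ASCII string."""
    return base64.b64encode(image_bytes).decode("ascii")


def openai_image_message(image_bytes: bytes, media_type: str, prompt: str) -> dict[str, Any]:
    """OpenAI Chat Completions user turn: the image travels as a base64 data URL."""
    data_url = f"data:{media_type};base64,{_b64(image_bytes)}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


def anthropic_image_message(image_bytes: bytes, media_type: str, prompt: str) -> dict[str, Any]:
    """Anthropic Messages user turn: the image is a `source` block with an explicit media type."""
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {"type": "base64", "media_type": media_type, "data": _b64(image_bytes)},
            },
            {"type": "text", "text": prompt},
        ],
    }
```

The image comes first in the Anthropic version because Anthropic's documentation recommends placing images before the accompanying text.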

The developer's takeaway

Building directly on either API means signing up to deal with its specific quirks. For something like customer service, that can feel like rebuilding what everyone else has already built. A platform like eesel AI handles that tricky work for you: instead of fighting with message structures or tool-use limits, you connect your knowledge docs, describe what you want the agent to do in plain English, and let the platform take care of the messy implementation details.

Performance, use cases, and safety

The "best" model really just depends on what you're trying to do.

What they're good for

- OpenAI: Its flexibility and creative range make it a great fit for broad, consumer-facing apps, content creation, and anything that needs top-notch general intelligence. If you're building something new and want to test ideas quickly, OpenAI's API is often the faster way to get there.

- Anthropic: Claude does especially well in business contexts. It's well suited to tasks that involve digging through long documents, such as reviewing legal contracts, summarizing financial reports, or handling detailed customer support tickets where you can't afford to be wrong.

Their approach to ethics and control

- OpenAI: The company leans heavily on a technique called Reinforcement Learning from Human Feedback (RLHF). Human reviewers rate the model's answers, and those ratings teach it over time to be more helpful and less harmful. It works, but the model can sometimes pick up the subtle biases of its human raters.

- Anthropic: "Constitutional AI" is what sets Anthropic apart. The model is trained with a written set of core principles (its "constitution") and learns to critique and revise its own answers against them. The idea is to create a model that's inherently more predictable and less likely to say something off-the-wall, without needing constant human hand-holding.

For businesses, it often comes down to this: do you need the raw, flexible power of OpenAI, or the steady, predictable safety of Anthropic?

Platforms like eesel AI offer another path. By using powerful models under the hood, eesel AI gives you the best of both. You get the smarts of a top-tier model but with complete control through a simple interface. You can set a custom AI personality, limit its knowledge to only your approved documents, and use a powerful simulation mode to make sure it behaves exactly as you expect before a customer ever talks to it.

Pricing and business models

Both OpenAI and Anthropic have a similar pricing setup. They offer monthly subscriptions for their chatbots (ChatGPT Plus and Claude Pro) and then a pay-as-you-go model for their APIs.

This API pricing is based on "tokens," which are small chunks of text (a token is about three-quarters of a word). You pay for the tokens you send in (input) and the tokens you get back (output). That's fine for getting started, but it can make your costs hard to predict: if your support team gets slammed with tickets one month, your API bill can spike without warning.
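To make the forecasting problem concrete, here is a rough monthly-cost sketch. It assumes GPT-4o's late-2024 list prices ($2.50 per 1M input tokens, $10.00 per 1M output tokens) and a hypothetical workload: three model calls per ticket, each sending about 3,000 words of conversation and help-center context and getting about 225 words back. The word-to-token conversion uses the three-quarters-of-a-word rule of thumb, so treat the output as an estimate; the function names are hypothetical.

```python
import math


def estimate_tokens(words: int) -> int:
    """Rough token count, using the rule of thumb that a token is ~3/4 of a word."""
    return math.ceil(words * 4 / 3)


def monthly_cost(
    tickets: int,
    calls_per_ticket: int,
    input_tokens_per_call: int,
    output_tokens_per_call: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> float:
    """Forecast a month's API spend in USD; prices are per 1M tokens."""
    cost_per_call = (
        input_tokens_per_call * input_price_per_m
        + output_tokens_per_call * output_price_per_m
    ) / 1_000_000
    # Spend scales linearly with volume: every extra ticket adds the same cost.
    return tickets * calls_per_ticket * cost_per_call


if __name__ == "__main__":
    # Hypothetical workload; prices are GPT-4o late-2024 list rates.
    input_tokens = estimate_tokens(3_000)
    output_tokens = estimate_tokens(225)
    for tickets in (5_000, 10_000, 20_000):
        cost = monthly_cost(tickets, 3, input_tokens, output_tokens, 2.50, 10.00)
        print(f"{tickets:,} tickets/month -> ${cost:,.2f}")
```

Under these assumptions the script prints about $195, $390, and $780 for 5,000, 10,000, and 20,000 tickets. The bill tracks ticket volume one-for-one, which is exactly what makes a busy month hard to budget for.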

Here’s a quick glance at how their API pricing stacks up for some of their popular models.

| Model | Provider | Input price (per 1M tokens) | Output price (per 1M tokens) |
|---|---|---|---|
| GPT-4o | OpenAI | $2.50 | $10.00 |
| Claude 3.5 Sonnet | Anthropic | $3.00 | $15.00 |
| Claude 3 Opus | Anthropic | $15.00 | $75.00 |
| Claude 3 Haiku | Anthropic | $0.25 | $1.25 |

Pricing is based on public info from late 2024 and can change.
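To see what these rates mean for a single call, here is a minimal cost calculator, a sketch assuming you already know a request's input and output token counts. The prices are the late-2024 figures from the table above; the dictionary keys are informal labels rather than official API model IDs, and the `request_cost` helper is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    """List price in USD per 1M tokens."""

    input_per_m: float
    output_per_m: float


# Late-2024 list prices from the table; check each provider's pricing page for current rates.
PRICES: dict[str, Price] = {
    "GPT-4o": Price(2.50, 10.00),
    "Claude 3.5 Sonnet": Price(3.00, 15.00),
    "Claude 3 Opus": Price(15.00, 75.00),
    "Claude 3 Haiku": Price(0.25, 1.25),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one call: input and output tokens are billed at different rates."""
    price = PRICES[model]
    return (input_tokens * price.input_per_m + output_tokens * price.output_per_m) / 1_000_000


if __name__ == "__main__":
    # Example call shape: 2,000 tokens of prompt and context in, 500 tokens of answer out.
    for name in PRICES:
        print(f"{name:<18} ${request_cost(name, 2_000, 500):.4f}")
```

For that call shape, GPT-4o comes to $0.0100 and Claude 3.5 Sonnet to $0.0135, while Claude 3 Opus costs five times as much as Sonnet and Claude 3 Haiku about a twelfth. Note that on GPT-4o the 500 output tokens cost as much as the 2,000 input tokens, so long answers move the bill faster than long prompts.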

The biggest headache with token-based pricing is trying to budget for it. How do you forecast your costs when they're tied directly to how many customers contact you? This is where a different approach can make a lot more sense. In contrast, eesel AI's pricing is clear and predictable. Plans are based on a fixed number of AI conversations per month, with no extra fees per ticket resolved. This lets you scale your support automation without worrying about a surprise bill at the end of the month.

The solution: Thinking beyond the API debate

So, here's the real question: if your goal is to automate customer support, does it really make sense to have your engineers spend months building and maintaining a custom solution on a raw API? For most businesses, the answer is probably no.

An AI integration platform is the smarter, faster way to go.

- Get going in minutes, not months: With a tool like eesel AI, you can connect your help desk (like Zendesk or Freshdesk) and all your knowledge sources (Confluence, Google Docs, or even past support tickets) in just a few clicks. It’s a self-serve platform, worlds away from the long development slog of building from scratch.

- Bring all your knowledge together: Instead of starting with a generic AI, eesel AI instantly learns from your company's actual knowledge. It reads your past tickets, internal wikis, and help center docs to give answers that are accurate, specific to you, and in your brand's voice.

- Test without the risk: This is a huge one. eesel AI's simulation engine lets you test your AI agent on thousands of your past tickets in a safe environment. You get an accurate forecast of how well it will perform before you ever turn it on for real customers, which takes much of the risk out of the equation.

Making the right choice for your goal

When you’re deciding between OpenAI and Anthropic, the right choice really depends on where you’re trying to go.

- Go with the OpenAI API if you need maximum flexibility, the latest multimedia features, and a foundation for building all sorts of general-purpose apps.

- Go with the Anthropic API if you need business-grade reliability, top-notch safety, and a model that's a beast at analyzing long, complex documents.

But for most businesses trying to solve a specific problem like automating customer support, the "build vs. buy" question is way more important than the "OpenAI vs. Anthropic" one. Building from scratch is a long, expensive, and risky path.

Platforms like eesel AI give you a direct route to results. They offer the fastest, most reliable, and most cost-effective way to use powerful AI in a business setting, letting you focus on making your customers happy instead of managing API infrastructure.

Ready to see how easy AI-powered support can be? Get started with eesel AI in minutes and see how it can change your customer service operations.

Frequently asked questions

OpenAI focuses on creating powerful, versatile general-purpose models like GPT-4o, emphasizing broad applicability and flexibility for diverse tasks. Anthropic, with its Claude models, prioritizes AI safety, predictability, and adherence to ethical principles from its "Constitutional AI" training.

OpenAI's API offers more flexibility in message structure and robust multi-tool calling for complex workflows. Anthropic's API is stricter with its "user" -> "assistant" message patterns and its tool use can feel more rigid and token-intensive, often requiring more sequential guidance.

While OpenAI is highly versatile, Anthropic's Claude models particularly excel in business contexts. They are ideal for tasks involving extensive document analysis or detailed customer support where predictability, a large context window, and built-in safety features are crucial.

Both platforms primarily use a token-based, pay-as-you-go API pricing structure, charging for both input and output tokens. This model, while common, can make budgeting challenging as costs directly fluctuate with usage volume, leading to potentially unpredictable monthly bills.

For businesses aiming to automate specific functions like customer support, an integration platform like eesel AI can be a faster, more cost-effective choice. It handles API complexities, integrates various knowledge sources, and offers predictable pricing, allowing you to focus on business outcomes rather than infrastructure.

OpenAI utilizes Reinforcement Learning from Human Feedback (RLHF) to align models with human values, which can sometimes reflect subtle biases from human trainers. Anthropic employs "Constitutional AI," training models with explicit ethical principles to achieve more predictable and inherently safer behavior without constant human intervention.


Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.