The world of AI moves at a dizzying pace. Just when you get your head around one model, another one lands, promising to be faster, smarter, and cheaper. The latest big release, GPT-4o, is no different. It’s OpenAI’s shiny new successor to GPT-4 Turbo, and on paper, the benchmarks look fantastic.

But here’s the real question for anyone actually running a business: how does it hold up in the real world? When you’re building an AI agent for something as important as customer support, specs on a webpage don’t tell you the full story. This guide is a practical, no-fluff comparison of GPT-4 Turbo vs GPT-4o. We’re going to step past the benchmarks and look at what really matters for your bottom line: performance under pressure, accuracy on tricky tasks, and the true cost of keeping your AI running.

GPT-4 Turbo vs GPT-4o: What are these models?

Before we get into the nitty-gritty, let's do a quick roll call to see what each of these models brings to the table.

What is GPT-4 Turbo?

GPT-4 Turbo was rolled out as a major upgrade to the original GPT-4. You can think of it as the refined, more efficient version of the family. It was built to be quicker, less expensive, and capable of handling a lot more context with its huge 128k token window. Its knowledge is current up to April 203, and it quickly became the favorite for developers building applications that needed GPT-4’s brainpower without its original, hefty price tag.

What is GPT-4o?

GPT-4o, where the "o" stands for "omni," is OpenAI's newest flagship model. This isn’t just a minor tune-up; it’s a whole new engine. Its biggest selling point is its native multimodality, which is a fancy way of saying it can understand text, audio, and images all together in a single model. This makes interactions feel a lot more natural and human. On top of that, it serves up answers much faster and costs 50% less than GPT-4 Turbo, positioning it as the new gold standard for real-time, interactive AI.

Performance and speed: What benchmarks don't tell you

On paper, the speed debate seems pretty clear-cut. OpenAI claims GPT-4o is twice as fast as GPT-4 Turbo, and early tests seem to agree. It has a much higher throughput (the number of words it can generate per second), so for a business, faster responses should mean happier customers. A clear win, right?

Well, not so fast. The trouble with these numbers is that they aren't set in stone. Real-world performance for pay-as-you-go models can be a bit of a rollercoaster. As some great analysis from firms like New Relic has shown, when a new model gets popular, its performance can start to dip under the heavy load. The lightning-fast model you tested last week might feel sluggish this week.

This creates a massive headache for any business that relies on consistent, predictable response times for their customer-facing AI. A slow bot can be even more frustrating than having no bot at all, leading to a genuinely bad customer experience. This is where just grabbing the "latest and greatest" model can come back to bite you. You need a way to test and confirm performance before you let it loose.

This is exactly why simulation is so important. Instead of launching a new model and crossing your fingers, a platform like eesel AI lets you run any AI setup in a powerful simulation mode. You can test it against thousands of your own past support tickets to see precisely how it will perform, what its resolution rate will be, and how quickly it responds. This lets you completely de-risk the rollout of a new model before it ever interacts with a live customer.

A head-to-head performance comparison

Here's a quick look at how the two models stack up on key performance metrics.

| Feature | GPT-4 Turbo | GPT-4o ("Omni") |

|---|---|---|

| Throughput | ~20 tokens/second | ~109 tokens/second (at launch) |

| Latency | Higher | Lower |

| Real-World Consistency | More predictable (especially with provisioned versions) | Variable; can slow down under heavy load |

| Multimodality | Text, images | Native text, images, audio, video |

Accuracy and reasoning for business tasks

Speed is just one piece of the puzzle. What good is a fast answer if it’s wrong or completely misses the point of your instructions? This is where the GPT-4 Turbo vs GPT-4o debate gets really interesting.

If you spend some time on community forums like Reddit or OpenAI's own developer boards, you'll spot a pattern: many developers still lean on GPT-4 Turbo for complex jobs. They find it’s more reliable for things like coding, solving logic puzzles, and following detailed, multi-step instructions.

In contrast, GPT-4o is sometimes described as being a bit more "superficial." While it's incredibly fast and conversational, some users have found it can get stuck in loops, ignore parts of a detailed prompt, or lose track of the conversation's context. It’s a classic trade-off: do you need breakneck speed or thoughtful depth?

For a support team, this has very real consequences. GPT-4o might be perfect for burning through simple, repetitive Tier 1 questions. But for walking a customer through a complicated troubleshooting process or handling a sensitive billing question, the slightly slower but more robust reasoning of GPT-4 Turbo might still be the better bet.

How well you can "steer" a model and guide its behavior is a huge factor here. That’s why having a strong control layer between you and the model is so important. For example, with eesel AI's customizable prompt editor and workflow engine, you get full control over your AI. You can fine-tune its personality, set strict rules on what it can and can't talk about, and build custom actions it can take. This gives you the guardrails to guide any model, making sure it provides accurate, on-brand answers and reliably escalates tricky issues when needed.

When to use each model

Here’s a simple breakdown to help you decide:

-

Choose GPT-4 Turbo for:

- Complex, multi-step problem-solving, like technical support or financial analysis.

- Tasks where you need the AI to follow detailed instructions to the letter.

- Situations where deep logical reasoning is more important than getting an instant reply.

-

Choose GPT-4o for:

- High-volume, real-time chats, like a frontline website chatbot.

- Multimodal tasks, such as analyzing a customer’s screenshot of an error message.

- Applications where speed and cost are your top priorities.

Cost and capabilities: A look beyond the sticker price

Let's talk money. On the surface, GPT-4o is the clear winner, coming in at 50% cheaper for API usage than GPT-4 Turbo. For any business working at scale, that kind of saving is hard to ignore.

But there’s a hidden cost you need to keep an eye on: token consumption. As the New Relic analysis also noted, it's pretty common for newer, more conversational models to be a bit more "chatty." They might use more words (and therefore, more tokens) to say the same thing. So, a model that's 50% cheaper per token but uses 20% more tokens for every answer won't actually cut your bill in half. This can lead to some unpredictable operational costs that are tough to budget for, especially if your support volume goes up and down.

Of course, GPT-4o's killer feature is its native multimodality. This is a huge deal for support teams. A customer can send a picture of a broken part, a screenshot of a software bug, or even a short video, and the AI can understand it directly. That's an incredibly powerful way to solve problems faster, and it's something GPT-4 Turbo just can't do on its own.

Unpredictable costs are one of the biggest hurdles when you're trying to scale up support automation. That’s why a tool like eesel AI uses transparent and predictable pricing. Our plans are based on a set number of monthly AI interactions, with no hidden fees per resolution. This approach protects you from surprise bills if a model update suddenly makes your AI agent a lot more talkative, letting you budget with confidence and scale without fear.

Official pricing

As promised, here is the official API pricing directly from OpenAI. Just remember that these prices can change, so it's always smart to check the source for the latest numbers.

| Model | Input Cost | Output Cost |

|---|---|---|

| GPT-4 Turbo | $10.00 | $30.00 |

| GPT-4o | $5.00 | $15.00 |

Note: Prices are per 1 million tokens and are subject to change. Always check the official OpenAI pricing page for the most current information.

GPT-4 Turbo vs GPT-4o: Which model should you choose?

So, after all that, who wins the GPT-4 Turbo vs GPT-4o matchup? The honest answer is: it depends. There's no single "better" model for everyone. The right choice comes down to your specific needs.

GPT-4o is the undisputed champion for speed, cost, and any task that involves images, audio, or video. For high-volume, real-time chat, it's the new king. However, GPT-4 Turbo is still a heavyweight contender for complex, high-stakes jobs where deep reasoning and reliability are what matter most.

The biggest takeaway here isn't about picking one model over the other. It's about recognizing that building effective, dependable AI for your business requires more than just plugging into the latest API. It requires a platform that lets you test, control, and deploy these powerful tools with confidence.

The smarter way to deploy AI support

Figuring out the right balance between speed, cost, and accuracy is the central challenge of building a great AI support agent. You need a solution that fits into your existing tools, learns from your company's knowledge, and gives you the power to fine-tune the entire customer experience.

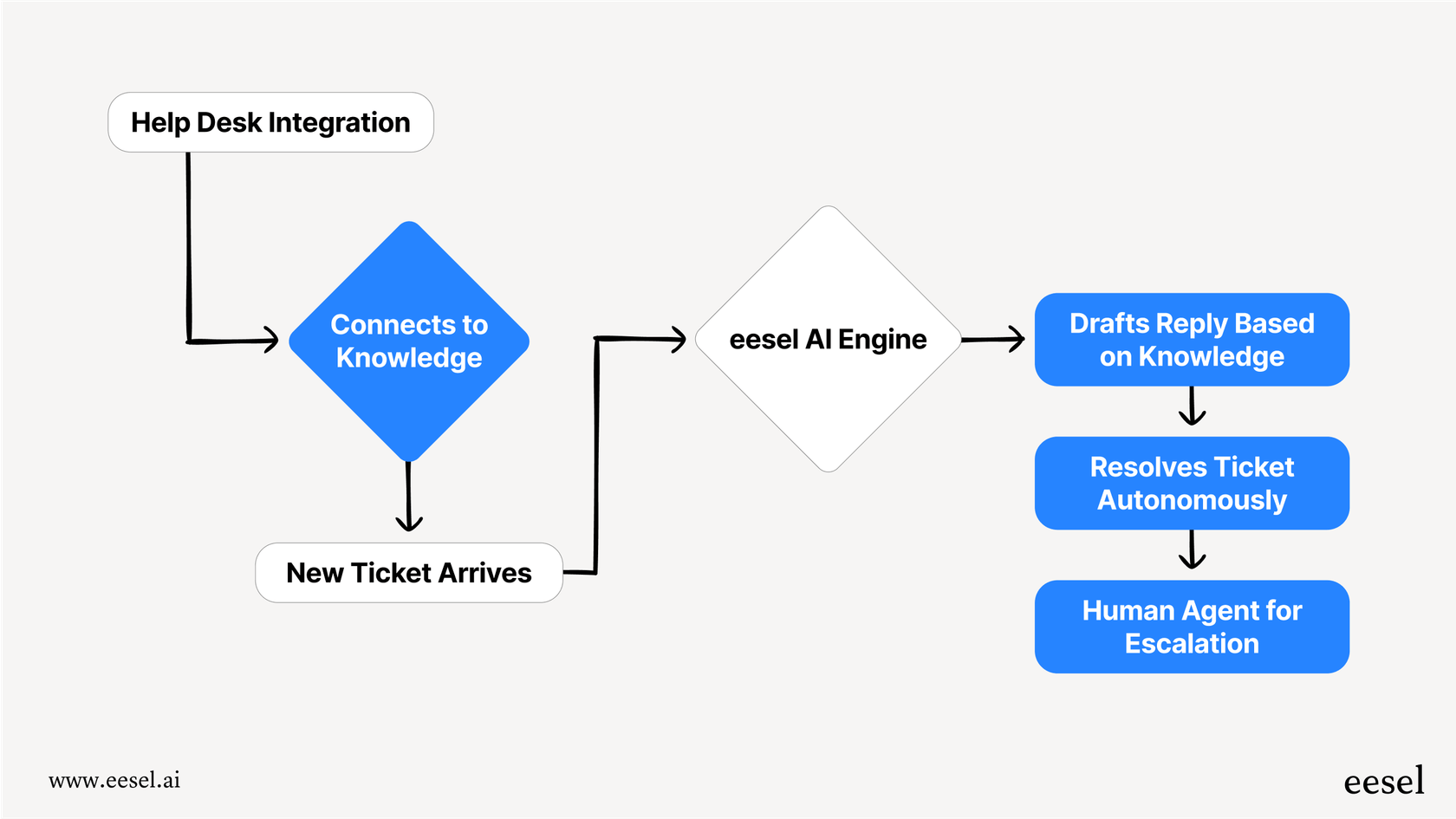

eesel AI is an AI platform designed to do just that. It plugs directly into your helpdesk (like Zendesk, Freshdesk, or Intercom) and connects to all your knowledge sources to automate support right out of the box.

With powerful features like risk-free simulation, a fully customizable workflow engine, and predictable pricing, eesel lets you harness the best of OpenAI's models without the operational headaches and technical risks. You can go live in minutes, not months, and finally build an AI support experience you can actually be proud of.

Frequently asked questions

The main distinction is that GPT-4o is a newer, natively multimodal model capable of understanding text, audio, and images together, offering faster responses and lower API costs. GPT-4 Turbo is a highly refined text and image model, known for its efficiency and larger context window.

GPT-4o is generally faster with higher throughput on paper, but its real-world consistency can vary under heavy load. GPT-4 Turbo, especially provisioned versions, tends to offer more predictable performance, which is crucial for consistent customer experience.

Yes, while GPT-4o has lower per-token API costs, it can sometimes be more "chatty," potentially using more tokens per interaction. This increased token consumption can offset some of the per-token savings, leading to less predictable operational costs.

Many developers still prefer GPT-4 Turbo for complex tasks requiring deep logical reasoning, coding, and precise adherence to multi-step instructions. While GPT-4o is fast and conversational, some users find it can be more superficial or occasionally miss detailed prompt elements.

Choose GPT-4 Turbo for complex problem-solving and tasks needing deep logical reasoning. Opt for GPT-4o for high-volume, real-time chats, and any task involving native multimodal input like analyzing customer screenshots or audio.

GPT-4o offers native multimodality, meaning it can process and understand text, audio, and images directly within a single model. This allows for more natural customer interactions, such as analyzing a customer's screenshot of an error or a short video, which GPT-4 Turbo cannot do on its own.

Share this post

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.