The world of AI is moving at a dizzying pace. Just when you think you’ve got a handle on GPT-4, OpenAI drops GPT-4 Turbo and it feels like you’re back to square one. If you’re trying to figure out which of these models actually makes sense for your business, you’re in good company. It’s a bit like being asked to pick an engine without knowing what kind of car you’re trying to build.

The simple answer is that there’s no single “best” model for everyone. The right choice is a balancing act, a constant trade-off between speed, smarts, and cost. This guide is here to break down the real-world differences between GPT-3.5, GPT-4, and GPT-4 Turbo so you can make a decision that fits what you actually need to do.

But here’s a little spoiler: the model is just the engine. The real power comes from connecting it to your company’s tools and data. After all, the most intelligent AI on the planet is pretty useless if it can’t access your internal knowledge to give a relevant answer.

What are OpenAI's GPT models?

Before we get into the nitty-gritty, let's make sure we're on the same page. "GPT" stands for Generative Pre-trained Transformer. In plain English, GPT models are large language models trained on a massive amount of text, much of it from the internet. This training allows them to understand context, generate human-like text, and power tools like ChatGPT.

What is GPT-3.5?

GPT-3.5 is the reliable workhorse of the group. It’s the engine running the free version of ChatGPT, and it’s built for two things: speed and affordability. For straightforward, high-volume jobs, it’s a great option. It can handle basic Q&As, summarize text, and follow simple instructions without breaking a sweat. Where does it fall short? It can get tripped up by complex reasoning, subtle instructions, or tasks that require a lot of nuance.

What is GPT-4?

GPT-4 is the premium, top-of-the-line model. This is the brain behind ChatGPT Plus, and it was a huge leap forward in terms of intelligence. GPT-4 shines when you throw complex problems at it, from creative writing and multi-step instructions to analyzing data and writing code. Its responses are far more sophisticated and accurate. The catch? All that brainpower comes at a cost. GPT-4 is noticeably slower and more expensive to use than its predecessor.

What is GPT-4 Turbo?

GPT-4 Turbo is the attempt to get the best of both worlds. It was built to offer the high-end intelligence of GPT-4 but with major improvements in speed and cost. It also comes with a couple of massive upgrades: a much larger "context window" (we’ll get to that in a second) and more up-to-date training data. For most businesses today, GPT-4 Turbo is the practical choice, hitting a sweet spot between power, performance, and price.

Speed and performance

In a business context, especially when you have customers waiting, speed isn't just a perk; it's essential. A customer staring at a "thinking" chatbot for 30 seconds is a customer who's about to get frustrated and leave.

Here’s how the models compare on the speed test:

- GPT-3.5: The undisputed champion of speed. It’s quick and responsive.
- GPT-4 Turbo: Much faster than the original GPT-4, making it a solid contender for real-time conversations.
- GPT-4: The slowest of the three. People often say its responses feel more "thoughtful," but in a live chat, that just translates to lag.

But here’s the thing: a fast answer that’s wrong is worse than a slightly slower one that actually helps. When it comes to customer support, you have to balance speed with reliability. A bot that spits out wrong answers instantly just creates more cleanup work for your human agents.

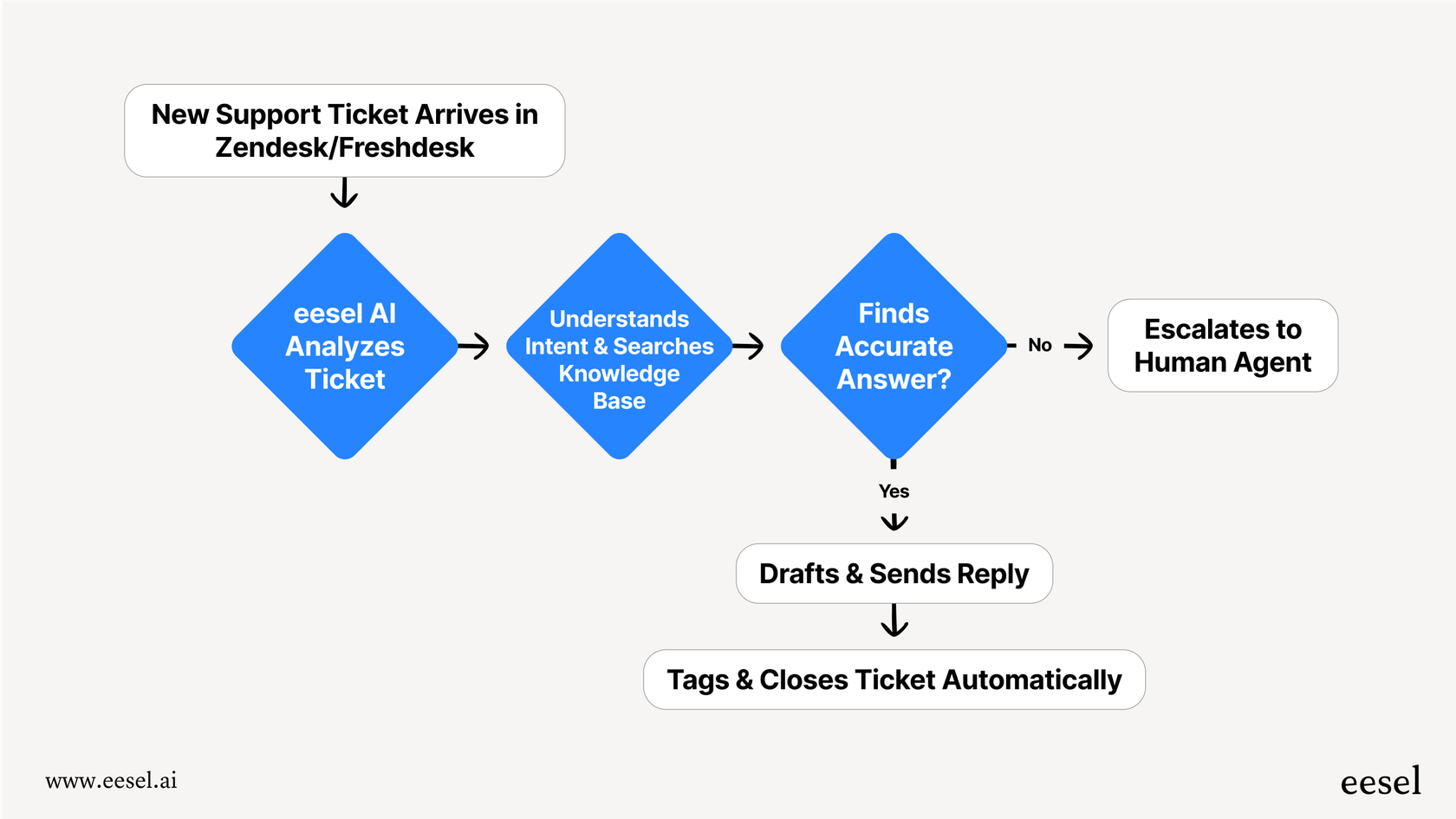

This is where just having a model isn’t enough. For example, an AI support agent from eesel AI does more than just call an API. It uses a smart workflow that can tell when a customer's question is too tricky for a quick automated reply. Instead of guessing and giving a bad answer, it can instantly do something else, like escalate the ticket to the right person. This way, the customer always gets a fast response, whether it’s the perfect answer from the AI or a smooth handoff to a human.

Capabilities and accuracy

Speed is one thing, but what these models can actually do is where things get interesting. For business use, these differences can make or break your decision.

Context window: Understanding the AI's memory

The "context window" is a technical term for a simple idea: how much text, measured in tokens, the model can take into account at once, counting both the conversation so far and the reply it is writing. A bigger context window means the AI can handle longer chats, process whole documents, and recall details from the beginning of the conversation without getting confused.

- GPT-3.5: Up to 16,385 tokens (around 12,000 words).
- GPT-4: 8,192 tokens in the standard model, or up to 32,768 tokens (around 24,000 words) in the extended gpt-4-32k variant.
- GPT-4 Turbo: A massive 128,000 tokens (around 100,000 words, basically a 300-page book).

For customer support, a large context window is a huge advantage. It means a customer can explain a complicated, multi-part problem, and the AI can actually follow along without forgetting the first thing they said. It can also read an entire help article or a long email chain to figure out the best solution.
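To get a feel for these limits, here is a minimal Python sketch that checks which models could hold a given piece of text. It is an illustration under stated assumptions, not a real tokenizer: the function names, the whitespace word count, and the 1,000 tokens reserved for the reply are all hypothetical choices, and the 0.75 words-per-token ratio is the same rule of thumb this article uses elsewhere.

```python
import math

# Upper context window limits in tokens, as quoted in this article.
CONTEXT_WINDOW_TOKENS = {
    "gpt-3.5": 16_385,
    "gpt-4": 32_768,
    "gpt-4-turbo": 128_000,
}

# Rule of thumb (an approximation): 1 token is roughly 0.75 English words.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (not a real tokenizer)."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def models_that_fit(text: str, reserved_for_reply: int = 1_000) -> list[str]:
    """Return the models whose window can hold the text plus room for a reply."""
    needed = estimate_tokens(text) + reserved_for_reply
    return [name for name, limit in CONTEXT_WINDOW_TOKENS.items() if needed <= limit]


# Usage: a hypothetical 12,000-word email thread (about 16,000 tokens).
long_email_thread = "word " * 12_000
print(models_that_fit(long_email_thread))  # ['gpt-4', 'gpt-4-turbo']
```

Note that the 12,000-word thread lands right at the edge of GPT-3.5's window, so once you leave room for the reply it no longer fits. For real budgeting, a proper tokenizer will give more reliable counts than a word-based estimate.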

Reasoning, accuracy, and following directions

This is where GPT-4 and GPT-4 Turbo really leave GPT-3.5 in the dust. According to OpenAI’s own internal evaluations, GPT-4 is 40% more likely to produce factual responses than GPT-3.5. When you’re trusting an AI to interact with your customers, that’s a pretty big deal.

The newer models are also much better at following specific directions. You can tell them to adopt a certain tone of voice or follow a particular format, and they’ll actually do it consistently. For example, if you tell GPT-3.5 to "answer the customer's question about shipping and then ask if they need help with anything else," it might just answer the question and stop. GPT-4 and Turbo are much more reliable at executing that full, multi-step instruction.

But even the smartest model is only as good as the information it has access to. A generic GPT-4 model knows a lot about the world, but it doesn’t know your company’s unique return policy, the workaround for a specific bug in your software, or the status of a customer's recent order.

This is where platforms like eesel AI come in. They ground these powerful models in your company's reality. eesel AI connects directly to your knowledge sources, whether that’s past support tickets, your help center, or internal docs in Confluence or Google Docs. This ensures the AI's powerful brain is working with information that is accurate and specific to your business, turning generic intelligence into a genuinely helpful agent.

Pricing and business use cases

For any business, the budget is a real constraint. Using these models through OpenAI's API means you pay "per token," so you're billed for every chunk of text going in (your prompt) and coming out (the model's reply). This can be great for flexibility, but it can also be scarily unpredictable. A busy week for your support team could lead to a surprisingly large bill.

OpenAI API pricing comparison

Here's a quick look at the standard API costs. For reference, one million tokens is about 750,000 words.

| Model | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) |
|---|---|---|
| "gpt-3.5-turbo-0125" | $0.50 | $1.50 |
| "gpt-4" | $30.00 | $60.00 |
| "gpt-4-turbo" | $10.00 | $30.00 |

Source: OpenAI Pricing Page
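To make the per-token math concrete, here is a minimal Python sketch that turns the prices in the table into an estimated bill. The function name `estimate_cost` and the traffic figures in the usage example (10,000 tickets at 1,500 input tokens and 500 output tokens each) are illustrative assumptions, not measurements, and the prices are simply the ones listed above, which may change.

```python
from decimal import Decimal

# USD per 1M tokens as (input, output), copied from the table above.
PRICE_PER_MILLION = {
    "gpt-3.5-turbo-0125": (Decimal("0.50"), Decimal("1.50")),
    "gpt-4": (Decimal("30.00"), Decimal("60.00")),
    "gpt-4-turbo": (Decimal("10.00"), Decimal("30.00")),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the API cost in USD for a given number of input and output tokens."""
    input_price, output_price = PRICE_PER_MILLION[model]
    total = input_price * input_tokens + output_price * output_tokens
    return total / Decimal(1_000_000)


# Usage: a hypothetical month of 10,000 tickets,
# each using 1,500 input tokens and 500 output tokens.
tickets = 10_000
for model in PRICE_PER_MILLION:
    cost = estimate_cost(model, tickets * 1_500, tickets * 500)
    print(f"{model}: ${cost:.2f}")
# gpt-3.5-turbo-0125: $15.00
# gpt-4: $750.00
# gpt-4-turbo: $300.00
```

Under these assumed volumes, the same workload costs 50 times more on GPT-4 than on GPT-3.5, which is why a spike in ticket volume can move the bill so sharply.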

Choosing the right tool for the job

Keeping these trade-offs in mind, here’s how you might think about applying them:

- Use GPT-3.5 for: Simple, high-volume tasks where cost is the number one factor. Think things like initial ticket categorization, basic sentiment analysis, or an internal-only FAQ bot where the stakes are low.
- Use GPT-4 for: The absolutely critical jobs where you need the best possible answer and are willing to pay for it. This could be for drafting sensitive emails to high-value customers or troubleshooting your most complex technical issues.
- Use GPT-4 Turbo for: Pretty much everything else. It’s the best all-around choice for customer-facing support automation, helping your human agents find answers faster, and any task that needs a mix of smarts, speed, and reasonable cost.
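If you route requests in code, the rules of thumb above could be sketched roughly like this. The `Task` categories and the `pick_model` function are hypothetical names made up for this example, and the model identifiers are the ones from the pricing table; treat it as a starting point for your own routing logic rather than a recommended design.

```python
from enum import Enum


class Task(Enum):
    """Hypothetical task categories, taken from the examples in this article."""
    TICKET_CATEGORIZATION = "ticket_categorization"
    SENTIMENT_ANALYSIS = "sentiment_analysis"
    INTERNAL_FAQ = "internal_faq"
    SENSITIVE_EMAIL = "sensitive_email"
    COMPLEX_TROUBLESHOOTING = "complex_troubleshooting"
    CUSTOMER_SUPPORT = "customer_support"
    AGENT_ASSIST = "agent_assist"


# Simple, high-volume, low-stakes work where cost matters most.
LOW_STAKES = {Task.TICKET_CATEGORIZATION, Task.SENTIMENT_ANALYSIS, Task.INTERNAL_FAQ}

# Critical jobs where answer quality outweighs cost.
CRITICAL = {Task.SENSITIVE_EMAIL, Task.COMPLEX_TROUBLESHOOTING}


def pick_model(task: Task) -> str:
    """Map a task category to a model name, following the guidance above."""
    if task in LOW_STAKES:
        return "gpt-3.5-turbo-0125"
    if task in CRITICAL:
        return "gpt-4"
    # Everything else defaults to the balanced option.
    return "gpt-4-turbo"


print(pick_model(Task.SENTIMENT_ANALYSIS))  # gpt-3.5-turbo-0125
print(pick_model(Task.CUSTOMER_SUPPORT))    # gpt-4-turbo
```

Defaulting to GPT-4 Turbo mirrors the "pretty much everything else" guidance: only tasks you have explicitly classified as low-stakes or critical get a different model.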

That unpredictable per-token pricing is a huge headache for businesses trying to set a budget. This is where a complete solution offers real value. eesel AI gets rid of that uncertainty with transparent and predictable pricing. Our plans are based on a flat monthly fee for a certain number of AI interactions, with no hidden fees per ticket solved. This means you can automate as much as you need to without getting a nasty surprise at the end of the month. It lets you scale up your support with confidence, knowing your costs are completely under control.

From a raw model to a real solution

Alright, let's tie this all together. Which model is right for you? In short: GPT-3.5 is for speed and savings, GPT-4 is for maximum power when money is no object, and GPT-4 Turbo is the smart, balanced choice for most businesses.

But the bigger point is that the model is just an engine. To get any real value out of it, you need to build a whole car around it. A simple API call can’t connect to your order database, learn from your team’s past tickets, or be safely tested on your real data before you let it talk to a single customer.

That’s what a platform like eesel AI is designed to do. We take the raw power of these models and turn it into a practical, integrated tool for your support team. You can get started in a few minutes, connect all your scattered company knowledge into one brain, and use a powerful simulation mode to test your AI without any risk. If you’re ready to stop thinking about the engine and start driving the car, you can see how a complete solution can transform your support experience.

Frequently asked questions

The main distinctions lie in intelligence, speed, and cost. GPT-3.5 is fast and cheap but less accurate, GPT-4 is highly intelligent but slow and expensive, while GPT-4 Turbo offers a strong balance of GPT-4's intelligence with improved speed and lower cost.

GPT-3.5 is the most affordable, followed by GPT-4 Turbo, which is significantly cheaper than the original GPT-4. Pricing is based on tokens, meaning you pay for both input and output text, with GPT-4 incurring the highest costs.

GPT-3.5 is the fastest, ideal for high-volume, simple tasks. GPT-4 Turbo is a strong contender for real-time interactions, offering significantly better speed than the slower, original GPT-4, while still maintaining high intelligence.

GPT-4 and GPT-4 Turbo are both far superior to GPT-3.5 in terms of reasoning, accuracy, and following complex instructions. GPT-4 Turbo, specifically, delivers near GPT-4 intelligence with more up-to-date training and a larger context window.

A larger context window allows the AI to "remember" more information from a conversation or document. GPT-4 Turbo's massive 128,000-token window enables it to handle much longer, more complex interactions and process entire documents without losing context, unlike GPT-3.5 or even GPT-4.

GPT-3.5 is suited for simple, high-volume tasks where cost is critical. GPT-4 is for critical jobs demanding the absolute best answer regardless of cost. GPT-4 Turbo is ideal for most customer-facing support and tasks requiring a balance of smarts, speed, and reasonable cost.

GPT-4 Turbo is generally the best all-around choice for most businesses. It offers a powerful blend of high intelligence, improved speed, and significantly lower costs compared to the original GPT-4, making it a practical and efficient option.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.