You've probably seen the name Mistral AI popping up everywhere lately. This French startup has made a huge splash, pulling in massive funding and reaching a valuation that makes it one of Europe's most valuable AI companies and a serious challenger to giants like OpenAI and Google. But they’re not just another face in the AI crowd. Their goal is to make top-tier AI accessible to everyone by mixing open-source models with powerful commercial ones.

So, what’s the big deal? In this guide, we’ll walk through everything you need to know about Mistral AI in 2025. We'll get into what it is, its main products, how companies are actually using it, and how it compares to the other big names.

What is Mistral AI?

At its core, Mistral AI is a company that builds Large Language Models (LLMs). It was started in 2023 by some real heavy-hitters in the AI world: Arthur Mensch from Google’s DeepMind, and Guillaume Lample and Timothée Lacroix from Meta. So, yeah, these folks know what they’re doing.

What really makes Mistral different is its "open-source-first" philosophy. While competitors like OpenAI have become more guarded over time, Mistral has released some of its core models under permissive open-source licenses like Apache 2.0. This means developers and businesses can download, tweak, and run these models on their own servers, without being tied to a single company.

This open strategy has turned Mistral AI into a symbol of Europe's tech ambitions, attracting big investments from investors eager to back a homegrown alternative to the US-based AI giants. It's a smart move that offers both transparency and control, which is getting harder to find in the world of advanced AI.

A breakdown of Mistral AI's key products and models

Mistral AI has a menu of products that range from raw models for developers to polished apps for everyday users. Here’s a quick look at what they offer.

Foundational Mistral AI models for developers

Mistral's models fall into two main buckets: open-source ones you can run yourself, and commercial ones you use through their API.

Open-Source Models: These are free to download and use, even if you're building a commercial product. They're well-known for being surprisingly powerful for their size.

- Mistral 7B: A small but mighty model that gives you great performance without needing a supercomputer to run. It's a popular choice for fine-tuning on specific tasks.

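- Mixtral 8x7B: This one uses a clever setup called a Sparse Mixture-of-Experts (MoE). Instead of one giant network, each layer contains eight "expert" sub-networks, and a small router sends every token to just two of them. So while the model has about 47B parameters in total, only about 13B do any work for a given token, which makes it much faster and cheaper to run than a dense model of similar quality.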

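- Devstral & Codestral: These are models built specifically for programming. Codestral is fluent in over 80 programming languages and focuses on code completion and generation, while Devstral is tuned for agentic coding, where an AI agent works through a real codebase to fix issues. Both are designed to help developers write, fix, and understand code much faster. One licensing caveat: Devstral is released under Apache 2.0, but Codestral's downloadable weights come with the Mistral Non-Production License, so commercial use of Codestral usually goes through Mistral's API.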
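To make the routing idea behind Mixtral a bit more concrete, here's a toy, pure-Python sketch of top-2 expert routing for a single token. It's an illustration under heavy simplifying assumptions, not Mixtral's actual implementation: the eight "experts" are stand-in linear functions and the router scores are made-up numbers, whereas in the real model the experts are feed-forward blocks inside every transformer layer and the scores come from a learned router.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits: Sequence[float], top_k: int = 2) -> Tuple[List[int], List[float]]:
    """Pick the top_k experts for one token and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([router_logits[i] for i in chosen])
    return chosen, weights


def moe_forward(
    x: float,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    top_k: int = 2,
) -> float:
    """Combine the outputs of only the chosen experts; the rest never run."""
    chosen, weights = route(router_logits, top_k)
    return sum(w * experts[i](x) for w, i in zip(weights, chosen))


# Eight hypothetical "experts": expert k simply multiplies its input by k + 1.
experts = [lambda x, k=k: (k + 1) * x for k in range(8)]
# Made-up router scores for one token; experts 3 and 6 score highest.
logits = [0.1, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0, 0.2]
print(route(logits)[0])  # prints [3, 6]
print(round(moe_forward(1.0, logits, experts), 3))  # prints 4.807
```

Only two of the eight expert functions run here, which is the whole point: compute per token scales with the experts you pick, not the experts you have.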

Commercial Models (via API): For businesses that want all the power without managing the hardware, Mistral offers its top-performing models through an API platform called "La Plateforme."

- Mistral Large 2: This is their top-of-the-line model, built to compete with models like GPT-4. It's excellent at reasoning, can handle huge documents with its 128,000-token context window, and handles dozens of languages fluently.

- Mistral Small: A more budget-friendly option that's still very capable. It's built for speed, making it great for tasks that need a near-instant response. Recent versions, such as Mistral Small 3, are also released as open weights under Apache 2.0.

You can also find these commercial models on big cloud platforms like Amazon Bedrock and Google Cloud Vertex AI.
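For API access, a request to La Plateforme is an HTTPS POST carrying a JSON list of chat messages. The sketch below uses only Python's standard library. The endpoint and the `mistral-large-latest` model alias reflect Mistral's public API docs at the time of writing, but treat them as assumptions and check the current API reference (the official `mistralai` Python SDK is the usual alternative to raw HTTP). The `MISTRAL_API_KEY` environment variable name is just our own convention.

```python
import json
import os
import urllib.request
from typing import Optional

# Mistral's chat completions endpoint on La Plateforme (verify against the current API reference).
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(
    prompt: str,
    api_key: str,
    model: str = "mistral-large-latest",
    system: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but don't send) a POST request for a single-turn chat."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def chat(prompt: str, api_key: str, **kwargs) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(prompt, api_key, **kwargs)
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    # The reply lives in the first choice's message, as in other chat-completion APIs.
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # our own naming convention
    if key:
        print(chat("Explain a context window in one sentence.", key, system="Be concise."))
```

Keeping request-building separate from sending makes it easy to inspect or test the payload without spending API credits.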

| Model | Type | Key Feature | Best For |
|---|---|---|---|
| Mistral 7B | Open-Source (Apache 2.0) | Very efficient for its size | Cost-effective, fine-tuning tasks |
| Mixtral 8x7B | Open-Source (Apache 2.0) | Sparse Mixture-of-Experts (MoE) | High throughput, balanced performance |
| Mistral Large 2 | Commercial API | Top-tier reasoning, 128k context | Complex enterprise tasks, RAG |
| Devstral | Open-Source (Apache 2.0) | Specialized for agentic coding | Software development, agentic coding |
| Codestral | Open-weight (non-production license); commercial use via API | Fluent in 80+ programming languages | Code completion, writing tests |

User-facing Mistral AI applications

Mistral AI isn’t just for coders. They’ve also built apps that put their tech directly into anyone's hands.

- Le Chat: This is Mistral's version of ChatGPT. It’s a chat assistant that can browse the web, read your documents, make images, and help you organize your thoughts into "Projects." You can use it on the web or get the mobile app for iOS and Android.

- Mistral Code: A dedicated AI coding assistant that helps developers complete code, suggest improvements, and squash bugs across dozens of languages, all within their usual workflow.

Mistral AI's enterprise solutions

For bigger companies, Mistral provides a set of services designed for business needs.

- La Plateforme: Their main developer hub, offering pay-as-you-go API access to their best commercial models.

- Flexible Deployments: Mistral allows companies to run its models on their own servers (on-premise) or in a private cloud. This gives organizations with tight security and privacy rules complete control over their AI.

- Custom Solutions: The company also helps businesses train or fine-tune models on their private data, letting them build custom AI tools that are perfectly suited to their industry.
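If you go the fine-tuning route, the first chore is usually turning past conversations into training examples. Here's a minimal sketch that converts resolved support tickets into chat-style JSONL (one `{"messages": [...]}` object per line), the general layout Mistral's fine-tuning docs describe. The `Ticket` class and its fields are hypothetical stand-ins for whatever your helpdesk export looks like, and you should double-check the exact schema against the current fine-tuning documentation before uploading anything.

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass
class Ticket:
    # Hypothetical shape of a ticket exported from a helpdesk.
    question: str
    agent_reply: str
    resolved: bool


def to_training_examples(
    tickets: Iterable[Ticket], system_prompt: Optional[str] = None
) -> Iterator[dict]:
    """Yield one chat-format training example per usable ticket."""
    for t in tickets:
        # Skip unresolved or empty tickets so the model only learns from answers that worked.
        if not t.resolved or not t.agent_reply.strip():
            continue
        messages = []
        if system_prompt:
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": t.question.strip()})
        messages.append({"role": "assistant", "content": t.agent_reply.strip()})
        yield {"messages": messages}


def to_jsonl(examples: Iterable[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)


tickets = [
    Ticket("Where is my order #123?", "It left our warehouse today.", True),
    Ticket("Can I get a discount?", "", False),
]
print(to_jsonl(to_training_examples(tickets)), end="")
```

Writing the result to disk is one more line, for example `Path("train.jsonl").write_text(to_jsonl(examples), encoding="utf-8")` with `pathlib.Path`.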

How businesses are using Mistral AI

With a mix of powerful and flexible models, companies are finding all sorts of ways to put Mistral AI to work.

Building custom Mistral AI applications for customer support

A lot of companies are using Mistral's APIs to build their own chatbots and internal tools for their support teams. The models are great with multiple languages and can understand long documents, which makes them a solid starting point for handling customer questions from all over the world.

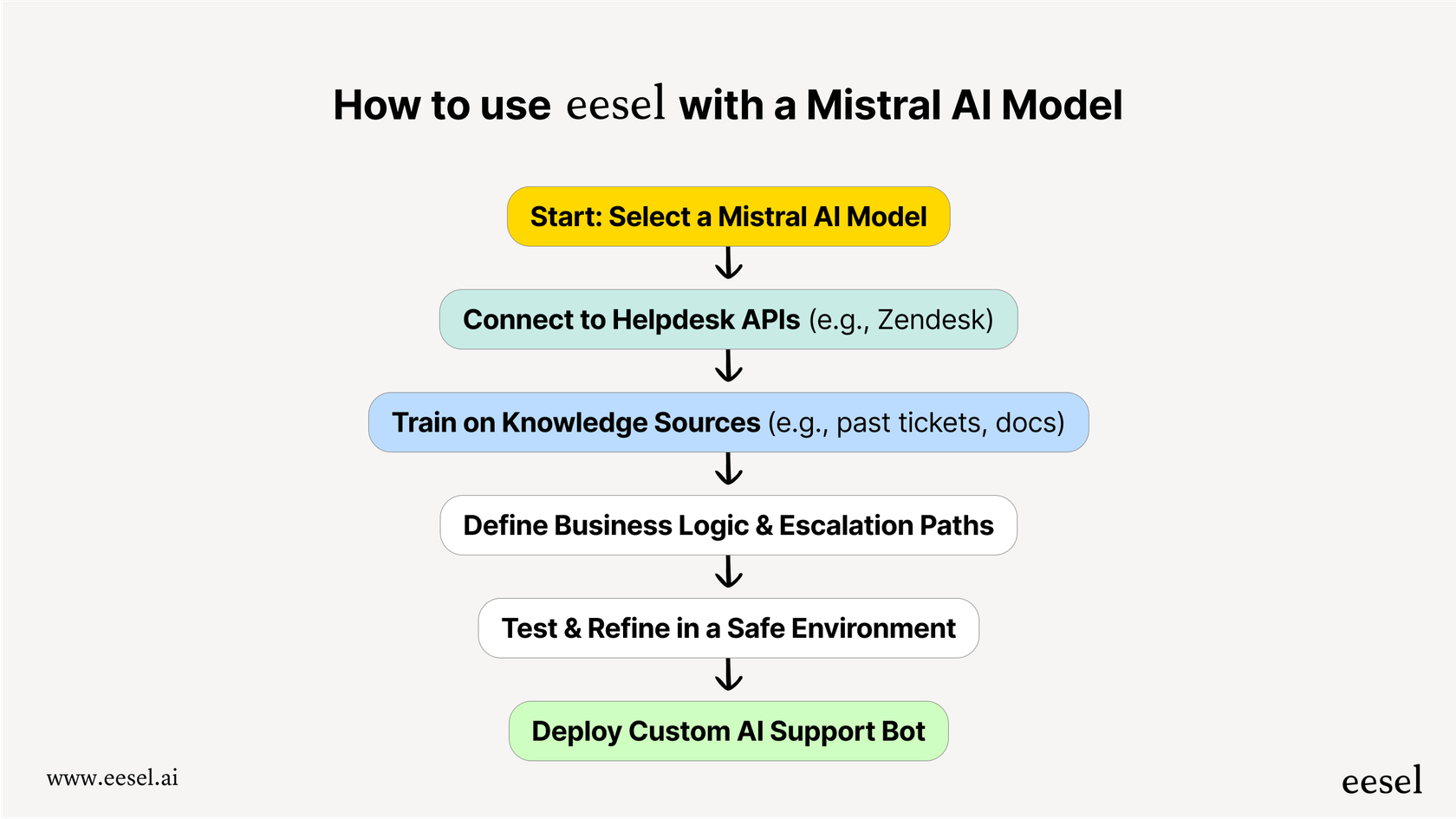

But here’s the thing: building a genuinely helpful support bot from the ground up involves more than just plugging into a smart LLM. You have to connect it deeply with your help desks like Zendesk or Intercom, teach it using all your past support tickets, and build the logic it needs for real-world situations, like escalating a frustrated customer or looking up an order.
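One common way to "teach" a model your support knowledge without fine-tuning is retrieval-augmented generation (RAG): find the most relevant help-center snippets and paste them into the prompt. The toy sketch below ranks snippets by simple word overlap, which is an assumption made to keep it self-contained; a production system would typically use embeddings (Mistral offers an embedding model) and a vector index. The snippets and the prompt wording are hypothetical.

```python
import re
from typing import List, Sequence, Set


def tokenize(text: str) -> Set[str]:
    # Lowercased word set; a production system would compare embeddings instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_snippets(question: str, snippets: Sequence[str], k: int = 2) -> List[str]:
    """Return up to k snippets that share the most words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(s)), s) for s in snippets]
    # Drop snippets with no overlap at all, then rank by overlap (ties keep original order).
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def build_grounded_prompt(question: str, snippets: Sequence[str]) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved excerpts."""
    context = "\n".join(f"- {s}" for s in top_snippets(question, snippets))
    return (
        "Answer the customer using only the help-center excerpts below. "
        "If they don't cover the question, say you'll pass it to a human agent.\n\n"
        f"Excerpts:\n{context}\n\nCustomer question: {question}"
    )


knowledge_base = [
    "Refunds are issued within 5 business days.",
    "Orders ship from our Lyon warehouse.",
    "Reset your password from the login page.",
]
print(build_grounded_prompt("How long do refunds take?", knowledge_base))
```

The resulting prompt is what you'd send to the chat API; grounding the answer in retrieved text is what keeps the bot from improvising policies.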

Pro Tip: Think of Mistral AI as a Formula 1 engine. It’s incredibly powerful, but you still need to build the car around it. For a support team, that means connecting it to your helpdesk, feeding it your specific knowledge, and setting up rules for when to hand off a conversation to a human. This is exactly where a tool like eesel AI comes in. It handles all that heavy lifting, so you can get a smart AI agent up and running in minutes, not months.

Enhancing internal workflows and productivity with Mistral AI

Inside a company, Mistral's models are helping teams get more done.

- Code Generation: Dev teams use Devstral and Codestral to write routine code, create tests, and even translate code from one language to another, which helps them move a lot faster.

- Content Summarization: Instead of spending hours reading, teams use Mistral to get the key takeaways from long reports, dense legal documents, and research papers.

- Data Analysis: The smart reasoning of models like Mistral Large 2 can help analysts find trends in data without having to be a coding wizard.
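Even a 128,000-token context window has limits, so very long reports are often summarized in two passes: summarize each chunk, then summarize the summaries (a "map-reduce" pattern). The sketch below assumes a rough rule of thumb of about four characters per token for English text instead of a real tokenizer, and takes a hypothetical `summarize` callable that you'd wire up to whichever Mistral model you use.

```python
from typing import Callable, List


def estimate_tokens(text: str) -> int:
    # Assumption: ~4 characters per token for English; use a real tokenizer for accuracy.
    return max(1, len(text) // 4)


def chunk_by_paragraphs(text: str, max_tokens: int) -> List[str]:
    """Greedily pack paragraphs into chunks that stay under max_tokens (estimated).

    A single paragraph longer than max_tokens still becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    current_tokens = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        tokens = estimate_tokens(para)
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and current_tokens + tokens > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def map_reduce_summary(
    text: str, summarize: Callable[[str], str], max_tokens: int = 100_000
) -> str:
    """Summarize each chunk ("map"), then summarize the partial summaries ("reduce").

    max_tokens defaults to 100k to leave headroom under a 128k context window
    for the instructions and the model's reply.
    """
    chunks = chunk_by_paragraphs(text, max_tokens)
    if not chunks:
        return ""
    if len(chunks) == 1:
        return summarize(chunks[0])
    partials = [summarize(chunk) for chunk in chunks]
    return summarize("\n\n".join(partials))
```

In practice `summarize` would call the chat API with an instruction like "List the key takeaways of this text". The reduce step assumes the partial summaries fit in one call, which holds unless the source is truly enormous.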

Powering customer-facing features with Mistral AI

Companies are also putting Mistral's tech right into their products to make them smarter. For instance, an e-commerce app could use a Mistral model for a search bar that understands what you mean, not just what you type. A marketing tool could use it to draft ad copy or social media posts. Having both open-source and API models gives businesses the freedom to pick the right tool for the job.
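Under the hood, search that "understands what you mean" usually compares embedding vectors rather than keywords. Here's a minimal sketch of the ranking step using cosine similarity. The three-number vectors are made up for illustration; in practice they would come from an embedding model (Mistral offers one, `mistral-embed`), have far more dimensions, and live in a vector index rather than a dict.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(
    query_vec: Sequence[float], catalog: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Rank catalog items by similarity to the query embedding."""
    scored = [(name, cosine(query_vec, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings" (made up); real ones come from an embedding model.
catalog = {
    "waterproof hiking boots": [0.9, 0.1, 0.0],
    "trail running shoes": [0.7, 0.3, 0.1],
    "leather office shoes": [0.1, 0.9, 0.2],
}
query = [0.85, 0.15, 0.05]  # imagine this embeds "something for muddy walks"
for name, score in semantic_search(query, catalog, k=2):
    print(f"{score:.3f}  {name}")
```

Note that the query never mentions "boots"; the match comes from the vectors being close, which is the "what you mean, not what you type" behavior.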

Mistral AI vs. the competition: What you need to know

Mistral AI has managed to find its own lane in a very crowded AI market. Here’s a quick rundown of how it stacks up against the other big players.

Mistral AI: Open-source flexibility vs. closed-source simplicity

Here's where Mistral really stands out from the crowd. By offering strong open-source models, Mistral gives businesses total control over their data and AI setup. You can fine-tune a model on your own private data without ever sending it to an outside company, and you aren't stuck with one vendor.

On the flip side, a polished, closed-source API like OpenAI's GPT-4 keeps things simple. It's easy to get started with and works great for general tasks. The trade-off? Less control, potentially higher costs, and lock-in to a single vendor. Mistral’s open approach gives you more power, but it usually takes more technical know-how to get running.

Mistral AI's focus on performance and efficiency

Mistral has clearly focused on building models that are efficient, not just powerful. Their use of clever architectures like Mixture-of-Experts (MoE) in Mixtral lets them deliver top-level performance without the eye-watering compute costs of some of their competitors' single, massive models.

For businesses, this means real savings: lower costs to run the AI, faster responses for users, and the ability to run powerful AI on cheaper hardware, sometimes even on your own servers. This focus on efficiency makes advanced AI a bit more down-to-earth and affordable.
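A quick back-of-the-envelope calculation shows where MoE savings do and don't come from. The sketch below uses the published parameter counts (about 7.3B for Mistral 7B; about 46.7B total and 12.9B active per token for Mixtral 8x7B), counts weights only, and relies on the common rule of thumb of roughly two floating-point operations per active parameter per token. Treat the results as rough estimates, not benchmarks.

```python
# Approximate bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Activations and the KV cache need extra memory on top of this.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def gflops_per_token(active_params_billion: float) -> float:
    """Rule of thumb: ~2 floating-point operations per active parameter per token."""
    return 2.0 * active_params_billion


# name: (total parameters, active parameters per token), in billions
MODELS = {
    "Mistral 7B": (7.3, 7.3),
    "Mixtral 8x7B": (46.7, 12.9),
}

for name, (total, active) in MODELS.items():
    print(
        f"{name}: ~{weight_memory_gb(total, 'fp16'):.0f} GB of weights in fp16, "
        f"~{weight_memory_gb(total, 'int4'):.0f} GB at 4-bit, "
        f"~{gflops_per_token(active):.0f} GFLOPs per token"
    )
```

The takeaway: Mixtral's per-token compute is closer to a 13B dense model, but all eight experts still have to sit in memory, so the savings show up mainly as speed and throughput rather than a smaller memory footprint. Quantizing to 4-bit is what brings these models within reach of more modest hardware.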

The challenge of Mistral AI implementation for support teams

Getting API access to a model like Mistral Large 2 is exciting, but it's really just the starting line. For a support team, turning that raw AI power into an agent that can actually solve customer problems is a whole other project.

You'd have to build all the essential plumbing yourself, like:

- Helpdesk connections: Figuring out how to properly link the AI to tools you already use, like Zendesk, Freshdesk, and Gorgias.

- Real-world training: Teaching the AI how your business actually works, using your past tickets, internal docs in Confluence or Google Docs, and help center articles.

- Actionable workflows: Building a simple way to tell the AI what to do, like how to tag a ticket, look up an order in Shopify, or when to pass a tricky conversation to a human.

- A safety net for testing: Creating a way to test the AI on thousands of your past tickets to see how it behaves before you let it talk to a single real customer.
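To make the handoff rules and the "safety net" idea concrete, here's a minimal sketch of both: a rule that decides when to escalate to a human, and a replay loop that runs the rule over past tickets and measures how often it agrees with what your team actually did. Everything here is hypothetical (the keywords, the 0.7 confidence threshold, and the `PastTicket` fields), and it isn't how eesel AI or any specific product works; it just shows the shape of the logic you'd otherwise have to build yourself.

```python
from dataclasses import dataclass
from typing import Dict, Iterable

# Hypothetical topics your team always wants a human to handle.
ESCALATION_KEYWORDS = ("refund", "chargeback", "legal", "cancel my account")


@dataclass
class PastTicket:
    text: str
    model_confidence: float  # hypothetical 0-1 score produced by your AI pipeline
    human_escalated: bool  # what your team actually did with this ticket


def should_escalate(text: str, confidence: float, threshold: float = 0.7) -> bool:
    """Hand off to a human on sensitive topics or when the AI isn't confident enough."""
    lowered = text.lower()
    if any(keyword in lowered for keyword in ESCALATION_KEYWORDS):
        return True
    return confidence < threshold


def replay(tickets: Iterable[PastTicket], threshold: float = 0.7) -> Dict[str, float]:
    """Run the rule over historical tickets without touching any live customer."""
    total = agreed = automated = 0
    for t in tickets:
        decision = should_escalate(t.text, t.model_confidence, threshold)
        total += 1
        agreed += decision == t.human_escalated  # bools count as 0 or 1
        automated += not decision
    if total == 0:
        return {"tickets": 0, "agreement": 0.0, "automation_rate": 0.0}
    return {
        "tickets": total,
        "agreement": agreed / total,
        "automation_rate": automated / total,
    }


history = [
    PastTicket("Where is my parcel?", 0.9, False),
    PastTicket("I want a refund now", 0.95, True),
    PastTicket("Something odd happened with my login", 0.4, True),
    PastTicket("How do I change my address?", 0.8, True),
]
print(replay(history))  # prints {'tickets': 4, 'agreement': 0.75, 'automation_rate': 0.5}
```

Raising the threshold escalates more tickets (safer, less automation); replaying history lets you pick a value before a single real customer talks to the bot.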

This is where a solution like eesel AI fits in. It’s a self-serve platform that gives you all these missing pieces right away. It acts as the user-friendly layer on top of powerful LLMs, letting support teams connect all their knowledge, test safely, and launch a customized AI agent in minutes, without needing a team of AI engineers.

This video provides a practical guide on how to start building applications using the powerful models from Mistral AI.

Is Mistral AI right for your business?

Mistral AI has proven it’s a major force in the AI world. Its dedication to open-source, paired with its efficient and powerful models, makes it a fantastic option for businesses that want more control, customization, and good performance without breaking the bank.

But while Mistral provides the amazing core technology, you often need specialized tools to turn that raw power into a practical solution for specific jobs, like customer support.

Turn powerful AI into practical support automation with eesel

If you're intrigued by the power of models from companies like Mistral AI, the next move is to put that power to work where it can really make a difference. eesel AI is the quickest and simplest way to launch a smart, helpful AI agent directly inside the helpdesk you already use.

You can get started in just a few minutes, train the AI on all your scattered knowledge sources, and test its performance risk-free before it goes live. Sign up for a free trial and see how you can transform your customer support with an AI that works the way you do.

Frequently asked questions

Using an open-source model gives you maximum control; you can run it on your own servers and customize it deeply, which is great for data privacy. The API is simpler and quicker to start with, as Mistral manages all the hardware and maintenance for you.

The main reasons are control and efficiency. Mistral AI offers powerful open-source models that you can run on your own infrastructure for complete data privacy. Additionally, models like Mixtral are designed for high performance at a lower computational cost.

While their foundational models are for developers, Mistral AI also has user-friendly applications like "Le Chat," which is a direct competitor to ChatGPT. For business tasks like support, you'd typically use a platform that builds on their models, requiring no code from your team.

Yes, that's a primary benefit of their open-source approach. When you download and run a Mistral model on your own servers (on-premise) or in your private cloud, your data is never sent to Mistral or any other third party.

It means you can get comparable or better performance while using less expensive hardware and consuming less energy. For your business, this translates directly into lower operational costs for running AI tasks and potentially faster response times for your users.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.