The AI world moves at a dizzying speed. One minute you've heard of a new model, and the next, it feels like old news. For anyone trying to actually build something with AI, picking the right large language model (LLM) can feel less like a strategic choice and more like trying to catch a speeding train.

When you need to build something real, you have to cut through the hype and figure out what actually works. Two of the heaviest hitters right now are Google's Gemini and Mistral AI. They come from very different places and have different ideas about how to build powerful AI. This guide will walk you through the Gemini vs Mistral debate, comparing how they perform, what they cost, and how they stack up for day-to-day business use.

Gemini vs Mistral: What are Google Gemini and Mistral AI?

Before we get into the weeds, let's get a quick feel for these two contenders. They aren't just interchangeable AI brains; they have different backgrounds and different goals.

Google Gemini: The powerhouse

Gemini is Google's baby, and it has the full backing of one of the world's biggest tech companies. It’s a multimodal model, which is just a technical way of saying it was designed from the start to understand text, code, images, and video all at once. You’ll find Gemini powering tools like Gemini Advanced and woven into the Google ecosystem, from Workspace to the enterprise-level Vertex AI platform.

The main draw for Gemini is its sheer scale. It has access to Google’s enormous datasets and shows impressive skill at tasks that require complex, multi-step thinking. It's built to be a do-it-all AI that can handle pretty much whatever you throw at it.

Mistral AI: The challenger

On the other side, we have Mistral AI, a Paris-based company that shot to fame by focusing on efficiency and open-source models. While they do offer paid commercial models, they're well-known for their "open-weight" models like Mistral 7B and Mixtral. This means they release the core model files, letting developers tweak, fine-tune, and run the models on their own servers.

Mistral has built a solid reputation among developers for making models that punch way above their weight class. They deliver strong performance without demanding a warehouse full of GPUs. Their answers are often described as concise and direct, which makes them a favorite for business workflows where you just need a reliable answer, no extra chatter needed.

Gemini vs Mistral: Performance and capabilities

So, how do they actually do? The "best" model really comes down to what you’re trying to accomplish. An AI that’s brilliant at writing a creative marketing email might not be the right fit for generating clean, production-ready code.

Gemini vs Mistral: Reasoning, accuracy, and "the fluff factor"

Spend five minutes on any developer forum, and you'll spot a common theme: people talk a lot about the vibe of the responses. Users often say that Mistral models get straight to the point. They give you an answer without the conversational warm-ups like "That's a great question!" or other filler that can sometimes bog down responses from bigger models. For a lot of business uses, this direct, "no-fluff" style feels more predictable and trustworthy.

Of course, any model can "hallucinate" or make things up, but Mistral's conciseness can make it feel more dependable for straightforward tasks. Gemini, on the other hand, usually gives more detailed, comprehensive answers. This can be great for brainstorming or when you need a deep dive into a tricky subject, but it can also mean you have to read through more text to find the core answer.

It's also worth remembering that performance can change drastically depending on the task. For example, one head-to-head comparison on OCR accuracy found that Gemini 2.0 Flash was quite a bit more accurate than Mistral's OCR model across a varied set of documents. It's a good reminder that the benchmarks released by the companies themselves don't always reflect real-world performance. You have to test it for yourself.

Task-specific strengths

Let's see how these models fare with a few common business jobs.

- Coding: Both models are pretty handy for generating code. However, you'll find many developers leaning toward Mistral for production scenarios. Its output is often cleaner and more consistent, which makes it easier to plug into automated workflows. Mistral also has specialized models like Codestral, which are fine-tuned just for coding.

- Writing & Creativity: This is where Gemini often shines. Its conversational and thorough style is well suited to creative writing, brainstorming marketing copy, and generating text that feels human. Mistral's default tone tends to be more professional and factual, which is perfect for reports or summaries but maybe not for your next big ad campaign.

- Document & Data Analysis: Gemini has a huge leg up here because of its massive context window. The context window is the amount of information, measured in tokens, that the model can keep in its short-term memory during a single request. With Gemini 1.5 Pro able to handle up to 2 million tokens (that's about 1.5 million words), it can chew through incredibly long documents, entire codebases, or hours of video. Mistral's models are much more limited here: the original Mistral Large had a 32,000-token window, and Mistral Large 2 raised that to 128,000 tokens.
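To make the context-window gap concrete, here is a rough fit check in Python. It is a sketch that assumes the ratio implied above (2 million tokens is about 1.5 million words, so roughly 0.75 words per token); real tokenizers vary by model and language, so the counts are estimates only. The window sizes are the figures quoted in this article, and the 4,000-token reserve for the model's reply is an arbitrary assumption.

```python
# Rough words-per-token ratio implied by "2 million tokens is about 1.5 million words".
# Real tokenizers differ by model and language, so this is only an estimate.
WORDS_PER_TOKEN = 0.75

# Context windows (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "Gemini 1.5 Pro": 2_000_000,
    "Mistral Large": 32_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate how many tokens a text of `word_count` words will use."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, model: str, reserve_for_output: int = 4_000) -> bool:
    """Return True if the estimated prompt plus a reply budget fits the model's window."""
    needed = estimate_tokens(word_count) + reserve_for_output
    return needed <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # Hypothetical 300-page document at roughly 500 words per page.
    words = 300 * 500
    print(f"~{estimate_tokens(words):,} tokens")
    for model in CONTEXT_WINDOWS:
        print(model, "fits" if fits_in_context(words, model) else "does not fit")
```

For that hypothetical 150,000-word document, the estimate comes to about 200,000 tokens: comfortably inside Gemini 1.5 Pro's window, but several times larger than a 32,000-token one.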

A head-to-head comparison table

Here’s a quick rundown of their qualitative differences.

| Feature | Google Gemini | Mistral AI | Winner |
|---|---|---|---|
| Response Style | More conversational and comprehensive, can be lengthy. | Concise, direct, and to-the-point; less "fluff". | Depends on Use Case |
| Coding | Strong, general-purpose coding capabilities. | Highly regarded for clean, stable code generation; specialized models. | Mistral (for production) |
| Creative Tasks | Often preferred for brainstorming and human-like writing. | More factual and professional tone by default. | Gemini |
| Document Analysis | Excellent due to massive context window (up to 2M tokens). | Limited by smaller context window (e.g., 32K tokens). | Gemini |
| Perceived Trust | Can sometimes feel overly cautious or refuse political queries. | Directness is often perceived as more stable and reliable for business. | Mistral (for business) |

Gemini vs Mistral: Technical specifications and features

For anyone actually building with these models, the technical details are where the rubber meets the road. Here’s how they compare.

Model families and variants

Both Google and Mistral offer a menu of models designed for different needs, letting you balance performance, speed, and cost.

- Gemini: The two main options available via Google's API are Gemini 1.5 Pro, their powerful, top-of-the-line model, and Gemini 1.5 Flash, which is built for speed and efficiency in high-volume situations.

- Mistral: Their lineup has more tiers:

  - Open-weight models: Mistral 7B and Mixtral 8x7B are go-to choices for developers who want to host the models themselves or fine-tune them with their own data.

  - Commercial models: Mistral Small, Mistral Medium, and Mistral Large 2 are their optimized models you can access via API, offering a good mix of price and power.

  - Specialist models: They also cook up specific models like Codestral, which is laser-focused on code generation and completion.

Gemini vs Mistral: Context window and knowledge cutoff

The context window is one of the biggest technical differences between them. As we mentioned, Gemini 1.5 Pro's ability to process up to 2 million tokens is a massive deal for anyone working with large volumes of information. Mistral's flagship, Mistral Large 2, offers a 128,000-token window. That is still very useful, but it's just not in the same ballpark.

Another thing to keep in mind is the knowledge cutoff, which is the "use by" date on the model's training data. Some of Mistral's models have a slightly older cutoff, but this matters less than it used to, because both companies now offer web search features that can pull in up-to-date information.

API and developer ecosystems

Both platforms provide a pretty smooth experience for developers. Google's Vertex AI is a massive, enterprise-ready platform with a huge toolbox, lots of integrations, and detailed documentation for building with Gemini.

Mistral's developer platform, which they call "la Plateforme," gets a lot of praise for being simple to work with, which fits their developer-first attitude. It's designed so you can get up and running fast.
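For a feel of what "simple to work with" means in practice, the sketch below builds (but does not send) one request for each API using only Python's standard library. It assumes the public REST endpoints: Mistral's chat-completions endpoint and the Gemini Developer API's `generateContent` method. Note that this Gemini endpoint is the one used with Google AI Studio API keys; Vertex AI uses a different endpoint and Google Cloud authentication. The default model names `mistral-small-latest` and `gemini-1.5-flash` are assumptions to swap for whichever model you pick.

```python
import json
import urllib.request

# Assumed public REST endpoints; verify against each provider's API reference.
MISTRAL_URL = "https://api.mistral.ai/v1/chat/completions"
GEMINI_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def mistral_request(api_key: str, prompt: str, model: str = "mistral-small-latest") -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for Mistral's API."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        MISTRAL_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def gemini_request(api_key: str, prompt: str, model: str = "gemini-1.5-flash") -> urllib.request.Request:
    """Build (but do not send) a generateContent request for the Gemini Developer API."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        GEMINI_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={"x-goog-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = mistral_request("YOUR_MISTRAL_KEY", "Summarize this ticket in one sentence.")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req), which needs a valid key.
```

Keeping request construction separate from the network call makes it easy to inspect or log the exact payload before anything leaves your machine.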

Gemini vs Mistral: Pricing and business application

At the end of the day, the decision often boils down to money and business value. Let's look at how their pricing stacks up and how you can actually use these models in your business without hiring a whole new engineering team.

A breakdown of pricing models

Both companies mainly use a pay-per-token model for their APIs. You get charged for the amount of text you send to the model (input) and the amount of text the model gives back (output).

- Gemini (via Vertex AI): Google's pricing is pretty competitive, especially for its speedy Flash model.

Source: Google Cloud Pricing Page

| Model | Input Price / 1M tokens | Output Price / 1M tokens |
|---|---|---|
| Gemini 1.5 Pro | $3.50 | $10.50 |
| Gemini 1.5 Flash | $0.35 | $1.05 |

- Mistral: Mistral's API pricing is also competitive, and their smaller, efficient models are particularly budget-friendly.

Source: Mistral AI Website

| Model | Input Price / 1M tokens | Output Price / 1M tokens |
|---|---|---|
| Mistral Large 2 | $3.00 | $9.00 |
| Mistral Small | $0.50 | $1.50 |
| Codestral | $1.00 | $3.00 |
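Because both APIs bill input and output tokens separately, estimating a monthly bill is simple arithmetic: (input tokens × input price + output tokens × output price) ÷ 1,000,000. The sketch below plugs in the list prices from the two tables above. The workload in the example (10,000 requests a month, 1,500 input and 300 output tokens per request) is hypothetical, and the sketch ignores any free tiers, discounts, or long-prompt pricing either provider may apply.

```python
# List prices in USD per 1 million tokens (input, output), copied from the tables above.
PRICES = {
    "Gemini 1.5 Pro": (3.50, 10.50),
    "Gemini 1.5 Flash": (0.35, 1.05),
    "Mistral Large 2": (3.00, 9.00),
    "Mistral Small": (0.50, 1.50),
    "Codestral": (1.00, 3.00),
}


def monthly_cost(model: str, requests: int, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of `requests` calls with the given average token counts."""
    input_price, output_price = PRICES[model]
    total_input = requests * input_tokens
    total_output = requests * output_tokens
    # Input and output are billed separately, each per million tokens.
    return (total_input * input_price + total_output * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests a month, 1,500 tokens in and 300 out each.
    for name in PRICES:
        print(f"{name}: ${monthly_cost(name, 10_000, 1_500, 300):,.2f}")
```

For that workload, Gemini 1.5 Pro works out to $84.00 a month versus $72.00 for Mistral Large 2, while Gemini 1.5 Flash comes to about $8.40 and Mistral Small to $12.00.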

Mistral also has a "Le Chat Pro" subscription for about $15 a month, aimed at individuals and small teams who want to use their chatbot without messing with the API.

Applying AI models in your business: The easy way

Picking a model and paying for API access is just step one. The real work is turning that raw power into a useful business tool, like a customer support agent that actually helps people. This usually involves a lot of complicated setup:

-

You have to connect the model to all of your company's knowledge, which might be spread across a help desk, internal wikis, Google Docs, and old conversations.

-

You need to build a workflow engine that knows when to escalate a ticket, how to tag it correctly, or when to do custom things like look up an order status.

-

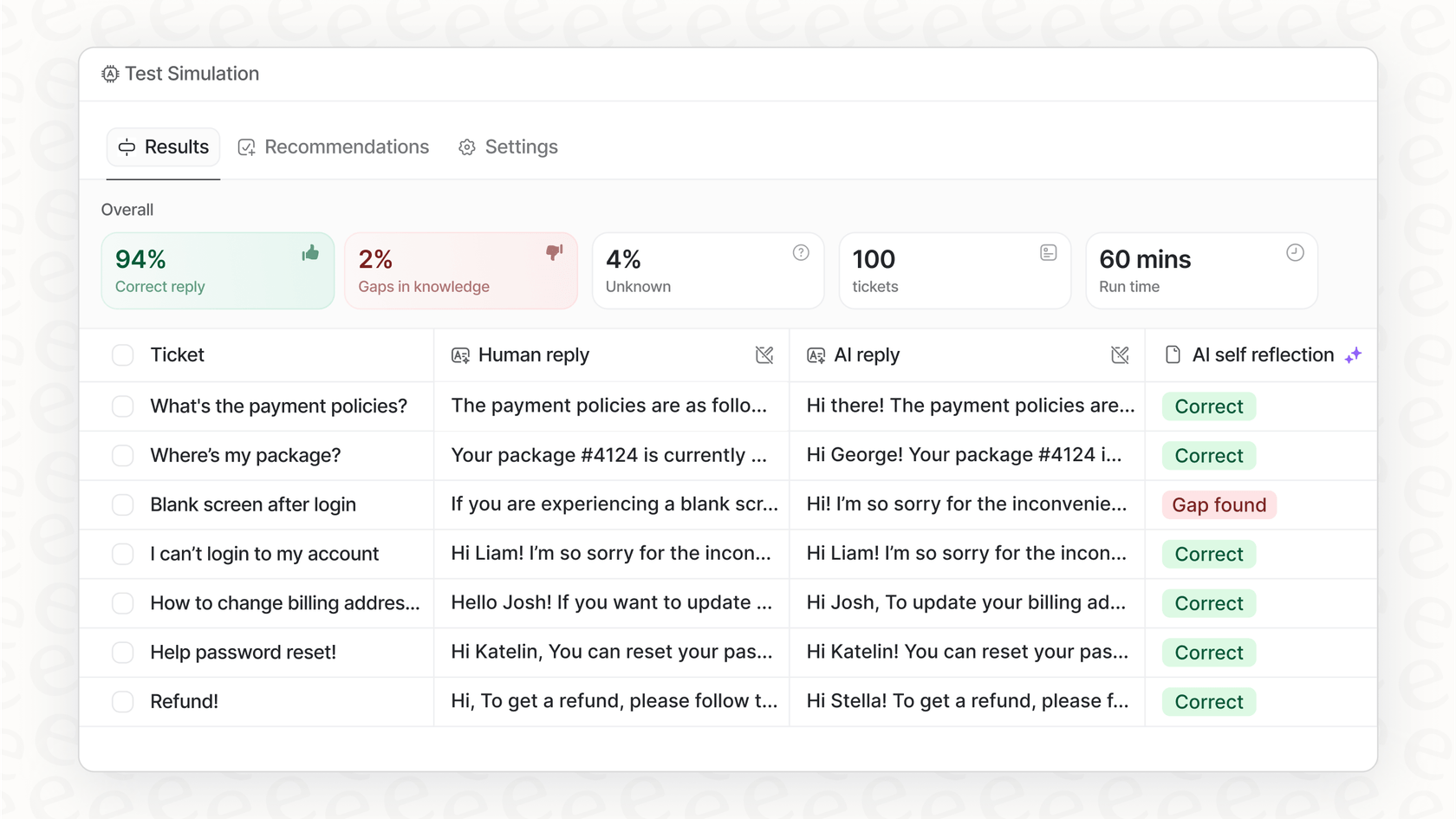

You need a safe way to test everything on your own data before you ever let it talk to a real customer.
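The workflow engine in the second point is where much of the custom work tends to go. As a rough sketch of its simplest form, the Python below applies keyword rules to decide a ticket's tags, whether to escalate it, and whether an order-status lookup is needed. Everything in it is hypothetical: the tag names, keyword lists, and order-number format are invented for illustration, and in a real system an LLM such as Gemini or Mistral might handle the fuzzier classification while rules like these enforce hard business logic.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical routing rules; a real help desk would define its own.
TAG_KEYWORDS = {
    "billing": ("refund", "invoice", "charge"),
    "shipping": ("delivery", "tracking", "shipped"),
}
ESCALATE_KEYWORDS = ("cancel my account", "legal", "complaint")
# Hypothetical order-number format, e.g. "order #123456".
ORDER_ID = re.compile(r"\border\s*#?(\d{5,})\b", re.IGNORECASE)


@dataclass
class Decision:
    tags: list[str] = field(default_factory=list)
    escalate: bool = False
    order_id: Optional[str] = None  # set when an order-status lookup is needed


def route_ticket(text: str) -> Decision:
    """Decide tags, escalation, and any order lookup for one incoming ticket."""
    lowered = text.lower()
    decision = Decision()
    for tag, words in TAG_KEYWORDS.items():
        if any(word in lowered for word in words):
            decision.tags.append(tag)
    decision.escalate = any(phrase in lowered for phrase in ESCALATE_KEYWORDS)
    match = ORDER_ID.search(text)
    if match:
        decision.order_id = match.group(1)
    return decision
```

For example, `route_ticket("I want a refund for order #123456, this is my third complaint.")` tags the ticket `billing`, flags it for escalation, and extracts the order ID `123456` for a status lookup.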

That's a ton of heavy lifting, and it's exactly the problem a platform like eesel AI is built to solve. Instead of you spending months building all this plumbing from scratch, eesel AI gives you a self-serve solution that does the hard work for you. It acts as an intelligent layer on top of powerful models from Google and Mistral, making them useful for your business right out of the box.

- Instant knowledge unification: eesel AI connects to all your tools with one-click integrations for platforms like Zendesk and Confluence. It learns from your help center, your documents, and even your past support tickets to get up to speed on your business from day one.

Making the right choice for your needs

So, what's the final verdict in the Gemini vs Mistral showdown? Honestly, there’s no single winner. It all boils down to what you need to get done. Here’s a quick cheat sheet:

- Go with Gemini if your main job is analyzing huge documents or datasets, if you're already plugged into the Google Cloud ecosystem, or if you need top-notch creative writing and multimodal features.

- Go with Mistral if you care most about efficiency, cost, and having the flexibility of open-source models you can host yourself. It's also probably the better pick if you need an AI that gives you concise, business-ready answers without the extra fluff.

The best model is always the one that fits the job you need to do. But remember, the model itself is just one piece of the puzzle. The real magic happens when you integrate and use it inside your workflows to solve actual business problems.

Ready to turn the power of these top-tier AI models into real business results? eesel AI gives you the complete platform to build, simulate, and launch AI agents for customer support and internal knowledge, all in a few clicks. Start your free trial today.


Frequently asked questions

Mistral models are often favored for their directness and "no-fluff" responses, making them ideal for business workflows needing reliable and concise answers without extra conversational filler.

Gemini 1.5 Pro has a clear edge here due to its massive 2-million-token context window, allowing it to ingest and analyze vastly more information than Mistral's current offerings.

Mistral's smaller, efficient models offer competitive pricing for high-volume tasks. Gemini's 1.5 Flash model also provides a very cost-effective option for speed and efficiency.

Mistral AI is well-known for its open-weight models like Mistral 7B and Mixtral, which provide developers with the flexibility to host, modify, and fine-tune them on their own servers.

Gemini often shines in creative tasks, offering a more conversational and thorough style suitable for marketing copy, brainstorming, and generating text that feels nuanced and human.

While both offer APIs, integrating models like Gemini or Mistral effectively often requires significant development. Platforms like eesel AI simplify this by providing an intelligent layer to unify knowledge and launch AI agents quickly, regardless of the underlying model.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.