The world of video editing is about to get very interesting. Adobe recently announced plans to bring third-party AI video generators, including OpenAI's much-hyped Sora, directly into Premiere Pro. If you've ever spent a whole afternoon searching for the right B-roll clip or rotoscoping a coffee cup out of a perfect take, you know what a big deal this could be.

This move is all about mixing traditional video editing with new generative AI, and it’s likely to change how everyone from Hollywood editors to indie filmmakers gets their work done. It's a peek into a future where your editing software doesn't just piece clips together but helps you create them from scratch.

So, let's break down what these integrations actually mean. We'll look at the new features, explore the creative possibilities, and get real about the practical challenges and ethical questions that come with technology this powerful.

What are the new AI video tools in Premiere Pro?

For a long time, Adobe Premiere Pro has been the tool of choice for professional video editing. It’s an industry standard for good reason, but it's about to get its biggest update in years. The main change is the introduction of generative AI video models right inside the app.

The one getting all the attention is OpenAI's Sora, an AI model that can create surprisingly realistic, minute-long videos from a simple text description. The demos have been pretty wild, showing everything from photorealistic drone footage of Big Sur to a woman walking through a neon-soaked Tokyo street. Putting that power inside Premiere Pro means you could generate footage without ever leaving your project timeline.

But Adobe isn't just betting on Sora. They're also looking at integrations with other popular AI video tools like Runway ML and Pika Labs. This is a clever approach because each model has different strengths. It's like having a full kit of specialized lenses instead of just one do-it-all zoom. Adobe seems focused on giving editors options so they can choose the right AI for the task at hand.

To top it off, Adobe is also working on its own model, Firefly for Video. This will handle some features directly within Premiere Pro, working alongside the other integrations to build out a full, AI-assisted editing suite.

How these integrations will change video editing

Okay, so what will this look like when you’re actually sitting at your desk? Adobe has shown off a few upcoming features that could really change the daily grind of a video editor.

Generate B-roll and extend clips

One of the biggest time-sinks in editing is sourcing B-roll, that extra footage that adds context and keeps things visually interesting. With these new tools, you could generate it right in your timeline. Need a quick shot of a busy city street or a quiet forest? Instead of digging through stock footage libraries, you could just type what you need and let Sora or Runway create a custom clip.

Adobe's own Firefly model is behind another handy feature called "Generative Extend." We've all been there: a great take is just a few frames too short to work with the edit's pacing. Generative Extend will let you add frames to the beginning or end of a clip, giving you that extra bit of room you need to nail a transition or let a shot breathe. As one filmmaker mentioned in a VentureBeat article, this is the kind of AI that will just make everyone's job a little easier.

Add, remove, and change objects in your shot

If you’ve used Photoshop’s Generative Fill, you know how it feels to circle an object and have AI remove or replace it. That same concept is coming to video. The new tools will be able to identify objects such as props, people, or a boom mic that dipped into frame, and track them across an entire clip.

From there, you can use text prompts to add, remove, or alter those objects. Think about changing a character’s shirt color throughout a scene with one command or getting rid of a distracting sign in the background without hours of manual masking. This could save a ton of time in post-production and salvage shots that might have otherwise required an expensive reshoot.

Keep your entire workflow in one place

The real benefit here is getting all these tools to work together in one application. The goal is to ditch the awkward process of creating a clip in one app, exporting it, importing it into your editor, and then trying to make it fit. By putting these models inside Premiere Pro, Adobe is aiming for a fluid workflow where your real footage, AI-generated clips, and graphics all exist on the same timeline.

- Old workflow: generate a clip in an external app → export the video → import it into Premiere Pro → edit and adjust.
- New integrated workflow: generate a clip directly in the Premiere Pro timeline → edit immediately.

This approach means your creative flow isn't constantly interrupted by technical hurdles. You can experiment, try different ideas, and blend different types of media together in real-time, letting you focus more on the story you're trying to tell.

The creative impact on video production

These new tools aren't just about speeding up old tasks; they open the door to completely new creative ideas. The effects will likely be felt across the board, from individual creators to large film studios.

More power for solo creators and indie filmmakers

For years, high-quality visual effects were reserved for productions with big budgets. Generative AI is starting to change that. A solo YouTuber or an indie filmmaker could soon create a shot of a futuristic city or a fantasy creature without a huge budget or a team of VFX artists.

A more efficient workflow for professional studios

For bigger studios, the main upside is speed and efficiency. Instead of building detailed 3D models for pre-visualization, a director could generate mock-ups of entire scenes in a matter of minutes. B-roll could be created as needed, saving time on location scouting and shooting.

In post-production, these tools could fix small continuity errors, extend shots to perfect the timing, or add small environmental details that weren't captured on set. It’s all about spending less time and money on tedious work so more resources can go toward the creative side of filmmaking.

Blurring the lines between real and generated

Practical benefits aside, these integrations unlock a whole new world of artistic expression. Editors will be able to blend real-world footage with surreal, AI-generated environments. They could animate static objects in a scene, create impossible camera moves, or build entire worlds from scratch. The line between what was shot with a camera and what was generated by an AI is getting thinner, giving storytellers a completely new set of tools to play with.

Practical challenges and ethical questions

Of course, this shiny new tech isn't without its problems. It's still early days, and there are some real practical and ethical hurdles to consider.

The learning curve and quality control

Anyone who has played around with AI image generators knows that getting a good result is more than just typing a single sentence. As some users on a fanediting forum noted, generative AI isn't a "one-button" fix. It requires a new skill: writing effective prompts. Editors will have to learn how to clearly communicate their vision to the AI.

Even with a perfect prompt, AI models can still produce strange results, like hands with six fingers, objects that bend in weird ways, or blurry artifacts. Editors will still need a sharp eye to catch these mistakes and the skills to fix them. A solid grasp of composition, lighting, and color grading will be more important than ever to make sure AI-generated clips actually match the rest of the project.

The misinformation and deepfake problem

With this much power comes a lot of responsibility. The ability to easily create realistic fake videos is exciting for filmmakers but worrying when you think about misinformation, a concern raised on Reddit about releasing Sora in the run-up to major elections.

Adobe knows this is a risk and is relying on its Content Credentials initiative to encourage transparency. This technology basically adds a "nutrition label" to digital content, showing how it was made and which AI models were involved. It’s a good step, but it won’t solve the problem on its own.

A quick look at the integrated AI models

While Sora is getting most of the buzz, it helps to remember that Runway and Pika have their own unique advantages. Here’s a quick rundown of what makes each one different:

- OpenAI Sora: This is the one to watch for high-fidelity, cinematic realism. It's designed to create longer clips (up to 60 seconds) that look and feel like they were shot with a real camera, making it a good fit for realistic B-roll and complex scene mock-ups. It's not publicly available yet.
- Runway Gen-2: Runway's strength lies in its creative controls. It offers powerful effects for camera motion and style, which is great for stylized animations, music videos, or adding dynamic movement to still images. It's already available to the public.
- Pika 1.0: Pika is known for being user-friendly and versatile. It has some neat features like lip-syncing and is great for generating quick videos for social media, creating concept art, or making animated shorts. It's also publicly available.

| Feature | OpenAI Sora | Runway Gen-2 | Pika 1.0 |
|---|---|---|---|
| Best for | Cinematic realism | Creative controls & effects | User-friendliness & versatility |
| Max clip length | Up to 60 seconds | Shorter clips | Shorter clips |
| Key strength | High-fidelity, realistic video | Camera motion & style effects | Lip-syncing, social media clips |
| Availability | Not yet public | Publicly available | Publicly available |

Figuring out the cost

To get your hands on these new AI features, you'll first need an Adobe Premiere Pro subscription. Here's a look at the current pricing:

- Premiere Pro: Starts at US$22.99/mo (when billed annually).
- Creative Cloud All Apps: Starts at US$69.99/mo (when billed annually) and gets you Premiere Pro, Photoshop, After Effects, and more than 20 other apps.

The bigger unknown is what it will cost to use the AI models. Sora isn't public, and OpenAI hasn't said anything about pricing. Other tools like Runway use a credit-based system on top of their monthly fees. It’s very likely that using these generative features inside Premiere Pro will come with extra costs, either through credits or a more expensive subscription plan.


A new chapter for video creation

Bringing Sora, Runway, and Pika into Adobe Premiere Pro is more than just a software update; it marks a real shift in how we think about making videos. It points to a future where technical hurdles are less of a roadblock to creativity, and where efficiency and artistic vision can coexist more easily.

While the possibilities are exciting, it’s good to stay grounded. Editors will need to adapt by learning new skills and thinking through the ethical issues that come with this tech. The costs are still a question mark, and the output isn't always perfect. But one thing seems certain: video editing is changing for good.

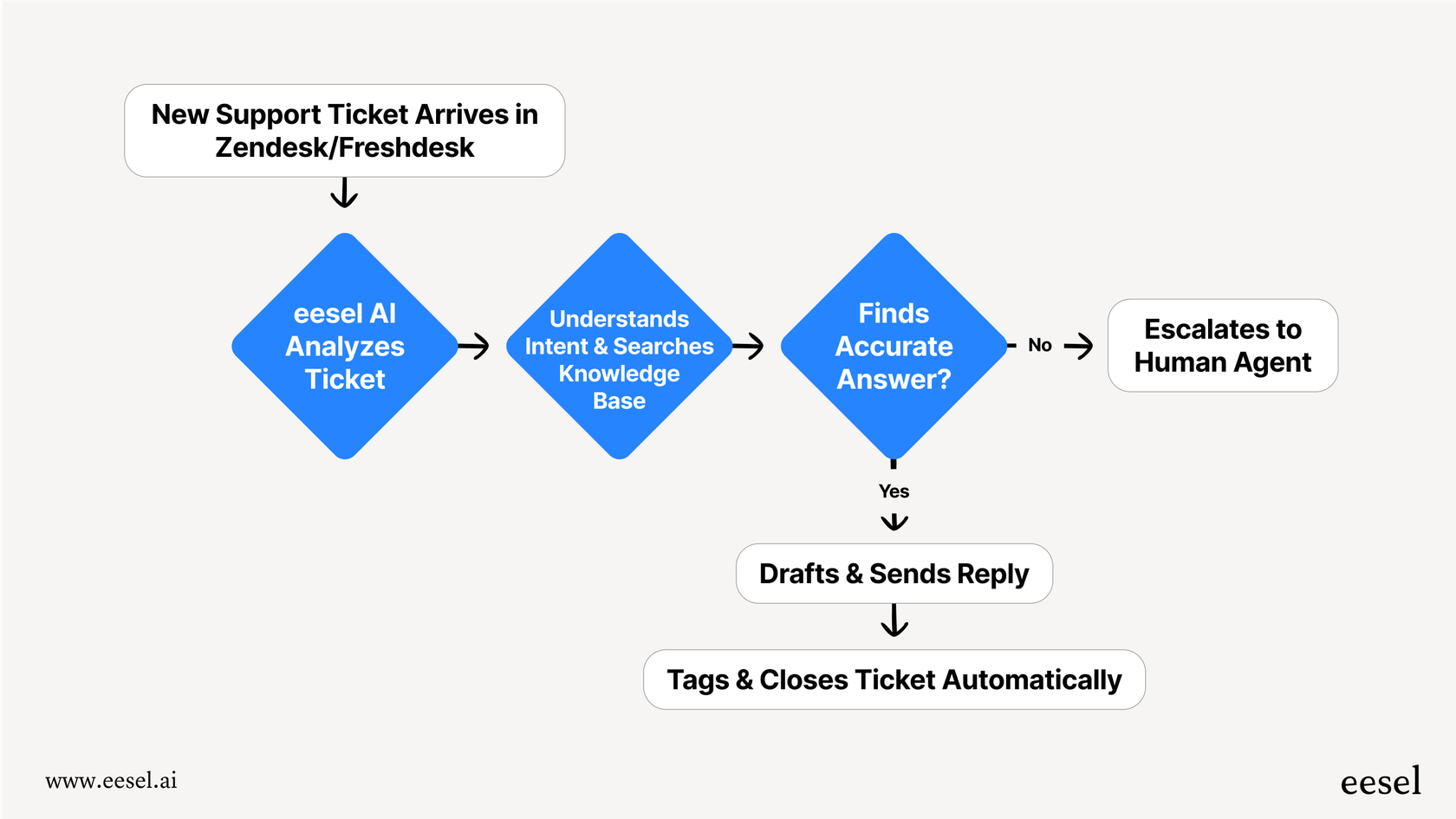

This is all part of a bigger trend of powerful AI being built directly into the tools professionals use every day to handle tedious work and open up new possibilities. Adobe is doing it for video editing, and other platforms are doing it for different fields. For customer support teams, the goal isn't generating video, but delivering fast, accurate, and personal help across thousands of different conversations.

Just as video editors need a platform that’s both powerful and easy to use, so do support teams. If you're looking to bring that same kind of AI-driven efficiency to your customer service, without a complicated setup or unpredictable costs, check out how eesel AI works with your existing helpdesk to automate support, help agents, and bring all your knowledge together.

Frequently asked questions

What new AI features are coming to Premiere Pro?

You can expect features like generating custom B-roll footage, extending clips to adjust timing, and the ability to add, remove, or change objects within your shots using text prompts. These tools aim to streamline your editing process and keep your workflow entirely within Premiere Pro.

Will I need to pay extra to use these AI features?

You'll first need an Adobe Premiere Pro subscription. It is highly likely that using the generative AI features will incur additional usage-based fees or require credits for models like Sora, on top of your existing subscription cost.

What are the main challenges and ethical concerns?

Practical challenges include a learning curve for writing effective AI prompts and maintaining quality control over generated content to avoid artifacts. Ethically, there are significant concerns about misinformation and deepfakes, which Adobe is addressing with its Content Credentials initiative.

Will editors need to learn new skills?

Yes, mastering effective prompt writing will be crucial for guiding the AI to produce desired results. A strong understanding of core video editing principles like composition, lighting, and color grading will also be vital to seamlessly integrate AI-generated content.

Which AI models besides Sora are coming to Premiere Pro?

Adobe is also integrating other popular AI video tools such as Runway ML and Pika Labs, each offering distinct creative strengths. Additionally, Adobe is developing its own model, Firefly for Video, to enhance the AI-assisted editing suite.

How will this affect solo creators and indie filmmakers?

These integrations will democratize access to high-quality visual effects, allowing creators to generate complex shots or environments without needing large budgets or extensive VFX teams. This efficiency frees up resources, enabling a greater focus on creative storytelling.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.