A simple guide on how AI content detectors work

Kenneth Pangan

Stanley Nicholas

Last edited January 27, 2026

Expert Verified

With experts predicting that 90% of online content could be AI-generated by 2026, figuring out the difference between human and machine writing is becoming a pretty important skill. From universities to publishing houses, lots of people are turning to AI content detectors for answers. But what’s actually going on under the hood? How do these tools try to tell if a text was written by an algorithm?

This guide will break down the mechanics of AI content detectors. We’ll walk through the techniques they use, uncover their surprising (and pretty significant) limitations, and look at what’s next with technologies like AI watermarking. We'll also explain how focusing on high-quality content with tools like the eesel AI blog writer can help you create valuable articles that connect with readers, making the whole detection debate less of a concern.

The basics of how AI content detectors work

AI content detectors are tools built to estimate the probability that a piece of text was created by a generative AI model like ChatGPT. Unlike plagiarism checkers, which scan for copied content, AI detectors analyze the writing itself. They look at patterns, structure, and word choice to identify the subtle fingerprints left by a machine.

Their main goal is to bring some transparency to a world that's getting more and more crowded with AI-generated text. They’re used all over the place:

- Education: To maintain academic integrity and make sure student work reflects their own thinking.

- Publishing and media: To fight misinformation and verify that articles are written by humans.

- SEO and content marketing: To avoid publishing stuff that search engines might flag as low-quality, automated content.

- Recruitment: To check that job application materials are actually written by the candidates themselves.

It's really important to know the difference between AI detection and plagiarism checking. They both look at content originality, but they work in completely different ways.

| | AI detectors | Plagiarism checkers |
|---|---|---|
| Purpose | Estimates if text was AI-generated | Checks if text matches existing sources |
| How it works | Analyzes linguistic patterns and predictability | Compares text against a database of published content |
| Focus | Statistical characteristics of the writing style | Directly copied or improperly cited content |
| Result | A probability score (e.g., "95% likely AI-generated") | A similarity report with links to sources |

The core mechanics of AI content detection

AI detectors aren't magic; they run on machine learning models and natural language processing (NLP). These systems are trained on huge datasets packed with millions of examples of both human-written and AI-generated text. This training helps them learn the subtle differences between the two, but the methods they use have some serious flaws.

Perplexity and burstiness: Traditional analysis methods

Two of the most common ideas in AI detection are "perplexity" and "burstiness." They basically measure how predictable and varied a text is.

- Perplexity measures how predictable or surprising a text is. AI models are trained to predict the next most likely word in a sentence, which often leads to writing that's smooth and logical, but also very predictable. Human writing usually has more creative and unexpected word choices, giving it a higher perplexity. A low perplexity score is often seen as a red flag for AI-generated content.

- Burstiness is all about the variation in sentence length and structure. Humans naturally write with a certain rhythm, mixing short, punchy sentences with longer, more complex ones. AI-generated text often lacks this dynamic and tends to stick to sentences of a similar length, which gives it low burstiness.
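To make the two metrics a bit more concrete, here's a minimal Python sketch. It rests on some big assumptions: real detectors score text with a large neural language model, while this toy version uses a simple word-frequency (unigram) model with add-one smoothing, and it treats burstiness as the coefficient of variation of sentence lengths. The function names are made up for illustration and don't come from any particular detector.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under a unigram model fitted on `reference_text`.

    Assumption: a word-frequency model stands in for a real language model.
    Add-one smoothing keeps unseen words from getting zero probability.
    """
    ref_counts = Counter(tokenize(reference_text))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no word tokens")
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = 0.0
    for tok in tokens:
        log_prob += math.log((ref_counts[tok] + 1) / (total + vocab_size))
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Coefficient of variation of sentence lengths, measured in words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)
```

In this sketch, a text made of three four-word sentences scores a burstiness of zero, while a text that mixes one-word and sixteen-word sentences scores well above it. Likewise, text that closely matches the reference corpus gets a much lower perplexity than text full of words the model has never seen.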

The flaws of perplexity and burstiness

While these metrics sound good in theory, they're not very reliable in the real world. According to Pangram Labs, detectors that rely on these metrics fail for a few big reasons:

- They flag classic human writing as AI: Since AI models are trained to keep perplexity low on their training data, famous historical documents like the Declaration of Independence often get misclassified as AI-generated because they show up so much in the training sets.

- They are biased against certain writers: These detectors often show bias against non-native speakers, neurodivergent individuals, or just anyone with a more structured writing style, since their writing can naturally have lower perplexity.

- Results are inconsistent across models: Perplexity is relative to the language model doing the detecting. A text might have low perplexity for GPT-4 but high perplexity for Claude, which means you get inconsistent and unreliable results.
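The first and third points above can be seen in a toy example. This is only a sketch under loud assumptions: two tiny word-frequency models, fitted on made-up corpora, stand in for real language models (they are not GPT-4 or Claude), and the names `fit_unigram`, `legal_model`, and `chat_model` are hypothetical.

```python
import math
import re
from collections import Counter


def fit_unigram(corpus):
    """Return word counts that act as a toy 'language model'."""
    return Counter(re.findall(r"[a-z']+", corpus.lower()))


def perplexity(text, model):
    """Perplexity of `text` under a unigram count model (add-one smoothing)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        raise ValueError("text has no word tokens")
    vocab_size = len(set(model) | set(tokens))
    total = sum(model.values())
    avg_neg_log_prob = -sum(
        math.log((model[t] + 1) / (total + vocab_size)) for t in tokens
    ) / len(tokens)
    return math.exp(avg_neg_log_prob)


# Two hypothetical scoring models fitted on different, made-up corpora.
legal_model = fit_unigram("the court finds that the party shall pay the fee " * 50)
chat_model = fit_unigram("hey so yeah that was fun lol see you later " * 50)

# The same human-written sentence, scored by each model.
sample = "the party shall pay the fee"
score_legal = perplexity(sample, legal_model)
score_chat = perplexity(sample, chat_model)

print(f"legal model: {score_legal:.1f}, chat model: {score_chat:.1f}")
```

The sentence looks highly predictable to the model that has seen similar text many times and highly surprising to the one that hasn't. A detector built on the first model would lean toward "AI-generated" and one built on the second would not, even though the text is identical.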

Proactive watermarking: The future of AI detection

A much more robust and reliable method is AI watermarking. This technique involves embedding an invisible, statistical signature directly into AI-generated text as it's being created.

According to a report from the UK's National Centre for AI, here’s how it works:

- The AI model uses a secret key to split its potential next-word choices into two groups (think of it as a "green list" and a "red list").

- It then subtly favors words from one group over the other. The change is so tiny that it doesn't mess with the quality of the text.

- Across a whole document, this creates a pattern that can be detected. A detection tool that has the same secret key can analyze the text and find the watermark with a high degree of confidence.
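The three steps above can be sketched in a few lines of Python. Treat this as a simplified illustration, not how SynthID or any production system actually works: the 16-word vocabulary, the random "model" that picks words, the `green_bias` parameter, and all function names are assumptions made up for this example. Real schemes nudge the probabilities of a neural model over tens of thousands of tokens.

```python
import hashlib
import math
import random

# Hypothetical toy vocabulary standing in for a model's token set.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "under", "mat", "tree",
         "quickly", "slowly", "and", "then", "big", "small"]


def green_list(secret_key, prev_token):
    """Split VOCAB in half using a keyed hash of the previous token.

    Without the key the split looks random; with it, anyone can recompute it.
    """
    def rank(token):
        data = f"{secret_key}|{prev_token}|{token}".encode()
        return hashlib.sha256(data).hexdigest()

    ordered = sorted(VOCAB, key=rank)
    return set(ordered[: len(VOCAB) // 2])


def generate(secret_key, length, green_bias=0.0, seed=0):
    """Pick words at random; with probability `green_bias`, pick only from
    the green list for the current context. A bias of 0 means no watermark."""
    rng = random.Random(seed)
    tokens = []
    prev = "<start>"
    for _ in range(length):
        pool = VOCAB
        if rng.random() < green_bias:
            pool = sorted(green_list(secret_key, prev))
        prev = rng.choice(pool)
        tokens.append(prev)
    return tokens


def watermark_z_score(secret_key, tokens):
    """How far the green-word count sits above the 50% expected by chance."""
    if not tokens:
        raise ValueError("need at least one token")
    prev = "<start>"
    green_hits = 0
    for tok in tokens:
        if tok in green_list(secret_key, prev):
            green_hits += 1
        prev = tok
    n = len(tokens)
    return (green_hits - 0.5 * n) / math.sqrt(0.25 * n)
```

With the right key, a few hundred watermarked words should produce a z-score far above what chance would give, while unwatermarked text should hover around zero. Notice that the detector never judges writing style. It only counts how often the text lands on the green list.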

Major companies like Google are already trying this out with SynthID in their Gemini model. Because this method doesn't rely on subjective writing styles, it's way less biased and much harder to fool with simple edits.

Limitations of AI content detectors

While AI detectors are getting better, they are far from perfect. It’s really important to understand their limitations so you can use them responsibly and avoid making unfair judgments based on what they spit out.

The problem of false positives and bias

No AI detector is 100% accurate. The biggest issue is false positives, where human-written content is mistakenly flagged as being AI-generated.

- High error rates: One study found that while some detectors are improving, others wrongfully flagged up to 30.4% of human-written academic abstracts as AI-generated. Even Turnitin, which claimed a false positive rate of less than 1%, was found by the Washington Post to have a higher rate in a small test.

- Systemic bias: These tools are often biased against non-native English speakers, whose writing patterns can look a lot like AI. Studies show that Black students are more likely to be falsely accused of using AI compared to their white peers.
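A quick back-of-the-envelope calculation shows why even a small false positive rate matters at scale. The sketch below assumes each human-written text is flagged independently at the same rate, which is a simplification (the bias described above means some writers are flagged more often than others). The function names and the class size of 200 are hypothetical.

```python
def expected_false_flags(num_human_texts, false_positive_rate):
    """Average number of human-written texts wrongly flagged as AI."""
    return num_human_texts * false_positive_rate


def chance_of_any_false_flag(num_human_texts, false_positive_rate):
    """Probability that at least one human-written text is wrongly flagged,
    assuming every text is flagged independently at the same rate."""
    return 1 - (1 - false_positive_rate) ** num_human_texts


# Hypothetical scenario: 200 essays, all written by humans.
for rate in (0.01, 0.304):
    flagged = expected_false_flags(200, rate)
    any_flag = chance_of_any_false_flag(200, rate)
    print(f"rate {rate:.1%}: about {flagged:.1f} wrongly flagged, "
          f"{any_flag:.1%} chance of at least one")
```

Under these assumptions, a 1% false positive rate still means around two wrongly flagged essays out of 200, and a rate of 30.4% means roughly 61.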

The cat-and-mouse game: Detectors versus advanced AI

The world of generative AI is moving incredibly fast. As AI models produce more and more human-like text, detectors are always playing catch-up.

- Evasion is simple: It's easy for users to get around detectors by paraphrasing text, throwing in personal stories, or using "AI humanizer" tools. One expert noted she could fool detectors 80-90% of the time just by adding the word "cheeky" to her prompt.

- Watermarking isn't a silver bullet (yet): While it's a promising idea, watermarking has some adoption hurdles. A 2026 study found that only 38% of AI image generators had adequate watermarking, and there will always be a market for models that don't have it.

Probabilities, not proof: A key detail

It's crucial to remember that AI detectors give you a probability, not definitive proof. A score of "80% likely AI" just means the text shares characteristics with AI-generated content in the detector's training data, not that a machine definitely wrote it. Their results should be a starting point for a conversation, not a final verdict. This is especially true as legal frameworks like the EU AI Act, whose transparency rules for AI-generated content are set to apply from August 2026, start to require more disclosure.
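One way to see why a flag isn't proof is to run the numbers with Bayes' rule. The sketch below is purely illustrative: the function name is made up, and the prior, detection rate, and false positive rate are hypothetical inputs, not measurements from any real detector.

```python
def probability_ai_given_flag(prior_ai, true_positive_rate, false_positive_rate):
    """Bayes' rule: chance a flagged text is really AI-written.

    prior_ai            -- assumed share of AI-written texts before checking
    true_positive_rate  -- assumed share of AI texts the detector flags
    false_positive_rate -- assumed share of human texts the detector flags
    """
    flagged_ai = true_positive_rate * prior_ai
    flagged_human = false_positive_rate * (1 - prior_ai)
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical inputs: 10% of submissions are AI-written, the detector
# catches 90% of them, and it wrongly flags 5% of human-written texts.
posterior = probability_ai_given_flag(0.10, 0.90, 0.05)
print(f"{posterior:.0%} of flagged texts are actually AI-written")
```

With these made-up inputs, only about two in three flagged texts would actually be AI-written, so one in three flags would land on a human author. Change the assumed prior and the answer shifts a lot, which is exactly why a score on its own can't settle the question.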

Practical applications and best practices

Despite their flaws, AI detectors can still be useful when they're used the right way. The key is understanding who uses them and how to apply them responsibly.

Who uses AI detectors and why

A wide range of professionals rely on AI detectors to maintain standards. Educators use them to check for academic dishonesty, publishers to protect against AI-generated spam, and recruiters to make sure cover letters are authentic. In the world of SEO and content marketing, teams use them to ensure their content doesn't get flagged by search engines as low-quality.

How to use them responsibly

To use these tools effectively, just follow a few best practices:

- Acknowledge limitations: Treat the results as one piece of information among many, not as concrete evidence.

- Use multiple tools: Since research shows different tools have different accuracy levels, checking a text with multiple tools can give you a more balanced view. One study noted that GPTZero performed better than others in its test set, which just goes to show that your choice of tool matters.

- Combine with human judgment: Always review the flagged content yourself. The detector's score should trigger a closer look, not an immediate conclusion.

- Focus on the substance, not just the origin: Instead of asking "Was this written by AI?", it can be more helpful to ask, "Does this content show original thought and provide real value?"
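If you wanted to turn these practices into a simple workflow, it might look something like the sketch below. Everything here is an assumption for illustration: the tool names, the thresholds, and the `triage` function are hypothetical, and the scores would come from whichever detectors you actually use. The one deliberate design choice is that the function never returns a verdict of "AI-written". The strongest outcome is a request for a human to take a closer look.

```python
from statistics import median


def triage(scores, review_threshold=0.8, max_spread=0.3):
    """Combine several detectors' 'likely AI' scores (0 to 1) into a next step.

    scores           -- dict mapping a tool name to its score
    review_threshold -- median score at or above which a human should review
    max_spread       -- gap between tools beyond which results are inconclusive
    """
    if not scores:
        raise ValueError("need at least one detector score")
    values = list(scores.values())
    if max(values) - min(values) > max_spread:
        return "tools disagree: treat scores as inconclusive"
    if median(values) >= review_threshold:
        return "flag for human review"
    return "no action"


# Hypothetical scores from three unnamed tools.
print(triage({"tool_a": 0.90, "tool_b": 0.85, "tool_c": 0.95}))
print(triage({"tool_a": 0.95, "tool_b": 0.20}))
```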

Focus on quality: A better approach to content creation

Instead of getting caught up in trying to "beat" AI detectors, the real goal should be to produce high-quality, valuable content that search engines want to rank and that humans actually want to read. When your content is deeply researched, well-structured, and written in a natural, engaging tone, the whole "AI or not" question becomes much less important.

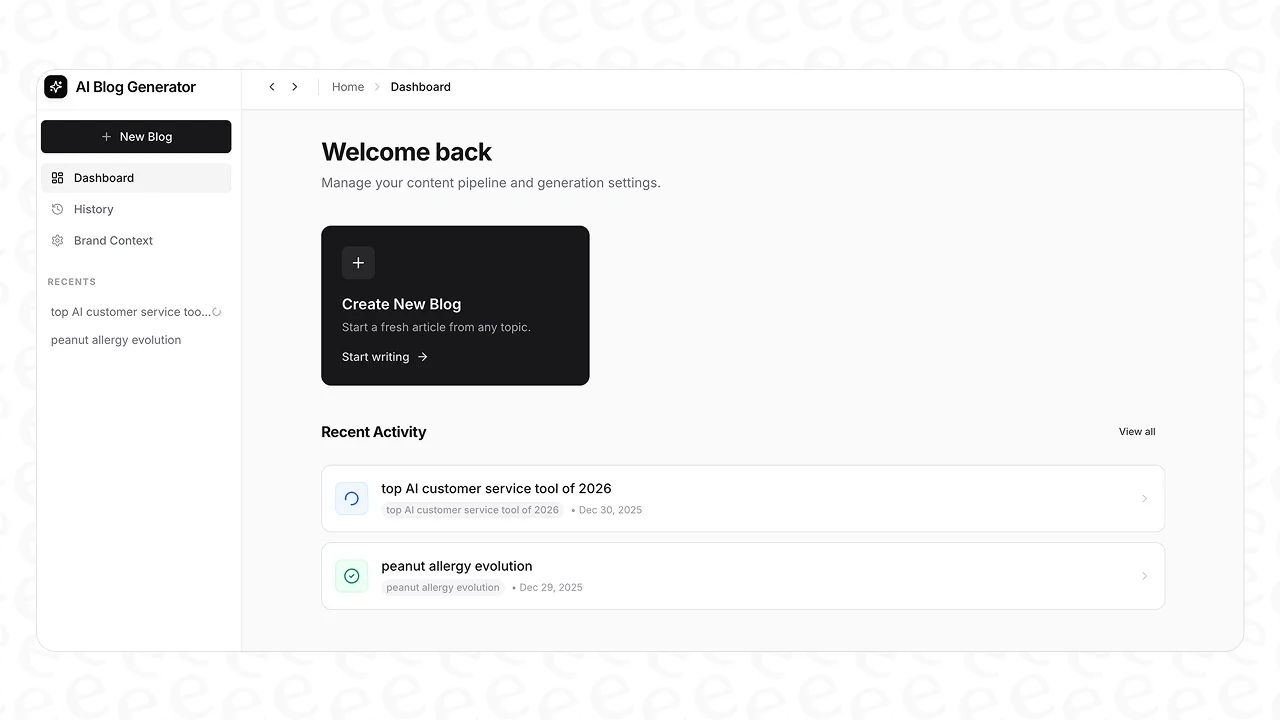

This is where advanced tools like the eesel AI blog writer come in. It’s designed to go way beyond the generic, low-perplexity text that many AI tools produce and that detectors easily flag. By focusing on quality and deep research, it helps you create content that stands on its own.

Here’s how it pulls this off:

- Genuinely human tone: The model has been fine-tuned to avoid robotic phrasing and create content people actually enjoy reading.

- Deep research with citations: It automatically researches your topic and includes citations, adding a layer of authority that generic tools just can't match.

- Automatic assets and social proof: By integrating AI-generated images, infographics, and real quotes from Reddit, it adds layers of human-like curation and variation that boost the overall quality of the post.

The proof is in the results. At eesel, we used our own tool to grow our blog's search traffic from 700 to 750,000 impressions per day in just three months. You can try it for free and see the difference for yourself.

To get a deeper visual understanding of the mechanics and unreliability of these tools, the video below offers a great explanation of how AI checkers actually work and what you can do to protect yourself from false flags.

A video explaining the technical details of how AI content detectors work and discussing their inherent limitations and unreliability.

Final thoughts on AI content detection

AI content detectors are a complex and rapidly changing technology. By analyzing linguistic patterns or looking for invisible watermarks, they offer a glimpse into a text's likely origin. But today's methods aren't perfect. They're prone to errors and biases, and their results should always be used as a guide, not a final judgment. Human oversight is still essential.

Ultimately, the conversation shouldn't just be about detection, but about creation. As AI tools get better, the focus has to shift towards using them to produce genuinely valuable, well-researched, and engaging content. By prioritizing quality above all else, you can create content that succeeds, no matter how it was made.



Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.