AI-generated content is pretty much everywhere you look these days, and that has led to a boom in tools that claim they can tell human writing from machine writing. But here’s the million-dollar question: can you actually trust them? Honestly, relying on an AI detector often feels like a coin toss. We’ve all seen the horror stories online: students flagged for cheating when they didn’t cheat, and businesses tossing out perfectly good content written by a real person.

This guide is going to dig into the data to answer the big question: how accurate are AI detectors? We'll break down their core flaws, the serious ethical problems they create, and why it makes way more sense to control and verify your own AI rather than trying to police content from the outside.

How do AI detectors work?

An AI detector is a tool designed to analyze a piece of text and guess whether it was written by a large language model (LLM) like ChatGPT or Gemini. You paste your text in, and it gives you a score, usually a percentage, indicating how "AI-like" it thinks the writing is.

Basically, these tools are on the lookout for patterns common in AI writing. Two of the main signals they look for are:

- Perplexity: This is a fancy way of measuring how predictable the word choices are to a language model. LLMs generate text by favoring the words most statistically likely to come next, so AI writing tends to score low on perplexity and can feel a little too smooth and logical.
- Burstiness: This looks at how much sentence length and structure vary. Humans naturally mix it up, pairing long, flowing sentences with short, punchy ones. AI-generated text can feel more uniform and lack that natural rhythm.
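To make the two signals concrete, here is a minimal sketch of their textbook definitions. It assumes you already have the probability a language model assigned to each token (real detectors get these from their own models and combine signals in ways they don't publish), and it uses the coefficient of variation of sentence lengths as one simple proxy for burstiness. The regex sentence splitter and the example inputs are illustrative assumptions, not any vendor's method.

```python
import math
import re
import statistics


def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(average negative log-probability per token).

    Lower values mean the model found the text more predictable.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (in words).

    Assumption: a naive split on ., ! and ? is good enough for illustration.
    Higher values mean sentence lengths vary more.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.stdev(lengths) / statistics.mean(lengths)


# Made-up token probabilities: a confident model vs. an uncertain one.
print(perplexity([0.9, 0.9, 0.9, 0.9]), perplexity([0.1, 0.1, 0.1, 0.1]))
# Uniform sentence lengths vs. a short sentence followed by a long one.
print(burstiness("The cat sat down. The dog ran off. The bird flew away."))
print(burstiness("Stop. The committee reviewed every single proposal before lunch today."))
```

Low perplexity and low burstiness are what detectors tend to read as "AI-like", but plenty of human writing scores that way too, which is exactly where false positives come from.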

While it sounds good in theory, the reality has been a bit of a mess.

The accuracy problem: Why they are unreliable

It's not just a few isolated incidents. One study after another has confirmed what many of us suspected: AI detectors are, as MIT puts it, "neither accurate nor reliable." The issue is so widespread that even OpenAI, the company behind ChatGPT, had to shut down its own detection tool because it just wasn't accurate enough.

The unreliability really comes down to two big problems: false positives and false negatives.

The challenge of false positives

A false positive is when a detector wrongly flags human-written text as being generated by AI. This is where things get really complicated, and the fallout can be pretty serious.

Spend five minutes on Reddit and you'll find plenty of examples. One student mentioned that their teacher ran "Jack and the Beanstalk" through a detector, and it came back with an 80% AI score. Others have found that pasting in text from the Bible, The Lord of the Rings, or even the US Constitution can trigger a high AI score. These tools often can't tell a classic piece of literature from something an AI just produced.

The data backs this up. A study from Stanford University revealed that AI detectors show a significant bias against non-native English writers, frequently flagging their work simply because of different sentence structures and word choices. A Washington Post investigation found that Turnitin, a popular tool in universities, had a much higher rate of false positives than the company claimed.

For businesses and schools, these mistakes are more than just an annoyance. They can lead to unfair punishments, erode trust between managers and their teams, and foster a culture of suspicion.

The problem with false negatives

On the other side of the coin, a false negative is when a detector fails to catch AI-generated content, letting it pass as "human-written." This happens all the time, and it’s a huge reason why relying on detectors is a losing game.
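Both error types fall out of a simple confusion matrix. This is a minimal sketch, assuming you have a small test set where you already know which samples are AI-written; the sample results below are made up for illustration, not taken from any study.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    true_pos: int = 0   # AI text correctly flagged as AI
    false_pos: int = 0  # human text wrongly flagged as AI
    true_neg: int = 0   # human text correctly passed as human
    false_neg: int = 0  # AI text that slipped through as "human"

    def false_positive_rate(self) -> float:
        # Share of genuinely human samples that were wrongly flagged.
        return self.false_pos / (self.false_pos + self.true_neg)

    def false_negative_rate(self) -> float:
        # Share of genuinely AI-written samples the detector missed.
        return self.false_neg / (self.false_neg + self.true_pos)


def tally(is_ai: list[bool], flagged: list[bool]) -> Confusion:
    """Count outcomes; is_ai[i] is the ground truth, flagged[i] the detector's verdict."""
    if len(is_ai) != len(flagged):
        raise ValueError("ground truth and verdicts must be the same length")
    c = Confusion()
    for truth, verdict in zip(is_ai, flagged):
        if truth and verdict:
            c.true_pos += 1
        elif truth:
            c.false_neg += 1
        elif verdict:
            c.false_pos += 1
        else:
            c.true_neg += 1
    return c


# Made-up results: 4 AI-written samples followed by 4 human-written ones.
truth = [True, True, True, True, False, False, False, False]
verdict = [True, True, False, False, True, False, False, False]
result = tally(truth, verdict)
print(result.false_positive_rate(), result.false_negative_rate())  # prints 0.25 0.5
```

A detector can be tuned to push one of these rates down, but usually at the cost of pushing the other one up.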

As soon as AI detectors got popular, "AI humanizer" tools started appearing. These services take AI text and tweak it just enough to fool the detectors, maybe by adding a few grammatical errors or swapping out words to make it less predictable. It's also surprisingly easy to get around them with smart prompting. Just asking an AI to write in a more "human" or "cheeky" style is often enough to fly under the radar.

This has kicked off an endless arms race: LLMs get better, detectors try to adapt, and humanizers find new ways to outsmart them. The detectors are always one step behind, and it’s a race they can’t win.

| AI Detector | Claimed Accuracy | Notable Findings from Research | Source |
|---|---|---|---|
| GPTZero | 99% | Flagged human-written text in a comparative test. | BestColleges.com |
| Originality.ai | 97%+ (varies by model) | Shown to be more accurate in some studies, but still prone to false positives. | Originality.ai |
| Turnitin | 98% | A test showed it can be reasonably accurate but also produced a 50% false positive rate in a small sample. | Washington Post |
| OpenAI Classifier | Discontinued | Pulled by OpenAI due to a "low rate of accuracy." | MIT Sloan EdTech |
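A single "claimed accuracy" figure hides how many flags will be wrong in practice, because that also depends on how much of the checked text is actually AI-written. The sketch below works through the arithmetic under assumed numbers: a detector that catches 99% of AI text and wrongly flags 1% of human text, run over 1,000 documents in which either 10% or 2% are AI-written. None of these figures come from the vendors in the table.

```python
def flag_breakdown(n_docs: int, ai_share: float, catch_rate: float,
                   false_positive_rate: float) -> tuple[float, float, float]:
    """Expected correct flags, false accusations, and share of flags that are wrong."""
    ai_docs = n_docs * ai_share
    human_docs = n_docs - ai_docs
    correct_flags = ai_docs * catch_rate             # AI text the detector catches
    false_flags = human_docs * false_positive_rate   # human text wrongly flagged
    total_flags = correct_flags + false_flags
    wrong_share = false_flags / total_flags if total_flags else 0.0
    return correct_flags, false_flags, wrong_share


# Assumed detector: catches 99% of AI text and wrongly flags 1% of human text.
for ai_share in (0.10, 0.02):
    hits, false_flags, wrong = flag_breakdown(1000, ai_share, 0.99, 0.01)
    print(f"{ai_share:.0%} AI-written: {hits:.1f} correct flags, "
          f"{false_flags:.1f} false accusations, {wrong:.0%} of flags wrong")
```

With 10% AI-written text, about 8% of flagged documents belong to innocent writers; at 2%, roughly a third of all flags are false accusations, even though the detector is "99% accurate" on both AI and human text.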

The ethical risks of AI detectors

The issues with AI detectors run deeper than just poor accuracy. Using them opens up a whole can of ethical worms. Depending on these tools isn't just unreliable; it can be discriminatory and create real fairness problems for your team or organization.

Bias against non-native and neurodiverse writers

Most AI detectors are trained on massive datasets of what's considered "standard" English prose. As a result, they're more likely to flag writing that doesn't fit neatly into that box.

The Stanford study is a prime example. It showed that detectors were far more likely to label essays from non-native English speakers as AI-generated, largely because those essays used simpler, more predictable word choices, which the tools read as a sign of AI. It's a clear case of technology penalizing people for not writing like a native speaker.

This bias can also impact neurodiverse individuals. Writing from people with conditions like autism or ADHD might include patterns, such as repetition or unique sentence structures, that a detector could easily mistake for AI. That means you could be unfairly flagging people based on their natural communication style.

For any organization, that's a huge problem. Using these tools can create an environment that punishes diversity and leads to serious equity issues.

A better approach: Controlling your AI

So, if detectors are a dead end, what's the alternative? The answer is to switch your focus from detection to control. Instead of trying to guess if content came from some random AI on the internet, you should use a trusted AI platform that you can configure, manage, and verify yourself.

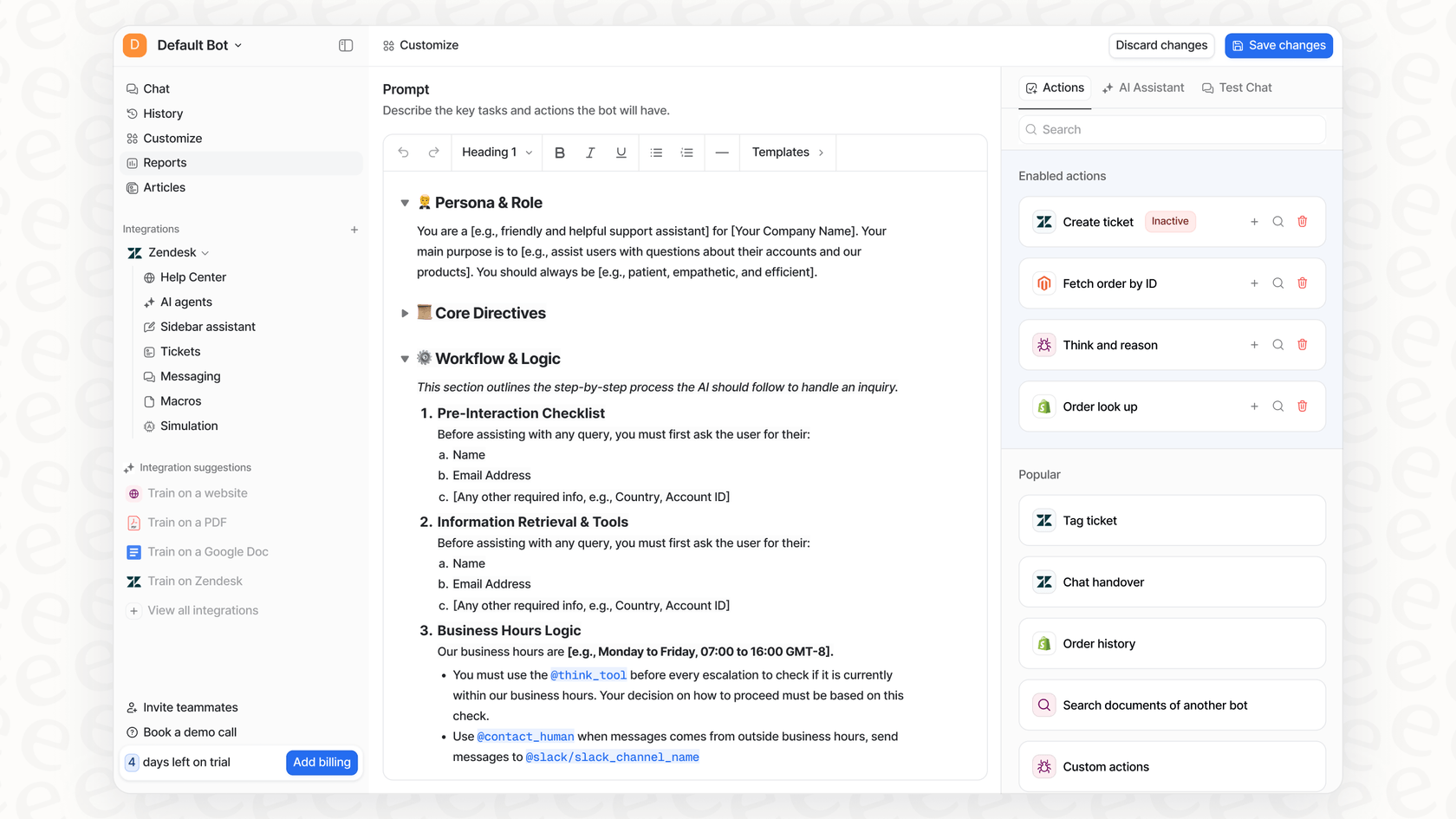

This is where a tool like eesel AI comes in. It’s about moving away from a reactive, policing mindset to a proactive, performance-driven one. You stop asking "is this AI?" and start telling your AI exactly what it needs to do.

Build on your own trusted knowledge

AI detectors make their best guess based on patterns scraped from the entire internet. In contrast, eesel AI works by connecting directly with your company's knowledge. It learns from your Zendesk history, your Confluence pages, your Google Docs, and past support tickets.

The advantage here is enormous. Your AI isn't learning from generic web content; it’s learning your brand's voice, your company's specific solutions, and the unique context of your business. The output isn't some bland, soulless text; it’s a direct reflection of your own team's expertise. When the AI is built on your company's knowledge, the whole question of authenticity goes away, because it’s your AI.

Simulate and test with confidence

One of the most frustrating things about AI detectors is that they're a total black box. You get a score, but you have no idea if it's right. The powerful simulation mode in eesel AI flips that completely.

Before your AI ever talks to a customer or employee, you can test it on thousands of your past tickets in a safe sandbox environment. You can see exactly what it would have said, how it would have tagged the ticket, and whether it would have solved the issue.

This means you don't have to guess about accuracy. You get a precise forecast of its performance and resolution rate before you even switch it on. That kind of risk-free validation is something no public AI detector could ever give you. It replaces the uncertainty of detection with the confidence of verification.
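Testing on past tickets is essentially a backtest: replay history through the AI and score what it would have done. The sketch below is a generic illustration under our own assumptions, not eesel AI's implementation or API. Each historical ticket is assumed to carry the tag your team applied when closing it, and a "bot" is any hypothetical function that returns a tag or `None` to escalate. A real simulation would also compare full replies and resolutions, not just tags.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PastTicket:
    text: str
    resolved_tag: str  # hypothetical field: the tag your team applied when it closed the ticket


# Hypothetical interface: a candidate bot returns the tag it would apply,
# or None when it would escalate the ticket to a human.
Bot = Callable[[str], Optional[str]]


def backtest(bot: Bot, history: list[PastTicket]) -> dict[str, float]:
    """Replay historical tickets through a bot without touching any live customer."""
    if not history:
        return {"automation_rate": 0.0, "accuracy_when_handled": 0.0}
    handled = correct = 0
    for ticket in history:
        prediction = bot(ticket.text)
        if prediction is None:
            continue  # escalated: lowers the automation rate, not the accuracy
        handled += 1
        if prediction == ticket.resolved_tag:
            correct += 1
    return {
        "automation_rate": handled / len(history),
        "accuracy_when_handled": correct / handled if handled else 0.0,
    }


def keyword_bot(text: str) -> Optional[str]:
    # Toy stand-in for a real AI agent: it only claims password questions.
    return "password_reset" if "password" in text.lower() else None


history = [
    PastTicket("I forgot my password", "password_reset"),
    PastTicket("Password reset link expired", "password_reset"),
    PastTicket("Refund for order 123", "refund"),
    PastTicket("My password works but billing is wrong", "billing"),
]
report = backtest(keyword_bot, history)
print(report)
```

Here the toy bot would have handled three of four tickets but mis-tagged the billing one, which is the kind of mistake you want to discover in a replay rather than in front of a customer.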

Total control over workflows and actions

An AI detector gives you one piece of information, and it's often wrong. A platform like eesel AI gives you a fully customizable workflow engine. You're the one in control.

A few features that put you in charge include:

- Selective Automation: You decide exactly which types of tickets the AI handles. You can start small with simple, common questions and have it escalate anything more complex to a human agent.
- Custom Persona & Actions: You can define the AI's tone of voice and tell it exactly what it's allowed to do, whether that's escalating a ticket, looking up an order in Shopify, or adding a specific tag. This level of control makes sure the AI works as a true extension of your team, not some unpredictable tool you can't trust.
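Selective automation and restricted actions boil down to an explicit, reviewable policy. The sketch below is a hypothetical, generic version and not eesel AI's configuration format or API; the category names, the 0.8 confidence threshold, and the action names are assumptions for illustration. Keeping the rules in one immutable object makes it easy to audit exactly what the AI may do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationPolicy:
    # Ticket categories the AI may answer on its own (hypothetical labels).
    allowed_categories: frozenset[str]
    # Minimum classifier confidence before the AI acts without a human (assumed value).
    min_confidence: float = 0.8
    # Actions the AI is permitted to take; anything else is refused.
    allowed_actions: frozenset[str] = frozenset({"reply", "add_tag", "lookup_order", "escalate"})

    def route(self, category: str, confidence: float) -> str:
        """Return "ai" only for allowed categories above the threshold, else "human"."""
        if category in self.allowed_categories and confidence >= self.min_confidence:
            return "ai"
        return "human"

    def may_perform(self, action: str) -> bool:
        return action in self.allowed_actions


policy = AutomationPolicy(allowed_categories=frozenset({"password_reset", "shipping_status"}))
print(policy.route("password_reset", 0.93))  # prints ai
print(policy.route("password_reset", 0.55))  # prints human (not confident enough)
print(policy.route("refund_dispute", 0.99))  # prints human (category not allowed)
print(policy.may_perform("issue_refund"))    # prints False
```

Starting with a short allowlist and widening it as the AI proves itself mirrors the "start small" approach described above.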

AI detector pricing: What are you paying for?

When you look at how AI detectors are priced, you'll see they often use credit systems or charge per word scanned. You're paying for a guess, which is a fundamentally different value proposition than an AI automation platform.

| Tool | Pricing Model | Key Limitation |
|---|---|---|
| Originality.ai | Pay-as-you-go ($30 for 30k credits) or monthly subscription ($14.95/mo for 2k credits). | The cost is tied to how much you scan, not the value you get. You're paying to check content, not to improve it. |
| GPTZero | Free tier (10k words/mo), paid plans start at ~$10/mo (billed annually) for more words and features. | You're paying to check for AI, not to solve the underlying problem with your workflows or knowledge. |
| eesel AI | Tiered plans (starting at $239/mo) based on features and monthly AI interactions. No per-resolution fees. | Your cost is predictable and includes a full suite of AI tools (Agent, Copilot, Triage) designed to automate work, not just police it. |

The key takeaway is pretty simple: with detectors, you're paying for a probability score with no guarantee of accuracy. With a platform like eesel AI, you’re investing in a complete automation solution with a predictable, transparent cost that delivers real results.

Stop detecting, start directing

The bottom line is that AI detectors are inaccurate, biased, and ultimately a distraction. Trying to play "catch the AI" is a losing game in an arms race that's only getting faster.

The most effective, ethical, and practical path forward isn't to chase detection but to embrace controlled automation. It's time to stop guessing and start directing. By giving your teams reliable, transparent AI tools built on your own knowledge, you can move past the fear and uncertainty and actually use AI to make your business better.

Instead of pouring time and money into flawed detectors, invest in an AI platform you can trust and verify. See how eesel AI gives you the confidence to automate support by simulating its performance on your own data. Start your free trial or book a demo to learn more.

Frequently asked questions

How accurate are AI detectors?

AI detectors are generally unreliable and inaccurate, with studies showing they frequently make mistakes. Even major AI developers have acknowledged their low accuracy rates and limitations.

What are the main errors AI detectors make?

The main errors are false positives (flagging human-written text as AI) and false negatives (failing to detect AI-generated content). Detectors struggle with both, leading to significant unreliability.

What ethical concerns do AI detectors raise?

Ethical concerns include bias against non-native English speakers and neurodiverse writers, whose unique styles are often wrongly flagged as AI. This creates fairness issues and can lead to unfair punishments.

Was OpenAI's detector the only one with accuracy problems?

Research indicates that many AI detectors from various companies also suffer from significant accuracy problems, particularly high false positive rates. OpenAI's decision highlights the inherent difficulty in building consistently reliable detection.

What is a more reliable alternative to AI detectors?

A more reliable approach is to shift from detection to control by using trusted AI platforms. These platforms allow you to manage and verify your own AI, ensuring it's built on your specific knowledge and operates within defined parameters.

What is the long-term outlook for AI detectors?

The long-term outlook for AI detectors is challenging. They are caught in an "arms race" with evolving AI models and "humanizer" tools, often remaining one step behind and struggling to maintain consistent accuracy.

How does the cost of an AI detector compare with an AI platform?

AI detectors offer a probability score with no accuracy guarantee, meaning you're paying to guess. In contrast, platforms like eesel AI provide a complete automation solution with predictable costs, delivering tangible results and verified performance.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.