Trying to choose the right Large Language Model (LLM) for your business can feel like you're staring at a wall of specs, benchmarks, and marketing jargon. On one side, you have OpenAI’s GPT-4 Turbo, the big name everyone knows for its sheer power. On the other, you have Mistral, a seriously impressive European challenger that's turning heads with its performance and much friendlier price tag.

So, which one is actually better for the stuff you need to get done, like handling customer service or building an internal helpdesk? This guide cuts through the noise of the GPT-4 Turbo vs Mistral debate. We're going to skip the purely technical stuff and focus on what really matters: how they perform in the real world, how much they cost, and what it actually takes to get them working for you. We’ll look at where each model shines and uncover why the model you pick is really only half the story.

What is GPT-4 Turbo?

GPT-4 Turbo is the top-of-the-line model from OpenAI, the folks behind ChatGPT. Think of it as the most advanced tool in their publicly available toolbox, built for chewing on complex problems, thinking through tricky situations, and writing high-quality text that sounds like it came from a person. It’s a closed-source model, so you typically access it through an API from OpenAI or Microsoft Azure.

Businesses usually reach for GPT-4 Turbo when they need something done with high accuracy and a deep understanding of nuance. We're talking about tasks like drafting dense technical documents, digging through data for insights, or powering a chatbot that can handle complicated conversations. Its main strengths are its massive pool of general knowledge and its knack for following detailed, multi-step instructions.

What is Mistral?

Mistral AI is a Paris-based company that shot onto the scene and quickly made a name for itself. While they have a strong open-source-friendly vibe with some great open-weight models, their most powerful model, Mistral Large, is a direct competitor to GPT-4 Turbo.

Mistral’s big pitch is that it offers top-tier performance without the top-tier price. It has solid multilingual skills and people often comment on how fast it is. For businesses, Mistral is a really compelling alternative for all sorts of things, from creating content to automating customer support. It strikes a balance between power and price that's making the industry giants pay attention.

GPT-4 Turbo vs Mistral: A head-to-head comparison

Alright, let's put these two AI heavyweights in the ring and see how they stack up. We’ll compare them on three fronts that every business needs to consider: raw performance, practical things like speed and cost, and the reality of getting them up and running.

Performance and capabilities

Let's start with the most obvious question: which model is actually "smarter"? When you look at user tests and formal benchmarks, a pretty clear picture starts to form.

Reasoning & problem-solving

When you throw complex logic puzzles or multi-step problems at them, GPT-4 Turbo usually comes out on top. A detailed user comparison on Reddit found that GPT-4 did better than Mistral Medium on reasoning tests, figuring out tricky scenarios that stumped other models. Keep in mind that this test used Mistral Medium rather than the more powerful Mistral Large, so treat it as a directional signal rather than a final verdict. For tasks that need deep, analytical thinking, GPT-4 Turbo is still the one to beat.

Knowledge & accuracy

Both models know a ton about, well, everything. But GPT-4 Turbo tends to be slightly more accurate and is a little less likely to have "hallucinations" (which is the technical term for making things up). In that same Reddit test, GPT-4 scored a 9/10 on general knowledge questions, while Mistral Medium got an 8/10. That might seem like a small difference, but it can be a big deal when getting the facts right is non-negotiable.

Instruction following & structured output

For a lot of business uses, you need an AI that can return structured data, like clean, valid JSON. In side-by-side tests of this, GPT-4 Turbo is more dependable, almost always giving you a valid format. Mistral, on the other hand, can sometimes stumble here.

But honestly, relying on any raw model to give you perfectly structured data every single time is a bit of a gamble. This is where the platform you use makes a huge difference. A dedicated AI platform like eesel AI adds a workflow engine on top of the LLM. This extra layer makes sure the AI's output is formatted correctly and can trigger specific actions, like adding a tag to a support ticket or escalating it to a human agent. It gives you a layer of reliability, no matter which model is running under the hood.
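To make that reliability layer a bit more concrete, here is a minimal Python sketch of the idea: check the model's reply before acting on it, and ask again if it isn't valid. This is not how eesel AI or any particular platform does it. The names `parse_structured_reply`, `get_valid_reply`, and `call_model` are made up for illustration, and `call_model` stands in for whatever function sends a prompt to GPT-4 Turbo or Mistral and returns the text.

```python
import json
from typing import Callable, Optional

FENCE = "`" * 3  # Markdown code fence that models sometimes wrap JSON in


def parse_structured_reply(raw: str, required_keys: set) -> Optional[dict]:
    """Return the parsed object if `raw` is valid JSON with the required keys, else None."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the surrounding fence and an optional "json" language label.
        text = text.strip("`").strip()
        if text.lower().startswith("json"):
            text = text[4:]
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not required_keys.issubset(data):
        return None
    return data


def get_valid_reply(
    call_model: Callable[[str], str],  # hypothetical: sends a prompt, returns raw text
    prompt: str,
    required_keys: set,
    max_attempts: int = 3,
) -> Optional[dict]:
    """Retry until the model returns usable JSON, or give up after `max_attempts`."""
    for _ in range(max_attempts):
        parsed = parse_structured_reply(call_model(prompt), required_keys)
        if parsed is not None:
            return parsed
    return None  # nothing usable: the caller should hand off to a human
```

The point of the design is that nothing downstream (tagging a ticket, escalating it) ever runs on unvalidated output, whichever model produced it.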

Here’s a quick table to sum up their core skills:

| Feature | GPT-4 Turbo | Mistral Large | Winner |
|---|---|---|---|
| Advanced Reasoning | Excellent | Very Good | GPT-4 Turbo |
| General Knowledge | Excellent | Very Good | GPT-4 Turbo |
| Reducing Hallucinations | Very Good | Good | GPT-4 Turbo |
| Structured Output (JSON) | Highly Reliable | Less Reliable | GPT-4 Turbo |
| Multilingual Support | Strong | Strong (Natively) | Tie |

Practical business factors

Being the smartest isn't everything. For a business, practical things like speed, memory (its "context window"), and especially the price tag are just as important, if not more so.

Speed & latency

In something like a live chat with a customer, every second of delay feels like an eternity. Mistral models are generally known for being faster than their GPT-4 counterparts. While the exact speed can vary, this can make for a much smoother and more natural experience for your users.
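Since the exact speed varies with your prompts, region, and load, it's worth timing both models on your own traffic rather than taking anyone's word for it. Here is a rough sketch of how you might do that. `measure_latency` and `call_model` are hypothetical names, and `call_model` is assumed to be a function you write that sends a prompt to one model and waits for the full reply.

```python
import statistics
import time
from typing import Callable


def measure_latency(call_model: Callable[[str], str], prompt: str, runs: int = 5) -> dict:
    """Time `runs` calls and report the median and worst-case latency in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call_model(prompt)  # blocks until the whole reply has arrived
        timings.append(time.perf_counter() - start)
    return {"median_s": statistics.median(timings), "max_s": max(timings)}
```

Run it with the same set of real customer questions against each model. The median tells you what a typical user feels, and the maximum tells you how bad the slow moments get.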

Context window

Both GPT-4 Turbo and Mistral Large have big context windows, though they are not the same size: 128k tokens for GPT-4 Turbo versus 32k for Mistral Large. A context window is basically the AI's short-term memory. The bigger it is, the more information it can hold and refer back to from a single conversation or document. This is super important for tasks like summarizing a long support email chain or answering questions about a dense technical manual.
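If you want a quick feel for whether a document will fit, a back-of-the-envelope check like the one below can help. It assumes roughly four characters per token for English text, which is only a rule of thumb and not a real tokenizer, and it uses the window sizes quoted in this article. The function names and the model keys are illustrative.

```python
# Context window sizes as quoted in this article (in tokens).
CONTEXT_WINDOWS = {"gpt-4-turbo": 128_000, "mistral-large": 32_000}


def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


def fits_in_context(text: str, model: str, reserved_for_reply: int = 1_000) -> bool:
    """True if the text plus some room for the model's answer fits in the window."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOWS[model]


manual = "word " * 40_000  # about 200,000 characters, so roughly 50,000 tokens
print(fits_in_context(manual, "gpt-4-turbo"))    # True
print(fits_in_context(manual, "mistral-large"))  # False
```

For anything borderline, count tokens with the provider's own tokenizer instead, since the real number depends on the language and the model.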

Pricing

This is where Mistral really shines. It's a whole lot cheaper than GPT-4 Turbo, which makes it a fantastic option for businesses that want to use AI at scale without their budget spiraling out of control.

Let's look at the actual API pricing for both.

| Model | Provider | Input Price (per 1M tokens) | Output Price (per 1M tokens) |
|---|---|---|---|
| GPT-4 Turbo | OpenAI | $10.00 | $30.00 |
| Mistral Large | Mistral AI | $8.00 | $8.00 |

As you can see, Mistral Large isn't just a bit cheaper on the way in; going by these prices, it's drastically cheaper for the text it generates, at roughly a quarter of GPT-4 Turbo's output price. For anything high-volume, like an AI customer support agent, that cost difference adds up fast and can completely change the economics of your project.
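To see how that plays out, here is a small worked example in Python using the prices from the table above. The workload is an assumption picked purely for illustration: 10,000 support conversations a month, each using about 1,500 input tokens and 500 output tokens. Swap in your own numbers.

```python
# Prices in USD per 1M tokens, taken from the pricing table in this article.
PRICES_PER_MILLION = {
    "GPT-4 Turbo": {"input": 10.00, "output": 30.00},
    "Mistral Large": {"input": 8.00, "output": 8.00},
}


def monthly_cost(model: str, conversations: int, input_tokens_each: int, output_tokens_each: int) -> float:
    """Total monthly API cost in USD for a given workload."""
    price = PRICES_PER_MILLION[model]
    input_cost = conversations * input_tokens_each / 1_000_000 * price["input"]
    output_cost = conversations * output_tokens_each / 1_000_000 * price["output"]
    return round(input_cost + output_cost, 2)


for model in PRICES_PER_MILLION:
    cost = monthly_cost(model, conversations=10_000, input_tokens_each=1_500, output_tokens_each=500)
    print(f"{model}: ${cost:,.2f}")
# GPT-4 Turbo: $300.00
# Mistral Large: $160.00
```

Notice that the gap widens as replies get longer, because output tokens are where the two price lists differ the most.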

Implementation for your business

Picking a model is just step one. The real work begins when you try to plug it into your daily operations and connect it to all your company's knowledge. Just using the raw API requires a ton of ongoing engineering work to build and maintain all the systems around it.

This is the "missing piece" where a real platform changes the game. For instance, launching an AI agent for customer support is so much more than just making an API call. You need to:

-

Bring all your knowledge together: The AI needs access to everything, not just what's on the public internet. This means your help center, your past support tickets, internal wikis on Confluence or Google Docs, and even conversations from Slack.

-

Build custom workflows: You need to be in control of what the AI does. When should it try to answer? When should it pass the conversation to a human? What actions can it take, like tagging a ticket in Zendesk or looking up a customer's order history?

-

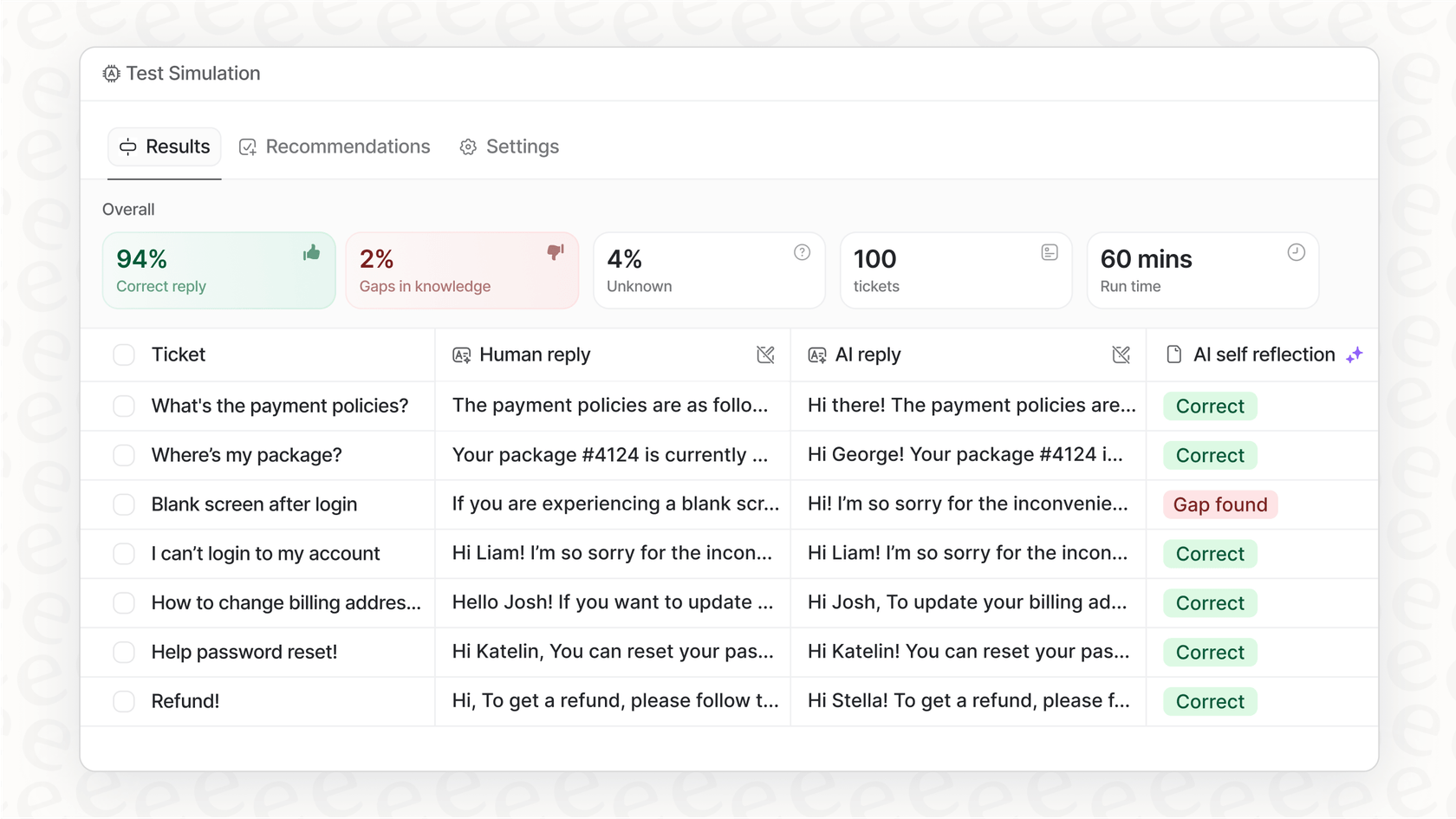

Test it out with confidence: How do you launch an AI agent without annoying your customers? You need a way to test it on your actual data to see how it performs before it ever talks to a single person.
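The workflow and testing points above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not any vendor's implementation: the `Draft` fields, the confidence score, the 0.8 threshold, and the list of human-only topics are all hypothetical and would come from your own pipeline and policies.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    answer: str        # the reply the AI proposes
    confidence: float  # hypothetical 0..1 score produced by your own pipeline
    topic: str         # hypothetical topic label for the ticket


HUMAN_ONLY_TOPICS = {"refund", "legal"}  # illustrative policy, not a recommendation


def route(draft: Draft, min_confidence: float = 0.8) -> str:
    """Decide whether the AI answers or a human takes over."""
    if draft.topic in HUMAN_ONLY_TOPICS:
        return "escalate"
    if draft.confidence < min_confidence:
        return "escalate"
    return "auto_reply"


def replay(drafts: list) -> float:
    """Share of past tickets that would have been answered automatically."""
    if not drafts:
        return 0.0
    auto = sum(1 for d in drafts if route(d) == "auto_reply")
    return auto / len(drafts)


history = [
    Draft("Your parcel ships Monday.", 0.95, "shipping"),
    Draft("Maybe try restarting?", 0.60, "shipping"),
    Draft("We can refund you.", 0.99, "refund"),
    Draft("Use the reset link.", 0.85, "login"),
]
print(replay(history))  # 0.5
```

Replaying the rules over old tickets like this is the cheap way to find out how often the AI would have stepped in before any customer sees it.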

This is exactly the problem eesel AI was built to solve. It’s a platform that provides that crucial application layer on top of powerful LLMs, letting you go live in minutes instead of months. With eesel AI, you can:

- Connect all your tools instantly: It has one-click integrations with your help desk, wikis, and other apps.

- Train the AI on your history: eesel AI learns from your thousands of past support conversations to understand your company's tone of voice and the common problems you solve.

- Simulate before you activate: You can test your AI agent on your old tickets to see exactly how it would have responded. This gives you a clear ROI calculation and lets you fine-tune everything in a completely risk-free environment, which is something you just can't do with a raw API.

At the end of the day, the platform you use to roll out your AI is often more important than the specific model you choose.

GPT-4 Turbo vs Mistral: Choosing the right model is only half the battle

So, what’s the final call in the GPT-4 Turbo vs Mistral showdown?

- GPT-4 Turbo is still the champion of raw intelligence. If your project absolutely requires the highest level of accuracy and complex problem-solving, it’s the safest choice.

- Mistral is a very powerful and much more affordable challenger. Its speed and significantly lower price make it a brilliant option for scaling up your AI use, especially if you can live with a tiny trade-off in reasoning power.

But the biggest takeaway here is that the model itself is just one ingredient. For most companies, the winning move isn't picking one "best" model for everything. It's choosing a platform that lets you use these amazing technologies easily, safely, and in a way that actually helps your business. A platform like eesel AI handles all the backend complexity, so you can focus on solving problems instead of building infrastructure.

Ready to put AI to work?

Instead of getting lost in API documentation, you could see just how quickly you can automate your support. With eesel AI, you can connect your knowledge base, build a custom AI agent, and see how it performs in a simulation, all in just a few minutes.

Frequently asked questions

GPT-4 Turbo generally excels in advanced reasoning and complex problem-solving, making it the stronger choice for tasks demanding deep analytical thinking and multi-step logic. Mistral is very capable, but GPT-4 Turbo usually comes out on top for these specific challenges.

Mistral is significantly more cost-effective, especially for output tokens, making it a highly compelling option for high-volume applications where budget is a primary concern. GPT-4 Turbo is considerably more expensive per token, which can quickly add up at scale.

Mistral models are generally known for being faster and having lower latency than their GPT-4 counterparts, which can result in a smoother and more natural real-time user experience. This difference is crucial for applications where immediate responses are vital.

GPT-4 Turbo tends to be slightly more accurate and has a lower propensity for "hallucinations" compared to Mistral. While both are highly knowledgeable, GPT-4 Turbo offers a slight edge when factual correctness is non-negotiable.

No, the need for an AI platform remains crucial regardless of your choice between GPT-4 Turbo vs Mistral. Such platforms handle the complexities of integrating the model with your data, building workflows, and ensuring reliable, structured output, which raw APIs cannot do alone.

GPT-4 Turbo is generally more dependable for producing highly reliable structured data output, such as clean JSON. While Mistral can perform this task, it may sometimes stumble, making an additional platform layer even more valuable for consistent formatting.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.