Just when you thought the AI space couldn't get any faster, Google drops another major update. This time, it's Gemini 3 Flash, the latest model in their lineup, and it’s built for one thing: balancing serious brainpower with speed and a much lower cost. It’s designed to be the quick, efficient workhorse for high-volume tasks.

If you’re trying to wrap your head around what this new model is all about, you’re in the right place. This guide will walk you through everything you need to know about Gemini 3 Flash: what it does, who it’s for, how much it costs, and its real-world limitations. We’ll cut through the hype to answer one core question: is this powerful new model the right tool for your needs, or is a more specialized, ready-to-go platform a better fit for your business?

What is Gemini 3 Flash?

At its heart, Gemini 3 Flash is a lightweight, super-fast, and cost-effective large language model (LLM) from Google. Think of it as the nimble, energetic sibling in the Gemini 3 family, which also includes the more powerful Gemini 3 Pro and the heavyweight Gemini 3 Deep Think. It's built for high-frequency tasks where you need a good answer, and you need it now.

Figure: where Flash fits within the Gemini family.

Google is promising "Pro-grade reasoning with Flash-level latency", which really just means you get the smarts of a premium model without the lag or the hefty price tag.

One of its most talked-about features is its multimodal capability. This isn't just a text-in, text-out model. It can understand and process a mix of inputs, including text, images, audio, video, and even long PDF documents.

It’s important to remember that Gemini 3 Flash is a foundational model. This means it’s primarily a tool for developers and businesses to build applications on top of. While you might not use its API directly, you’re already starting to see it power consumer-facing products like the free Gemini app, making them faster and more capable.

Key features and capabilities

So, what makes this model a big deal? It’s not just one thing, but a combination of speed, cost, and new controls that make it a seriously flexible tool for developers.

A new balance of speed, cost, and intelligence

The main selling point for Gemini 3 Flash is how it hits the sweet spot between performance, speed, and cost. It’s trying to end the days when you had to make a huge trade-off among the three.

The numbers back this up. It scores 90.4% on the GPQA Diamond benchmark (a test of graduate-level reasoning) and 33.7% on Humanity’s Last Exam, showing it can hang with much larger, more expensive models. In fact, it outperforms the previous generation's Gemini 2.5 Pro while being 3x faster and costing a lot less to run. That's a huge leap in efficiency.

Advanced multimodal understanding with a key limitation

As mentioned, Gemini 3 Flash is multimodal, meaning it can process a wide range of media types. You could feed it a video of your golf swing and ask for feedback, or upload an hour-long lecture and get a transcript and summary in seconds, just like in some of Google's demos. This opens up a ton of possibilities for analyzing unstructured data.
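
To make the multimodal input concrete, here is a minimal Python sketch of how a mixed text-and-media request might be assembled. It only builds the JSON body and never contacts the API. The field names (`contents`, `parts`, `inlineData`, `mimeType`) are assumptions based on the general shape of Gemini API requests, and `build_multimodal_request` is a hypothetical helper, so check the current API reference before relying on any of them.

```python
import base64
import json


def build_multimodal_request(prompt: str, media: bytes, mime_type: str) -> dict:
    """Combine a text prompt and one media file into a single request body.

    Field names are assumed for illustration, not confirmed by the article.
    """
    encoded = base64.b64encode(media).decode("ascii")  # binary -> JSON-safe text
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {"inlineData": {"mimeType": mime_type, "data": encoded}},
                ]
            }
        ]
    }


# Placeholder bytes stand in for a real video file.
body = build_multimodal_request(
    "Give me feedback on this golf swing.", b"fake-video-bytes", "video/mp4"
)
print(json.dumps(body, indent=2))
```

In practice, a large file such as an hour-long lecture would usually be uploaded separately rather than inlined, a step this sketch leaves out.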

But here’s the catch: it only outputs text. While it can understand images, videos, and audio, it can't create them. You can't ask it to generate a picture or create a short audio clip.

Figure: the flow of information through Gemini 3 Flash.

Another technical detail worth noting is that the image segmentation feature from Gemini 2.5 is gone. According to some experts, this means you can no longer get pixel-level masks that identify specific objects in an image, which was a handy feature for certain computer vision tasks.

Fine-tuned control with "thinking level" and code execution

Google has introduced a clever new parameter called "thinking_level". It gives developers a dial to control how much reasoning the model applies to a task, with four settings: "minimal", "low", "medium", and "high".

It’s a classic trade-off. A "high" thinking level will give you more nuanced reasoning, but it will also be slower and cost more. For simple, quick tasks, you can dial it down to "minimal" for a near-instant, cheap response. This level of control is great for fine-tuning performance and cost for specific applications.
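
As a rough illustration of that trade-off, the Python sketch below builds two request bodies that differ only in their thinking level. It makes no network call. The nested field names (`generationConfig`, `thinkingConfig`, `thinkingLevel`) are assumptions about the request format, and `build_request` is a hypothetical helper, so treat this as a sketch rather than the official usage.

```python
import json

# The four levels named above, from fastest and cheapest to most thorough.
THINKING_LEVELS = ("minimal", "low", "medium", "high")


def build_request(prompt: str, thinking_level: str = "low") -> dict:
    """Build a request body that pins the reasoning effort for one call."""
    if thinking_level not in THINKING_LEVELS:
        raise ValueError(f"thinking_level must be one of {THINKING_LEVELS}")
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        # Assumed field names; verify against the current API reference.
        "generationConfig": {"thinkingConfig": {"thinkingLevel": thinking_level}},
    }


# A quick classification can run at "minimal"; a tricky bug hunt gets "high".
quick = build_request("Is this review positive or negative?", "minimal")
deep = build_request("Find the race condition in this code.", "high")
print(json.dumps(quick["generationConfig"]))
print(json.dumps(deep["generationConfig"]))
```

Validating the level up front means a typo fails fast in your own code instead of surfacing as an API error.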

On top of that, it’s a beast at coding. It scored 78% on the SWE-bench Verified benchmark, which tests a model's ability to fix real-world code issues. This makes it an incredibly useful tool for developers building agentic workflows or complex software.

Use cases and applications: Who is this for?

With all these features, Gemini 3 Flash is set to be used in a lot of different ways, from consumer apps to complex enterprise systems.

For developers building responsive applications

For developers, the combination of low latency and strong reasoning is a big win. It's perfect for interactive applications where a delay can ruin the user experience. Think about things like:

- Real-time chat assistants in an app.
- In-game characters that can have dynamic conversations.
- Tools that analyze and summarize data streams on the fly.

Developers can reach it through the Gemini API in Google AI Studio, and it is also available in Google Antigravity, the Gemini CLI, Android Studio, and Vertex AI, so it's easy to start building with.

For businesses analyzing complex information

In the business world, Gemini 3 Flash is already being used to tackle some heavy-duty problems. For instance, Resemble AI is using it for real-time deepfake detection, and the legal tech firm Harvey is using it to analyze legal documents.

Its ability to quickly sift through massive amounts of unstructured data, like PDFs, videos, or audio recordings, and pull out key insights is a huge advantage for technical teams. It can help automate tedious review processes and let experts focus on more strategic work.

For everyday users via Google products

Most of us won't be messing with the API, but we'll definitely feel the impact of Gemini 3 Flash in the Google products we use every day. It's already being rolled out to the free tier of the Gemini app and is powering features in the new AI Mode in Google Search.

This means when you ask Gemini to plan a multi-stop road trip or to explain a complex scientific concept, you’re getting a faster, more thorough answer. It's all about making AI more responsive and useful for everyday questions.

To see Gemini 3 Flash in action and get a feel for its speed and capabilities, check out this deep dive from Sam Witteveen, which explores how it performs as a daily workhorse model.


The practical limitations of using a raw AI model

Gemini 3 Flash is undeniably powerful, but it’s important to understand what it is, and what it isn’t. This is where the hype meets reality, especially for businesses looking for a quick fix.

It's a powerful component, not a finished product

Think of Gemini 3 Flash as a box of brilliant, high-performance LEGO bricks. It has incredible potential, but you still need to know how to build the castle. To get any real value out of it for your business, you need someone to put it all together.

This means you need real technical expertise. You need developers who are skilled in API integration, prompt engineering, data security, and building a user interface. It is not a ready-to-use solution for specific business problems like automating customer support or creating an internal knowledge base. Turning its raw power into a functional, reliable business tool is a major development project that can take months.

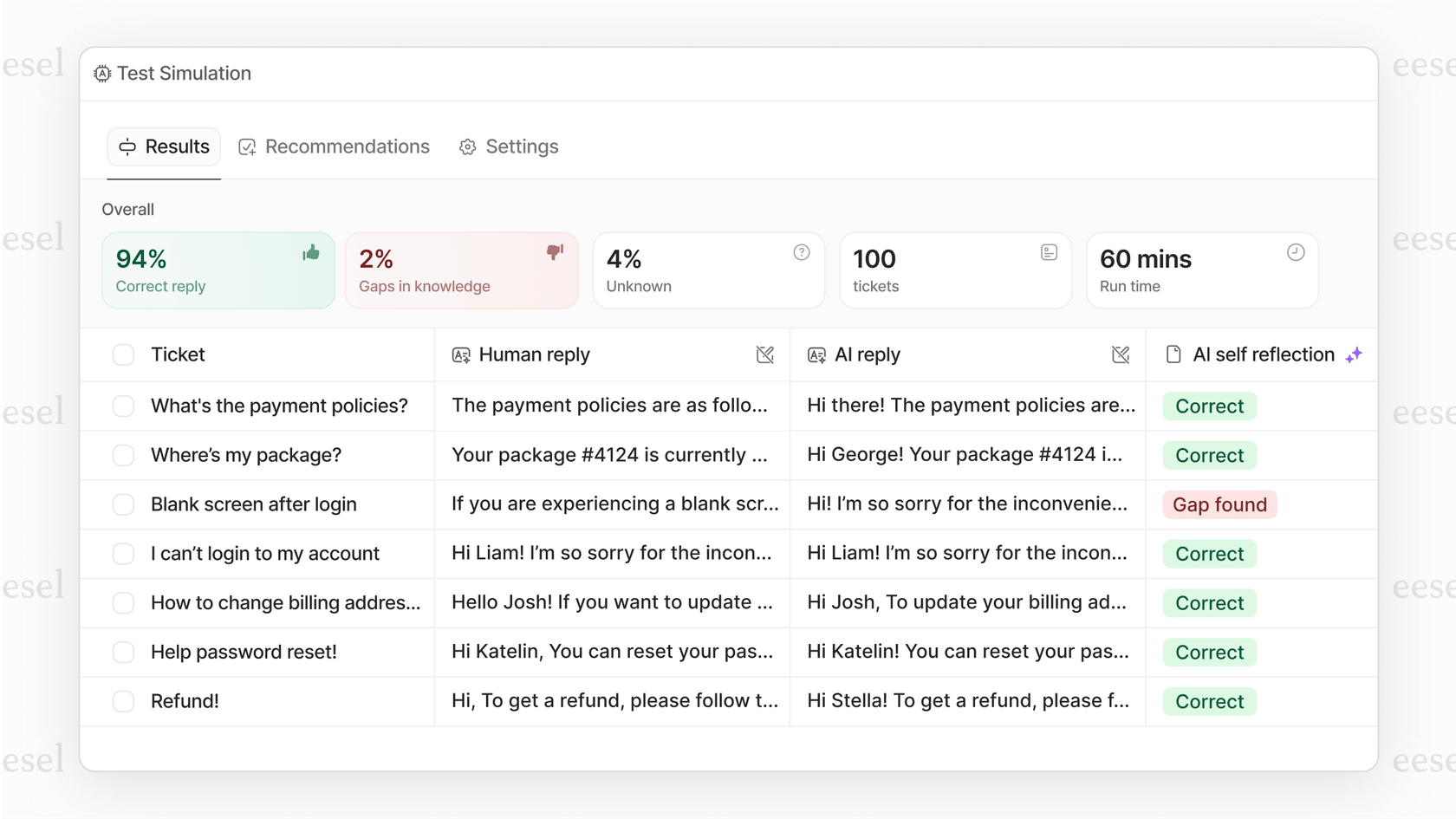

Bridging the gap from model to business solution

This is where AI platforms built to solve specific problems come in. While a team of developers could spend a quarter or more building a custom support chatbot with Gemini 3 Flash, a platform like eesel AI is designed to deliver that value right out of the box.

eesel is an "AI teammate" platform that connects directly to the tools you already use, like Zendesk, Slack, Notion, or Google Docs. It’s a no-code, self-serve platform that starts working in minutes, not months. You don’t need a developer to set it up. You can have an AI Copilot drafting replies for your support agents on day one, or deploy a fully autonomous AI Agent to handle frontline questions. The goal is to give you a complete solution, not just a box of parts.

Specific feature and accessibility gaps

Let's circle back to the model's limitations. The text-only output is a big one for many business use cases. For example, if you run an e-commerce store, you probably want a chatbot that can show customers product images or carousels. A raw model like Gemini 3 Flash can't do that. You'd have to build that visual functionality on top of it. In contrast, a specialized tool like eesel's AI Chatbot for e-commerce has features like product carousels and "add to cart" buttons built in.

Similarly, the lack of image segmentation means if your business relies on visually identifying parts of an image or schematic, you’d need another tool or a lot of custom code to replicate that feature. Specialized platforms are designed with these end-user features in mind from the start.

Pricing explained

Google has been very transparent about the pricing, which is a big part of its appeal. It's designed to be affordable for high-volume use cases. Here’s a quick breakdown of the cost per 1 million tokens (a token is roughly ¾ of a word).

| Model | Input price (per 1M tokens) | Output price (per 1M tokens) |
|---|---|---|
| Gemini 2.5 Flash | $0.30 | $2.50 |
| Gemini 3 Flash | $0.50 | $3.00 |
| Gemini 3 Pro (prompts ≤200k tokens) | $2.00 | $12.00 |
| Gemini 3 Pro (prompts >200k tokens) | $4.00 | $18.00 |

Google also offers features like context caching and a Batch API for asynchronous tasks, which can help developers reduce costs even further for certain types of jobs.
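
To see what those per-token prices mean for an actual workload, here is a small Python sketch that estimates cost from the table above. The dictionary keys are informal labels rather than official model IDs, the example workload is made up, and the sketch ignores context caching and Batch API discounts.

```python
# USD per 1 million tokens (input, output), copied from the pricing table.
PRICES = {
    "gemini-2.5-flash": (0.30, 2.50),
    "gemini-3-flash": (0.50, 3.00),
    "gemini-3-pro": (2.00, 12.00),       # prompts up to 200k tokens
    "gemini-3-pro-long": (4.00, 18.00),  # prompts over 200k tokens
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single request."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 10,000 requests of 2,000 input and 500 output tokens.
for model in ("gemini-2.5-flash", "gemini-3-flash", "gemini-3-pro"):
    total = 10_000 * request_cost(model, 2_000, 500)
    print(f"{model}: ${total:,.2f}")
# gemini-2.5-flash: $18.50
# gemini-3-flash: $25.00
# gemini-3-pro: $100.00
```

For this workload, Gemini 3 Flash costs a quarter of what Gemini 3 Pro does, and somewhat more than Gemini 2.5 Flash.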

A powerful tool, but not the whole solution

There’s no question that Gemini 3 Flash is an impressive step forward. It offers a fantastic blend of speed, intelligence, and affordability that makes advanced AI more accessible than ever. Its flexibility as a foundational model unlocks a huge range of possibilities for developers who have the time, resources, and expertise to build custom applications.

But for most businesses, the main takeaway is this: the challenge isn't just getting access to a powerful AI model. It's about deploying a complete, integrated solution that solves a real-world problem without derailing your entire roadmap.

For teams that need to improve customer support, manage internal knowledge, or drive sales without starting a dedicated AI development project, a ready-made platform is almost always the more practical path. If you want an AI teammate that learns from your existing tools and starts adding value in minutes, explore what eesel AI can do.

Frequently asked questions

The biggest advantage of Gemini 3 Flash is its balance of speed, cost, and intelligence. It provides Pro-level reasoning at a much lower price and with less lag, making it perfect for high-volume, interactive tasks.

Gemini 3 Flash cannot generate images, video, or audio. While it has powerful multimodal understanding (it can process images, video, and audio), its output is strictly limited to text.

The ideal user of Gemini 3 Flash is a developer or a business with a technical team. It's a foundational model, meaning it's a powerful building block for creating custom applications, not a ready-to-use solution for specific business problems.

Gemini 3 Flash is significantly cheaper than Gemini 3 Pro. For example, its input price per 1 million tokens is $0.50, whereas Gemini 3 Pro starts at $2.00, so Flash input costs a quarter as much.

Gemini 3 Flash is not a no-code chatbot on its own. Building a chatbot with it requires significant development work to create a user interface and business logic. For a no-code solution, you'd be better off with a specialized platform like eesel AI that uses powerful models under the hood.

Multimodal means the model can understand and process different types of information beyond just text. You can give Gemini 3 Flash a mix of text, images, audio clips, and even videos as input to analyze.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.