The partnership between Microsoft and OpenAI has put some seriously powerful AI into the hands of big businesses through the Azure OpenAI Service. It's essentially the corporate, locked-down version of the models everyone's been talking about. But getting it up and running isn't exactly a walk in the park.

This guide is here to help you cut through the jargon. We’ll break down what the available Azure OpenAI models are, what they’re good for, and what it really takes to deploy them. While these tools are incredibly powerful, they’re built for large companies with a lot of technical resources. We’ll also look at why, for specific jobs like customer support, a more direct path might be a much better idea.

What are Azure OpenAI models?

At its heart, Azure OpenAI is a collection of OpenAI's language and multimodal models running on Microsoft's secure cloud. The main difference between using OpenAI’s regular API and Azure’s service is the enterprise-level security and compliance wrapper. As industry experts at US Cloud have pointed out, Azure provides the private networking, regional availability, and compliance features that large organizations require.

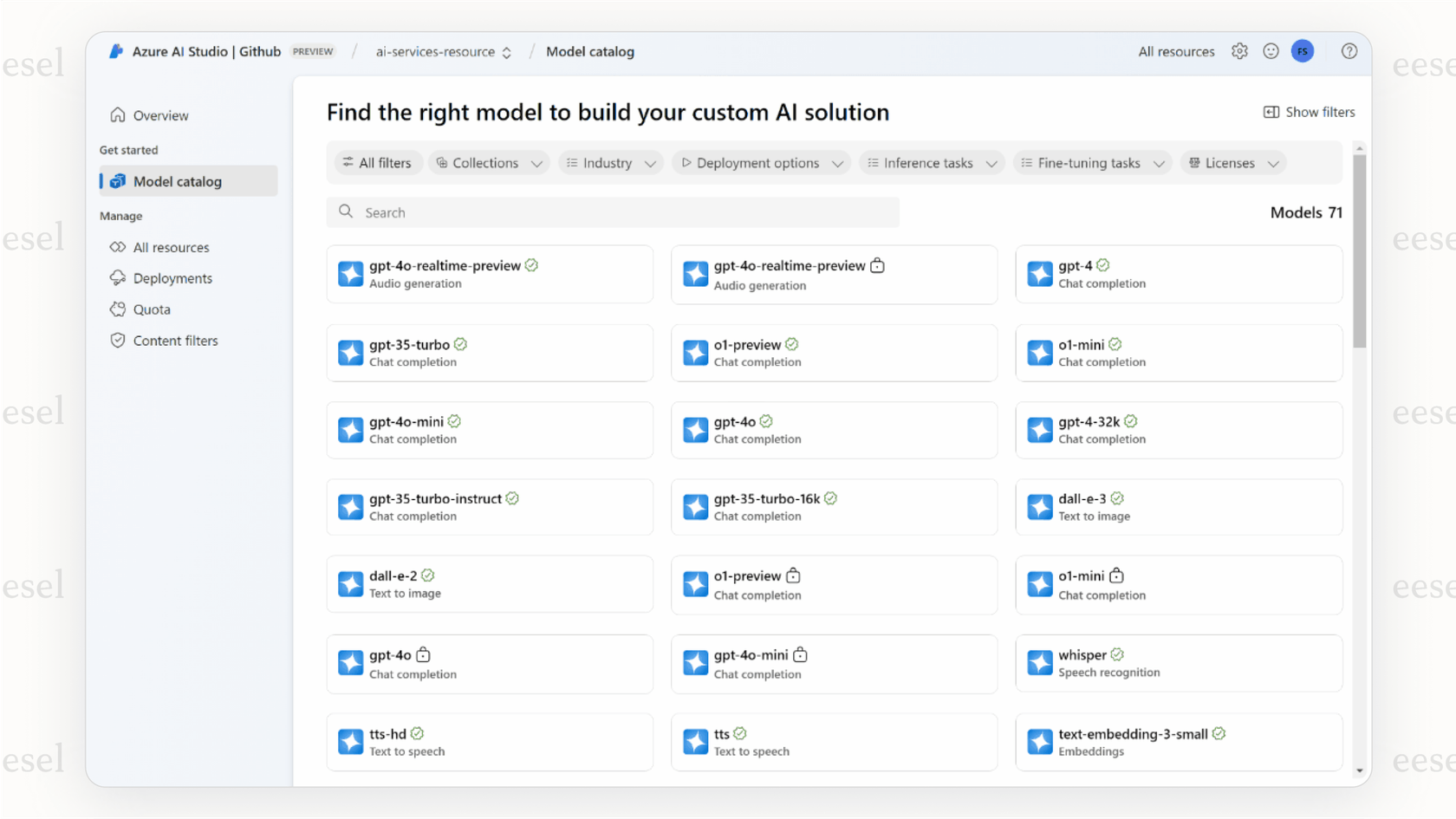

You find and manage these models through Azure AI Foundry, a central hub for browsing, tweaking, and deploying them. It’s not just a list of models; it’s a whole catalog with options for text generation (the GPT series), advanced reasoning (the o-series), and tasks that mix text with images and audio. It’s really designed for teams who are building custom AI applications from the ground up.

The landscape of current Azure OpenAI models

Azure offers a huge menu of AI models, each with its own strengths, price, and set of skills. One of the first things you'll run into is that model availability changes depending on the region you choose, so a bit of planning is definitely needed.
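To make that planning step concrete, here's a minimal sketch of a region check. The availability data below is made up purely for illustration (the region names are real Azure regions, but which models they host here is an assumption), and `regions_supporting` is a hypothetical helper, so swap in values from Microsoft's official availability documentation before relying on it.

```python
# Hypothetical availability data for illustration only.
# Real availability changes over time; check Microsoft's official docs.
AVAILABILITY = {
    "eastus2": {"gpt-4o", "gpt-4o-mini", "o4-mini"},
    "swedencentral": {"gpt-4o", "gpt-4o-mini", "gpt-35-turbo"},
    "westeurope": {"gpt-35-turbo"},
}


def regions_supporting(required_models, availability):
    """Return the sorted regions whose model set contains every required model."""
    needed = set(required_models)
    return sorted(
        region for region, models in availability.items() if needed <= models
    )


if __name__ == "__main__":
    # Which regions could host an app that needs both of these models?
    print(regions_supporting(["gpt-4o", "gpt-35-turbo"], AVAILABILITY))
```

Running a check like this before you create any resources is a cheap way to avoid discovering halfway through a build that the model you need isn't offered where your data has to live.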

Here’s a quick overview of the main model families you’ll find.

| Model Family | Key Models | Primary Use Case | Special Features |
|---|---|---|---|
| GPT-5 Series | gpt-5, gpt-5-mini | Advanced reasoning, complex problem solving, chat | Cutting-edge performance, large context window (400k tokens) |
| GPT-4 Series | GPT-4o, GPT-4.1, GPT-4o mini | High-accuracy multimodal tasks (text, image, audio) | Integrates text and vision in one model, strong non-English performance |
| o-series (Reasoning) | o3, o4-mini | Deep reasoning for science, coding, and math | Spends more processing time to solve complex problems |
| GPT-3.5 Series | gpt-35-turbo | Cost-effective chat and completion tasks | Balanced performance and cost, ideal for high-volume applications |
| Specialized Models | DALL-E 3, Whisper, Sora | Image generation, speech-to-text, video generation | Task-specific models for creative and transcription needs |

The latest generation: GPT-5 and reasoning models

The newest kids on the block, like the GPT-5 series and the o-series models, are built for some heavy lifting. These models are designed for tasks that require deep, multi-step thinking, things like complex coding problems, scientific analysis, or untangling tricky logical puzzles. They take more time to "think" through a problem to give you a more accurate answer, which makes them perfect for specialized, high-stakes work.

Azure OpenAI models with multimodality: GPT-4o and beyond

Models like GPT-4o have really shaken things up by being able to handle text, image, and audio all at the same time. This "multimodal" capability makes them incredibly flexible. They can look at a chart and describe it, listen to a question and answer it, or analyze a product photo and write a description. This is especially useful for anything involving vision or for working in languages other than English.

Workhorse Azure OpenAI models like GPT-3.5 Turbo

While the latest models get all the attention, the GPT-3.5 series is still the engine behind many real-world applications. These models hit a sweet spot between performance and cost, making them the default choice for standard chatbots, everyday content creation, and summarizing text at scale. They're fast, reliable, and much easier on the budget, which is a big deal when you're processing thousands of requests every day.

Common use cases

Businesses are finding all sorts of clever ways to use these models to work smarter, improve customer experiences, and automate tasks that used to be a real grind. While the possibilities are nearly endless, most uses fall into a few main categories.

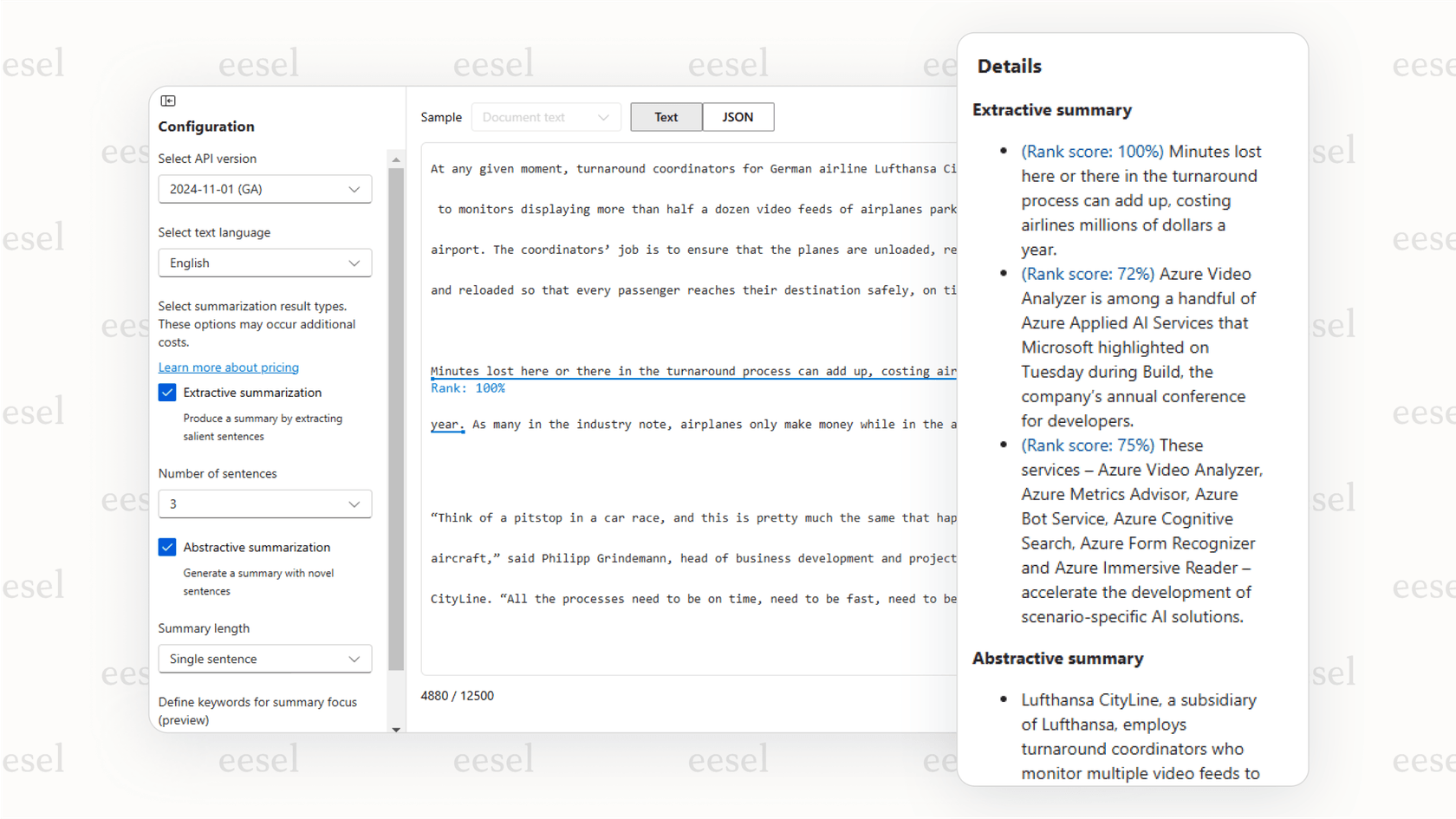

Content generation and summarization

This is easily one of the most popular applications. Teams use Azure OpenAI models to draft marketing emails, create personalized product descriptions, and summarize long documents or meeting notes into quick takeaways. It’s a huge time-saver that helps scale up content production.

Intelligent contact centers and customer support

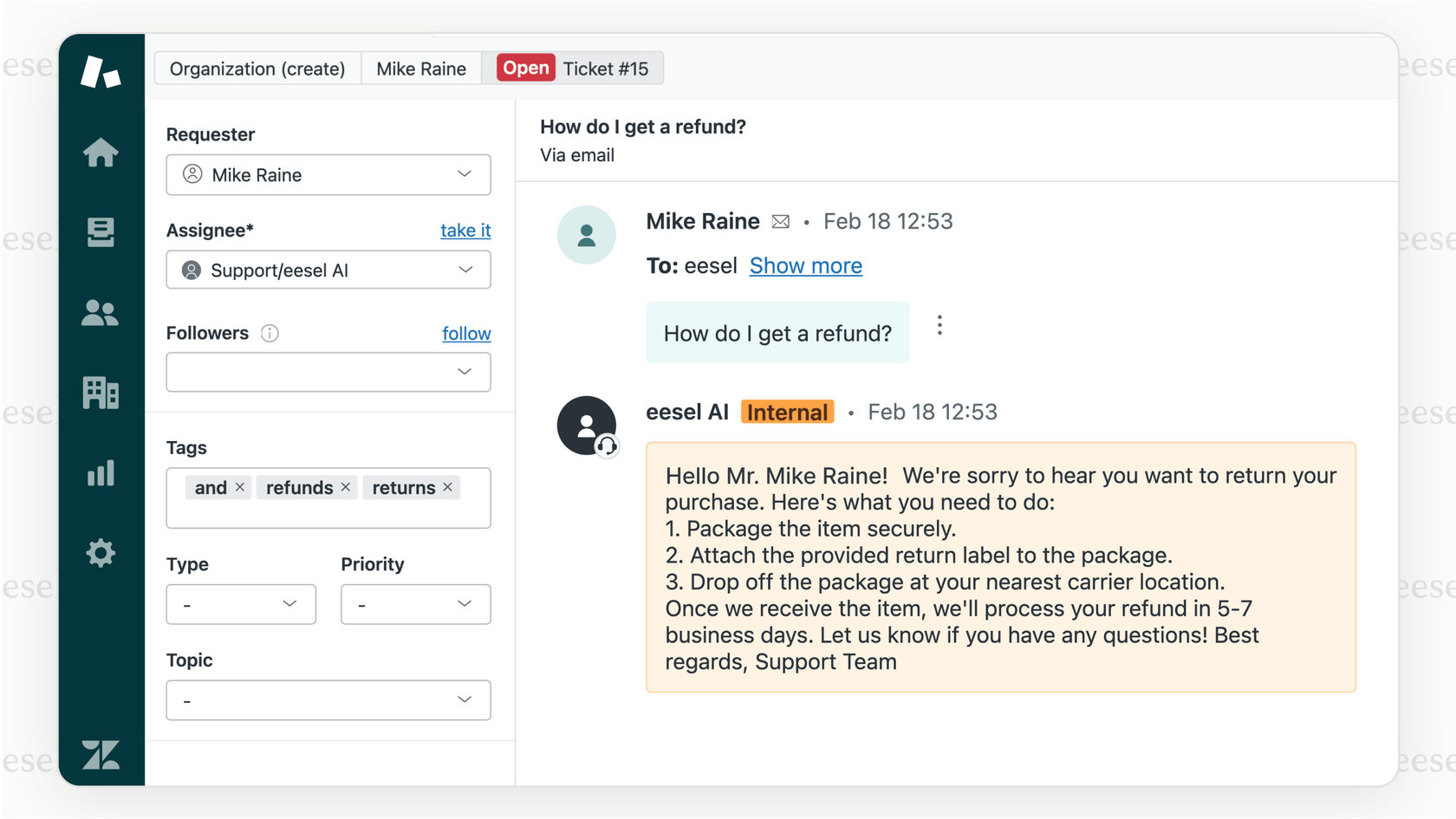

Building custom AI for customer support is another major area of focus. Companies use these models to run chatbots that can handle common questions around the clock or to build tools that help human agents by suggesting replies and pulling up relevant info on the fly.

But let's be real, building these kinds of solutions from scratch with Azure is a massive project. It takes a lot of developer time and can easily stretch out for months. For teams that just want to get support automation running without a huge development project, a platform like eesel AI offers a solution that's ready to go. It gives you an AI agent that plugs directly into help desks like Zendesk and Freshdesk in just a few minutes, with zero coding needed.

Code generation and data analysis

For the tech folks, the ability of models like GPT-4 to understand and write code is a huge boost. Developers are using them to turn plain English prompts into working code, track down bugs, and analyze big datasets for insights without having to write painful, complex queries.

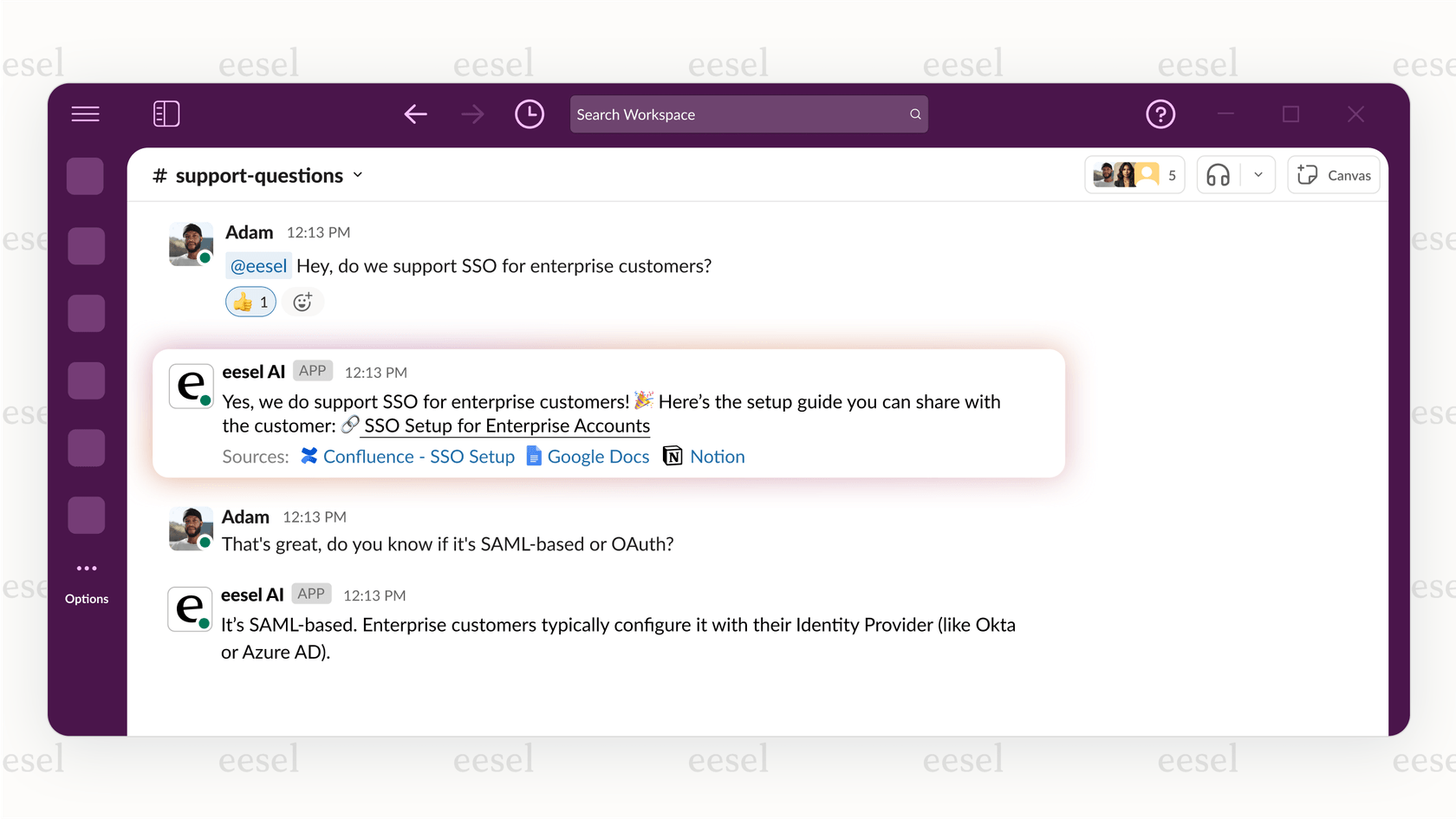

Internal knowledge management

Every company has a mountain of internal knowledge scattered across dozens of different apps and folders. These models can power smart internal search bots, allowing employees to ask questions in plain English and get instant answers from company wikis, HR policies, and technical docs.

Of course, the trickiest part is connecting the AI to all those different knowledge sources. An out-of-the-box solution like the eesel AI Internal Chat can securely unify knowledge from places like Confluence, Google Docs, and Slack right away, solving the connection problem without any custom development.

How to get started: Pricing, access, and the hidden complexities

While the power of Azure OpenAI models is clear, getting access to them isn't as easy as signing up for a new app. It's a platform built for enterprises, and that comes with a process that can be pretty slow and complicated. Let's look at what it actually takes.

The path to deploying Azure OpenAI models

Getting started with Azure OpenAI involves a few key steps, and it's definitely a marathon, not a sprint.

- The enterprise barrier: First, you typically need an existing Azure subscription and a business relationship with Microsoft. This isn't really a tool for weekend side projects.

- Application and approval: You can't just flip a switch. Businesses have to formally apply for access, and Microsoft reviews each application to ensure the use case fits their responsible AI guidelines. This can take a while.

- Resource creation and deployment: Once you're in, the technical work starts. You have to create an Azure OpenAI resource in the portal, pick a geographic region (which affects which models you can use), and then deploy the specific model you want.
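Once a model is deployed, your application talks to it by deployment name, not by model name. Here's a rough sketch of how a chat request to a deployment is put together, using only the Python standard library. The resource name, deployment name, and API version shown are placeholder assumptions, and `build_chat_request` is a hypothetical helper: it only builds the URL and body and doesn't send anything.

```python
import json
from urllib.parse import quote, urlencode


def build_chat_request(resource_name, deployment_name, api_version, user_message):
    """Build the URL and JSON body for a chat completions call to a deployment.

    Nothing is sent here. A real call would POST the body to the URL with an
    `api-key` header (or a Microsoft Entra ID token) for authentication.
    """
    base = f"https://{resource_name}.openai.azure.com"
    path = f"/openai/deployments/{quote(deployment_name, safe='')}/chat/completions"
    url = f"{base}{path}?{urlencode({'api-version': api_version})}"
    body = json.dumps({"messages": [{"role": "user", "content": user_message}]})
    return url, body


if __name__ == "__main__":
    # Placeholder values: use your own resource, deployment, and a current API version.
    url, body = build_chat_request("my-resource", "my-gpt-4o", "2024-10-21", "Hello")
    print(url)
    print(body)
```

Because the deployment name is one you choose in the portal, it's worth settling on a naming convention early, since every client that calls the model will depend on it.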


Understanding the pricing

Azure's pricing is flexible, but it can also be confusing and difficult to predict. There are two main ways you'll get billed, plus separate charges if you fine-tune a model.

- Standard (Pay-As-You-Go): This option charges you for every 1,000 tokens that go in and out of the model. It’s a good way to start, and a good fit for workloads with unpredictable traffic, but your costs can jump unexpectedly if usage suddenly spikes.

- Provisioned Throughput Units (PTUs): For more consistent, high-volume work, you can reserve a chunk of dedicated processing power for a fixed hourly rate. This gives you guaranteed performance and a stable bill, but it's a bigger financial commitment and means you have to be pretty good at forecasting your needs.

- Fine-tuning costs: If you want to train a model on your own data to specialize it, that comes with extra costs for both the training process and for hosting your new custom model.
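To get a feel for how the two billing options compare, here's a small back-of-the-envelope sketch. Every price and volume in it is invented for illustration, and it assumes the provisioned rate is charged per unit per hour; the function names are hypothetical too. Plug in the current figures from Azure's pricing page before drawing any conclusions.

```python
def pay_as_you_go_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Monthly token cost when input and output are billed per 1,000 tokens."""
    return (input_tokens / 1000) * input_price_per_1k + (
        output_tokens / 1000
    ) * output_price_per_1k


def ptu_cost(units, hourly_rate_per_unit, hours):
    """Cost of reserved capacity, assuming a fixed rate per unit per hour."""
    return units * hourly_rate_per_unit * hours


def cheaper_option(payg, ptu):
    """Name the cheaper of the two estimates (ties go to pay-as-you-go)."""
    return "pay-as-you-go" if payg <= ptu else "provisioned"


if __name__ == "__main__":
    # Hypothetical workload: 200M input and 50M output tokens in a month.
    payg = pay_as_you_go_cost(200_000_000, 50_000_000, 0.005, 0.015)
    # Hypothetical reservation: 15 units at 1.00 per unit-hour for ~730 hours.
    ptu = ptu_cost(15, 1.00, 730)
    print(f"pay-as-you-go: {payg:.2f}, provisioned: {ptu:.2f}")
    print("cheaper:", cheaper_option(payg, ptu))
```

The point of the exercise isn't the exact numbers. It's that provisioned capacity only pays off once your traffic is steady and large enough to keep the reserved units busy, which is why accurate forecasting matters so much.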

The simpler, self-serve alternative

Honestly, the whole Azure process is a lot to handle. For teams whose main goal is just to solve a specific problem like customer support or internal Q&A, that level of complexity is usually overkill.

This is where a more focused tool can really shine. Instead of a months-long deployment project, eesel AI is designed to get you up and running right away.

- Go live in minutes, not months: eesel AI is completely self-serve. You can sign up, connect your tools, and have a working AI agent without ever having to talk to a salesperson or sit through a mandatory demo.

- One-click integrations: There's no custom code or developer time required. You can connect your help desk, like Gorgias or Intercom, and your knowledge sources with just a few clicks. The AI immediately starts learning from your existing information.

- Transparent and predictable pricing: Forget about counting tokens. eesel AI offers simple monthly plans based on the number of AI interactions. You won’t get hit with per-resolution fees or a surprise bill after a busy month.

Are Azure OpenAI models right for you?

Azure OpenAI models offer incredible power and security for big companies that are ready to invest the time, money, and technical skill to build custom AI solutions from scratch. If you have a dedicated dev team and a long-term plan to create a unique, deeply integrated AI application, it’s one of the best platforms you can choose.

But for most businesses that are trying to solve immediate problems, the overhead of Azure can be a serious roadblock. If your goal is to automate customer support or make your internal knowledge easier to access now, you don't need to build a rocket ship when a perfectly good car will get you there faster and with a lot less hassle.

Get the power of AI without the complexity

If you want the benefits of a powerful AI that’s trained on your company's knowledge, without having to navigate enterprise contracts and complex cloud setups, eesel AI is the practical, fast, and powerful choice.

Instead of spending months on setup, you can connect your existing tools and automate your support in minutes. You can even simulate how our AI would perform on your own past support tickets to see the potential impact before you commit.

Start your free trial with eesel AI and see for yourself how quickly you can deploy an AI agent that actually gets the job done.

Frequently asked questions

For simple chatbots or content summarization, GPT-3.5 Turbo offers a great balance of speed and cost. For complex tasks like code generation or deep data analysis where accuracy is critical, you should look at the more powerful GPT-4o, GPT-5, or o-series models.

The underlying AI models themselves are the same powerful versions developed by OpenAI. The key difference is the Azure wrapper, which provides enterprise-grade security, private networking, regional compliance, and integration with the broader Microsoft cloud ecosystem.

The process is designed for enterprises, so you should plan for a multi-week timeline at minimum. It involves applying for access, waiting for Microsoft's approval based on responsible AI guidelines, and then handling the technical resource deployment, which requires engineering effort.

For predictable workloads, reserving capacity with Provisioned Throughput Units (PTUs) gives you a fixed hourly cost and guaranteed performance. If your usage is variable, the Pay-As-You-Go token-based model is more flexible but requires careful monitoring to avoid surprise bills.

Building with Azure is best when you need a deeply customized AI application and have a dedicated development team to manage a long-term project. For standard use cases like customer support or internal Q&A, a pre-built tool can deliver results much faster and without the heavy technical overhead.

Your choice of region is a critical factor, as not all models, especially the newest ones, are available everywhere. You should always check Microsoft’s official regional availability chart before deploying to ensure the specific model you need is supported in that location.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.