We've all been there. You're deep into a game, and an NPC repeats the same line for the tenth time. It kind of shatters the illusion, right? For years, the goal has been to create NPCs that feel less like robots and more like real, reactive characters. The kind that remember what you did, react to the world, and can hold a decent conversation.

With models like GPT-Realtime-Mini, that goal is getting a lot closer. Hooking this kind of AI up to a game engine like Unity could really change how we experience games.

This guide is a practical look at "Unity integrations with GPT-Realtime-Mini". We’ll cover what the tech is, a few ways to implement it, and the big hurdles like cost and latency you'll definitely need to think about.

What are Unity and GPT-Realtime-Mini?

Before we get into the nitty-gritty of connecting these two, let's do a quick refresh on what each one is.

A quick look at Unity

Unity is a hugely popular, cross-platform game engine. It's the workhorse behind countless games, from tiny indie projects to big commercial hits. It’s known for being flexible enough for both 2D and 3D games, and its main scripting language is C#. If you’ve played an indie game in the last decade, chances are pretty high it was made with Unity.

Understanding GPT-Realtime-Mini

GPT-Realtime-Mini is one of OpenAI's models built for one specific purpose: fast, conversational interactions. It’s part of a family of AI models designed for the kind of quick back-and-forth you have in a normal conversation. Here's what makes it different:

- Made for voice: The API was designed from the ground up for speech-in, speech-out conversations, not just typing in a chat box.

- Keeps up the pace: It's built to respond quickly. This helps get rid of those awkward pauses that make AI chats feel so unnatural and clunky.

- More efficient: As a "mini" model, it tries to find a sweet spot between being smart and being affordable. This makes it a more realistic choice for real-time uses compared to bigger, slower models like GPT-4.

Why use Unity integrations with GPT-Realtime-Mini in your game?

So, is it worth the effort to set up "Unity integrations with GPT-Realtime-Mini"? For a lot of game designers, the answer is a big "yes." This isn't just about cool tech; it's about breaking free from the old, stiff systems we're used to.

Creating truly dynamic NPCs

Most game characters are stuck in pre-written dialogue trees. You click an option, they say a line, and the loop repeats. Real-time AI throws that out the window. You can feed NPCs a constant stream of information about what's happening in the game, and they can react to it on the fly.

For instance, say an NPC has access to the game's event log. If a new line appears reporting that the player dealt 30 damage to a cow, the NPC could generate a unique reaction. Instead of a generic "Stop that!", it might actually say, "Hey, what did that poor cow ever do to you?" It’s a small thing, but it makes the world feel like it's actually paying attention.
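Here is a minimal sketch of how event-log lines could be turned into a prompt for an NPC. The class name `NpcPromptBuilder`, the prompt wording, and the `maxEvents` cap are all hypothetical choices for illustration, not part of any OpenAI or Unity API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: turns recent game events into a text prompt for an NPC.
public static class NpcPromptBuilder
{
    // persona and eventLog come from your game; maxEvents caps the prompt size.
    public static string Build(string persona, IReadOnlyList<string> eventLog, int maxEvents)
    {
        // Keep only the newest entries so the prompt stays small and cheap.
        var recent = eventLog.Skip(Math.Max(0, eventLog.Count - maxEvents));

        return "You are " + persona + ".\n"
             + "Recent events you witnessed:\n"
             + string.Join("\n", recent.Select(e => "- " + e))
             + "\nReact in one short spoken line, staying in character.";
    }
}
```

You would call `NpcPromptBuilder.Build("a grumpy farmer", log, 5)` whenever a new event arrives and send the result to the model. Capping the number of events is one simple way to keep each request small.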

Enabling natural voice conversations

One of the coolest possibilities here is being able to just talk to a character and have them talk back with an intelligent, unique response. OpenAI's Realtime API is built for this. Players wouldn't have to scroll through menu options anymore. They could just have a normal, voice-driven conversation, which makes the game far more immersive.

Powering adaptive storytelling

This tech can go way beyond just individual characters. An AI could act as a sort of "Dungeon Master" or an adaptive narrator for the whole game. It could watch what a player does and generate new challenges, describe scenes differently, or change the story based on their choices. This means every single playthrough could be truly different, shaped by the player in a way that a pre-written script just can't match.

Core methods for building Unity integrations with GPT-Realtime-Mini

The idea of talking to an AI character is cool, but how do you actually build it? The devil is in the details. There are a few different ways to tackle "Unity integrations with GPT-Realtime-Mini", and each has its own set of headaches and benefits.

The manual approach: Direct API calls in C#

The most direct route is using .NET's "HttpClient" or Unity's built-in "UnityWebRequest" to send requests straight to the OpenAI API. You’ll find plenty of tutorials and Stack Overflow answers that show you this method. It involves putting together your own JSON requests, adding authentication headers, sending them off, and then picking apart the JSON response you get back.

This approach gives you total control, which is great. The downside? Standard HTTP requests are often just too slow for a real-time voice chat. That round-trip can create a noticeable lag that completely kills the feeling of a natural conversation.
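To make the manual route concrete, here is a rough sketch of building the request and parsing the reply for a text-only exchange over OpenAI's Chat Completions endpoint. The class name `ChatRequestFactory` is hypothetical, and the model name is left as a parameter because which models your account can use over plain HTTP is something to check in the current docs. It also assumes `System.Text.Json` is available; many Unity projects use a different JSON library, so treat that part as swappable.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;

public static class ChatRequestFactory
{
    // Builds (but does not send) a POST request with a JSON body and a Bearer auth header.
    public static HttpRequestMessage Build(string apiKey, string model, string systemPrompt, string playerText)
    {
        var payload = new
        {
            model,
            messages = new object[]
            {
                new { role = "system", content = systemPrompt },
                new { role = "user", content = playerText }
            }
        };

        var request = new HttpRequestMessage(HttpMethod.Post, "https://api.openai.com/v1/chat/completions");
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
        request.Content = new StringContent(JsonSerializer.Serialize(payload), Encoding.UTF8, "application/json");
        return request;
    }

    // Pulls the assistant's text out of a Chat Completions response body.
    public static string ExtractReply(string responseJson)
    {
        using var doc = JsonDocument.Parse(responseJson);
        return doc.RootElement.GetProperty("choices")[0]
                  .GetProperty("message").GetProperty("content").GetString();
    }
}
```

In a real game you would pass the request to `HttpClient.SendAsync` and feed the response body to `ExtractReply`. Avoid shipping the API key inside the game build, since players can extract it.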

The streamlined approach: Using Unity packages

To save you some time, the community has created some great wrapper libraries, like the popular "com.openai.unity" package. These tools take care of a lot of the boring stuff, like authentication and formatting requests, so you can focus on your game's logic.

But there’s a catch. Many of these packages were originally built for text-based chat, not the specialized protocols you need for real-time audio. They might support the Realtime API, but they probably aren't optimized for the low-latency streaming that makes models like GPT-Realtime-Mini so appealing.

The low-latency approach: Connecting via WebRTC and WebSockets

If you want the snappy performance that GPT-Realtime-Mini is capable of, you need to use protocols built for real-time communication. Both the official OpenAI documentation and Microsoft's Azure guides point towards using WebRTC or WebSockets.

Instead of sending a request and waiting for a response, these protocols open up a persistent, two-way connection between your game and the AI. This lets you stream audio data back and forth in tiny, continuous chunks, making the whole experience feel much more fluid.
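As a rough illustration of the "tiny, continuous chunks" idea, the sketch below splits raw audio bytes into pieces and wraps each one in an `input_audio_buffer.append` event, which is the client event the Realtime API uses for incoming audio over WebSockets. The class name `AudioChunker` and the chunk size are hypothetical, and the audio is assumed to already be in the PCM16 format your session is configured for.

```csharp
using System;
using System.Collections.Generic;

public static class AudioChunker
{
    // Lazily yields one JSON event per chunk of raw PCM16 audio.
    // The chunkBytes check runs when enumeration starts, because this is an iterator.
    public static IEnumerable<string> ToAppendEvents(byte[] pcm16, int chunkBytes)
    {
        if (chunkBytes <= 0) throw new ArgumentOutOfRangeException(nameof(chunkBytes));

        for (int offset = 0; offset < pcm16.Length; offset += chunkBytes)
        {
            // The final chunk may be shorter than chunkBytes.
            int count = Math.Min(chunkBytes, pcm16.Length - offset);
            string base64 = Convert.ToBase64String(pcm16, offset, count);

            // Base64 text contains no characters that need JSON escaping.
            yield return "{\"type\":\"input_audio_buffer.append\",\"audio\":\"" + base64 + "\"}";
        }
    }
}
```

Each string would then be sent as a text message over an already-open socket, for example with `ClientWebSocket.SendAsync`. Smaller chunks reduce delay before the model hears the player, at the price of more messages.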

The hurdle here is that setting this up is a serious engineering task. You'll likely need a middle-tier server just to manage the connections and securely create the client tokens needed. That's a level of complexity that puts it out of reach for many solo and indie devs.

The biggest challenges with Unity integrations with GPT-Realtime-Mini

Now for the reality check. Getting this to work isn't just about writing code. As anyone who's browsed threads on Reddit's r/Unity3D knows, there are some huge practical problems that can stop a project in its tracks.

Sky-high API costs

This is the big one. Every time an NPC has a thought or says a line, you're making an API call, and every one of those calls costs money. Now, picture a popular game with thousands of players all chatting with dozens of NPCs. The bill could get out of control, fast.

This means you have to be smart about optimizing costs from the very beginning. You have to think about ways to limit API calls, use the most efficient models you can, and maybe cross your fingers that powerful models will be able to run locally someday. For now, the cost is a massive barrier.
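A back-of-the-envelope estimate makes the scale easier to see, and a simple cooldown is one way to limit calls. Everything in this sketch is an assumption: the class names are hypothetical, the price is a placeholder you would replace with your provider's current rate, and a single blended per-token price ignores the fact that audio and text tokens are usually billed differently.

```csharp
using System;

public static class CostEstimator
{
    // Monthly cost = players x interactions x tokens per interaction x price per token.
    public static decimal MonthlyCost(int players, int interactionsPerPlayer,
                                      int tokensPerInteraction, decimal pricePerMillionTokens)
    {
        decimal totalTokens = (decimal)players * interactionsPerPlayer * tokensPerInteraction;
        return totalTokens / 1_000_000m * pricePerMillionTokens;
    }
}

// Per-NPC cooldown so a chatty player cannot trigger unlimited API calls.
public sealed class CallThrottle
{
    private readonly double cooldownSeconds;
    private double lastCallTime = double.NegativeInfinity;

    public CallThrottle(double cooldownSeconds) { this.cooldownSeconds = cooldownSeconds; }

    // now is the current game time in seconds (for example Time.time in Unity).
    public bool TryAcquire(double now)
    {
        if (now - lastCallTime < cooldownSeconds) return false;
        lastCallTime = now;
        return true;
    }
}
```

With made-up inputs of 10,000 players, 200 interactions each per month, 500 tokens per interaction, and a placeholder price of 1.00 per million tokens, `MonthlyCost` returns 1000. When `TryAcquire` returns false, the NPC can fall back to a cached or pre-written line instead of calling the API.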

Managing context and knowledge

An AI is only as good as the information you feed it. For an NPC to be believable, it needs a "memory" of what's happened and an "awareness" of its surroundings. The question is, how do you give it that information without slowing everything down?

You can't just send the entire game history with every request; it would be incredibly slow and expensive. You need a clever "memory" system that can figure out and pull only the most relevant bits of information for any given moment. This is a tough problem that researchers are still trying to crack, as you can see in papers on topics like generative agents.
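One crude way to pick "only the most relevant bits" is to score each memory by keyword overlap with the current situation plus a recency bonus, then keep the top few. This sketch is a stand-in for illustration only: the `Memory` and `MemoryRetriever` types, the scoring formula, and the five-minute half-life are all hypothetical, and a production system would more likely use embeddings for relevance.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class Memory
{
    public string Text;
    public double Timestamp; // game time in seconds when the event happened
    public Memory(string text, double timestamp) { Text = text; Timestamp = timestamp; }
}

public static class MemoryRetriever
{
    // Score = number of distinct words shared with the query
    //       + a recency bonus that halves every halfLifeSeconds.
    public static List<Memory> TopK(IEnumerable<Memory> memories, string query,
                                    double now, int k, double halfLifeSeconds = 300)
    {
        var queryWords = Tokenize(query);
        return memories
            .Select(m => new
            {
                Memory = m,
                Score = Tokenize(m.Text).Count(w => queryWords.Contains(w))
                      + Math.Pow(0.5, (now - m.Timestamp) / halfLifeSeconds)
            })
            .OrderByDescending(x => x.Score)
            .Take(k)
            .Select(x => x.Memory)
            .ToList();
    }

    // Lower-cases the text and splits it into a set of distinct words.
    private static HashSet<string> Tokenize(string text) =>
        new HashSet<string>(text.ToLowerInvariant()
            .Split(new[] { ' ', ',', '.', '!', '?' }, StringSplitOptions.RemoveEmptyEntries));
}
```

Only the memories returned by `TopK` would be added to the prompt, so the request size stays roughly constant no matter how long the player has been playing.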

Ensuring control and predictability

A large language model is naturally unpredictable. What's to stop an NPC from accidentally spoiling a quest, breaking character, or doing something that crashes the game? If you don't set up proper guardrails, you could end up with a chaotic and frustrating experience for the player.

To fix this, you need a solid workflow engine. You need to be able to define the AI's personality, give it strict rules about what it can and can't do, and provide a clear list of actions it's allowed to take, like "moveTo(x,y)" or "attack(target)".
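The "clear list of allowed actions" can be enforced in code rather than trusted to the prompt. Below is a minimal sketch that accepts model output only if it is a single call to an allow-listed action with the expected number of arguments. The `ActionGuard` class, the text format, and the argument counts are assumptions for illustration; structured function calling, where the API supports it, is another way to get a similar guarantee.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class ActionGuard
{
    // Allowed action names mapped to the number of arguments each one expects.
    private static readonly Dictionary<string, int> Allowed = new Dictionary<string, int>
    {
        { "moveTo", 2 },
        { "attack", 1 }
    };

    // Matches exactly one call of the form name(arg, arg, ...), with no nesting.
    private static readonly Regex CallPattern = new Regex(@"^\s*(\w+)\(([^()]*)\)\s*$");

    // Returns true and fills name/args only for a well-formed call to an allowed action.
    public static bool TryParse(string modelOutput, out string name, out string[] args)
    {
        name = null;
        args = null;

        var match = CallPattern.Match(modelOutput ?? "");
        if (!match.Success) return false;

        var candidate = match.Groups[1].Value;
        var parts = match.Groups[2].Value.Split(',');
        if (!Allowed.TryGetValue(candidate, out int arity) || parts.Length != arity) return false;

        for (int i = 0; i < parts.Length; i++)
        {
            parts[i] = parts[i].Trim();
            if (parts[i].Length == 0) return false; // reject blank arguments
        }

        name = candidate;
        args = parts;
        return true;
    }
}
```

Anything that fails `TryParse` is simply ignored by the game, so an unexpected reply from the model cannot trigger an action you never defined.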

Lessons from enterprise AI

These problems aren't new. The customer support industry has been wrestling with the exact same issues of cost, context, and control for years. The solutions they've come up with can be a useful map for anyone trying to build a complex AI system.

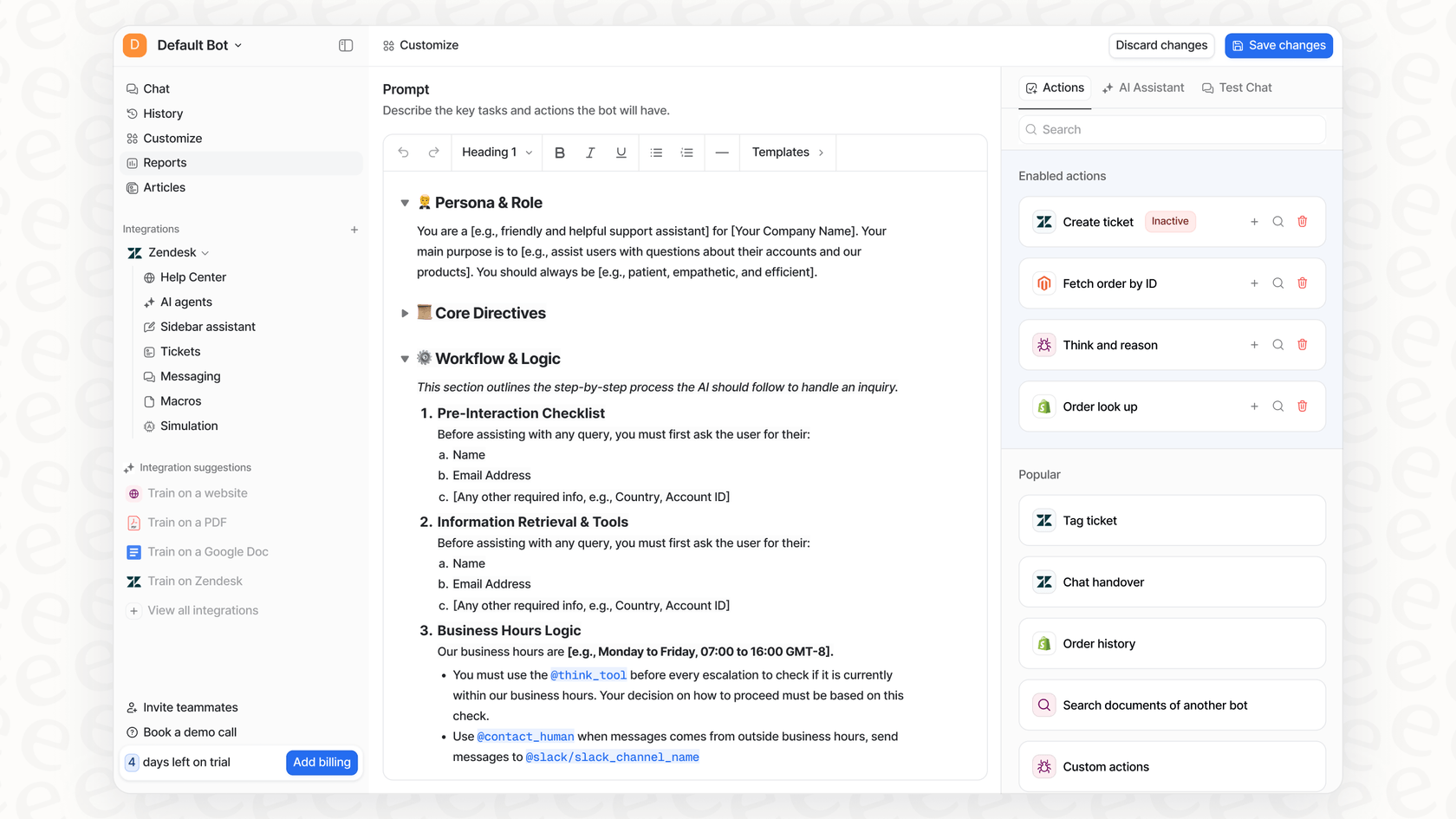

Platforms like eesel AI were built specifically to handle these issues for support teams.

- Unified knowledge: To solve the context problem, eesel plugs into a company's knowledge sources, like help centers and internal docs. It gives the AI access to just the right information it needs for a query, keeping things relevant and cost-effective.

- Customizable workflows: To solve the control problem, eesel has a simple workflow engine. You can define an AI's persona, when it should escalate a ticket, and what custom actions it can take, like looking up an order status.

- Simulation and gradual rollout: To avoid deploying a broken system, eesel lets you test your AI on thousands of past customer conversations before it ever talks to a real person. This gives you a clear picture of how it will perform so there are no nasty surprises.

The future of Unity integrations with GPT-Realtime-Mini

So, "Unity integrations with GPT-Realtime-Mini" are genuinely exciting. This stuff could lead to the kind of dynamic, living game worlds we've been talking about for ages. The technology is getting there, and the creative ideas are flowing.

But let's be real, it's not a simple plug-and-play solution. The challenges around cost, the technical difficulty of getting low latency, and the absolute need for systems to control the AI are serious hurdles.

The main thing to remember is that you're not just calling an API. You're building a whole system around it to keep it useful, predictable, and affordable. While building that kind of system for a game is a massive project, the same principles can be applied to customer and internal support.

If you're looking to build a powerful, controllable, and easy-to-manage AI for your support team, check out how eesel AI offers a solution you can get running in minutes, not months.

Frequently asked questions

API costs can be substantial, as every AI interaction generates a charge. For popular games with many players and NPCs, expenses can quickly accumulate, making cost optimization a critical consideration from the outset.

For optimal real-time performance, you'll need to use protocols like WebRTC or WebSockets. These create persistent, two-way connections, allowing for continuous streaming of audio data and minimizing the noticeable lag found with standard HTTP requests.

Implementing true low-latency integration is a significant engineering task, often requiring a middle-tier server to manage connections and tokens. While direct API calls or existing Unity packages can simplify some aspects, they may not be optimized for the demanding real-time requirements.

It's crucial to build a robust workflow engine around the AI. This involves defining specific personas, establishing strict rules for behavior, and providing a controlled list of actions the AI is permitted to take within the game environment.

Managing context requires a clever "memory" system that can dynamically extract and provide only the most relevant information from the game's history or environment for any given interaction. Sending entire game logs with every request would be too slow and expensive.

You can start by using community-provided Unity packages like "com.openai.unity" or making direct "HttpClient" calls. While these might not offer optimized real-time audio streaming, they provide a good foundation for understanding the API and integrating basic text-based interactions.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.