AI coding assistants are popping up everywhere. It seems like you can't scroll through tech news without hearing about tools like GitHub Copilot or OpenAI's Codex, both promising to write, debug, and even refactor code from a simple prompt. It’s definitely an exciting time to be a developer.

But let's be honest. Getting from a slick demo to a tool you actually use every day isn't always a straight line. While these assistants are incredibly powerful, figuring out where to even start, or what their real-world limits are, can be a bit of a puzzle. A lot of us want to play around with them in a flexible space like Google Colab but aren't sure how to get set up or what to watch out for.

That’s what this guide is for. We'll walk through what OpenAI Codex is, get it running in a Google Colab notebook, and then zoom out to look at the bigger picture. We’ll cover the promise, the frustrating limits of specialized AI agents, and what a truly useful AI integration platform should look like.

What is OpenAI Codex?

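OpenAI Codex is an AI model built to turn natural language into code. You can think of it as a programming partner you can talk to. It’s a descendant of GPT-3, fine-tuned on publicly available code, and it was the model that originally powered GitHub Copilot, which you’ve probably seen in action. Note that OpenAI retired the original Codex API models (such as code-davinci-002) in 2023; its newer models now handle the same kinds of tasks.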

Codex is fluent in over a dozen programming languages, but it’s especially good with Python. It also handles JavaScript, Go, Ruby, and others quite well. Its skills go beyond just writing code from scratch. You can also use it for:

- Transpilation: Translating code from one language to another (like from Python to JavaScript).
- Code explanation: Asking it to explain a confusing function or block of code in plain English.
- Refactoring: Helping you clean up or restructure existing code for better readability or performance.
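Each of these tasks mostly comes down to how you phrase the prompt. Below is a minimal sketch of comment-style prompt templates for the three tasks above. The template wording and the `make_task_prompt` helper are our own assumptions, not an official OpenAI format, so expect to tweak them for your model.

```python
# Hypothetical comment-style prompt templates for the three tasks above.
# The wording is an assumption; completion models simply continue the text.
TEMPLATES = {
    "transpile": "# Translate the following {source} code to {target}.\n{code}\n# {target} version:\n",
    "explain": "{code}\n# Explain in plain English what the code above does:\n# ",
    "refactor": "# Refactor the following {source} code for readability.\n{code}\n# Refactored version:\n",
}


def make_task_prompt(task: str, code: str, source: str = "Python", target: str = "JavaScript") -> str:
    """Fill in the template for `task` ('transpile', 'explain' or 'refactor')."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    # str.format ignores unused keyword arguments, so one call covers every template.
    return TEMPLATES[task].format(code=code.strip(), source=source, target=target)


snippet = "def add(a, b):\n    return a + b"
print(make_task_prompt("transpile", snippet))
```

The resulting string is what you would send as the `prompt` to a completion model.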

It's a seriously impressive piece of tech that offers a peek into how we might be building software in the near future. You can read more about it on the official OpenAI Codex page.

Why use Google Colab?

If you're looking for a good place to mess around with AI, Google Colab is hard to beat. It's a free, cloud-based Jupyter notebook environment that has become the default sandbox for tons of developers and data scientists.

Here’s why it’s a great fit for playing with APIs like Codex:

- No setup required: You just open a new notebook in your browser and you're ready to code. You don't have to worry about installing Python, managing virtual environments, or any of the usual local setup headaches.
- Free GPU access: For heavier machine learning tasks, Colab offers free access to GPUs, subject to usage limits. You won't need one for API calls like the ones in this guide, because the model runs on OpenAI's servers. It's still a big plus if you train or run models yourself, and it can save you money compared to running things on your own machine or a paid cloud service.
- Simple collaboration: Colab notebooks are saved right in your Google Drive, so sharing them with your team is as easy as sharing a Google Doc. You can even work on the same notebook in real-time.

It’s pretty much the ideal spot for tinkering, prototyping, and sharing your AI experiments without any of the friction. You can check it out at the Google Colab homepage.

Getting started with OpenAI Codex in Colab

Ready to see Codex do its thing? Getting it up and running in Colab is surprisingly simple. This won't be a deep, exhaustive tutorial, but it’ll give you a clear overview to get you started.

Setting up your environment

First, you need to install the OpenAI Python package. Just open a new code cell in your Colab notebook and run this command:

```
!pip install openai
```

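Next up, you’ll need an API key from OpenAI. Once you have it, store it somewhere safe. Pasting it directly into your notebook is a bad habit, especially if you plan on sharing the notebook with anyone. Colab has a built-in secrets manager for this: click the key icon in the left sidebar, add a secret named OPENAI_API_KEY, and switch on notebook access for it.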
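If you want the same notebook to also work outside Colab, a small helper like the following sketch can look up the key. It checks an environment variable first and then falls back to Colab's secrets store through `google.colab.userdata`. The helper name `load_openai_key` is our own, and `OPENAI_API_KEY` is simply the secret name we assume you chose.

```python
import os


def load_openai_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from an environment variable or, in Colab, from Secrets."""
    # Prefer an environment variable so the same code works outside Colab.
    key = os.environ.get(name)
    if key:
        return key
    try:
        # Only importable inside Colab; userdata.get raises if the secret is
        # missing or the notebook hasn't been granted access to it.
        from google.colab import userdata
        key = userdata.get(name)
    except Exception:
        key = None
    if not key:
        raise RuntimeError(f"{name} not found; add it in Colab Secrets or set it as an env var")
    return key
```

You'd then call `load_openai_key()` wherever the code below reads the secret. The key never appears in the notebook's source.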

Making your first API call

With your environment ready, you can make your first completion call. The trick is to give it context: the model picks up instructions written as code comments, which feels a bit like magic the first time you see it.

Here’s a simple example where we ask it to write a basic function.

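```python
from openai import OpenAI
from google.colab import userdata

# Grab your API key from Colab secrets
client = OpenAI(api_key=userdata.get('OPENAI_API_KEY'))

# Prompt the model using a simple comment
prompt = """
# Python function to calculate the factorial of a number
"""

# The original Codex models (e.g. code-davinci-002) have been retired, and
# openai>=1.0 removed openai.Completion.create. gpt-3.5-turbo-instruct is a
# completion-style model; swap in any completions model your account can use.
response = client.completions.create(
    model="gpt-3.5-turbo-instruct",
    prompt=prompt,
    max_tokens=100,
)

print(response.choices[0].text)
```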

In the output, you should see a complete Python function, generated on the fly (the exact code can differ from run to run). It's a small thing, but it’s a great demonstration of how the model can understand your intent from plain language.
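Because the output varies between runs, it's worth at least confirming it is valid Python before you paste it into your project or execute it. Here is a minimal sketch using the standard library's `ast` module. The helper `check_generated_code` is a hypothetical name, and parsing only catches syntax errors, not wrong logic.

```python
import ast


def check_generated_code(text: str) -> list[str]:
    """Parse generated Python without running it and list its top-level functions."""
    try:
        tree = ast.parse(text)
    except SyntaxError as err:
        raise ValueError(f"generated code does not parse: {err}") from err
    return [node.name for node in tree.body if isinstance(node, ast.FunctionDef)]


# A stand-in for response.choices[0].text; real model output will differ.
generated = '''
def factorial(n):
    return 1 if n <= 1 else n * factorial(n - 1)
'''
print(check_generated_code(generated))  # ['factorial']
```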

A practical example: Data analysis with Pandas

Alright, let's try something a bit more useful, like a task you might actually do in data science. You can use a series of natural language comments to walk Codex through a few steps.

Let's say you want to create a small dataset with pandas and then visualize it. Instead of writing all the code yourself, you can just tell Codex what you want to achieve.

```python
# Create a pandas DataFrame with columns 'Name', 'Age', 'City'
# Add 5 rows of sample data to the DataFrame
# Plot a bar chart showing the ages of the people in the DataFrame
```

By feeding these comments into the prompt, Codex can generate all the pandas and Matplotlib code needed to get it done. This is where it starts to feel less like a tool and more like a partner, especially for tasks that involve a lot of boilerplate code you always have to look up.
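For reference, here's roughly the kind of code you might get back for those three comments. This is a hand-written illustration, not actual model output, and the names, ages, and cities are made-up sample data.

```python
import pandas as pd
import matplotlib.pyplot as plt

# Create a pandas DataFrame with columns 'Name', 'Age', 'City'
# Add 5 rows of sample data to the DataFrame
df = pd.DataFrame(
    {
        "Name": ["Alice", "Bob", "Carla", "Dev", "Emi"],
        "Age": [34, 28, 45, 23, 39],
        "City": ["Austin", "Boston", "Chicago", "Denver", "Seattle"],
    }
)

# Plot a bar chart showing the ages of the people in the DataFrame
ax = df.plot.bar(x="Name", y="Age", legend=False)
ax.set_ylabel("Age")
ax.set_title("Ages of people in the DataFrame")
plt.tight_layout()
plt.show()
```

Comparing what the model returns against something like this is a quick way to spot missing steps or odd choices.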

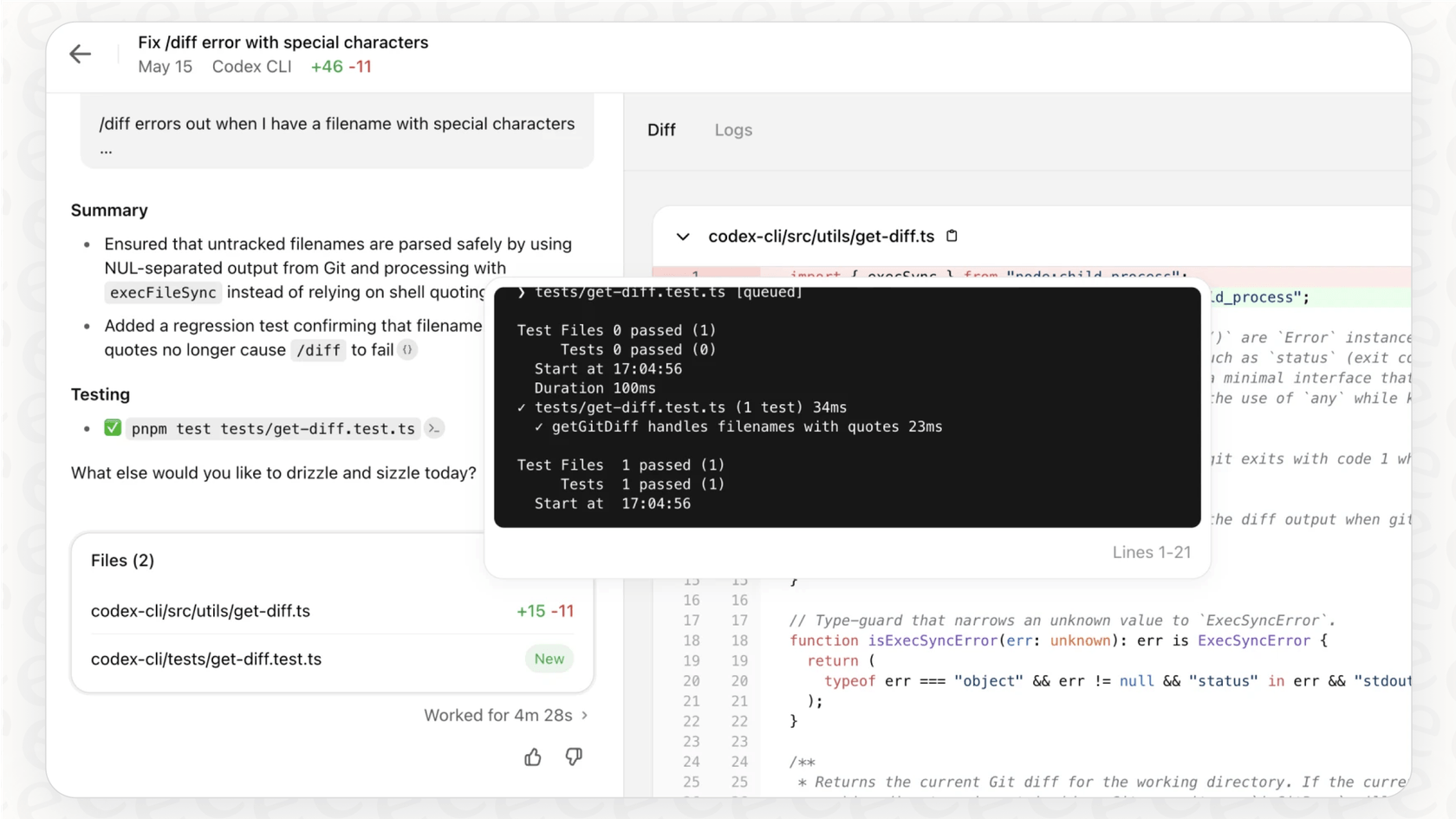

The reality check: Key limitations

So, you've run a few experiments in Colab, and it’s obvious that Codex is a powerful tool. But when you start to think about using something like this in a real team setting, some pretty big cracks start to show. The truth is, most of these specialized AI agents live in closed-off ecosystems, and that causes some major headaches.

The integration bottleneck

One of the biggest frustrations you'll see in developer communities is the lack of integration options. A quick browse of the OpenAI community forums reveals a lot of developers who are stuck because their teams use tools outside of the GitHub ecosystem. As it stands, Codex is tightly woven into GitHub, which makes it a non-starter for the huge number of teams running on Azure DevOps, GitLab, or Bitbucket.

An AI agent, no matter how intelligent, is only useful if it works where you work. Expecting a company to switch its entire version control system just to use one new tool is completely unrealistic. This integration bottleneck means that for many businesses, these powerful AI tools remain just out of reach.

Lack of control and customization

Another common problem is the "black box" feel of these tools. You can guide Codex with prompts, sure, but you have very little control over its underlying behavior. You can't really set its personality, restrict its knowledge to certain domains, or build custom actions that go beyond just writing code.

For any kind of business use, this is a showstopper. You need the ability to:

- Define the AI's persona: Do you want it to be formal and professional, or casual and friendly?
- Scope its knowledge: How do you prevent it from trying to answer questions about things it shouldn't know?
- Create custom actions: What if you need it to do more than just code? Maybe it needs to create a Jira ticket or pull customer info from your internal database.

Without that level of control, you’re left with a tool that's powerful but untamed, and one that might not fit your company’s rules or workflows.
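To make "control" more concrete, one common pattern is to let the model only *request* actions by name, while your own code decides what actually runs. Below is a minimal sketch of that allowlist idea. The actions `create_ticket` and `lookup_customer` are hypothetical stand-ins, not real Jira or database calls.

```python
from typing import Callable


# Hypothetical actions; real versions would call Jira, your database, etc.
def create_ticket(summary: str) -> str:
    return f"ticket created: {summary}"


def lookup_customer(customer_id: str) -> str:
    return f"customer record for {customer_id}"


# The allowlist: the model can trigger only what appears here.
ALLOWED_ACTIONS: dict[str, Callable[[str], str]] = {
    "create_ticket": create_ticket,
    "lookup_customer": lookup_customer,
}


def run_action(name: str, argument: str) -> str:
    """Run a model-requested action only if it is on the allowlist."""
    action = ALLOWED_ACTIONS.get(name)
    if action is None:
        return f"refused: '{name}' is not an allowed action"
    return action(argument)


print(run_action("create_ticket", "Login page times out"))
print(run_action("delete_repo", "main"))
```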

The risk of using untested integrations

Letting a new AI agent run wild on your production codebase is a scary thought. How can you be sure it won't introduce subtle bugs, violate your team's coding standards, or accidentally leak something sensitive? With most of these tools, you can't be sure.

Businesses need a safe way to test and validate an AI agent's performance before it's live. You should be able to run simulations on historical data to see how it would have performed in the past. This lets you measure its potential impact, find its weak spots, and build confidence before you let it near your customers or your production environment. Without a solid testing framework, you're just hoping for the best.
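You can apply the same idea to generated code on a small scale, even without a dedicated platform. Replay known inputs and their expected outputs against the code before you accept it. The sketch below does this; the `replay` helper and the test cases are illustrative. Note that `exec` runs the code with your notebook's full permissions, so this is a correctness check, not a sandbox.

```python
from typing import Any


def replay(code: str, func_name: str, cases: list[tuple[tuple, Any]]) -> float:
    """Exec generated code, call func_name on each case, and return the pass rate."""
    namespace: dict[str, Any] = {}
    exec(code, namespace)  # WARNING: not sandboxed; only run code you've read
    func = namespace[func_name]
    passed = 0
    for args, expected in cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed case
    return passed / len(cases)


generated = "def factorial(n):\n    return 1 if n <= 1 else n * factorial(n - 1)\n"
history = [((0,), 1), ((1,), 1), ((5,), 120), ((10,), 3628800)]
print(replay(generated, "factorial", history))  # 1.0
```

A pass rate below 1.0 tells you exactly which kind of input to look at before the code goes anywhere near production.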

A better way forward

It’s pretty clear that while specialized AI agents are promising, their closed-off nature is holding them back. The future isn’t about being stuck with a single-purpose, inflexible tool. It's about building your own flexible, custom AI workflows that are tailored to your team's specific needs.

Why a platform approach makes sense

Instead of being locked into one vendor's world, teams need a platform that lets them call the shots. A good AI platform should be built on three main ideas:

- Open integration: It should connect to all the tools your team already relies on, whether that's a help desk, an internal knowledge base, or a version control system.
- Deep customization: It has to provide a workflow engine that lets you define exactly what the AI can and can't do, from its tone of voice to the specific tasks it can perform.
- Confident deployment: It needs to have simulation and gradual rollout features so you can test, measure, and go live without holding your breath.

How eesel AI enables true workflow automation

This platform-first vision is exactly what we're focused on at eesel AI, particularly for customer service and internal support teams. It turns out the challenges developers have with coding agents are the same ones support teams have with support automation. They need more than a simple chatbot; they need a platform to build their own AI workflows.

Here’s how eesel AI tackles the problems we've been talking about:

- Get up and running in minutes, not months: We hit the integration problem head-on. With one-click integrations for tools like Zendesk, Slack, and Confluence, you can connect your existing knowledge and systems in minutes. There's no need to rip out everything you're currently using.
- You're in total control of your AI: We give you a fully customizable workflow engine. Unlike the rigid, one-size-fits-all behavior of other tools, eesel AI lets you build custom prompts to define the AI's personality and create custom actions so it can do things like tag a ticket, escalate an issue to a human, or look up order details using an API.
- Test without the risk: Our simulation mode is designed to solve the problem of untested rollouts. You can test your AI setup on thousands of your past support tickets to see exactly how it will perform. This gives you a real, data-backed forecast of its effectiveness before you ever switch it on for your customers.


From code generation to integrated AI colleagues

Playing around with OpenAI Codex in Colab is a great way to see the raw potential of AI-driven code generation. It’s an eye-opening experience that gives a clear signal about where software development is headed.

But these experiments also highlight a massive need for platforms that give you flexibility, control, and safety. The real leap forward isn't just about creating smarter AI assistants that can suggest a line of code. It's about building "AI colleagues" that are deeply and safely woven into your team's unique way of working, whether you're shipping software or helping customers.

The core ideas are the same no matter what you're doing: the best AI is the AI you can easily connect, confidently control, and safely deploy.

Ready to build AI agents that actually fit your team's workflow? See how eesel AI makes it possible to go live in minutes with powerful, customizable AI for your support and internal teams. You can start a free trial today.

Frequently asked questions

You first need to install the OpenAI Python package in a Colab notebook ("!pip install openai"). Then, secure your API key using Colab's built-in secrets manager and access it to make your first API call to a Codex model.

Colab is excellent because it requires no local setup, offers free GPU access for heavier tasks, and simplifies collaboration as notebooks are saved directly in Google Drive. This makes it ideal for quick prototyping and sharing AI experiments.

You can use it to generate code from natural language descriptions, such as creating Pandas DataFrames or plotting charts. Codex also helps with code transpilation, explanation, and refactoring, making it a versatile coding partner.

Key challenges include integration bottlenecks, as Codex is tightly coupled with GitHub, limiting use for teams on other platforms. There's also a lack of control over the AI's behavior and the risk of deploying untested code without proper validation frameworks.

It's crucial to avoid pasting API keys directly into your notebook. Instead, use Colab's built-in secrets manager (accessible via the key icon) to securely store and retrieve your OpenAI API key, especially if you plan to share your notebook.

The blog advocates for a platform approach that offers open integration with existing tools, deep customization to define AI persona and actions, and confident deployment through simulation and gradual rollout features. This enables building AI workflows tailored to specific team needs.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.