There's a lot of chatter about advanced AI coding tools like Anthropic's Claude Code. It’s an AI assistant that can write, debug, and even refactor complex software from simple English instructions. It’s the kind of tech that makes you step back and say, "wow."

But here's a thought: while your support team probably doesn't need an AI that can build an app from the ground up, the principles that make Claude Code so effective are exactly what you need to build a truly great AI support agent. The technique behind it all is called Claude Code prompt engineering, and it’s how you turn a generic chatbot into an intelligent, autonomous teammate.

This article will break down what we can learn from Claude Code prompt engineering and show you how to apply those lessons to your customer support AI, no developers required.

First, what is Claude Code prompt engineering?

Let's quickly get on the same page with these terms.

Claude Code is an AI assistant built for developers. Think of it as a pair programmer that lives in your computer. It can scan an entire codebase, figure out what you’re trying to do, and help you write new features, fix bugs, or get the hang of a new library. It's made to handle really technical, detailed work.

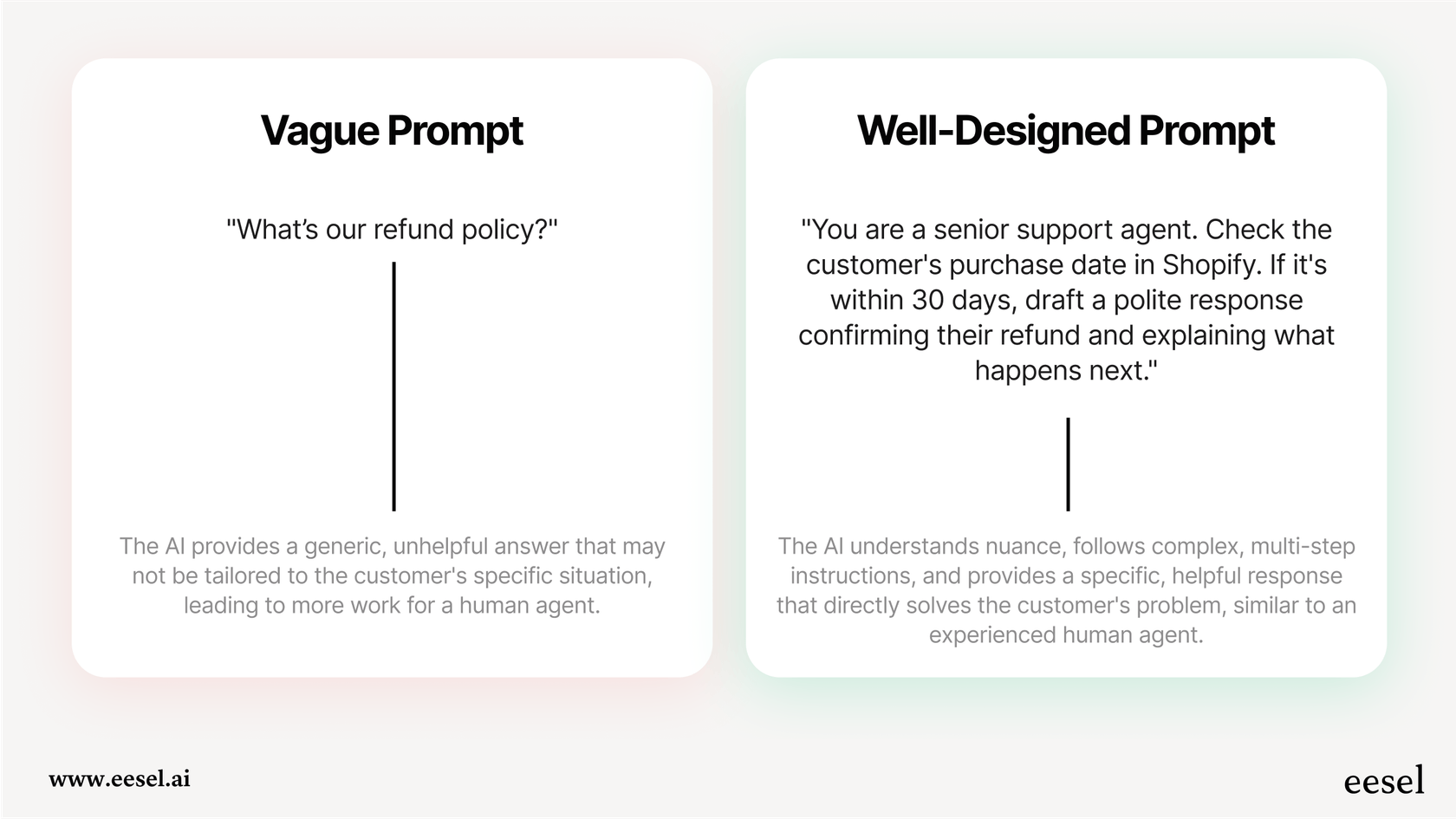

Prompt Engineering is the practice of writing detailed instructions (prompts) to get the best possible answers from an AI model like Claude. It’s the difference between asking an AI, "What’s our refund policy?" and telling it, "You are a senior support agent. Check the customer's purchase date in Shopify. If it's within 30 days, draft a polite response confirming their refund and explaining what happens next."

For support teams, that difference is huge. Vague prompts get you those generic, unhelpful AI answers that drive customers crazy and make more work for your agents. Well-designed prompts, on the other hand, create an AI that understands nuance, follows complex steps, and actually solves problems, just like a seasoned human agent.

Key lessons from Claude Code prompt engineering

Getting great results from a powerful AI doesn't happen by accident. The folks at Anthropic and other experts have shared tons of best practices for getting the most out of their models. And while they were thinking about code, these ideas translate perfectly to the world of customer support.

Let’s walk through three core techniques that really move the needle.

Giving the AI a job title and a rulebook

The first, and maybe most important, step is to tell the AI who it is and what its rules are. A developer using Claude Code might start a prompt with, "You are an expert Python developer who specializes in data analysis." This single instruction, often called a "system prompt," frames the whole conversation. It tells the AI which parts of its massive brain to use and what kind of tone to take.

How this applies to support:

In customer support, this means giving your AI a clear persona. Is it a friendly, empathetic agent who uses emojis? Or is it a more formal, technical expert who gets right to the business? This isn't just about branding; it sets expectations for the customer and keeps your responses consistent.

Just as important are the rules of engagement. A system prompt for a support AI should have clear guardrails, like:

- "Never promise a feature that isn't on our public roadmap."

- "If a customer seems frustrated, use an empathetic tone and acknowledge their feelings before offering a solution."

- "If you can't find the answer in the knowledge base with 100% certainty, pass the ticket to a human agent right away."

Without a role and a set of rules, an AI is just a fancy search engine. With them, it starts acting like a real member of your team.

Using structure and good examples

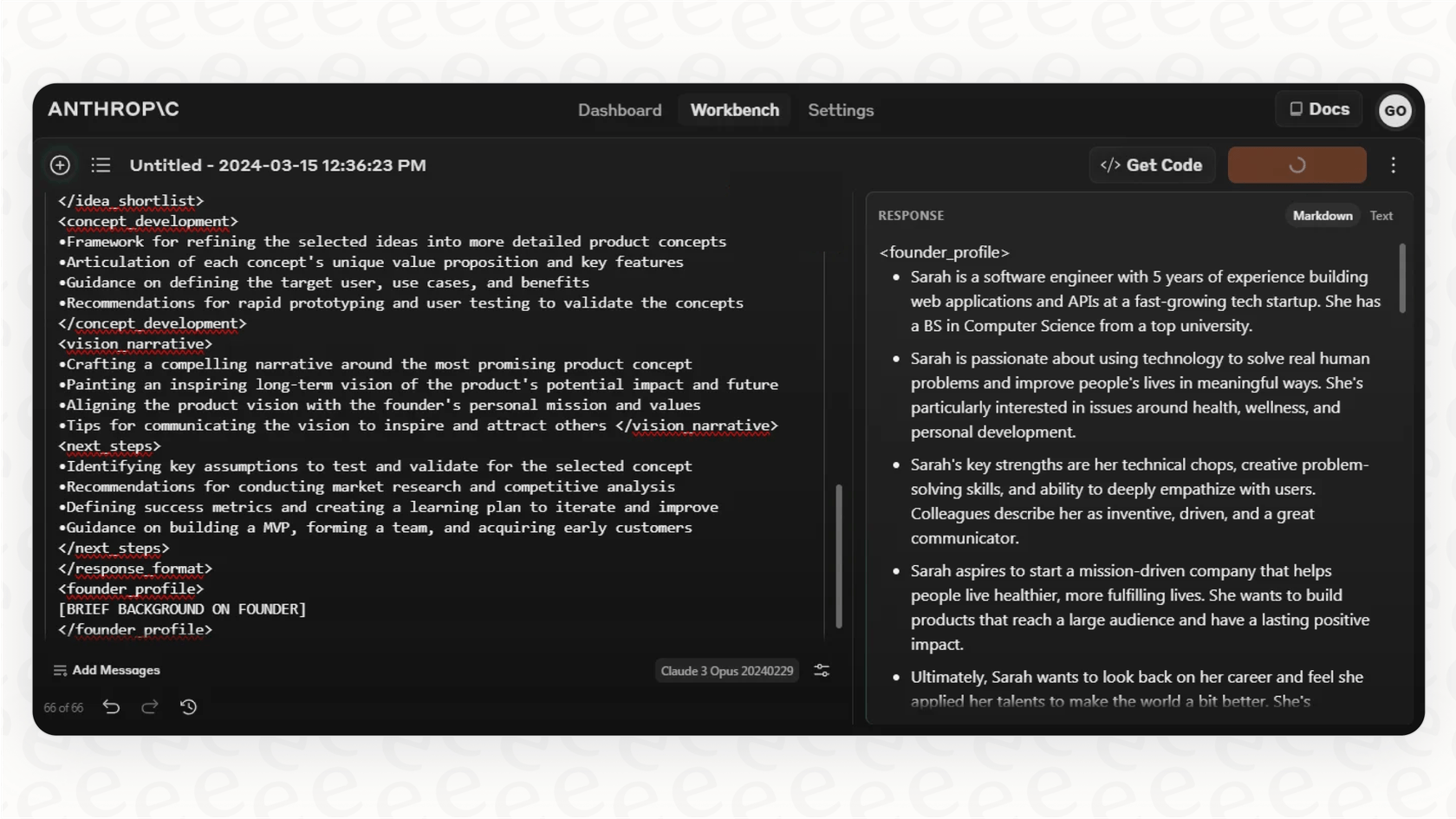

If you look at the prompts a developer writes for Claude, you won't see a big, messy paragraph. You'll see a structured format, often using simple tags (like <document> or <example>) to separate instructions from context and examples. This structure is a big deal because it helps the AI sort through the information. It knows the text inside <instructions> is a command to follow, while text inside <knowledge_base_article> is just for reference.

This is often paired with a technique called "few-shot prompting." All that means is you give the AI a few examples of a good question and the perfect answer. This works way better than just telling the AI what to do because it can see what you’re looking for.

How this applies to support:

Your support AI is constantly looking at different pieces of information: the customer's question, your knowledge base, past tickets, and its own instructions. Using a structured format helps it keep everything straight.

More importantly, giving it examples is the fastest way to teach an AI your company’s specific way of doing things. You could show it a couple of successfully handled tickets for a common issue, like a refund request.

- Example 1: A customer asks for a refund one day after buying. The AI sees the example response is quick, apologetic, and the refund is processed immediately.

- Example 2: A customer asks for a refund 45 days after buying. The AI sees the example response politely explains the 30-day policy and offers store credit as an alternative.

By seeing these two examples, the AI learns the nuances of your policy much better than it could by just reading a document. It picks up on your tone, your process, and how you handle edge cases.

Breaking big problems into smaller steps

You can't expect an AI to solve a complicated problem in one go. The best prompt engineers tell Claude to "think step-by-step" before it gives a final answer, sometimes asking it to put its thoughts in a special <thinking> tag. This is called "Chain of Thought" reasoning. It forces the AI to slow down, break down the problem, and show its work, which helps it get to the right answer on tricky, multi-step tasks.

"Prompt chaining" takes this even further by turning one big task into a series of smaller ones. The output from the first prompt becomes the input for the second, creating a workflow.

How this applies to support:

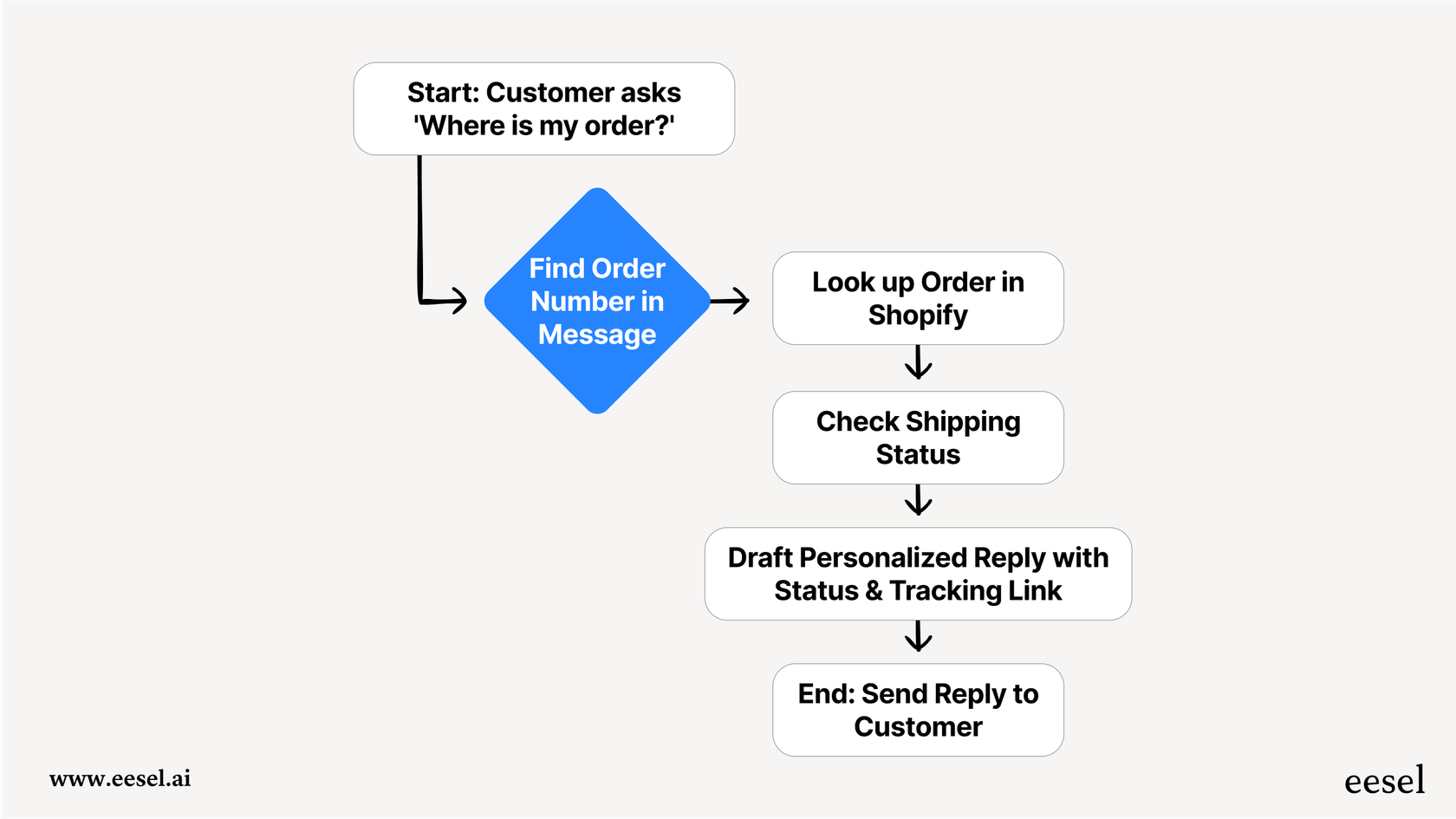

A real customer issue rarely has a one-shot solution. Take a simple "Where is my order?" request. A genuinely helpful answer requires a few steps that a basic AI can't manage:

- Step 1 (Prompt 1): Find the customer's order number in their message.

- Step 2 (Prompt 2): Use that order number to look up the details in a tool like Shopify.

- Step 3 (Prompt 3): Check the latest shipping status from the carrier.

- Step 4 (Prompt 4): Write a personalized reply that includes the current status, a tracking link, and the estimated delivery date.

This is the kind of step-by-step thinking that separates a simple FAQ bot from an agent that can actually resolve issues on its own.

The catch: why Claude Code prompt engineering is hard to do in a busy support team

So, these principles clearly work. The problem? You can't expect your support managers or agents to suddenly become prompt engineering experts.

It's just too technical. Writing structured prompts with tags, managing complex instructions, and connecting different systems for multi-step tasks is a developer's job. Support teams have better things to do, namely, helping customers.

It also doesn't scale. You can't sit there and manually write the perfect, multi-step prompt for every single type of ticket that comes in. You need a way to apply these principles automatically across thousands of unique conversations every day.

This is where a lot of the generic "AI for support" tools hit a wall. The built-in AI in help desks like Zendesk or Jira often just gives you a text box to write a simple prompt. They don't have the engine underneath to handle multi-step reasoning or learn from your specific data. It feels clunky and manual.

How to get expert results without being a Claude Code prompt engineering guru

The good news is you don't have to choose between a simplistic, ineffective AI and hiring a team of engineers. A purpose-built platform like eesel AI has these advanced prompt engineering principles baked right into an easy-to-use interface.

Here’s how it closes that gap:

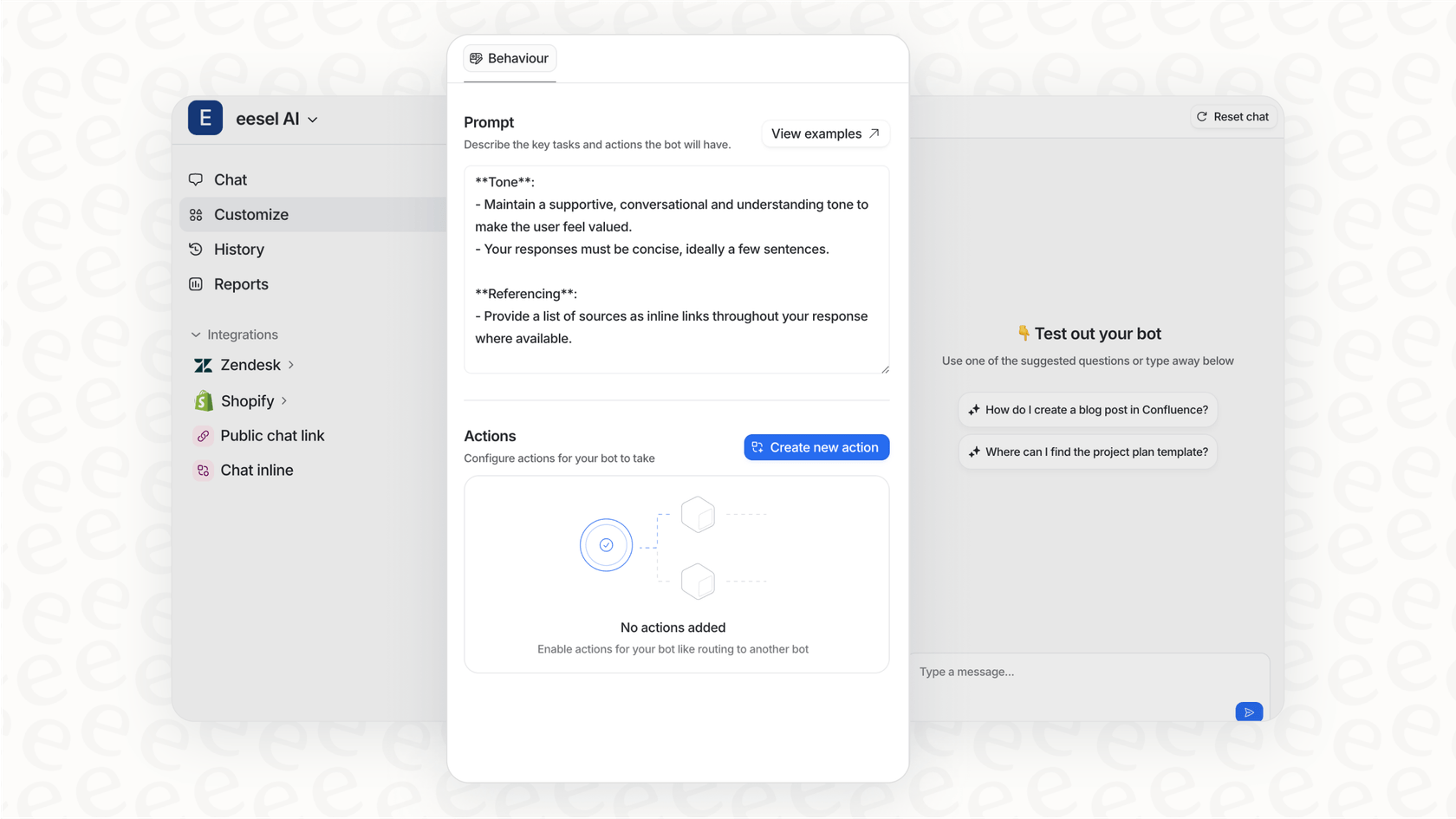

- You're the director, without the code: Instead of asking you to write technical system prompts, eesel AI gives you a simple editor to define your AI's persona, tone, and when it should escalate. You get all the power of setting roles and rules without writing a single line of code.

-

It learns from your best work, automatically: You don't need to manually create examples for the AI. eesel AI offers a one-click integration with helpdesks like Zendesk or Freshdesk and learns from your thousands of past resolved tickets. It studies your conversation history to immediately understand your specific issues, common solutions, and brand voice.

-

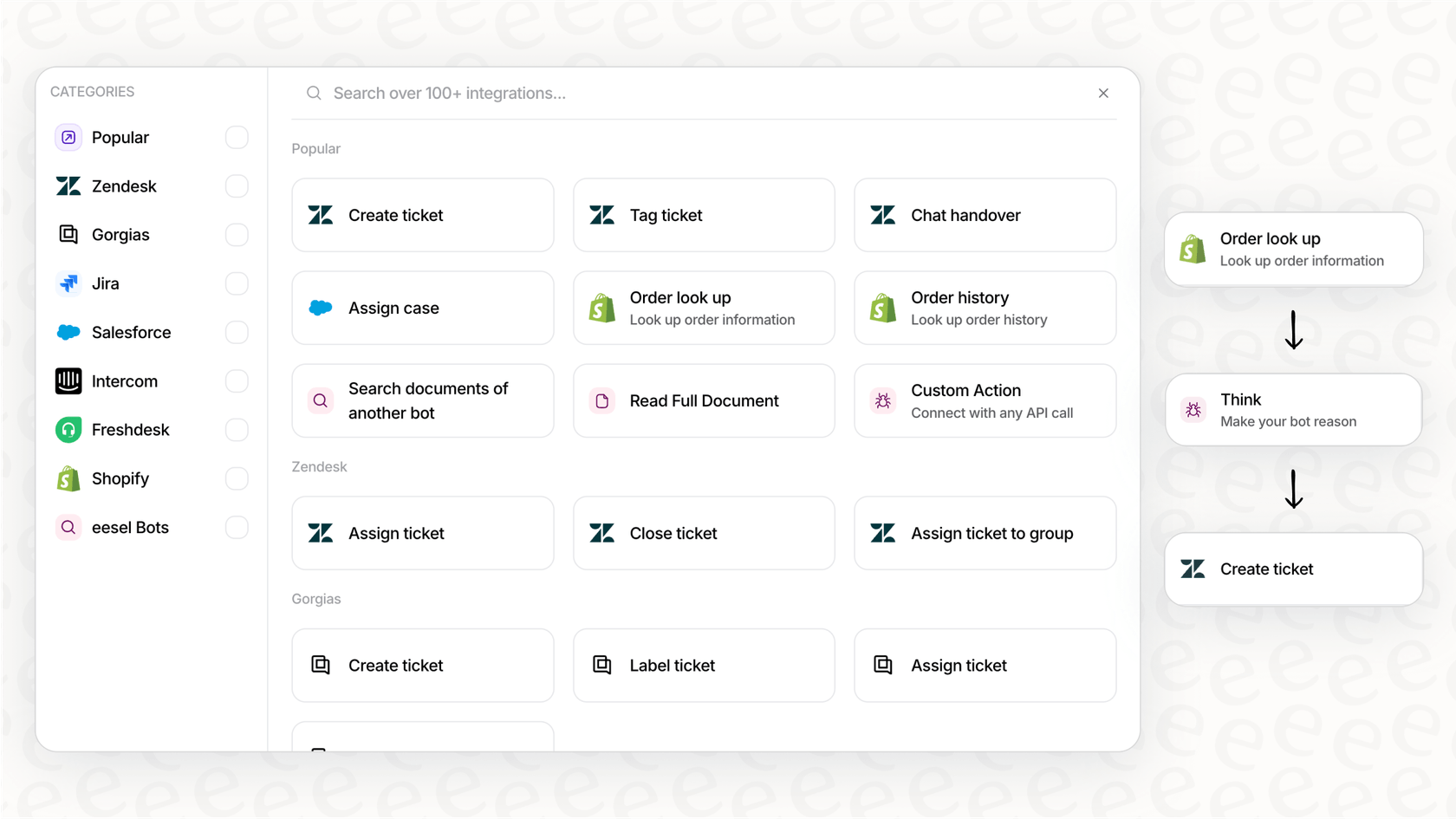

Build powerful workflows, no coding needed: Forget about complex prompt chaining. With eesel AI's "AI Actions," you can build out sophisticated, multi-step workflows with a few clicks. You can set up your AI agent to look up order info in Shopify, triage tickets in Zendesk, create an issue in Jira Service Management, or ping an agent in Slack. It delivers the power of "Chain of Thought" reasoning in a manageable way.

- Test drive it with your own data: One of the biggest headaches with advanced prompts is wondering if they'll work in the real world. With eesel AI, you can run simulations on thousands of your past tickets in a safe environment. You can see exactly how your AI agent would have responded, get solid forecasts on your resolution rates, and see your potential cost savings before the AI ever talks to a live customer.

Start building smarter AI support today with Claude Code prompt engineering

The amazing power of tools like Claude Code comes from sophisticated Claude Code prompt engineering. But for support teams, the answer isn't learning to write prompts like a developer. It’s about adopting a platform where that expertise is already built in.

eesel AI gives you all the perks of advanced AI, high accuracy, custom workflows, and deep contextual knowledge, in a platform that's surprisingly simple to set up and manage. You can connect your helpdesk, train your AI on your real data, and see it working in minutes, not months.

Don't settle for a chatbot that just repeats your FAQ page back to customers. See what a truly intelligent AI support agent can do for your team. Sign up for a free trial and run a simulation on your own tickets to see your automation potential.

See the foundational principles of prompt engineering in action with this guide from the creators of Claude.

Frequently asked questions

Not at all. The key takeaway is to understand the principles, but you shouldn't have to do the technical work yourself. A purpose-built platform should handle the complex engineering, letting you focus on defining the rules and tone for your AI agent.

The most important lesson is that context and instructions are everything. Giving the AI a clear role, a set of rules, and examples of what good looks like is the difference between a generic bot and an intelligent agent that acts like a true member of your team.

A great starting point is defining a clear persona and a few non-negotiable rules for your AI in a system prompt. Simply telling it "You are a friendly support agent who never promises future features" can significantly improve the quality of its responses.

A good FAQ provides static answers, but Claude Code prompt engineering teaches an AI how to think and solve problems. It allows the AI to follow multi-step processes, like looking up an order and then drafting a personalized status update, which is far more dynamic than an FAQ.

Yes, doing it manually is not scalable, which is the main challenge the article highlights. That's why platforms designed for support automation are so valuable, they apply these advanced principles automatically by learning from your past tickets and providing no-code workflow builders.

Customer issues often require multiple steps like identifying the customer, looking up data, and then forming a response. This technique forces the AI to think logically through those steps instead of just guessing at a final answer, which dramatically increases accuracy and resolution rates.

Share this post

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.