Molt Bot: A complete overview of the viral AI assistant

Kenneth Pangan

Stanley Nicholas

Last edited January 30, 2026

Expert Verified

If you're in the dev or AI world, you've probably seen Molt Bot pop up everywhere. It used to be called Clawdbot, then OpenClaw, and it gained popularity on GitHub, hitting over 20,000 stars quickly. The project is an experiment in giving an LLM full control of a computer, including the keyboard and mouse.

Think of it less like a chatbot and more like a digital assistant that can perform actions. However, this capability also introduces significant security considerations. This article will break down what Molt Bot is, its appeal to developers, and the security risks involved with its use in a business setting. We'll also explore what to look for in a business-ready AI teammate.

What is Molt Bot?

So, what exactly is Molt Bot? It's an open-source AI assistant that you host yourself. That's the important part: it's not running in the cloud on some corporate server, it's on your computer. That could be your everyday Mac or Windows machine, a Raspberry Pi, or a cloud server you're renting.

You interact with it through common chat apps like WhatsApp, Telegram, Discord, or Slack. According to its website, the goal is to have an AI that does more than just wait for you to type a command. It's built to be a proactive partner that can handle tasks, start workflows, and even learn new things without you having to guide it every step of the way. It's a fast-moving project, which explains why it's gone through several names so quickly.

Key features: What makes Molt Bot so powerful?

The reason Molt Bot got so much attention is that it does more than just chat. It's like an AI with "eyes and hands" that can actually get things done on your computer and across the web.

Full system and browser access

Molt Bot's main strength is its complete, unrestricted access to the computer it's on. It can read and write files, launch apps, and run any command-line script you can. This is what allows it to go way beyond just generating text.

It also has full control over a web browser, so it can visit websites, click buttons, fill out forms, and scrape data. It’s like having an intern who can browse the web for you 24/7. As one user described it, it’s a "smart model with eyes and hands." In one example, a user said their Molt Bot taught itself how to get its own API keys by navigating the entire Google Cloud Console interface.

Proactive and autonomous agent

Unlike assistants like Siri or ChatGPT that just sit there waiting for you, Molt Bot is designed to be autonomous. It has "heartbeats" that let it check on tasks proactively and can be scheduled with cron jobs to perform actions without any input from you.

It also has a persistent memory, so it remembers past conversations and context across different chat apps. This makes it feel more like a continuous collaboration rather than a tool you have to re-brief every time. One developer even shared that their bot found and fixed failing tests in their code on its own, then opened a pull request with the fix. That's a completely different league of "assistant."

Extensibility through community-driven skills

Molt Bot isn't limited to its out-of-the-box features. It uses a plugin system called "skills," which are just small bits of code that teach it new abilities. There's a community library called ClawdHub where users share skills for integrating with tools like Todoist, WHOOP, and various smart home gadgets.

Molt Bot can also write its own skills. You can ask it to build a new integration, and it will try to generate the code itself. This makes it almost infinitely customizable and is a huge part of why the developer community is so excited about it.

Security considerations: Why Molt Bot presents risks

While Molt Bot's capabilities are impressive, they come with significant security considerations. The project's own documentation acknowledges these risks, stating, "There is no ‘perfectly secure’ setup." For individual developers experimenting with the tool, these risks may be acceptable. For a business, however, they present serious challenges.

Plaintext secrets and data exposure

Let's start with the basics. Security researchers from firms like Noma Security and Snyk found that Molt Bot stores almost everything in plain text. Its memory, config files, and all your API keys for services like OpenAI are just sitting in a file named clawdbot.json.

This presents a significant security risk. If the computer running Molt Bot gets compromised, an attacker can just snatch that file and gain access to your entire digital life. As Snyk noted, this isn't just a password leak; it’s a blueprint of the AI's "brain." An attacker could use that information for highly convincing impersonation or social engineering attacks that would be nearly impossible to spot.

Unconstrained autonomy and prompt injection risks

Giving an AI full access to your system and the internet is a bold move. Giving it the ability to act on its own is another level of risk. This is where a vulnerability known as "indirect prompt injection" becomes a problem. It's a way of tricking an AI by feeding it malicious instructions from an outside source.

A Snyk security researcher showed how this works. He sent a specially crafted email to an account Molt Bot was monitoring. The email had hidden instructions that tricked the agent into reading its own clawdbot.json file and sending all the sensitive data back to the attacker. Because Molt Bot is designed to interact with the outside world, any input, like an email, a website, or a community "skill," could be a Trojan horse that hijacks the agent.

The need for a business-ready AI teammate

Molt Bot lacks features typically required for business environments, such as a formal security model, user permissions, audit trails, and sandboxed testing environments. Businesses generally require an AI teammate that can be managed, trained, and overseen. For these use cases, platforms like eesel AI are built on a "teammate model" for professional use. They offer enterprise-level security features, including data encryption, role-based access, and SOC 2 Type II certified infrastructure. Their architecture is also designed to mitigate risks like prompt injection by creating a secure boundary between the AI and company data. This approach provides the benefits of an AI agent within a more controlled framework.

Molt Bot setup and hosting: A look at the complexity

Setting up Molt Bot isn't like downloading something from the app store. Guides from DigitalOcean and Hostinger show that you need to be pretty tech-savvy. If you're not comfortable using the command line, managing servers, and doing regular maintenance, it's going to be a tough time.

The reality of self-hosting an AI agent

A typical installation means logging into a server over SSH, installing software like Git and Docker, cloning the Molt Bot code, and running a bunch of command-line scripts. It's far from a simple experience.

This has led to people buying dedicated Mac minis or renting a virtual private server (VPS) just to keep their Molt Bot online 24/7. That adds hidden hardware and maintenance costs you might not expect. This DIY setup also means you are completely responsible for security. You have to set up firewalls and ensure nothing is exposed to the internet, a risk that has already led to unsecured Molt Bot instances being discovered on Shodan.

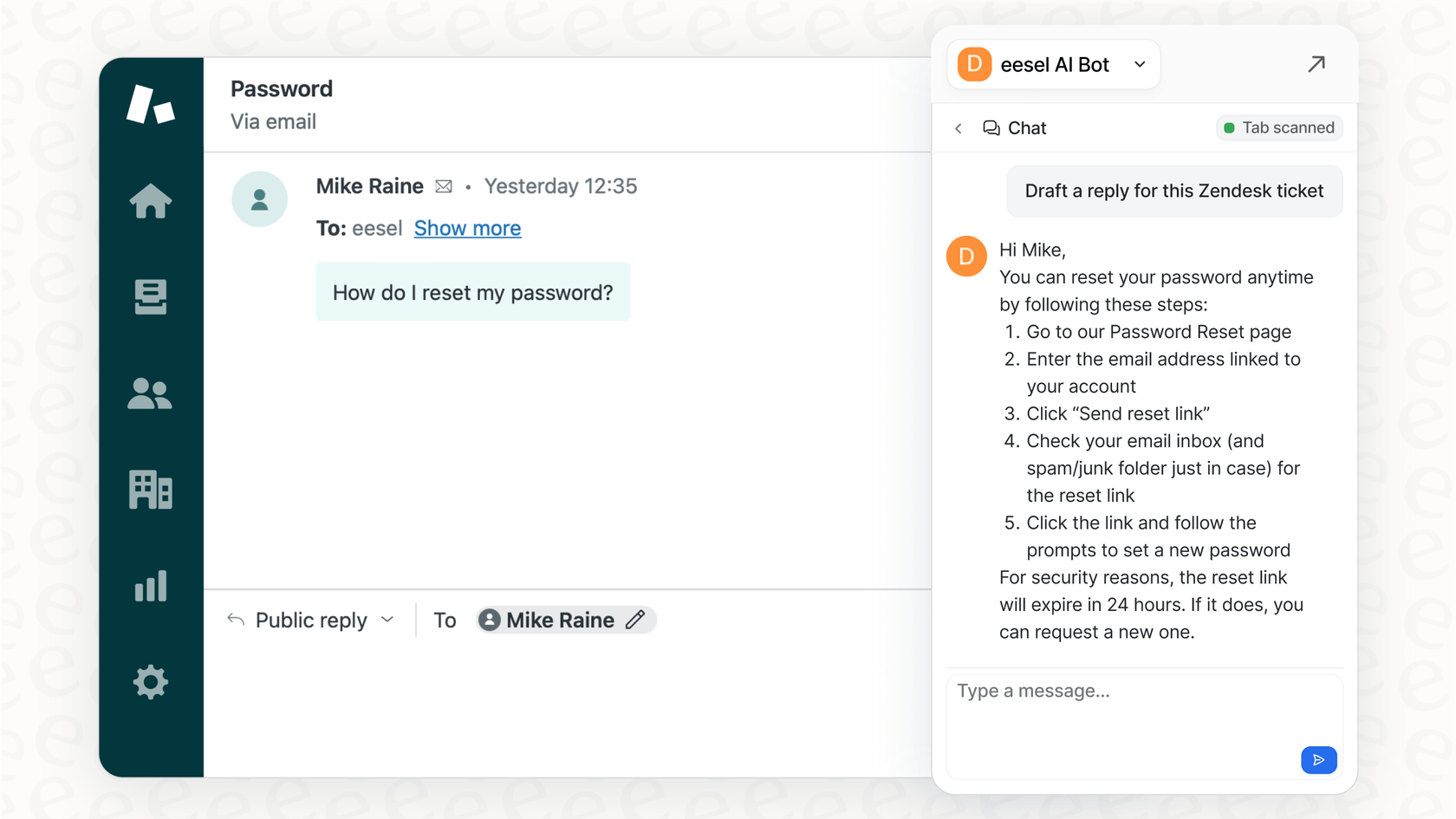

An alternative approach: Managed platforms

This contrasts with the user experience of a business-ready platform. With a tool like eesel AI, a user can start a free trial and connect it to their existing systems like Zendesk, Intercom, or Confluence within minutes. The AI begins learning from past tickets, help articles, and internal documents immediately. This approach eliminates the need for server setup, command-line interaction, and direct security management by the user, offering a managed platform for teams looking to leverage AI.

Final thoughts on Molt Bot

Molt Bot is an important and innovative project. It serves as a trailblazer for personal, autonomous AI, and the community behind it is doing exciting work. It provides a glimpse into a future where AI agents work as our partners.

However, the features that make it compelling for hobbyists, such as deep system access and a self-hosted design, are the same reasons it presents challenges for many professional teams. The inherent security risks, setup complexity, and lack of business-oriented controls and oversight are key factors to consider.

For a deeper dive into how Molt Bot works and what the community is building with it, the video below offers a clear explanation of its core concepts.

A video from Greg Isenberg explaining the core concepts of Moltbot and how it can be used as a proactive AI teammate.

Finding a trustworthy AI teammate for your business

For those interested in the potential of AI agents but concerned about the risks of experimental open-source projects, a managed platform may be a more suitable approach. eesel AI adapts the concept of an AI teammate for practical and secure business applications.

It offers a secure and manageable AI teammate that can be deployed with confidence. Teams can begin by using it as an AI Copilot, which drafts replies for human agents to review. Based on its performance, its role can be expanded to that of a fully autonomous AI Agent that handles tickets independently within a controlled and secure environment.

This allows businesses to leverage the power of AI agents while maintaining strict control over security, data privacy, and brand voice.

Ready to see the difference? Learn how you can "hire" an AI teammate that gets up to speed on your business in minutes and starts working safely with your team from day one.

Frequently Asked Questions

Share this post

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.