With generative AI popping up everywhere, everyone's scrambling to figure out how to actually use it for their business. For developers, this often means finding the right tools to build custom apps that are more than just a simple chatbot. This is where LangChain enters the picture. It's one of the most popular open-source toolkits out there for creating applications powered by large language models (LLMs).

This guide will give you a clear, no-fluff rundown of what LangChain is, how its pieces fit together, what you can build with it, and what it really costs to get a project off the ground.

Here’s the main takeaway: While frameworks like LangChain are incredibly powerful, they require a serious amount of developer time and effort to get right. We'll also look at how modern platforms can give you all that power without the headache of long development cycles.

What is LangChain?

At its heart, LangChain is an open-source framework that helps you build applications using LLMs. It's not an AI model itself. Instead, think of it as a toolbox that helps developers connect an LLM (like GPT-4) to other data sources and software. It’s available in Python and JavaScript and offers a bunch of pre-built components that make common tasks a bit easier.

Its popularity exploded right alongside tools like ChatGPT because it lets developers go way beyond basic questions and answers. Instead of just asking a model something, you can build complex systems that are aware of your specific data, can reason about it, and even take actions. Essentially, LangChain acts as the glue between your chosen LLM and the rest of the world: your documents, databases, and other tools.

The name itself gives a clue to its core idea: you "chain" different components together to create a workflow. For instance, you could build a chain that takes a customer's question, finds the answer in a specific PDF, and then passes both the question and the info to an LLM to generate a perfect response.
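To make that flow concrete, here is a minimal sketch in plain Python. It deliberately does not use LangChain's own API: `find_passage`, `build_prompt`, `fake_llm`, and `answer_question` are hypothetical names, the "PDF" is just a list of strings, and the model call is a placeholder.

```python
from typing import Callable, List

# Hypothetical stand-in for text already extracted from a PDF.
PDF_PAGES: List[str] = [
    "Refunds are available within 30 days of purchase.",
    "Shipping takes 3 to 5 business days within the US.",
]

def find_passage(question: str, pages: List[str]) -> str:
    """Step 1: pick the page that shares the most words with the question."""
    q_words = set(question.lower().split())
    return max(pages, key=lambda p: len(q_words & set(p.lower().split())))

def build_prompt(question: str, passage: str) -> str:
    """Step 2: combine the question and the retrieved passage."""
    return f"Context: {passage}\nQuestion: {question}\nAnswer:"

def fake_llm(prompt: str) -> str:
    """Step 3: placeholder for a real model call (an assumption, not a real API)."""
    context_line = prompt.splitlines()[0]
    return "Based on our docs: " + context_line[len("Context: "):]

def answer_question(question: str, llm: Callable[[str], str] = fake_llm) -> str:
    """The 'chain': each step's output feeds the next step."""
    passage = find_passage(question, PDF_PAGES)
    return llm(build_prompt(question, passage))

print(answer_question("How long does shipping take?"))
```

Swapping `fake_llm` for a function that calls a real model is the only change the last step would need, which is roughly the kind of plumbing a framework handles for you.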

How LangChain works: The core pieces

The real magic of LangChain is its modular setup. It gives developers a box of Lego-like pieces they can snap together to build all sorts of AI applications. Let's break down the most important ones.

LangChain models, prompts, and parsers

First, you've got the essentials: an AI model, a way to talk to it, and a way to make sense of its reply.

- Models: LangChain gives you a standard way to connect to pretty much any LLM out there, whether it's from OpenAI, Anthropic, or an open-source model you're running yourself. This is great because it means you can swap out the brain of your operation without rewriting everything.
- Prompts: A "PromptTemplate" is basically a reusable recipe for the instructions you feed the LLM. Getting these instructions just right, a skill known as prompt engineering, is a huge part of making a good AI app. It's also one of the most tedious parts of the process, often requiring endless tweaking.
- Parsers: LLMs usually just spit out plain text, which can be tough for a program to work with. Output parsers help you force the model to give you a response in a structured format, like JSON, so you can reliably use its output in the next step of your chain.
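As a rough illustration of the prompt and parser ideas, the sketch below uses only Python's standard library rather than LangChain's classes. The template text, the `render_prompt` and `parse_reply` helpers, and the hard-coded model reply are all assumptions made for the example.

```python
import json
from typing import Dict

# A reusable prompt "recipe" with named placeholders.
TICKET_PROMPT = (
    "You are a support assistant for {company}.\n"
    "Classify the ticket below and reply with JSON only, "
    'using the keys "category" and "urgent".\n'
    "Ticket: {ticket}"
)

def render_prompt(company: str, ticket: str) -> str:
    """Fill the template's placeholders with concrete values."""
    return TICKET_PROMPT.format(company=company, ticket=ticket)

def parse_reply(raw: str) -> Dict[str, object]:
    """Turn the model's text reply into a validated dictionary."""
    # Models often wrap JSON in extra prose, so keep only the outermost braces.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end == -1:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(raw[start : end + 1])
    missing = {"category", "urgent"} - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data

prompt = render_prompt("Acme", "My invoice was charged twice!")
# Hypothetical model reply, hard-coded so the example runs offline.
reply = 'Sure! {"category": "billing", "urgent": true}'
print(parse_reply(reply))
```

The parser fails loudly when the reply has no JSON or is missing a key, which is usually easier to debug than letting a malformed reply flow into the next step.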

While developers use LangChain to code these templates and parsers by hand, it takes a lot of specialized know-how. For teams that just need results, a platform like eesel AI has a simple prompt editor where you can define an AI's personality and rules through a friendly interface, with zero code involved.

LangChain indexes and retrievers for your own knowledge

LLMs have a big blind spot: they only know what they were trained on. They have no idea about your company's internal documents or your latest help articles. To make them truly useful, you need to give them access to your specific knowledge. This whole process is called Retrieval Augmented Generation, or RAG for short.

LangChain gives developers the tools to build a RAG system from the ground up:

- Document Loaders: These are connectors that pull in data from all sorts of places, like PDFs, websites, Notion pages, or databases.
- Text Splitters: LLMs can only take in a limited amount of text at once (their context window), so a 100-page document often won't fit in a single request. Text splitters break big files down into smaller, bite-sized chunks.
- Vector Stores & Embeddings: This is where the RAG magic happens. Your text chunks are turned into numerical representations called "embeddings" and stored in a special database called a vector store. This lets the system do a super-fast similarity search to find the most relevant pieces of information for any given question.
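The splitting, embedding, and retrieval steps can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not how LangChain implements them: the "embedding" here is a simple word-count vector (real systems use a learned embedding model), and `split_text`, `embed`, `cosine`, and `TinyVectorStore` are hypothetical names.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

def split_text(text: str, chunk_size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into chunks of `chunk_size` words that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i : i + chunk_size]) for i in range(0, len(words), step)]

def embed(text: str) -> Dict[str, int]:
    """Toy embedding: a bag-of-words count vector."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class TinyVectorStore:
    """In-memory stand-in for a vector store: keeps (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Dict[str, int]]] = []

    def add(self, chunks: List[str]) -> None:
        self.items.extend((c, embed(c)) for c in chunks)

    def search(self, query: str, k: int = 1) -> List[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

doc = (
    "Our refund policy allows returns within 30 days. "
    "Standard shipping takes three to five business days. "
    "Premium support is available on the enterprise plan."
)
store = TinyVectorStore()
store.add(split_text(doc))
print(store.search("How long does shipping take?"))
```

Notice that the naive word-based splitter cuts sentences in half. That is one reason chunk size and overlap tend to need tuning in a real pipeline.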

Building a solid RAG pipeline with LangChain is a real data engineering project. In contrast, eesel AI helps you bring all your knowledge together instantly. You can connect sources like Confluence, Google Docs, and your past help desk tickets with just a few clicks. The platform handles all the tricky parts of chunking, embedding, and retrieval for you.

LangChain chains and agents

This is where all the pieces come together to do something useful.

- Chains: Just like the name implies, chains are how you link different components into a single workflow. The simplest classic version is an "LLMChain", which just connects a model with a prompt template (recent LangChain releases mark it as deprecated in favor of composing the same pieces with the LangChain Expression Language). But you can build much more complex chains that string together multiple steps to get a job done.
- Agents: Agents are a big step up from chains. Instead of just following a fixed set of steps, an agent uses the LLM as a "brain" to decide what to do next. You give the agent a toolbox (things like a web search, a calculator, or access to an API), and it figures out which tools to use, and in what order, to solve a problem. It’s the difference between following a recipe and actually being a chef.
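The agent idea boils down to a loop: ask the "brain" what to do, run the chosen tool, feed the result back, and repeat until it decides it is done. The sketch below shows that loop in plain Python. It is not LangChain's agent API, and `fake_llm_decide` is a hypothetical rule-based stand-in for a real model, so the example runs offline.

```python
from typing import Callable, Dict, List, Tuple

# The agent's toolbox: plain functions keyed by name.
def calculator(expression: str) -> str:
    """Evaluate two integers written like '6 * 7' or '2 + 3'."""
    left, op, right = expression.split()
    a, b = int(left), int(right)
    if op == "*":
        return str(a * b)
    if op == "+":
        return str(a + b)
    raise ValueError(f"unsupported operator: {op}")

def word_count(text: str) -> str:
    """Count whitespace-separated words."""
    return str(len(text.split()))

TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "word_count": word_count,
}

def fake_llm_decide(task: str, history: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Hypothetical stand-in for the LLM 'brain'; returns (action, argument).

    A real agent would prompt a model with the task, the tool descriptions,
    and the history so far, then parse the model's reply.
    """
    if history:  # A tool result is available, so finish with it.
        return "finish", history[-1][1]
    if any(ch.isdigit() for ch in task):
        return "calculator", task
    return "word_count", task

def run_agent(task: str, max_steps: int = 5) -> str:
    """Loop: ask the 'brain' for the next action, run the tool, repeat."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        action, argument = fake_llm_decide(task, history)
        if action == "finish":
            return argument
        history.append((action, TOOLS[action](argument)))
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("6 * 7"))
print(run_agent("how many words are here"))
```

The `max_steps` cap is a deliberate design choice: because the model picks the next step, an agent needs a hard limit so that a confused "brain" cannot loop forever.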

Common LangChain use cases (and their challenges)

Because LangChain is so flexible, you can use it to build almost anything you can think of. Here are a few of the most common applications, along with some of the real-world hurdles you'll hit when building them yourself.

Custom LangChain chatbots and Q&A systems

This is probably the most popular reason people turn to LangChain. Businesses want to build custom LangChain chatbots that can answer questions based on their own private knowledge, like an internal wiki or a public help center. It’s the classic use case for RAG.

- The challenge: The tough part? Making sure the chatbot is actually right and doesn't just make stuff up (a failure mode known as hallucination). When it does get an answer wrong, digging through the agent's logic to figure out why it messed up can be a massive debugging headache.
- The platform solution: This is where a ready-made platform really helps. eesel AI's AI Chatbot and Internal Chat are built for this exact purpose. Even better, you can use a powerful simulation mode to test the AI on thousands of your past customer questions. This lets you see how accurate it will be and find gaps in your knowledge before it ever talks to a real user.
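Whichever route you take, the underlying idea of replaying known questions and checking the answers can be sketched as a tiny regression harness. Everything here is an assumption for illustration: the test cases are made up, `fake_chatbot` stands in for your real bot, and substring matching is a crude proxy for correctness.

```python
from typing import Callable, Dict, List

# Hypothetical past questions paired with a phrase the correct answer must contain.
TEST_CASES: List[Dict[str, str]] = [
    {"question": "How long do refunds take?", "must_contain": "5 business days"},
    {"question": "Do you ship to Canada?", "must_contain": "yes"},
]

def fake_chatbot(question: str) -> str:
    """Placeholder for your real chatbot call (an assumption for this sketch)."""
    canned = {
        "How long do refunds take?": "Refunds are processed within 5 business days.",
        "Do you ship to Canada?": "We currently ship only within the US.",
    }
    return canned.get(question, "I don't know.")

def evaluate(bot: Callable[[str], str], cases: List[Dict[str, str]]) -> Dict[str, object]:
    """Run every case through the bot and collect the questions it got wrong."""
    failures = [
        case["question"]
        for case in cases
        if case["must_contain"].lower() not in bot(case["question"]).lower()
    ]
    accuracy = 1 - len(failures) / len(cases) if cases else 0.0
    return {"accuracy": accuracy, "failures": failures}

print(evaluate(fake_chatbot, TEST_CASES))
```

The list of failed questions is the useful part: it points you at the specific documents or prompts that need attention before real users see the bot.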

Autonomous LangChain agents for automating tasks

This is where things get really interesting. You can use LangChain to create agents that handle multi-step tasks on their own. For example, you could build an agent that looks up a customer's order in Shopify, checks the shipping status with a carrier's API, and then drafts a personalized email update for them.

-

The challenge: Building, deploying, and keeping an eye on these autonomous agents is a huge job. LangChain eventually released a separate tool called LangSmith to help with debugging and monitoring, but it's yet another complex system for your developers to learn, manage, and pay for.

-

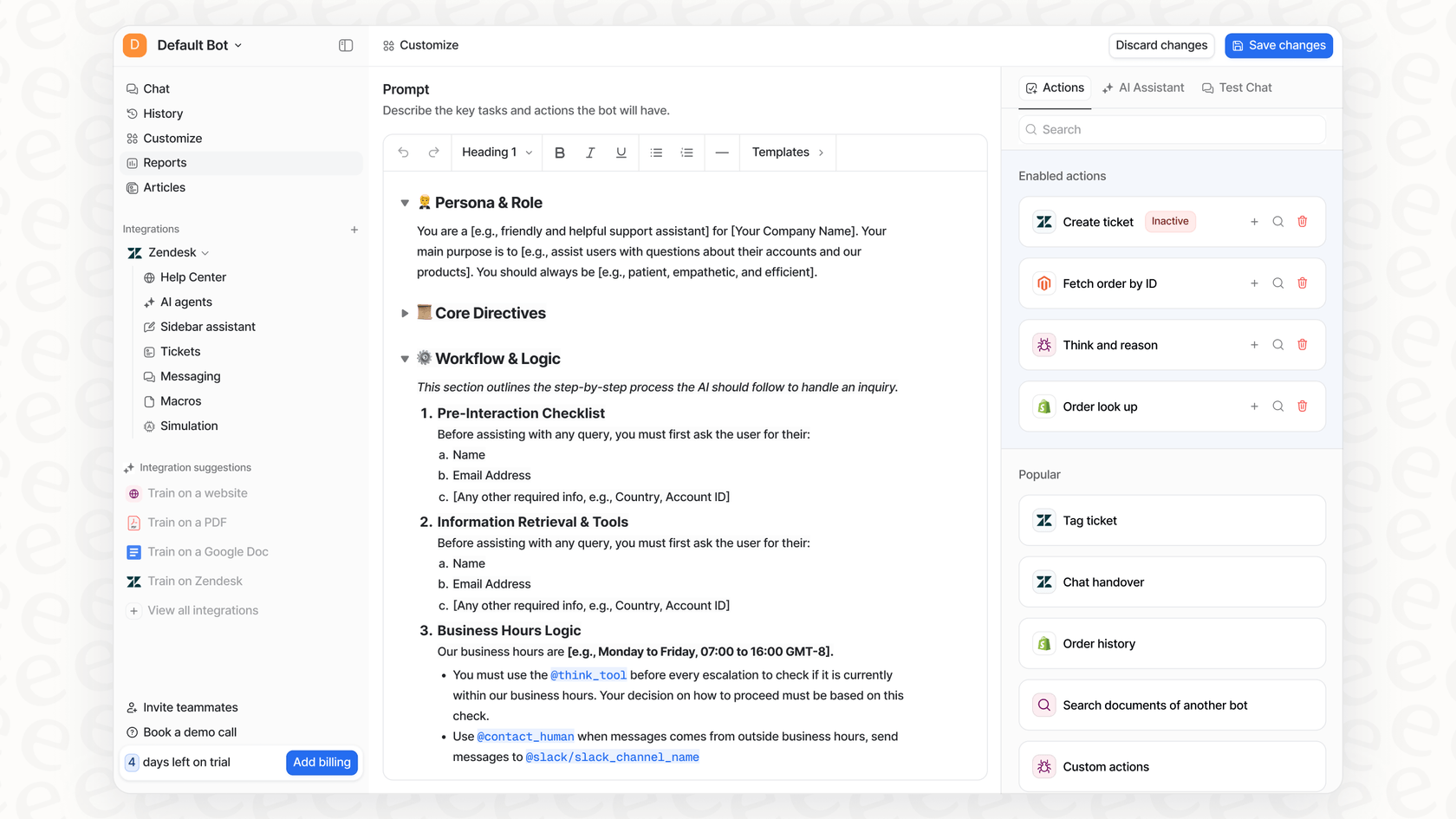

The platform solution: With eesel AI, you don't have to build these agents from scratch. The AI Agent can be set up with custom actions to look up order info or sort tickets in help desks like Zendesk or Freshdesk. You do it all through a simple interface, with reporting already built-in, so you have full control without all the engineering overhead.

LangChain for summarization and data extraction

Another common use is getting an LLM to make sense of huge walls of text. This could mean summarizing long documents, creating digests of meeting notes, or pulling key details out of customer support chats.

- The challenge: To get consistently good summaries that capture the right details, you have to get your instructions just right. This often means a lot of trial and error with your prompts, and sometimes even fine-tuning your own model, which adds another layer of cost and complexity.
- The platform solution: eesel AI automatically learns from your old support tickets to understand your brand's voice and common problems. Its AI Copilot then uses this context to help your agents draft high-quality, relevant replies. It can even generate draft help articles from resolved tickets, turning messy conversations into clean, reusable knowledge without any extra work.

LangChain pricing and getting started

Before you dive in, it’s a good idea to understand the real cost and effort that goes into a LangChain project for a business.

The cost of using LangChain

The LangChain framework itself is open-source and free, but that "free" comes with some asterisks. The total cost of a LangChain app really comes from a few different places:

- LLM API Costs: You have to pay the model provider (like OpenAI) for every single request your application makes. These costs can be unpredictable and can add up quickly as more people use your app.
- LangSmith: To properly debug, monitor, and deploy a professional-grade agent, you'll probably need LangSmith, LangChain's paid monitoring platform.

Here's a quick look at what LangSmith costs:

| Feature | Developer | Plus | Enterprise |
|---|---|---|---|
| Pricing | Free (1 seat) | $39 per seat/month | Custom |
| Included Traces | 5k base traces/mo | 10k base traces/mo | Custom |
| Deployment | N/A | 1 free Dev deployment | Custom |

Source: LangChain Pricing
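To see how these pieces add up, here is a back-of-the-envelope estimate in Python. The $39 seat price comes from the table above. Everything else is an assumption chosen for illustration, including the request volume, the token counts, and especially the per-token prices, which are placeholders rather than any provider's actual rates.

```python
from dataclasses import dataclass

@dataclass
class UsageAssumptions:
    requests_per_month: int
    input_tokens_per_request: int
    output_tokens_per_request: int
    # Placeholder prices in USD per 1,000 tokens; check your provider's current rates.
    input_price_per_1k: float
    output_price_per_1k: float

def monthly_llm_cost(u: UsageAssumptions) -> float:
    """Token cost per request multiplied by the number of requests."""
    per_request = (
        u.input_tokens_per_request / 1000 * u.input_price_per_1k
        + u.output_tokens_per_request / 1000 * u.output_price_per_1k
    )
    return per_request * u.requests_per_month

def monthly_langsmith_cost(seats: int, price_per_seat: float = 39.0) -> float:
    """Seat cost on the Plus tier from the table above (extra traces not included)."""
    return seats * price_per_seat

usage = UsageAssumptions(
    requests_per_month=10_000,
    input_tokens_per_request=1_500,
    output_tokens_per_request=500,
    input_price_per_1k=0.01,
    output_price_per_1k=0.03,
)
total = monthly_llm_cost(usage) + monthly_langsmith_cost(seats=3)
print(f"Estimated monthly cost: ${total:,.2f}")
```

Doubling `requests_per_month` doubles the LLM portion of the bill while the seat cost stays flat, which is why usage growth is the number to watch.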

This kind of variable, usage-based pricing makes it really hard to budget. In contrast, eesel AI offers clear, predictable pricing based on a set number of AI interactions per month. You get all the features (agents, copilots, and reporting) included in one simple plan, so you don't have to worry about a surprise bill from your LLM provider.

Getting started and the LangChain learning curve

Let's be real about who LangChain is for. As you'll see in popular Udemy courses on the topic, LangChain is built for software developers and AI engineers who are already comfortable with Python or JavaScript.

Building a production-ready app isn't a weekend project. It means setting up development environments, securely handling API keys, writing a lot of code, debugging tricky interactions, and figuring out how to deploy and scale the whole thing. It’s a full-on software project.
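Even the "securely handling API keys" item involves real code. A common pattern, sketched below, is to read the key from an environment variable instead of hard-coding it, and to mask it before it reaches any log. The helper names `load_api_key` and `mask` are hypothetical, and the demo uses a dummy variable and a dummy value.

```python
import os

def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the key from an environment variable instead of hard-coding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running the app.")
    return key

def mask(key: str) -> str:
    """Show only the last four characters so logs never contain the full key."""
    return "*" * max(len(key) - 4, 0) + key[-4:]

# Demo with a dummy variable and value so the example runs anywhere.
os.environ.setdefault("DEMO_API_KEY", "sk-demo-1234")
print(mask(load_api_key("DEMO_API_KEY")))
```

Failing fast with a clear message when the variable is missing tends to save time compared with a cryptic authentication error from deep inside a request.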

For businesses that need a powerful AI solution now, the steep learning curve and long development time of LangChain can be a major hurdle. eesel AI is designed to be completely self-serve. You can connect your help desk, train the AI on your knowledge, and go live in minutes, not months, often without needing a developer at all.

The bottom line on LangChain: Building vs. buying

LangChain is an undeniably powerful and flexible framework for developers who need to build highly custom AI applications from the ground up. If your team has the right technical skills and resources, it gives you complete control.

But all that control comes at a price. It puts you in a classic "build vs. buy" situation. Do you want to pour significant engineering time, money, and ongoing maintenance into building a custom solution with LangChain? Or would you rather use a ready-made platform that can start delivering value from day one?

For most customer service, IT, and internal support teams, the goal isn't to build AI; it's to solve problems faster. eesel AI provides a platform built specifically for that. You get all the key benefits of AI agents, like automated ticket resolution, intelligent reply suggestions, and instant answers to internal questions, without the cost, complexity, and risk of a ground-up development project. It’s all the power of a custom-built agent with the simplicity of a self-serve tool.

Ready to see how easy it can be to get an AI agent working for your team? Try eesel AI for free and set up your first one in under five minutes.

Frequently asked questions

What is LangChain, and what does it do?

LangChain acts as an open-source framework and a toolbox that connects large language models (LLMs) to other data sources and software. It provides pre-built components that streamline common tasks, enabling developers to build complex systems that are aware of specific data and can take actions. Essentially, it helps "chain" different components into a complete AI workflow.

What are the main components of LangChain?

Key components include models (for connecting to LLMs), prompt templates (for engineering instructions), and output parsers (for structuring LLM responses). For integrating custom knowledge, LangChain offers document loaders, text splitters, and vector stores with embeddings. These pieces are then combined into chains or agents to perform specific tasks.

What can you build with LangChain?

LangChain is versatile enough for various AI applications. Common use cases include custom chatbots and Q&A systems powered by your own data, autonomous agents for automating multi-step tasks like order processing, and tools for summarization and data extraction from long documents or customer interactions.

How does LangChain work with your own data?

LangChain provides tools to implement Retrieval Augmented Generation (RAG). This involves document loaders to ingest data, text splitters to break it into chunks, and embeddings that turn those chunks into numerical representations stored in a searchable vector store. This process allows the LLM to retrieve and utilize specific, relevant information from your knowledge base.

Is LangChain free to use?

While the LangChain framework is open-source and free, you will incur costs for LLM API usage from providers like OpenAI, which can be unpredictable. Additionally, for professional-grade debugging and monitoring, you would likely need to pay for LangSmith, LangChain's dedicated monitoring platform. These expenses contribute to the overall project cost.

How hard is it to learn LangChain?

The learning curve for LangChain is quite steep, as it's designed for software developers and AI engineers already proficient in Python or JavaScript. Building a production-ready application involves significant developer time for setup, coding, debugging, deployment, and scaling, making it a full-on software project rather than a quick solution.

What's the difference between a chain and an agent in LangChain?

A LangChain chain links different components into a predefined, fixed workflow, executing steps in a set order. In contrast, an agent uses the LLM as a "brain" to dynamically decide what actions to take next from a provided toolbox of capabilities, allowing it to adapt and solve problems more autonomously rather than following a rigid recipe.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.