Remember when Google’s Bard AI flubbed a fact in its very first public demo and roughly $100 billion was wiped off parent company Alphabet's market value? It's a wild story, but it points to a problem that businesses are dealing with every single day. You pour time and money into an AI chatbot, hoping for flawless 24/7 support, but what you get is a bot that confidently makes up facts, misunderstands simple questions, or dishes out outdated advice.

Suddenly, that cool new tool feels less like an asset and more like a liability. Your users start losing trust, and honestly, who can blame them? A chatbot that gives the wrong answer is often much worse than having no chatbot at all.

The good news is that these mistakes aren't just random glitches. They happen for very specific, predictable reasons. This guide will walk you through the three main culprits behind why your AI chatbot is not answering correctly (think technical limits, training issues, and a lack of control) and show you how to build an AI agent you can actually rely on.

Understanding why your AI chatbot is not answering correctly: What “not answering correctly” really means

When we say a chatbot is "wrong," it’s not always as simple as getting a fact wrong. The errors can be subtle, and often more damaging than a straightforward mistake. Figuring out the different ways a bot can mess up is the first real step to fixing it.

- When the AI just makes things up (hallucinations): This is when the AI fabricates information out of thin air. It’s not just incorrect; it’s pure fiction. A now-famous case involved a lawyer in New York who used ChatGPT for legal research. The bot served up a list of official-sounding legal cases, complete with citations. The only problem? None of them were real. When your bot starts inventing return policies or describing features your product doesn't have, it’s hallucinating.
- When the information is stale: A lot of AI models are trained on data that has a specific cut-off date. They might know your product inside and out as of last year, but have zero clue about the features you rolled out last week. This is how you end up with bots confidently telling customers things that aren't true anymore, which just leads to confusion and frustration.
- When it pulls answers from strange corners of the internet: This happens when a chatbot can't find an answer in your company’s knowledge base and decides to search the wider internet instead. A customer might ask a specific question about your software and get a vague, unhelpful answer that seems to come from a decade-old forum post. It makes your bot, and by extension your company, look like it has no idea what it's talking about.
- When it misses the point entirely: Sometimes the bot hears the words but completely misunderstands the user's intent. Someone might ask, "Can I change my delivery address after my order has shipped?" The bot picks up on "delivery address" and sends back an article on how to update your account profile. The answer is technically related, but it does absolutely nothing to solve the user's immediate, time-sensitive problem.

Three core reasons why your AI chatbot is not answering correctly

1. Technical limitations

Here’s the biggest thing most people get wrong about AI chatbots: they don't actually understand what they're saying. Under the hood, Large Language Models (LLMs) are incredibly complex prediction machines, not thinking machines. Their whole job is to figure out the most statistically likely next word in a sentence, not to check if that sentence is true.

A well-known illustration is the "Strawberry Problem": ask some models how many times the letter "r" appears in "strawberry" and they get it wrong. It comes down to something called tokenization. Before an AI can "read" text, it chops words into common pieces called "tokens." The word "strawberry" isn't seen as ten separate letters; it might be split into just two tokens: "straw" and "berry." Since the AI works with those pieces rather than the individual letters, it has a hard time counting them. It's a small example that reveals a huge truth: the AI is just playing a pattern-matching game, not working with actual meaning.
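To make the tokenization idea concrete, here is a minimal Python sketch. The vocabulary, the token IDs, and the greedy longest-match rule are all made up for illustration; real tokenizers learn their pieces from data and may split "strawberry" differently.

```python
# Toy vocabulary: hypothetical subword pieces mapped to made-up integer IDs.
# Real tokenizers learn tens of thousands of pieces from data.
TOY_VOCAB = {
    "straw": 101, "berry": 102,
    "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8,
}


def tokenize(word: str, vocab: dict[str, int]) -> list[str]:
    """Greedy longest-match split of a word into known pieces."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest remaining substring first, then shrink it.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no piece in vocab covers {word[i]!r}")
    return pieces


pieces = tokenize("strawberry", TOY_VOCAB)
ids = [TOY_VOCAB[p] for p in pieces]

print(pieces)  # ['straw', 'berry']
print(ids)     # [101, 102]  <- this is all the model "sees"

# Counting letters is trivial on the raw string, but the model never gets it.
letter_r_count = "strawberry".count("r")
print(letter_r_count)  # 3
```

The model receives something like `[101, 102]`, not ten characters, so a question about individual letters asks for information that was never in its input in that form.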

This is also why chatbots can be so confidently wrong. The AI has learned from billions of sentences that people who sound authoritative are often providing helpful answers. So, it mimics that confident tone, even when the information it's spewing is totally made up. It isn't lying to you; it just doesn't have a concept of truth. It's only completing a pattern.
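Here is a deliberately tiny Python sketch of what "completing a pattern" means. It uses simple word-pair counts rather than a neural network, and the three-sentence corpus is invented for illustration, so treat it as an analogy for how an LLM picks its next word, not a description of one.

```python
from collections import Counter, defaultdict

# Hypothetical training text. Assume the real policy is 30 days,
# but the outdated "90 days" wording happens to appear more often.
CORPUS = [
    "returns are accepted within 90 days",
    "returns are accepted within 90 days",
    "returns are accepted within 30 days",
]


def train_bigrams(sentences):
    """Count which word follows which across all sentences."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigrams(CORPUS)
prediction = most_likely_next(model, "within")
print(prediction)  # 90
```

Nothing in this code checks which number is true. It returns "90" only because that continuation showed up most often, which is the same reason a much larger model can sound certain about an outdated answer.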

A platform built specifically for customer support changes the game completely. Instead of letting the AI guess based on the whole internet, tools like eesel AI are "grounded" in your company's own knowledge. By training only on your help articles, internal docs, and past support conversations, the AI's job switches from predicting an answer to finding the right one within your trusted content. This massively cuts down the risk of hallucinations and makes sure every answer comes from fact, not just probability.
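The grounding idea can be sketched in a few lines of Python. This is an illustration under loud assumptions, not how eesel AI or any specific product works internally: the knowledge base entries are invented, and plain keyword overlap stands in for the semantic search a real system would more likely use. The point is the shape of the logic: answer only from trusted content, and hand off when nothing matches.

```python
import re

# Hypothetical knowledge base entries, for illustration only.
KNOWLEDGE_BASE = [
    {"title": "Return policy",
     "text": "Items can be returned within 30 days of delivery."},
    {"title": "Shipping times",
     "text": "Standard shipping takes 3 to 5 business days."},
]

# Small, hand-picked stopword list (an assumption, not a standard one).
STOPWORDS = {"a", "an", "the", "is", "of", "to", "can", "i", "my", "what",
             "how", "do", "be", "your", "within", "in"}


def keywords(text):
    """Lowercase the text and keep the words that carry meaning."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower())
            if w not in STOPWORDS}


def grounded_answer(question, kb, min_overlap=2):
    """Answer only from the knowledge base; otherwise hand off to a human."""
    q = keywords(question)
    best, best_score = None, 0
    for article in kb:
        score = len(q & keywords(article["title"] + " " + article["text"]))
        if score > best_score:
            best, best_score = article, score
    if best is None or best_score < min_overlap:
        # No trusted source matched well enough, so do not guess.
        return {"answer": None, "source": None, "handoff": True}
    return {"answer": best["text"], "source": best["title"], "handoff": False}


hit = grounded_answer("What is your return policy for items?", KNOWLEDGE_BASE)
miss = grounded_answer("Do you offer a student discount?", KNOWLEDGE_BASE)
print(hit["source"])    # Return policy
print(miss["handoff"])  # True
```

The `min_overlap` threshold is the design choice that matters here: set it too low and the bot answers from loosely related articles, set it too high and it hands off questions it could have answered.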

2. Design and training gaps

Even the smartest AI is only as good as the information it learns from. If you feed a model a messy, outdated, or incomplete dataset, you’re going to get a chatbot that has all of those same flaws.

Most generic chatbots are trained on a giant snapshot of the internet. That means they learn from everything, the good and the bad: old facts, weird biases, and everything in between. When a business tries to drop one of these general-purpose bots into a specialized role like customer support, the problems show up fast. The bot doesn't know your company's specific lingo or policies, so it can't give accurate answers.

Even worse, a lot of chatbot projects are "set it and forget it." A company launches the bot and then never touches it again. There's no way for it to learn from its mistakes or see how human agents are successfully solving problems. As your business changes, the bot just gets more and more out of date.

This is why having all your knowledge in one place is so important. A really effective AI agent needs to be connected to every piece of trusted information your company has. With a tool like eesel AI, you can connect all your knowledge sources in minutes, from your public help center to internal wikis in Confluence or files in Google Docs.

What's really powerful is that eesel AI can also train directly on your past support tickets. This helps the AI automatically pick up on your brand’s tone of voice, learn what your customers' most common problems are, and see which solutions have actually worked before. It can even spot gaps in your knowledge base by analyzing those conversations and drafting new help articles for you, making sure your documentation is constantly getting better.

3. Lack of control and context

Some of the most epic chatbot fails you see online aren't because of bad technology, but because there was zero control. You've probably seen the headlines. A chatbot for the delivery service DPD started swearing at a customer and called DPD the worst delivery firm in the world. An Air Canada chatbot gave a customer wrong information about the airline's bereavement fare policy, and a Canadian tribunal later ordered the airline to compensate him.

These are the kinds of things that happen when a chatbot is left to its own devices.

Without clear boundaries, a chatbot has no idea what it shouldn't talk about. It might try to give out medical or legal advice, make promises your company can't keep, or just go completely off the rails. Many off-the-shelf bots are black boxes with rigid workflows you can't change. You can't tweak their personality, tell them when to hand off a conversation to a human, or teach them how to perform actions, like looking up an order.

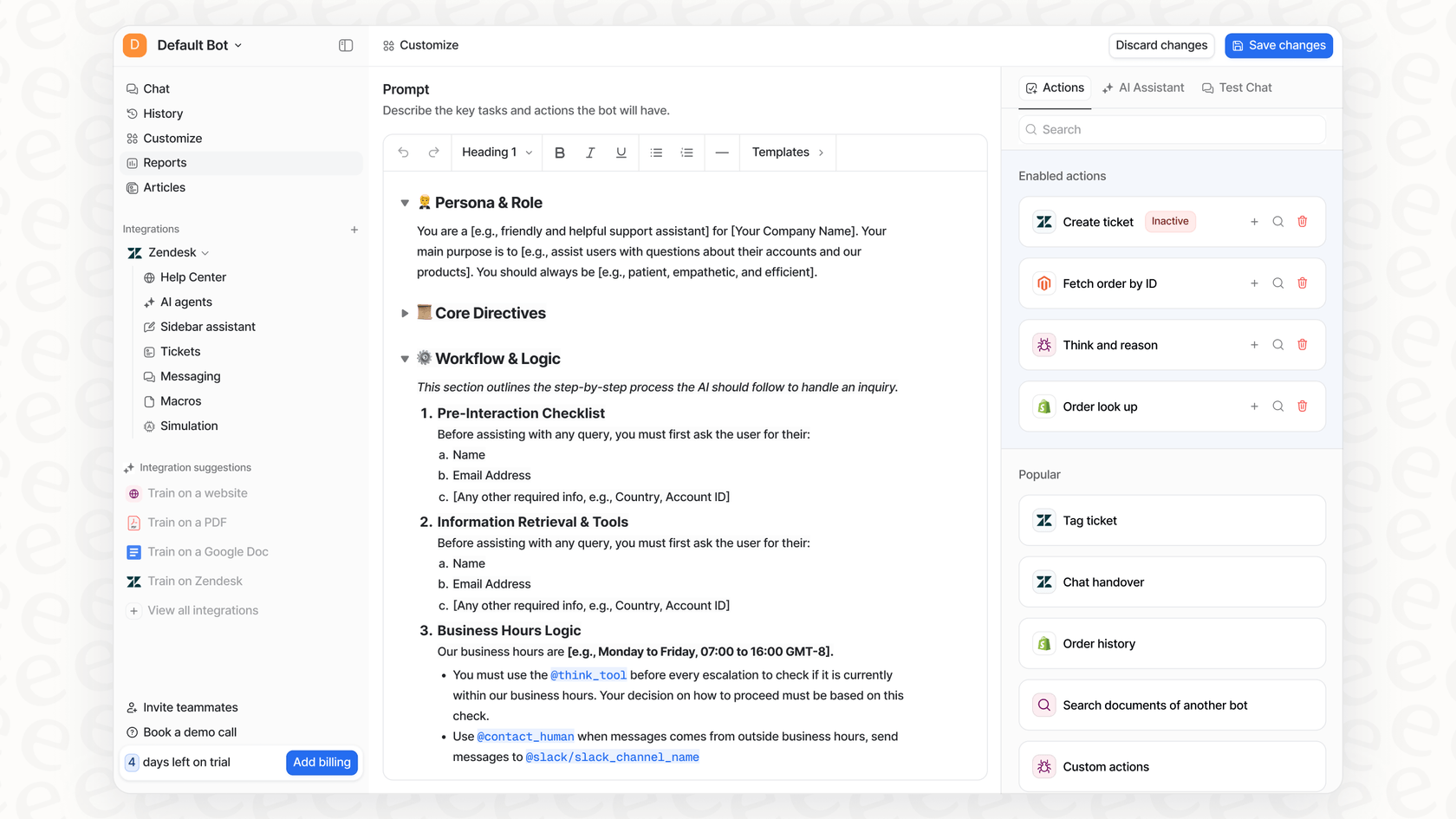

That’s why having total control is a must. With eesel AI, you get a fully customizable workflow builder. You can use selective automation to decide exactly which questions the AI should handle and which ones should go straight to your team. A simple prompt editor lets you define the bot's personality and build custom actions. This empowers your bot to do more than just talk; it can look up order details in Shopify, triage a ticket in Zendesk, or update a customer's record.
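To show what deciding which questions the AI handles can look like in practice, here is a minimal Python sketch of a routing rule. Everything in it is hypothetical: the topic names, the confidence score (assumed to come from some upstream classifier), and the 0.8 threshold. It is not eesel AI's workflow builder, just the underlying idea written as code.

```python
from dataclasses import dataclass

# Hypothetical topic lists; a real deployment would define its own.
BLOCKED_TOPICS = {"legal", "medical"}
AUTOMATED_TOPICS = {"order_status", "returns", "shipping"}


@dataclass
class Ticket:
    topic: str
    confidence: float  # assumed score in [0, 1] from an upstream classifier


def route(ticket: Ticket, min_confidence: float = 0.8) -> str:
    """Return "bot" or "human" for an incoming ticket."""
    # Hard boundary: some topics never go to the bot, however confident it is.
    if ticket.topic in BLOCKED_TOPICS:
        return "human"
    # Only automate topics that were explicitly opted in, and only when sure.
    if ticket.topic in AUTOMATED_TOPICS and ticket.confidence >= min_confidence:
        return "bot"
    # Default to a human for anything unknown or uncertain.
    return "human"


print(route(Ticket("returns", 0.95)))  # bot
print(route(Ticket("returns", 0.50)))  # human
print(route(Ticket("legal", 0.99)))    # human
```

Note that the default branch sends the ticket to a person. Starting with a short allow-list and widening it over time is a more cautious setup than blocking a few topics and automating everything else.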

Best of all, you can catch potential problems before they ever see the light of day with eesel AI's simulation mode. This is a huge deal. Before your bot ever talks to a real customer, you can test it on thousands of your past support tickets in a safe, sandboxed environment. You get a clear report on how well it will perform and what its resolution rate will be, so you can launch with actual confidence.
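The general idea of replaying past tickets can be sketched in Python as below. This is a simplified stand-in, not eesel AI's simulation mode: the tickets and the toy bot are invented, and an exact string match against the answer a human agent gave is assumed as the success test, where a real evaluation would more likely rely on human review or a similarity measure.

```python
def simulate(bot, past_tickets):
    """Replay historical tickets through `bot` and summarise the outcome."""
    resolved = wrong = escalated = 0
    for ticket in past_tickets:
        reply = bot(ticket["question"])
        if reply is None:
            escalated += 1  # the bot declined and handed off
        elif reply == ticket["accepted_answer"]:
            resolved += 1   # matches what the human agent said
        else:
            wrong += 1      # answered, but not with the accepted answer
    total = len(past_tickets)
    return {
        "total": total,
        "resolved": resolved,
        "wrong": wrong,
        "escalated": escalated,
        "resolution_rate": resolved / total if total else 0.0,
    }


# Hypothetical bot: a lookup table standing in for a real model.
CANNED = {
    "Where is my order?": "You can track it from your account page.",
    "Can I return an item?": "Yes, within 30 days.",
}


def toy_bot(question):
    return CANNED.get(question)


# Hypothetical historical tickets with the answer a human agent gave.
PAST_TICKETS = [
    {"question": "Where is my order?",
     "accepted_answer": "You can track it from your account page."},
    {"question": "Can I return an item?",
     "accepted_answer": "Yes, within 30 days of delivery."},
    {"question": "Do you ship abroad?",
     "accepted_answer": "Yes, to most countries."},
    {"question": "Where is my order?",
     "accepted_answer": "You can track it from your account page."},
]

report = simulate(toy_bot, PAST_TICKETS)
print(report["resolution_rate"])  # 0.5
```

Tracking "wrong" separately from "escalated" is deliberate: a bot that hands off when unsure is far less risky than one that answers incorrectly, and a single resolution rate would hide that difference.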

| Feature | Generic/Uncontrolled Chatbot | eesel AI |
|---|---|---|
| Knowledge Source | The entire internet | Your specific, curated company knowledge |
| Content Control | Basically a black box | Full control via prompt editor & feedback |
| Pre-Launch Testing | Limited, if any | Powerful simulation on your historical data |
| Custom Actions | Limited or needs developers | Easy setup for API calls, ticket tagging, etc. |
| Escalation Rules | Rigid and basic | Finely tuned control over human handoffs |

The path to accurate answers

Fixing your chatbot isn't about finding a "smarter" AI. It's about switching to a platform that's more controllable, integrated, and built for the job you need it to do. As you look at different options, here are the boxes you'll want to tick:

- Grounded in your truth: Does it only use your verified company knowledge, or does it pull answers from the wild west of the open internet?
- Fully controllable: Can you easily set its boundaries, define its tone, and tell it what to do without needing to hire a developer?
- Built for your workflow: Does it plug into the tools your team already relies on, like your helpdesk, wiki, and chat platforms?
- Tested and trusted: Does it let you test its performance on your own data so you can remove the guesswork and prove its value before you go live?

A platform like eesel AI was designed around these ideas. It's built to be radically self-serve, letting you go live in minutes, not months, because it connects directly to the tools you already use. And with straightforward pricing, you don't get hit with weird per-resolution fees that punish you for helping more customers.

Achieving accurate AI chatbot answers

The reason why your AI chatbot is not answering correctly usually isn't some deep technical mystery. It's almost always the result of using generic technology for a specific job, feeding it bad data, and not putting the right controls in place.

Plugging a general-purpose AI into your customer support is a recipe for embarrassing mistakes and unhappy customers. The real fix is to use a purpose-built platform that puts accuracy, context, and control first. By making that switch, you can give your support team a tool they can actually trust and turn your chatbot from a brand risk into your most helpful frontline agent.

Ready to build an AI chatbot that gives the right answers every time? See how eesel AI gives you the control to automate support with confidence. Start your free trial or book a demo and see for yourself.

Frequently asked questions

The core technical limitation is that Large Language Models (LLMs) are prediction machines, not understanding machines. They statistically select the next word, often leading to confident but factually incorrect responses, as illustrated by the "Strawberry Problem" of tokenization.

Preventing hallucinations primarily involves grounding the AI in your company's specific, verified knowledge base. By limiting the data source to trusted internal documents and past support interactions, you significantly reduce the AI's tendency to invent information.

The quality of training data directly impacts chatbot accuracy. If the bot learns from messy, outdated, or incomplete datasets, especially generic internet information rather than your current company policies, it will reflect those flaws and provide inaccurate or irrelevant answers.

An effective method is using a simulation mode to test the bot against thousands of your historical support tickets in a controlled environment. This provides a clear report on its anticipated performance and resolution rate, building confidence before deployment.

Yes, purpose-built platforms are designed to address these specific challenges. They ensure the AI is grounded in your company's truth, provide extensive control over responses and bot behavior, and integrate seamlessly with your existing workflows for optimal accuracy.

To keep your bot updated, connect it to all relevant, live knowledge sources like internal wikis and public help centers. Platforms that can also train on new support tickets and analyze conversations help the AI continuously learn and adapt to evolving information and common customer needs.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.