OpenEvidence AI has been getting a lot of buzz as a powerful tool built for the demanding world of healthcare. It promises to give clinicians instant, evidence-backed answers, saving them from digging through millions of medical studies. But does it really deliver in a real-world setting?

In this review, we'll take a close look at OpenEvidence AI's features, performance, and practical limits, all from the perspective of someone who works with AI implementation. We’ll cover where it shines and, just as important, where a different kind of AI solution might be a better fit for your business.

What is OpenEvidence AI?

At its core, OpenEvidence AI is an AI platform built to help doctors, clinicians, and medical students make better decisions. Think of it as a super-specialized search engine that doesn't just find information but also synthesizes it. Its main job is to pull insights from millions of peer-reviewed studies, journals, and guidelines to give clear, synthesized answers to complex medical questions.

The platform came out of the Mayo Clinic Platform Accelerate program, which gives it a lot of credibility right out of the gate. Instead of just giving you a list of links, it aims to provide fast, evidence-based recommendations you can use on the spot, drawing from trusted sources like PubMed, the New England Journal of Medicine (NEJM), and the Journal of the American Medical Association (JAMA). It's designed to be a reliable "peripheral brain" for doctors trying to keep up with the constant flood of new medical information.

Core features and capabilities

OpenEvidence isn't your average chatbot; it’s a focused tool with a very specific job to do. Let’s break down what it can actually do.

Evidence-based clinical decision support

The real standout feature of OpenEvidence is its promise to back up every answer with citations. When it suggests something, it links directly to the original studies, so clinicians can check the evidence for themselves. This is a massive plus compared to general large language models (LLMs) that can "hallucinate" or make up sources, a risk that’s completely unacceptable in medicine. This focus is central to how OpenEvidence AI is transforming clinical decision-making.

This "scoped knowledge" approach is what makes it reliable for such a high-stakes job. It’s the same principle any business should follow when using AI. A customer support team, for instance, can't just rely on generic answers from across the web. They need an AI trained exclusively on their own internal knowledge. That’s what tools like eesel AI are for, connecting directly to a company's own help docs, past tickets, and internal wikis in platforms like Confluence or Google Docs to create a secure, single source of truth.

AI scribe for clinical documentation

It’s not just about answering questions. OpenEvidence also has an AI Scribe feature to help with the mountain of clinical paperwork. It can analyze transcripts from patient visits and help draft notes. Users have been particularly impressed with its ability to understand complex medical acronyms like FOLFOX or CHOP (chemotherapy regimens). This shows it has a deep understanding of its specific field, something generic AI scribes often get wrong. It's built to get the lingo right, which takes some of the pain out of documentation.

Educational tools and USMLE preparation

OpenEvidence has also carved out a niche as a learning tool. For medical students, it's a quick way to look up evidence and get a handle on complicated subjects. The company even has a free "explanation model" to help students prep for the United States Medical Licensing Examination (USMLE). This tool doesn't just spit out the right answers to practice questions; it explains the reasoning behind them, citing top-tier medical sources as it goes. It's a smart way to give more people access to high-quality study materials.

User experience and workflow integration

A tool can have all the best features in the world, but if it's a pain to use, it’ll just collect dust. Here’s what users are saying about how OpenEvidence works in a day-to-day setting.

Performance and speed

One of the most common complaints from clinicians is that OpenEvidence can be slow. According to one detailed review, the lag is particularly bad when using its more advanced features. While the note-writing speed is okay, asking a question and getting an answer can take longer than you’d want in a busy clinic.

In a high-pressure environment, speed isn't just nice to have; it's everything. The same goes for customer support. When a customer is waiting for an answer, every second feels like an hour. It’s why modern AI platforms are built to be fast. eesel AI, for example, is designed to provide instant reply drafts and one-click actions right inside help desks like Zendesk or Intercom, helping agents resolve issues faster rather than adding another delay.

Customization and control limitations

Another point of frustration for users is the tool's inflexibility. Some have described the AI Scribe's writing style as "robotic" and pointed out that you can't easily switch between short and long note formats. The AI also seems to have trouble following custom templates, often breaking information into separate bullet points instead of keeping it in one neat section.

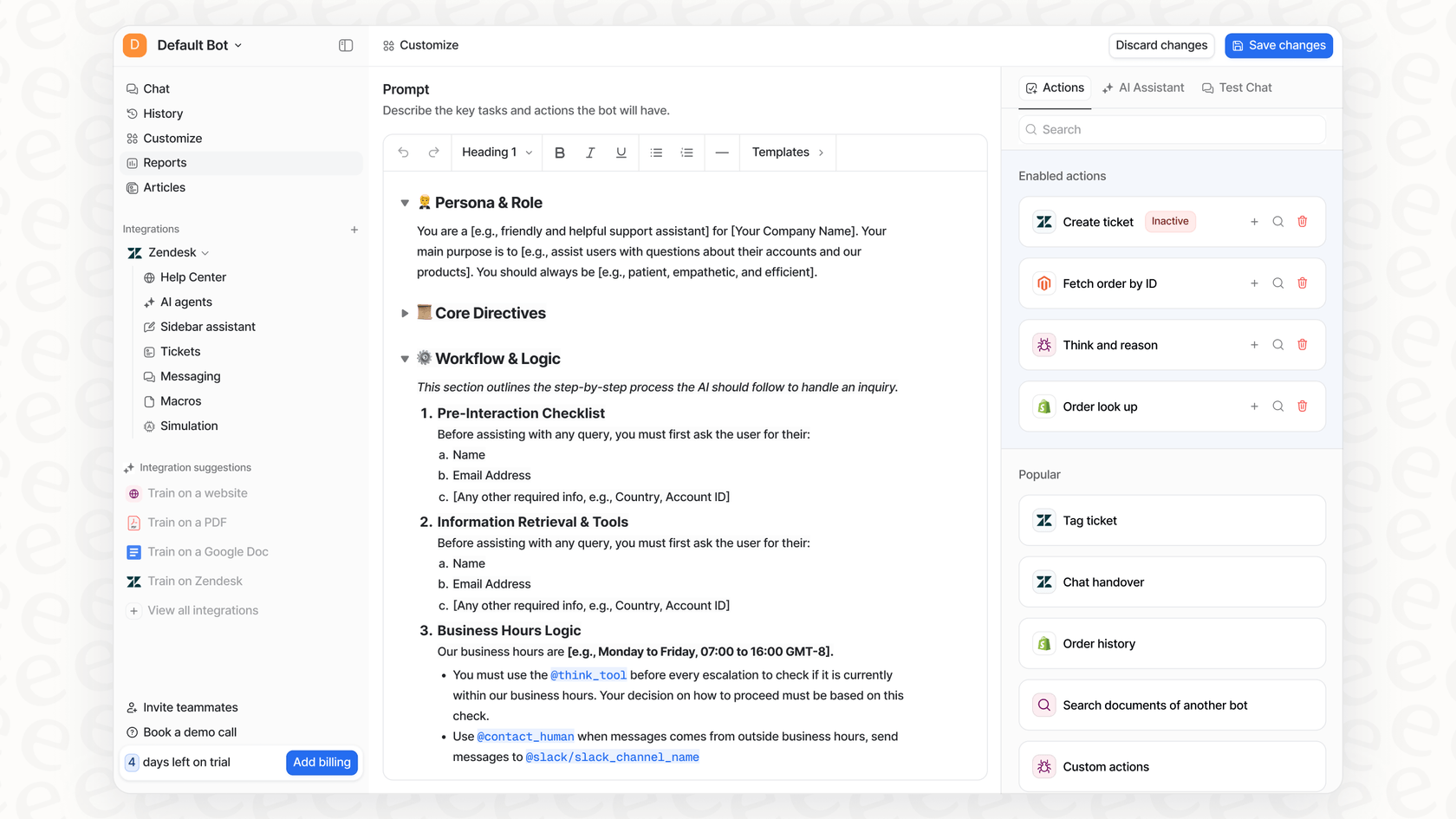

This is a classic problem with "one-size-fits-all" AI systems. For most businesses, that's a dealbreaker. Brand voice and consistency are everything. That's why platforms like eesel AI give you a fully customizable prompt editor. This lets support teams have fine-grained control over the AI's tone of voice, personality, and how it structures answers, making sure every interaction is perfectly on-brand.

Key considerations: Accuracy, liability, and testing

Before you roll out any serious AI tool, you have to talk about trust and safety. Here are a few big issues that come up with OpenEvidence and tools like it.

Accuracy, misinformation, and physician oversight

Even with its evidence-based design, trust is a huge hurdle. A Sermo poll of physicians found that 44% named accuracy and the risk of bad information as their biggest worry with AI systems like OpenEvidence. While the tool is mostly accurate, clinicians still feel they need to double-check everything it produces.

As one doctor bluntly put it, "I don’t trust it because when it’s wrong, it’s annoyingly confident in its wrong answer." An AI that's overconfident can be more dangerous than one that's unsure, as it might convince someone to act on bad information. This is exactly why a human expert always needs to be in the loop.

The importance of testing before deployment

In all the reviews and studies, there's no mention of a proper simulation mode for OpenEvidence. It seems clinicians are left to test the tool live in their daily workflow, which comes with obvious risks. When the stakes are this high, you really can't afford to "test in production."

For any business using AI in a critical or customer-facing role, a safe testing environment is a must. This is where having a sandbox becomes so important. eesel AI, for instance, tackles this with a simulation mode that lets teams test their entire AI setup on thousands of their own historical support tickets. This gives you a clear forecast of how well it will perform, shows you where your knowledge base has gaps, and lets you tweak the AI's behavior before it ever talks to a customer. It's all about rolling it out with confidence.
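To make the idea concrete, here is a minimal sketch of what replaying historical tickets through an AI can look like. It is not eesel AI's actual implementation or API. The ticket data, the `toy_answer` function, and the `simulate` helper are all hypothetical names invented for illustration, and a real setup would call a real model instead of a keyword lookup.

```python
from collections import Counter

# Hypothetical knowledge base: keyword -> canned answer (stand-in for real help docs).
KNOWLEDGE_BASE = {
    "refund": "Refunds are issued within 5 business days.",
    "password": "Use the reset link on the login page.",
}

# Hypothetical historical tickets, each tagged with a topic.
HISTORICAL_TICKETS = [
    {"topic": "billing", "question": "How do I get a refund?"},
    {"topic": "account", "question": "I forgot my password"},
    {"topic": "shipping", "question": "Where is my order?"},
    {"topic": "shipping", "question": "Can I change my delivery address?"},
]


def toy_answer(question):
    """Stand-in for the AI: answer only if a known keyword appears, else return None."""
    lowered = question.lower()
    for keyword, answer in KNOWLEDGE_BASE.items():
        if keyword in lowered:
            return answer
    return None


def simulate(tickets, answer_fn):
    """Replay past tickets through answer_fn offline; nothing is sent to a customer."""
    answered = 0
    gaps = Counter()  # topics where the AI had no answer
    for ticket in tickets:
        if answer_fn(ticket["question"]) is None:
            gaps[ticket["topic"]] += 1
        else:
            answered += 1
    coverage = answered / len(tickets) if tickets else 0.0
    return {"coverage": coverage, "gaps": dict(gaps)}


report = simulate(HISTORICAL_TICKETS, toy_answer)
print(report)  # {'coverage': 0.5, 'gaps': {'shipping': 2}}
```

In this toy run, the report shows the AI could have handled half of the past tickets, and that shipping questions are the gap to fill in the knowledge base before going live.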

Pricing

So, what's the price tag? Right now, OpenEvidence is free for verified U.S. clinicians and medical students with a National Provider Identifier (NPI) number. This has been a huge factor in its quick adoption. Users without an NPI, like researchers, have said they're limited to just a couple of searches per day.

The business model seems to focus on partnerships with medical publishers like Elsevier and journals like NEJM, not on charging individual users. While that's great for their target audience, it’s not how most businesses operate. Companies need to know what they're paying for to manage budgets and plan for growth. Tools like eesel AI offer straightforward plans based on usage, so you don't get hit with surprise fees for being successful.

The verdict: A powerful tool for a specialized field

So what's the bottom line? OpenEvidence is an impressive tool that does a great job at a very specific task. It’s a fantastic example of how AI can be tailored to one industry to solve a real problem.

The good parts are obvious: it uses trusted sources, it's simple to use, and it's free for doctors and students. But the downsides (it's slow, not very customizable, and doesn't seem to have a safe testing mode) make it a tough fit for fast-paced business environments like customer service, IT, or internal support.

A more flexible AI alternative for your support team

While OpenEvidence is making waves in healthcare, business teams in other fields need something built for flexibility, control, and easy integration. eesel AI is designed for teams who need an AI that learns from their knowledge and adapts to their workflows.

It's built to address the limitations we've talked about. You can get it set up yourself in minutes without waiting on a sales team. You get full control over the AI's personality and what it automates. And you can test everything on your past support tickets first, so you can launch without any guesswork. It also unifies your knowledge by seamlessly connecting to your help desk, internal wikis, chat tools like Slack, and more.

Final thoughts

OpenEvidence AI is a great example of what happens when AI gets focused. It delivers real value to clinicians and is pushing medical technology forward.

But for most businesses, the future of AI isn't about finding a single, rigid tool. It's about having a controllable, customizable, and safely integrated assistant that works right inside your existing systems. It’s about building an AI that truly gets your business from the inside out.

Ready to build an AI assistant that understands your business? Get started with eesel AI for free or book a demo to see it in action.

Frequently asked questions

What is OpenEvidence AI designed to do?

OpenEvidence AI is primarily designed to provide evidence-backed clinical decision support for medical professionals. It synthesizes insights from millions of peer-reviewed studies to offer clear, reliable answers to complex medical questions.

Who is OpenEvidence AI built for?

The tool is specifically built for doctors, clinicians, and medical students. It aims to help them make better decisions and keep up with the constant influx of new medical information.

What are the key features of OpenEvidence AI?

Key features include evidence-based clinical decision support with direct citations, an AI Scribe for clinical documentation, and educational tools for USMLE preparation. Its specialized medical understanding is a significant differentiator.

What are the main limitations of OpenEvidence AI?

Users often report that OpenEvidence AI can be slow, especially with advanced features, which is a concern in fast-paced clinical environments. Additionally, the tool offers limited customization options, with some users finding the AI Scribe's style robotic.

How accurate is OpenEvidence AI?

While largely accurate and evidence-based, the review notes that clinicians still feel the need to double-check its output, especially due to concerns about "annoyingly confident" wrong answers. It emphasizes that human oversight remains crucial.

Is OpenEvidence AI free to use?

Yes, the OpenEvidence AI review states that the platform is currently free for verified U.S. clinicians and medical students who have a National Provider Identifier (NPI) number.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.