Let's be honest: the dream of controlling complex software like Blender with plain English is a big one. Imagine just telling it to “create a procedural city with random building heights” and watching the magic happen. For a little while, it seemed like OpenAI Codex was going to be the key that unlocked this new reality, translating our words directly into the code that makes Blender tick.

But as plenty of developers and 3D artists have found out, the road from a simple prompt to a perfect render is a lot bumpier than we hoped. The dream is definitely alive, but you can't just plug in a chatbot and expect it to work perfectly.

This guide is a down-to-earth look at the current state of OpenAI Codex integrations with Blender. We’ll get into what Codex has actually become, why general-purpose AIs often trip over their own feet with specialized software, and how the developer community is building some really smart workarounds to bridge that gap.

What's the deal with OpenAI Codex integrations with Blender?

First off, let's clear something up. OpenAI Codex was a seriously powerful AI model, descended from GPT-3, that OpenAI trained specifically to turn natural language into code. It was a big deal for Blender users because Python, the language it handled best, is the scripting language that powers Blender. The idea was simple enough: you describe what you want, and Codex spits out the Python script to make it happen in Blender.

But if you go looking for the Codex API, you'll find it's been retired. The name hasn't vanished, though. OpenAI now uses it for the "Codex agent," a coding agent built on newer models and included with ChatGPT's paid plans, so Codex-style coding help now lives inside the bigger ChatGPT ecosystem.

The goal for a Blender integration hasn't changed. You're still using an AI to generate scripts that talk to Blender's Python API (you'll often see this called "bpy"). These scripts can do all sorts of things, like create objects, add modifiers, tweak materials, or adjust the lighting. It’s a powerful "text-to-script" idea, but as we're about to see, it’s a long way from having a true AI co-pilot that actually understands what you're doing.
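To make the "text-to-script" idea concrete, here is a minimal sketch of the kind of script such a model might hand back for a prompt like "add a beveled red cube and a sun light," plus a small pre-flight check that parses it before anything is sent to Blender. The script text is illustrative, not captured Codex output, and `bpy_calls` is a hypothetical helper. Because `ast.parse` only reads the code and never runs it, this works in plain Python (3.9 or later) without Blender installed.

```python
import ast

# Illustrative script of the kind a text-to-script model might return.
# The bpy calls are real Blender 2.8+ API; the script itself is an example,
# not output captured from Codex.
GENERATED_SCRIPT = '''
import bpy

bpy.ops.mesh.primitive_cube_add(size=2, location=(0, 0, 1))
cube = bpy.context.active_object

bevel = cube.modifiers.new(name="Bevel", type='BEVEL')
bevel.width = 0.1

mat = bpy.data.materials.new(name="Red")
mat.diffuse_color = (0.8, 0.1, 0.1, 1.0)
cube.data.materials.append(mat)

sun_data = bpy.data.lights.new(name="Sun", type='SUN')
sun = bpy.data.objects.new(name="Sun", object_data=sun_data)
bpy.context.collection.objects.link(sun)
'''


def bpy_calls(source: str) -> list[str]:
    """Hypothetical helper: list every call made directly on the bpy module.

    ast.parse raises SyntaxError if the model returned malformed Python,
    so this doubles as a cheap pre-flight check. It cannot tell whether a
    call exists in *your* Blender version; only running it there can.
    """
    tree = ast.parse(source)
    calls = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = ast.unparse(node.func)  # e.g. "bpy.data.materials.new"
            if name.startswith("bpy."):
                calls.append(name)
    return calls


if __name__ == "__main__":
    for call in bpy_calls(GENERATED_SCRIPT):
        print(call)
```

A check like this catches broken syntax early, but notice what it can't catch: a call that parses fine yet no longer exists in the installed Blender. That gap is exactly where the trouble starts.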

The problem with OpenAI Codex integrations with Blender: Why general AI chatbots get confused

Anyone who's tried using a general AI chatbot for a very specific, technical job has probably felt that little twinge of frustration. You ask it to write a script, and it confidently gives you code that breaks the second you run it. This is a common story in the Blender community, where developers have learned that these AIs, for all their smarts, have some pretty big blind spots.

Outdated knowledge

Blender is always being updated, and its Python API changes from one version to the next. AI models, however, are trained on massive but frozen-in-time snapshots of the internet. This means their knowledge of the Blender API is almost always out of date.

A classic problem is the AI generating code that uses a function from Blender 2.8 that was tweaked in version 3.0 and gone completely by 4.0. The AI has no idea. It serves up the old code with total confidence, and unless you're a Blender scripting pro, you're left scratching your head trying to fix an error you don't even understand.
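One defensive habit is to stop a script from assuming a single API version. Blender exposes its own version as the tuple `bpy.app.version`, so a script can branch on it. The sketch below does this for one real break: Blender 4.0 renamed several Principled BSDF shader inputs. The mapping lists only a handful of those renames, and `principled_input` is a hypothetical helper, so treat it as an illustration and check the 4.0 release notes for the full list.

```python
# Principled BSDF inputs renamed in Blender 4.0 (pre-4.0 name -> 4.0+ name).
# Partial list for illustration; see the 4.0 release notes for the rest.
RENAMED_IN_4_0 = {
    "Specular": "Specular IOR Level",
    "Transmission": "Transmission Weight",
    "Emission": "Emission Color",
    "Clearcoat": "Coat Weight",
    "Sheen": "Sheen Weight",
    "Subsurface": "Subsurface Weight",
}
# Reverse map, so 4.0-style names also work when targeting older releases.
_RENAMED_BEFORE_4_0 = {new: old for old, new in RENAMED_IN_4_0.items()}


def principled_input(name: str, version: tuple) -> str:
    """Return the Principled BSDF input name that exists in `version`.

    `version` is a tuple shaped like bpy.app.version, e.g. (3, 6, 5).
    Names that were not renamed pass through unchanged.
    """
    if version >= (4, 0):
        return RENAMED_IN_4_0.get(name, name)
    return _RENAMED_BEFORE_4_0.get(name, name)
```

Inside Blender you would write something like `bsdf.inputs[principled_input("Emission", bpy.app.version)]`, so the same script finds the emission color socket on both 3.x and 4.x. Some of these inputs also changed behavior in 4.0, so a rename map fixes the lookup, not necessarily the look.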

Code hallucinations

In the AI world, they call it a "hallucination" when a model generates information that sounds right but is actually wrong or makes no sense. When this happens with code, it can be maddening.

For instance, you could ask an AI to build a complex shader node setup. It might write a script that tries to plug a "Color" output into a "Vector" input or just invents node sockets that don't exist. The code might look okay at first glance, and the AI will present it like it's the perfect answer, but when you run it, Blender either throws an error or you get a visual mess. The AI doesn't get the logic of why certain nodes connect a certain way; it's just mimicking patterns it saw in its training data.
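Invented sockets are cheap to catch if the code that builds the node tree checks each proposed link against sockets known to exist. The sketch below uses a small, hand-written schema. The node identifiers (`ShaderNodeTexImage`, `ShaderNodeBsdfPrincipled`, and so on) and socket type names (`RGBA`, `VECTOR`, ...) follow Blender's, but the schema covers only a few sockets and is an assumption, not something read from Blender. It is also stricter than Blender itself, which silently converts some types (a color plugged into a vector input, for example) instead of refusing the link.

```python
from dataclasses import dataclass

# Hand-written subset of Blender shader sockets (socket name -> socket type).
# Illustrative only; a real check would read node.inputs / node.outputs.
SCHEMA = {
    "ShaderNodeTexCoord": {"outputs": {"UV": "VECTOR", "Generated": "VECTOR"}, "inputs": {}},
    "ShaderNodeTexImage": {
        "outputs": {"Color": "RGBA", "Alpha": "VALUE"},
        "inputs": {"Vector": "VECTOR"},
    },
    "ShaderNodeBsdfPrincipled": {
        "outputs": {"BSDF": "SHADER"},
        "inputs": {"Base Color": "RGBA", "Roughness": "VALUE", "Normal": "VECTOR"},
    },
}


@dataclass(frozen=True)
class Link:
    from_node: str
    from_socket: str
    to_node: str
    to_socket: str


def check_links(links: list[Link]) -> list[str]:
    """Return one human-readable problem for every link that fails the check."""
    problems = []
    for link in links:
        outputs = SCHEMA.get(link.from_node, {}).get("outputs", {})
        inputs = SCHEMA.get(link.to_node, {}).get("inputs", {})
        if link.from_socket not in outputs:
            problems.append(f"{link.from_node} has no output '{link.from_socket}'")
        elif link.to_socket not in inputs:
            problems.append(f"{link.to_node} has no input '{link.to_socket}'")
        elif outputs[link.from_socket] != inputs[link.to_socket]:
            problems.append(
                f"{link.from_node}.{link.from_socket} ({outputs[link.from_socket]}) -> "
                f"{link.to_node}.{link.to_socket} ({inputs[link.to_socket]})"
            )
    return problems
```

Running a check like this on the model's proposed links before calling `node_tree.links.new` turns an invented socket into a readable message, instead of a `KeyError` halfway through the script.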

Poor code design

This is probably the biggest hurdle. Writing good code isn't just about getting one command right; it's about designing something that's efficient, easy to maintain, and well-structured. General-purpose AIs are just plain bad at this.

It’s like asking for directions. A basic AI can give you a list of turns to get from A to B. But a real co-pilot understands the whole trip. They know to avoid that one street that always has terrible traffic during rush hour, and they can suggest a nicer, more scenic route if you're not in a hurry.

AI models often write clunky, inefficient code because they don't see that bigger picture. I saw a great example of this on the Blender Artists forum where someone asked an AI to create a "favorites" system for objects. Instead of the simple, clean solution (just storing a direct pointer to the object), the AI built a messy system that was constantly checking every single object in the scene whenever anything changed. It caused huge performance slowdowns. The script "worked," but its design was fundamentally broken.
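The difference between the two designs is easy to see in plain Python. This is a sketch with stand-in classes rather than Blender's own types, and `on_change_scan` and `Favorites` are hypothetical names. The first function is the pattern described above: re-checking every object on each change. The second keeps direct references and only does work when the user edits the list. Inside Blender, the idiomatic "direct pointer" would be a `PointerProperty` to `bpy.types.Object` stored on the scene, because raw Python references to Blender data can become invalid after an undo.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare by identity, like references to real objects
class SceneObject:
    name: str
    is_favorite: bool = False  # flag the scanning approach has to inspect


@dataclass
class Scene:
    objects: list[SceneObject]
    objects_visited: int = 0  # counts the work done by the scanning approach


def on_change_scan(scene: Scene) -> list[SceneObject]:
    """Anti-pattern: rebuild the favorites by visiting every object on each change."""
    favorites = []
    for obj in scene.objects:
        scene.objects_visited += 1
        if obj.is_favorite:
            favorites.append(obj)
    return favorites


@dataclass
class Favorites:
    """Better: hold direct references, updated only when the user edits them."""
    items: list[SceneObject] = field(default_factory=list)

    def add(self, obj: SceneObject) -> None:
        if obj not in self.items:
            self.items.append(obj)

    def remove(self, obj: SceneObject) -> None:
        if obj in self.items:
            self.items.remove(obj)
```

In a 1,000-object scene, ten changes cost 10,000 object visits with the scanning approach, and that cost keeps growing with the scene. The reference list costs nothing until someone actually adds or removes a favorite.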

Community solutions: Custom tools

Tired of the limits of generic chatbots, the Blender developer community did what it always does: it started building better tools. Instead of trying to get one giant, perfect script out of an AI, they’re creating smarter integrations that let the AI have a real conversation with Blender.

The Model Context Protocol (MCP): A key solution

A big piece of the puzzle is something called the Model Context Protocol (MCP), an open standard that Anthropic introduced in late 2024. Put simply, MCP is like a universal translator that lets large language models (LLMs) talk to your local apps and tools in a structured way.

Instead of the AI just guessing and generating a huge block of code, MCP lets the AI use specific, pre-defined "tools" that the application offers. It changes the interaction from the AI giving a speech to it having a back-and-forth conversation. The AI can ask questions, find out what's happening in the application right now, and run commands that the tool's developers have already written and tested. This is exactly the kind of setup a Redditor was dreaming of for a smarter Blender co-pilot.
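Under the hood, MCP messages are JSON-RPC 2.0: a client discovers what a server offers with a `tools/list` request and invokes a tool with `tools/call`, passing its `name` and `arguments`. The sketch below fakes just those two methods in one function so you can see the shape of the exchange. It assumes two hypothetical tools with stub results, skips the initialization handshake and the transport entirely, and only checks required argument names rather than doing full JSON Schema validation. A real server would normally be built on the official MCP SDK.

```python
import json

# Hypothetical tools with stub handlers; a Blender bridge would call bpy instead.
TOOLS = {
    "get_scene_info": {
        "description": "List the objects in the current scene.",
        "inputSchema": {"type": "object", "properties": {}, "required": []},
        "handler": lambda args: {"objects": ["Cube", "Camera", "Light"]},
    },
    "set_object_location": {
        "description": "Move an object, given its name and an [x, y, z] location.",
        "inputSchema": {
            "type": "object",
            "properties": {"name": {"type": "string"}, "location": {"type": "array"}},
            "required": ["name", "location"],
        },
        "handler": lambda args: {"moved": args["name"], "to": args["location"]},
    },
}


def _reply(req_id, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer one JSON-RPC request for tools/list or tools/call."""
    req = json.loads(raw)
    req_id, params = req.get("id"), req.get("params", {})
    if req["method"] == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(req_id, {"tools": tools})
    if req["method"] == "tools/call":
        tool = TOOLS.get(params.get("name"))
        args = params.get("arguments", {})
        if tool is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        missing = [key for key in tool["inputSchema"]["required"] if key not in args]
        if missing:
            return _reply(req_id, error={"code": -32602, "message": f"Missing arguments: {missing}"})
        output = tool["handler"](args)
        content = [{"type": "text", "text": json.dumps(output)}]
        return _reply(req_id, {"content": content, "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})
```

The useful property shows up when the model gets something wrong: a tool name it made up comes back as an error it can read and react to, instead of becoming a script that fails inside Blender.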

How MCP servers work

Tools like BlenderMCP and Blender Open are the translators in this scenario. They run as a small server that listens for instructions from an LLM (like Claude or a model you run on your own computer) and turns them into specific, valid commands inside Blender.

This is a much more reliable way of doing things. The AI isn't trying to write a script from memory. Instead, it's just picking the right "tool" from the MCP server's list, like "get_scene_info" or "download_polyhaven_asset". The server then runs code its developers have already written and tested against Blender, so correctness no longer depends on the model remembering the current API.

```mermaid
graph TD
    A[Large Language Model LLM] -->|1. User Prompt: 'Find wood texture'| B(MCP Server);
    B -->|2. Selects Tool: download_polyhaven_asset| C[Blender];
    C -->|3. Executes Command & Returns Result| B;
    B -->|4. Translates Result to Natural Language| A;
    A -->|5. Response to User: 'Texture applied'| D{User};
```

What this unlocks

This structured approach makes things possible that are pretty much impossible with a simple text-to-script model:

- Scene awareness: You can ask real questions like "What's the name of the object I have selected?" and get an actual answer back from Blender.
- Asset management: Prompts like "Find a good wood texture on PolyHaven and apply it to the floor" can actually work.
- Step-by-step control: Instead of trying to get one perfect script for a complex task, you can walk the AI through it one step at a time, correcting it along the way.
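Scene awareness mostly means the bridge can read Blender's state and send it back as data. Here is a minimal sketch of a selection-reading tool, assuming it receives `bpy.context`. The attributes it reads (`active_object`, `selected_objects`, and each object's `name`, `type`, and `location`) are real Blender attributes, while `describe_selection` and `selection_reply` are hypothetical names. Because it only reads attributes, it can be tested outside Blender with stand-in objects.

```python
import json


def describe_selection(context) -> dict:
    """Summarize the active and selected objects as JSON-ready data.

    Inside Blender, pass bpy.context; any object with the same attributes works.
    """
    active = context.active_object
    return {
        "active": active.name if active is not None else None,
        "selected": [
            {
                "name": obj.name,
                "type": obj.type,  # e.g. 'MESH', 'CAMERA', 'LIGHT'
                "location": [round(v, 3) for v in obj.location],
            }
            for obj in context.selected_objects
        ],
    }


def selection_reply(context) -> str:
    """Serialize the summary so an MCP tool can return it as text."""
    return json.dumps(describe_selection(context))
```

Asked "What's the name of the object I have selected?", the model calls a tool like this and answers from the returned data instead of guessing.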

This video demonstrates how connecting an AI like Claude to Blender can solve major headaches and create a powerful creative agent.

Building a real AI co-pilot: Lessons from other fields

The struggles the Blender community is facing, like dealing with outdated information, generic AI models, and the need for custom workflows, aren't new. Businesses in just about every industry hit the same walls when they try to apply general AI to specialized work, especially in a field like customer support.

Just hooking up a generic chatbot to a complex system is a recipe for frustration. To build an AI assistant that's actually helpful, you need a platform that’s designed from the start for deep, context-aware integration.

Why you need a specialized platform

Any AI integration that actually works, whether it's for 3D art or IT support, rests on a few key ideas that you just don't get from an off-the-shelf model.

It has to connect to your existing tools: you shouldn't have to change your entire workflow just to use an AI. It needs to work inside the software you already use, whether that's Blender for an artist or a helpdesk for a support agent.

It needs to be trained on your knowledge: the AI has to learn from your specific data, not just a generic dump of the public internet. To be accurate, it needs access to the latest, most relevant information for your situation.

You need a customizable workflow: you should be in complete control of how the AI behaves. That means defining what it can and can't do, what its personality is like, and, most importantly, when it needs to pass a task off to a human.

How customer support solves similar problems

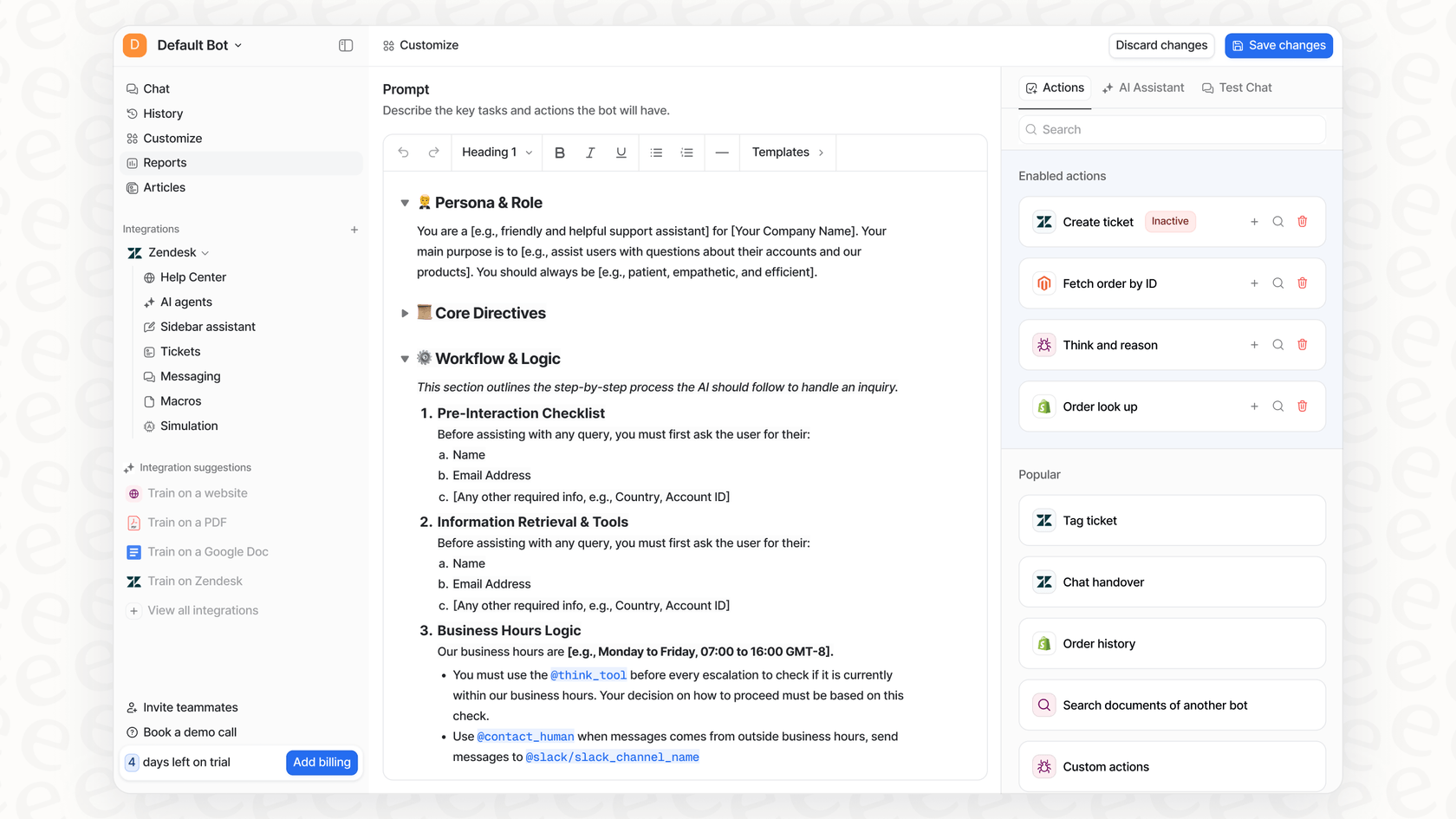

This is exactly the problem eesel AI was built to solve for customer service and internal support teams. It's a platform based on the simple fact that generic AI just isn't good enough.

- It plugs into the tools you already use: eesel AI connects directly with the helpdesks teams rely on, like Zendesk and Freshdesk, and pulls knowledge from places like Confluence or Google Docs. You don't have to rip everything out and start over.
- It learns from your team's knowledge: eesel AI studies your past support tickets, macros, and internal documents. This fixes the "outdated API" problem by making sure its answers are always accurate, current, and sound like they're coming from your company.
- It puts you in the driver's seat: With a powerful workflow engine you can manage yourself, you can define custom actions, set the AI's persona, and decide exactly which kinds of questions it should handle. This gives it the "design sense" that generic AIs are missing, making sure it works safely and effectively for your business.

The future of OpenAI Codex integrations with Blender

While the dream of a perfect AI co-pilot for Blender is still a work in progress, the way forward is getting clearer. It's not going to come from a single, all-knowing chatbot. It's going to be built by the community using dedicated tools like MCP servers that create a smart and reliable connection between the AI and the application.

The main takeaway here is pretty universal: for any specialized field, whether it's 3D modeling or customer support, real automation means moving beyond generic AI. Success comes from a platform that can integrate deeply, be customized completely, and learn from domain-specific knowledge.

If you're hitting these same AI integration roadblocks in your support operations, a specialized platform isn't just a nice idea; it's a necessity. Give your team the right tools for the job with eesel AI.

Frequently asked questions

No, the standalone OpenAI Codex API has been retired. OpenAI now uses the Codex name for a coding agent, built on newer models, that is available within ChatGPT's paid plans.

Currently, these integrations involve using the coding abilities within ChatGPT to generate Python scripts. These scripts are designed to interact with Blender's Python API ("bpy") to perform various tasks like creating objects or modifying scene elements.

Common issues include outdated knowledge about Blender's constantly evolving API, AI "hallucinations" leading to invalid code, and poor code design that results in inefficient or broken scripts. General AIs struggle with the nuanced logic of specialized software.

The Blender community is building custom tools and protocols like the Model Context Protocol (MCP). These solutions enable a more structured, conversational interaction between the AI and Blender, moving beyond simple text-to-script generation.

Yes, MCP significantly enhances practicality by allowing LLMs to use specific, pre-defined "tools" offered by an MCP server connected to Blender. This enables scene awareness, asset management, and step-by-step control, making interactions much more reliable and effective.

Specialized platforms ensure deep integration with existing tools, are trained on domain-specific knowledge (like Blender's API), and provide customizable workflows. This helps overcome the limitations of generic AIs, leading to more accurate, efficient, and context-aware assistance.


Article by Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.