We’ve all been there. You're trying to solve a problem with a support chatbot. You explain the whole situation, give your order number, and then… it asks you for the exact same information again. It's like the bot has the memory of a goldfish, and you're left wondering if you’re just shouting into a void.

The fix for this all-too-common headache is something called multi-turn AI conversations. It’s the tech that lets an AI actually remember the context of a chat, understand follow-up questions, and act more like a helpful person.

But while the idea is great, getting it right is notoriously tricky. This guide will walk you through what these conversations are, why they so often fall flat, and a straightforward way to get them working for your team.

What are multi-turn AI conversations?

First off, let's quickly define a "conversational turn." It's just one back-and-forth exchange: you say something, and the AI responds. A single-turn chat is a one-shot deal, like asking your smart speaker for the weather. You ask, it answers, and the conversation is over.

A multi-turn conversation, however, is a string of these turns all connected together. The secret sauce that makes this work is context. The AI’s ability to remember what was said a few minutes ago is what separates a genuinely useful assistant from a clunky, frustrating tool.

For instance, say a customer starts a chat with, "Where is my order?" After the bot gives them the status, the customer follows up with "Can you change the shipping address for it?"

A basic, single-turn bot would just get confused. It would probably say something like, "Sorry, I need an order number to do that." It has no idea what "it" refers to because it has already forgotten the first message. A proper multi-turn AI, on the other hand, understands that "it" means the order the customer just asked about and moves on to the next logical step: "Of course, what's the new address?" That's the difference between a dead end and a happy customer.
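If you're curious what "remembering" looks like under the hood, here's a minimal Python sketch. It assumes the chat history is stored as a list of `role`/`content` messages (a shape many chat-model APIs use), but the class and function names are made up for illustration, no real model is called, and `#4821` is an invented order number. The only difference between the two request builders is how much history travels with the newest message.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Stores every turn so later requests can include earlier context."""
    turns: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})


def single_turn_request(convo: Conversation) -> list[dict[str, str]]:
    # Only the newest message is sent, so the model never sees the order number.
    return convo.turns[-1:]


def multi_turn_request(convo: Conversation) -> list[dict[str, str]]:
    # The whole history is sent, so "it" can be linked back to the order.
    return list(convo.turns)


convo = Conversation()
convo.add("user", "Where is my order #4821?")
convo.add("assistant", "Order #4821 will arrive Tuesday.")
convo.add("user", "Can you change the shipping address for it?")

print(len(single_turn_request(convo)))  # 1
print(len(multi_turn_request(convo)))   # 3
```

The single-turn bot receives just the question about "it," which is exactly why it has to ask for the order number again.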

```mermaid
graph TD
    subgraph single["Single-turn conversation (fails)"]
        A["User: Where is my order?"] --> B["Bot: It will arrive Tuesday."]
        B --> C["User: Change the shipping address for it."]
        C --> D{"Bot forgets that 'it' means the order"}
        D --> E["Bot: I need an order number for that."]
    end
    subgraph multi["Multi-turn conversation (succeeds)"]
        F["User: Where is my order?"] --> G["Bot: It will arrive Tuesday."]
        G --> H["User: Change the shipping address for it."]
        H --> I{"Bot remembers the order context"}
        I --> J["Bot: Sure, what's the new address?"]
    end
```

The building blocks of effective multi-turn AI conversations

These smarter conversations don't just happen by magic. They’re built on a few key ideas working together. Understanding them helps explain why some bots feel intelligent while others feel like they're just reading from a script.

Dialogue state tracking (the AI's working memory)

Think of this as the AI's short-term memory. As you chat, it keeps track of key details: your name, your order ID, the product you're asking about, and what you're trying to accomplish. Without this, the AI is stuck in a loop, asking for the same info over and over. This simple memory is the foundation of any coherent conversation.
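A bare-bones version of this working memory might look like the Python sketch below. It assumes details can be picked out with simple regular expressions; the slot names and patterns are hypothetical, and real systems usually lean on the language model itself or a dedicated NLU component to extract them.

```python
import re

# Hypothetical slots and patterns; production systems usually let the model extract these.
SLOT_PATTERNS = {
    "order_id": re.compile(r"#(\d{4,})"),
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
}


class DialogueState:
    """A tiny working memory that keeps slot values across turns."""

    def __init__(self) -> None:
        self.slots: dict[str, str] = {}

    def update(self, user_message: str) -> None:
        # Fill (or overwrite) every slot the new message mentions.
        for slot, pattern in SLOT_PATTERNS.items():
            match = pattern.search(user_message)
            if match:
                # Use the capture group when the pattern has one, else the whole match.
                self.slots[slot] = match.group(1) if pattern.groups else match.group(0)

    def missing(self, required: list[str]) -> list[str]:
        # Only ask the customer for details we genuinely don't have yet.
        return [slot for slot in required if slot not in self.slots]


state = DialogueState()
state.update("Where is my order #4821?")
state.update("Also, my email is jo@example.com")
print(state.slots)  # {'order_id': '4821', 'email': 'jo@example.com'}
print(state.missing(["order_id", "new_address"]))  # ['new_address']
```

The `missing` check is what stops the goldfish behavior: the bot only asks for the new address, because the order ID is already on file.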

Contextual understanding (getting what you really mean)

A good conversational AI does more than just scan for keywords. It needs to figure out your intent, even when you don't spell it out. This means correctly interpreting pronouns like "it," "that," or "they" by looking back at the chat history. It’s about understanding what you actually mean, not just the words you typed. This is what lets an AI agent be flexible instead of breaking the second a user says something unexpected.
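To show the idea in its simplest possible form, here's a deliberately naive Python sketch that swaps the pronoun "it" for the most recently mentioned entity. The function name and the entity list are hypothetical, and this regex trick is only a stand-in: a modern language model resolves references by reading the whole chat history.

```python
import re

# Only "it" is handled here; "that" and "they" need more context than a regex has.
PRONOUN = re.compile(r"\bit\b", re.IGNORECASE)


def resolve_pronouns(message: str, recent_entities: list[str]) -> str:
    """Replace "it" with the most recently mentioned entity, if there is one."""
    if not recent_entities:
        return message  # nothing to refer back to, so leave the text alone
    antecedent = recent_entities[-1]
    # A function replacement avoids special handling of backslashes in the entity text.
    return PRONOUN.sub(lambda _match: antecedent, message)


entities = ["order #4821"]  # collected from earlier turns
print(resolve_pronouns("Can you change the shipping address for it?", entities))
# Can you change the shipping address for order #4821?
```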

Managing the conversation flow (and not freaking out over interruptions)

Let's be real: human conversations are messy. People ask clarifying questions, change their minds, or give information in a weird order. A solid AI needs a flexible "dialogue policy" to handle these curveballs. It should be able to pause what it's doing, answer a side question, and then pick back up where it left off without getting confused or making you start over.
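One common way to structure that "pause and pick back up" behavior is a stack of open tasks: a side question goes on top, and once it's answered the bot drops back to whatever was underneath. The Python sketch below shows only that bookkeeping. The class and task names are hypothetical, and a real dialogue policy also has to decide when a message actually is a side question.

```python
from typing import Optional


class DialogueManager:
    """Tracks open tasks so a side question doesn't erase the main one."""

    def __init__(self) -> None:
        self.stack: list[str] = []

    @property
    def current(self) -> Optional[str]:
        return self.stack[-1] if self.stack else None

    def push(self, task: str) -> None:
        # Starting a task or taking a detour both pause whatever is underneath.
        self.stack.append(task)

    def complete(self) -> Optional[str]:
        # Close the current task and return the task we resume (None if all done).
        if self.stack:
            self.stack.pop()
        return self.current


dm = DialogueManager()
dm.push("change_shipping_address")
dm.push("answer_side_question")  # "Wait, do you ship to Canada?"
print(dm.complete())  # change_shipping_address
```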

Building these systems to be flexible from the ground up is a massive technical challenge. It requires some serious expertise in how conversations work. That’s why platforms like eesel AI are built to handle these complexities for you, so you can focus on designing a great customer experience instead of getting lost in the technical weeds.

Why most multi-turn AI conversations fail

Knowing the parts helps us see why so many AI agents are still so frustrating to deal with. Even with powerful tech, there are a few common tripwires that can derail a conversation and make customers feel completely ignored.

Getting lost in the conversation

Every AI language model has a "context window": a cap on how much text (measured in tokens) it can take into account at once. In a long or complicated chat, the AI can effectively forget important details you mentioned at the beginning, either because older messages get trimmed once the chat outgrows the window or because the model simply loses track of them. It's like talking to someone who completely zoned out ten minutes ago. The result is weird, irrelevant answers or requests for information you've already given. This is a surprisingly common issue, even for the most advanced models out there: they lose track of earlier details, struggle to maintain context, and lean too heavily on the last thing you said.
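A common mitigation is to trim old turns deliberately instead of letting them fall off, while pinning the important facts (like the order ID) into the system prompt so they survive the cut. Here's a minimal Python sketch of that idea. It assumes a fixed token budget and uses a word count as a rough stand-in for a real tokenizer; the function names and the budget are illustrative only.

```python
def estimate_tokens(text: str) -> int:
    # Rough stand-in: real tokenizers split text differently, so this is only an estimate.
    return len(text.split())


def trim_history(system_prompt: str, turns: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the newest turns that fit within `budget`."""
    kept: list[str] = []
    used = estimate_tokens(system_prompt)
    # Walk backwards so the most recent turns are kept first.
    for turn in reversed(turns):
        cost = estimate_tokens(turn)
        if used + cost > budget:
            break  # stop at the first turn that doesn't fit, so no gaps appear
        kept.append(turn)
        used += cost
    return [system_prompt] + kept[::-1]


history = [
    "user: Where is my order #4821?",
    "assistant: It will arrive Tuesday.",
    "user: Can you change the shipping address for it?",
]
# The order ID is pinned in the system prompt, so dropping the first turn is safe.
system = "You are a support agent. Known facts: order_id=4821"
print(trim_history(system, history, budget=20))
# Keeps the system prompt and only the newest user turn.
```

Stopping at the first turn that doesn't fit (rather than skipping it and trying older ones) keeps the remaining history contiguous, which avoids confusing the model with a conversation that has holes in it.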

The "hallucinated user" problem

If you've ever tried to build your own chatbot, you might have seen this bizarre behavior. You show the model a chat history with a clear "User:" and "Assistant:" pattern. The model sees this and, in its effort to be helpful, writes its own response and then invents a new line for the user. It's a classic case of an AI following a pattern a little too faithfully: it keeps continuing the transcript without understanding that its turn is over.
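Many chat-completion APIs let you pass stop sequences so generation halts as soon as the model starts a new "User:" line, and sending messages with explicit roles instead of a raw transcript helps too. As a belt-and-braces guard, you can also trim the output yourself. The Python sketch below assumes the "User:" label format described above; the function name and stop strings are illustrative.

```python
# Assumed stop strings, matching the "User:" transcript format described above.
STOP_SEQUENCES = ("\nUser:", "\nuser:")


def cut_at_stop(generated: str, stops: tuple[str, ...] = STOP_SEQUENCES) -> str:
    """Trim model output at the first point where it starts writing the user's turn."""
    cut = len(generated)
    for stop in stops:
        index = generated.find(stop)
        if index != -1:
            cut = min(cut, index)  # the earliest stop string wins
    return generated[:cut].rstrip()


raw = "Sure, what's the new address?\nUser: 42 Elm Street\nAssistant: Done, it's updated!"
print(cut_at_stop(raw))  # Sure, what's the new address?
```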

The endless cycle of prompt tuning

So many teams get stuck in a "whack-a-mole" trap, trying to fix AI behavior by constantly tweaking prompts. You add a strict rule like "Do not write for the user," which might fix one thing but then cause a totally new problem somewhere else. You can spend weeks adjusting instructions, only to find the AI is still making silly mistakes with real customers.

This is where you really need a data-driven way to test things. Rather than guessing, you can use tools like eesel AI, which has a powerful simulation mode. You can test your setup on thousands of your actual past support tickets to see exactly how it would have responded. This lets you find and fix problems in a safe environment before a single customer sees them, replacing guesswork with confident, data-backed improvements.
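The article doesn't describe how eesel AI's simulation mode works internally, so here's a generic Python sketch of the underlying idea: replay past conversations through a bot offline and flag the replies that would have gone wrong. The `bot` and `is_failure` callables, the ticket fields, and the "re-asks for the order number" check are all hypothetical placeholders for your own model call and quality checks.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PastTicket:
    ticket_id: str
    history: list[str]  # earlier turns from the real conversation
    last_message: str   # the message the bot has to answer


def replay(
    tickets: list[PastTicket],
    bot: Callable[[list[str], str], str],
    is_failure: Callable[[PastTicket, str], bool],
) -> list[str]:
    """Run every past ticket through the bot offline; return IDs of failed replies."""
    failed = []
    for ticket in tickets:
        reply = bot(ticket.history, ticket.last_message)
        if is_failure(ticket, reply):
            failed.append(ticket.ticket_id)
    return failed


def reasks_for_order(ticket: PastTicket, reply: str) -> bool:
    # Hypothetical check: an order number ("#...") was already given, yet the bot asks again.
    already_given = any("#" in turn for turn in ticket.history)
    return already_given and "order number" in reply.lower()


def forgetful_bot(history: list[str], message: str) -> str:
    # Stand-in for a single-turn bot that ignores the history it's handed.
    return "Sorry, I need an order number to do that."


tickets = [PastTicket("T-1", ["Where is my order #4821?"], "Change the address for it.")]
print(replay(tickets, forgetful_bot, reasks_for_order))  # ['T-1']
```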

How to successfully launch multi-turn AI conversations for your support team

Putting this technology to work doesn't have to be some huge, risky project. If you follow a practical, step-by-step plan, you can launch a multi-turn AI agent that actually helps customers from day one.

Step 1: Unify your knowledge sources

Having conversational memory is only half the picture. For an AI to be truly helpful, it needs access to all of your company's knowledge. That means connecting it to your help center, internal wikis, developer docs, and, most importantly, all the answers hidden in your old support tickets.

Trying to manually copy and paste all of that information is a non-starter. The solution is seamless, instant integration. eesel AI connects with over 100 tools you already use, like Zendesk, Confluence, and Google Docs. This creates a single source of truth for your AI in minutes, not months, letting it learn your brand voice and specific solutions on its own.
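Conceptually, "a single source of truth" means merging documents from every tool into one searchable index while remembering where each one came from. Here's a toy Python sketch of that. The source names are hypothetical, simple word overlap stands in for the embedding or search-engine retrieval a real system would use, and it isn't a description of how eesel AI's integrations work.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    source: str  # where it came from, e.g. "help_center" or "past_tickets"
    title: str
    text: str


def build_index(*sources: list[Doc]) -> list[Doc]:
    """Merge documents from every source into one list, keeping their provenance."""
    return [doc for source in sources for doc in source]


def search(index: list[Doc], query: str, top_k: int = 1) -> list[Doc]:
    """Rank documents by how many words they share with the query (a toy scorer)."""
    words = set(query.lower().split())
    scored = [(len(words & set(doc.text.lower().split())), doc) for doc in index]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:top_k]]


help_center = [Doc("help_center", "Shipping times", "Orders ship within two business days")]
past_tickets = [
    Doc("past_tickets", "Address change", "To change a shipping address before dispatch, edit the order page")
]
index = build_index(help_center, past_tickets)
print(search(index, "How do I change my shipping address")[0].source)  # past_tickets
```

Keeping the `source` field on every document means an answer can always be traced back to the help center article or past ticket it came from.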

Step 2: Define and test your automation scope

Don't try to automate everything all at once. The smart move is to start with a narrow, clearly defined scope. Pick a few common, straightforward topics like "password reset requests" or "order status inquiries." Before you let the AI talk to customers, you need to know how well it will actually perform.

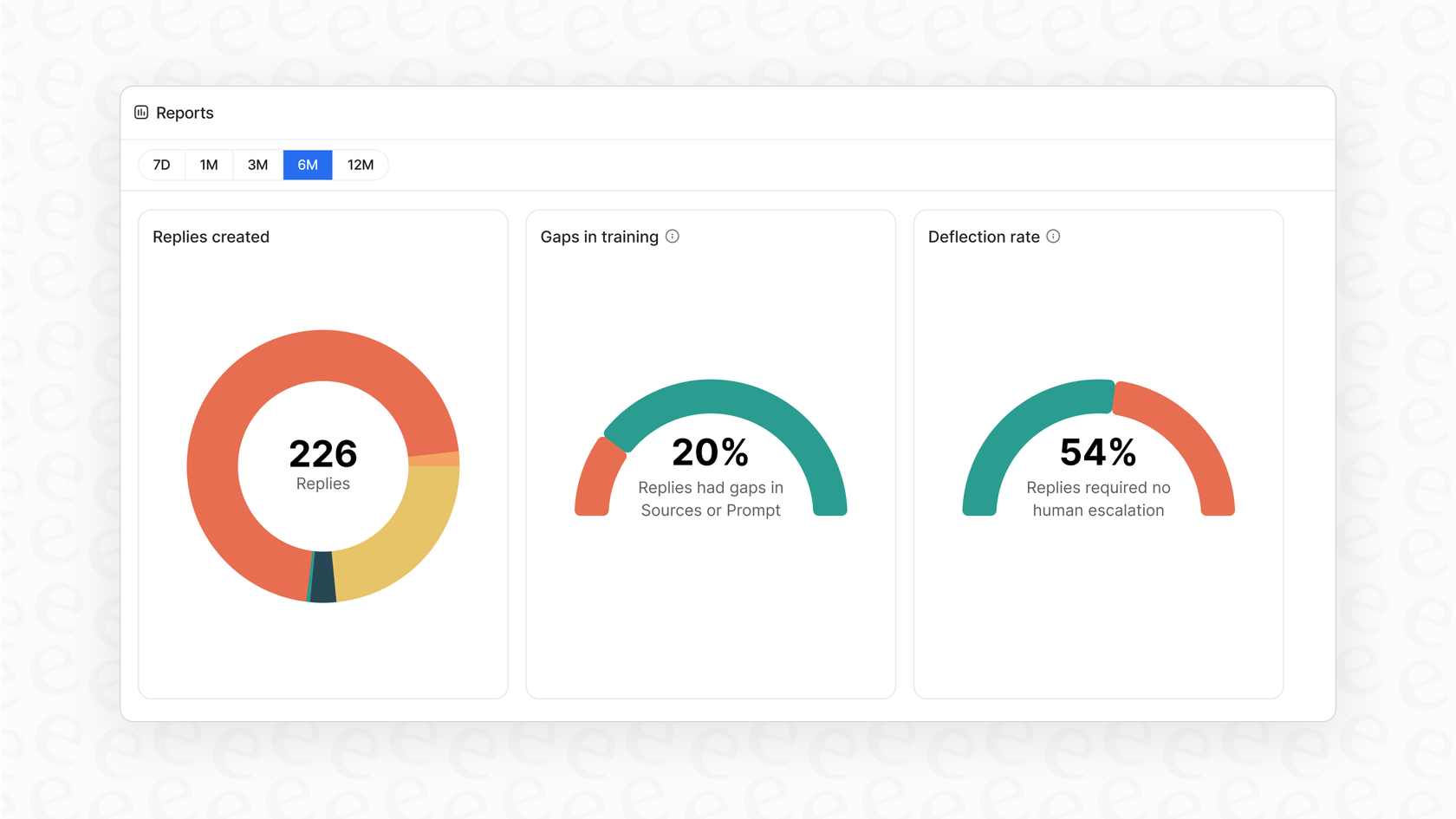

This is where testing is everything. With eesel AI, you can selectively automate just certain types of tickets. Then, you can run simulations on your past conversations to get a solid, data-backed forecast of the resolution rate for that specific topic. This gives you the proof you need to roll it out, set clear expectations for your team, and move forward without just hoping for the best.
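Under the hood, a per-topic resolution-rate forecast boils down to simple arithmetic over simulation results. The Python sketch below assumes each simulated ticket has already been labeled with a topic and a resolved/unresolved outcome; the topics, the numbers, and the 80% threshold are made up for illustration, not real results.

```python
from collections import defaultdict


def resolution_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Turn simulation results (topic, resolved?) into a per-topic resolution rate."""
    totals: dict[str, int] = defaultdict(int)
    resolved: dict[str, int] = defaultdict(int)
    for topic, ok in results:
        totals[topic] += 1
        resolved[topic] += ok  # True counts as 1, False as 0
    return {topic: resolved[topic] / totals[topic] for topic in totals}


def pick_scope(rates: dict[str, float], threshold: float = 0.8) -> set[str]:
    # Automate only the topics whose simulated resolution rate clears the bar.
    return {topic for topic, rate in rates.items() if rate >= threshold}


results = [
    ("password_reset", True), ("password_reset", True),
    ("password_reset", True), ("password_reset", False),
    ("order_status", True), ("order_status", True),
    ("billing_dispute", False), ("billing_dispute", True),
]
rates = resolution_rates(results)
print(rates)  # {'password_reset': 0.75, 'order_status': 1.0, 'billing_dispute': 0.5}
print(pick_scope(rates))  # {'order_status'}
```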

Step 3: Roll out gradually and iterate

Once your simulations look good, it's time to go live, but do it slowly. Launch the AI to a small group of users first, or have it handle only a specific type of ticket. This keeps the risk low and lets you watch how it performs in a controlled setting.
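A common way to send "a small group of users" to the AI is to hash each customer ID into a stable bucket, so the same person gets the same experience every time they come back. Here's a minimal Python sketch; the function names, ticket types, and routing targets are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Deterministically decide whether a customer is in the AI rollout group."""
    # Hashing (rather than random sampling) gives each customer a stable bucket 0-99.
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def route(user_id: str, ticket_type: str, percent: int, allowed_types: set[str]) -> str:
    # Only the chosen ticket types, for only a slice of customers, go to the AI.
    if ticket_type in allowed_types and in_rollout(user_id, percent):
        return "ai_agent"
    return "human_agent"


share = sum(in_rollout(f"user-{i}", 10) for i in range(10_000)) / 10_000
print(f"{share:.1%} of sample customers would get the AI")  # roughly 10%
```

Because a customer's bucket never changes, raising `percent` later only adds people to the AI group; nobody who already had it gets switched back.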

Use this early phase to gather feedback and see what’s working. The analytics inside eesel AI don't just show you what the AI did; they actively flag the questions it couldn't answer. This gives you a clear to-do list for new knowledge base articles. It can even help you turn resolved tickets into new draft articles automatically, creating a smart feedback loop that makes both your AI and your help center better over time.
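The "flag what it couldn't answer" loop can be approximated with a simple counter over conversation logs. This Python sketch assumes each bot turn is logged with a topic label and an answered/unanswered flag; the field names are hypothetical, and it makes no claim about how eesel AI's analytics are built.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class BotTurn:
    topic: str      # label from your own ticket tagging
    answered: bool  # False when the AI fell back to "I don't know" or a handoff


def knowledge_gaps(log: list[BotTurn], top_n: int = 3) -> list[tuple[str, int]]:
    """Count unanswered topics so the most common gaps become a to-do list."""
    misses = Counter(turn.topic for turn in log if not turn.answered)
    return misses.most_common(top_n)


log = [
    BotTurn("order_status", True),
    BotTurn("international_returns", False),
    BotTurn("gift_cards", False),
    BotTurn("international_returns", False),
]
print(knowledge_gaps(log))  # [('international_returns', 2), ('gift_cards', 1)]
```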

Move beyond simple chatbots with multi-turn AI conversations

Multi-turn conversations are a massive step up for automated support, but getting them right takes more than just a fancy language model. Success depends on solid memory, deep context, and a smart, data-driven way of putting it all together.

Too many teams get stuck on the common hurdles: AIs that get lost, unpredictable behavior, and the frustrating, endless cycle of tweaking prompts.

This is where having an all-in-one platform makes a world of difference. Instead of wrestling with APIs and guesswork, eesel AI gives you a complete solution that lets you go live in minutes. You can test confidently with your own historical data and connect all your knowledge sources without a fuss. Best of all, eesel AI offers transparent pricing without charging per resolution, so your costs don't spiral out of control as you grow.

Ready to see what a true multi-turn AI conversation can do for your team? Start your free trial with eesel AI or book a demo to see our simulation engine in action.

Frequently asked questions

What is the main benefit of multi-turn AI conversations?

The primary benefit is a more natural and helpful support experience. Unlike single-turn bots, multi-turn AI conversations remember context, understand follow-up questions, and handle complex interactions more effectively, which leads to happier customers.

How do multi-turn AI conversations remember what was already said?

They use "dialogue state tracking," which acts as the AI's short-term memory by keeping track of key details like order IDs or product names. This lets the AI recall earlier information and understand follow-up questions without asking for the same details repeatedly.

What are the most common challenges, and how can they be overcome?

Common challenges include the AI getting lost because of limited context windows, the "hallucinated user" problem, and endless prompt tuning. These can be overcome by using platforms that offer robust dialogue state tracking, context management, and simulation tools to test and refine the AI's behavior before deployment.

Can multi-turn AI conversations handle interruptions or clarifying questions?

Yes. Effective multi-turn AI conversations are designed with flexible "dialogue policies" to manage conversation flow. This allows the AI to pause, answer a clarifying question, or take in a new piece of information, and then return to the original task without making the user start over.

What is the best way to give the AI the knowledge it needs?

The best approach is to integrate the AI with all existing company knowledge sources, such as help centers, internal wikis, and historical support tickets. This gives the AI a single, comprehensive source of truth, enabling it to provide consistent, accurate solutions.

How can teams launch multi-turn AI conversations with confidence?

Start with a narrow automation scope and rigorously test performance with simulations on historical data. A gradual rollout to small user groups or specific ticket types, combined with analytics that identify unanswered questions, allows for continuous, data-backed iteration and scaling.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.