Let's be honest: AI is writing a huge chunk of the internet these days. From blog posts to marketing emails to customer support replies, it's everywhere. One widely repeated prediction even claims that as much as 90% of online content could be synthetic by 2026.

This brings up a big question for any business that cares about sounding, well, human: How can you tell what’s written by a person and what’s from a bot?

A lot of people are pointing to AI detectors as the solution, but it's not quite that straightforward. We'll break down exactly how these tools work, what they get wrong, and why a different approach (proactive AI governance) is a much better strategy, especially for something as important as customer support.

What is an AI detector?

An AI detector is a tool that analyzes a piece of text and tries to figure out the odds that it was written by an AI like ChatGPT. The whole point is to spot the difference between the clean, statistical patterns of a machine and the slightly messy, unpredictable style of a human writer.

You'll see them being used in a few different places:

- In schools: Teachers use them to check if students are writing their own essays or getting a little too much help from AI.

- On websites: Platforms use them to find and flag fake product reviews or spammy comments.

- For SEO: Some marketers use them to avoid getting penalized by search engines for publishing generic, low-effort AI content.

The important thing to remember is that these tools don't give you a straight "yes" or "no." They spit out a probability score, something like "98% likely AI-generated," and that uncertainty is where things get tricky.

How AI detectors work

To really get why they’re not foolproof, we need to pop the hood and see how they operate. These tools are basically digital detectives, looking for clues and statistical fingerprints that AI writers tend to leave all over their work.

Machine learning and NLP

Underneath it all, most AI detectors are AI models themselves. They're trained on gigantic datasets filled with millions of examples of both human and AI writing. Using natural language processing (NLP), the detector learns to recognize sentence structure, grammar, and context, much like the AI tools it's trying to catch.

This training process makes the detector really good at spotting the subtle habits and statistical quirks that scream "a robot wrote this."

Perplexity

One of the biggest giveaways is something called perplexity. It sounds complicated, but it's really just a measure of how predictable a text is to a language model.

AI models build sentences by predicting which word is statistically likely to come next, and they usually pick one of the most likely options. This makes their writing super smooth and grammatically correct, but also… a little boring and predictable. When a language model finds a text easy to predict, that text has low perplexity.

Human writing? We’re all over the place. We use weird metaphors, inside jokes, and clunky sentences. We make typos. All that beautiful messiness makes our writing surprising, or what the detectors call high perplexity.
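To make "predictability" concrete: perplexity is usually defined as the exponential of the average negative log-probability a language model assigns to each word, given the words before it, i.e. exp(-(1/N) * sum of log p(word_i | earlier words)). The sketch below assumes you already have those per-word probabilities. The numbers are made up for illustration; a real detector would get them from a language model.

```python
import math

def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp of the average negative log-probability per token.

    token_probs[i] is the probability a language model assigned to the
    i-th token given the tokens before it (hypothetical inputs here).
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical probabilities: a "predictable" text where the model was
# confident about every next word, and a "surprising" one where it wasn't.
predictable = [0.9, 0.8, 0.95, 0.85]
surprising = [0.2, 0.05, 0.4, 0.1]

print(f"predictable: {perplexity(predictable):.2f}")  # low perplexity
print(f"surprising:  {perplexity(surprising):.2f}")   # high perplexity
```

A handy sanity check: if the model gives every word the same probability p, perplexity comes out to exactly 1/p, as if the model were choosing among 1/p equally likely words at each step.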

Burstiness

Another dead giveaway is burstiness. This is just a fancy way of talking about sentence variety.

When you write, you probably mix short, snappy sentences with long, winding ones. That creates a natural rhythm, high burstiness, that keeps things interesting. It's how conversations flow.

AI, on the other hand, often produces text with low burstiness. All the sentences are about the same length, with similar structures. It’s grammatically sound, but it can feel flat and monotonous, which is another easy clue for a detector.
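There's no single agreed formula for burstiness, and each detector uses its own. One simple approximation, assumed here purely for illustration, is the coefficient of variation of sentence lengths: the standard deviation of words per sentence divided by the mean. Uniform sentences score near 0, while a mix of short and long sentences scores higher. The sentence splitter is a naive regex, not what production tools use.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? followed by whitespace (naive) and count words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)

flat = ("The product is easy to use. The setup takes a few minutes. "
        "The support team is very helpful. The price is fair for teams.")
varied = ("I tried it. Honestly, the setup took me most of an afternoon because "
          "the docs skipped a step nobody warned me about. Worth it, though. Mostly.")

print(f"flat:   {burstiness(flat):.2f}")    # every sentence is 6 words long
print(f"varied: {burstiness(varied):.2f}")  # sentence lengths swing widely
```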

Figure: How an AI detector scores text. The input goes through a perplexity check ("Is it predictable?") and a burstiness check ("Are sentence structures varied?"). Predictable text with uniform sentences points to a high probability of AI; surprising text with varied sentences points to a low probability of AI.

Classifiers and embeddings

Finally, there are a couple of other things happening behind the scenes. Classifiers are the part of the model that makes the final judgment call. After looking at perplexity, burstiness, and other signals, the classifier decides whether to label the text "likely human" or "likely AI."

To make that call, it uses embeddings. This is a technical term for turning words into lists of numbers (vectors) so the model can analyze their relationships and meanings. It's what helps the detector understand context, which is key for telling a sophisticated AI from a human.
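The classifier step can be illustrated with one of the simplest classifiers there is, logistic regression. This is a toy stand-in, not how any particular detector is built: real detectors typically feed learned embeddings of the whole text into a much larger neural network, while here each text is reduced to two hypothetical, pre-normalized numbers (a perplexity score and a burstiness score), and the training data is invented. What it does show is where the "probability score" comes from: the classifier outputs a number between 0 and 1, not a yes or no.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(features, labels, lr=1.0, epochs=5000):
    """Fit weights and a bias with plain batch gradient descent on log loss."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Prediction error for this example: predicted P(AI) minus true label
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def prob_ai(model, x) -> float:
    """Probability (0..1) that the text described by features x is AI-written."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical training data: (normalized perplexity, normalized burstiness),
# label 1 = AI-written, 0 = human-written.
X = [(0.1, 0.1), (0.2, 0.15), (0.25, 0.2),  # AI-like: predictable, uniform
     (0.7, 0.6), (0.9, 0.8), (0.8, 0.7)]    # human-like: surprising, varied
y = [1, 1, 1, 0, 0, 0]

model = train_logistic(X, y)
print(f"P(AI), flat and predictable:  {prob_ai(model, (0.15, 0.125)):.2f}")
print(f"P(AI), varied and surprising: {prob_ai(model, (0.85, 0.75)):.2f}")
```

Notice that a text sitting between the two clusters would get a score near 0.5, which is exactly the kind of ambiguous result that makes these scores hard to act on.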

Where AI detectors fall short

While the technology is pretty smart, it's a long way from perfect. Relying completely on AI detection is a risky move for any serious business. Here’s why.

- They make mistakes (a lot). No detector is 100% accurate. They can flag human-written content as AI (a false positive), which can lead to some really awkward and unfair conversations. They can also miss AI-generated text (a false negative), which can give you a false sense of security.

- They can be biased against non-native speakers. This is a massive issue. Most detectors are trained on huge amounts of text written in standard American or British English, so they often incorrectly flag content from non-native English speakers as AI-generated just because the sentence structure or word choice differs from the training data. Simpler, more conventional phrasing also tends to score as low perplexity, which pushes those texts further toward an "AI" label.

- It's a constant cat-and-mouse game. AI models are improving at an incredible speed, and newer ones can write with more variety and creativity (higher perplexity and burstiness), which makes them sound more human. Detectors are always a step behind, trying to catch up, which makes them less reliable against the latest models.

- A "probability score" isn't proof. At the end of the day, an AI detector's score is just an educated guess, not concrete evidence. For a business, making decisions based on a probability score is a huge gamble, especially when your brand's reputation or customer trust is at stake.
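A quick bit of arithmetic shows why a score isn't proof. The numbers below are hypothetical: a detector that catches 95% of AI text and wrongly flags only 2% of human text, run on 1,000 documents of which 100 are actually AI-written. Even with those fairly generous rates, a noticeable share of the flagged documents turn out to be human.

```python
def flagged_breakdown(n_ai, n_human, true_positive_rate, false_positive_rate):
    """Return (correct flags, wrong flags, missed AI docs) for a batch of documents."""
    correct_flags = n_ai * true_positive_rate         # AI text flagged as AI
    false_positives = n_human * false_positive_rate   # human text flagged as AI
    false_negatives = n_ai - correct_flags            # AI text that slipped through
    return correct_flags, false_positives, false_negatives

tp, fp, fn = flagged_breakdown(n_ai=100, n_human=900,
                               true_positive_rate=0.95, false_positive_rate=0.02)
precision = tp / (tp + fp)  # share of flagged documents that really are AI
print(f"Flagged as AI: {tp + fp:.0f} ({fp:.0f} of them human-written)")
print(f"Missed AI documents: {fn:.0f}")
print(f"Chance a flagged document is really AI: {precision:.0%}")
```

In this scenario roughly one in six flagged documents (18 of 113) was written by a person, and the lower the real share of AI text in what you check, the worse that ratio gets.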

What about the cost of popular AI detectors?

If you're thinking about using these tools for your business, the costs can also be a real, and often unpredictable, headache.

- GPTZero: This is a popular one, with a free version for small checks (up to 10,000 words a month). For more serious use, their Essential plan is about $100 a year for 150,000 words a month, and the Premium plan is around $156 a year for 300,000 words a month (prices at the time of writing).

- Originality.ai: This tool uses a pay-as-you-go model, charging about a penny for every 100 words you check. That sounds cheap, but if you're analyzing a high volume of content, those pennies can add up to a surprisingly large and unpredictable bill each month.
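To see how per-word pricing scales, here is a rough calculator using the prices quoted above, which may have changed since writing. It assumes pay-as-you-go billing at $0.01 per 100 words, rounded up to the next 100 words, and compares it with the effective monthly cost of the flat GPTZero plans. The rounding rule and the plan table are assumptions for illustration; check each vendor's pricing page for the real terms.

```python
import math

# Prices as quoted in this article (assumed); verify against current vendor pricing.
PAY_AS_YOU_GO_CENTS_PER_100_WORDS = 1
FLAT_PLANS = {  # name: (price per year in dollars, words included per month)
    "GPTZero Essential": (100, 150_000),
    "GPTZero Premium": (156, 300_000),
}

def pay_as_you_go_monthly(words: int) -> float:
    """Monthly cost in dollars, assuming billing rounds up to the next 100 words."""
    cents = math.ceil(words / 100) * PAY_AS_YOU_GO_CENTS_PER_100_WORDS
    return cents / 100

def cheapest_flat_plan(words: int):
    """Return (name, monthly cost) of the cheapest flat plan covering `words`, or None."""
    fitting = [(price / 12, name) for name, (price, cap) in FLAT_PLANS.items()
               if words <= cap]
    if not fitting:
        return None
    monthly, name = min(fitting)
    return name, monthly

for words in (50_000, 250_000, 1_000_000):
    flat = cheapest_flat_plan(words)
    flat_text = f"{flat[0]} at ${flat[1]:.2f}/mo" if flat else "no flat plan fits"
    print(f"{words:>9,} words: pay-as-you-go ${pay_as_you_go_monthly(words):.2f}/mo, "
          f"{flat_text}")
```

At a million words a month, the penny-per-100-words rate alone comes to $100 every month, and the bill moves with whatever volume you happen to check.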

The pricing brings up another point: you're paying to check content after it's already been created, with no guarantee that the check is even accurate.

Beyond detection: AI governance is what support teams really need

Instead of just reacting to AI content, what if you could guide it from the start? That's the whole idea behind AI governance, and it’s a must-have for customer support teams where brand voice and accuracy are everything.

Trying to use a generic tool like ChatGPT for customer replies and then running it through a detector is a messy, unreliable process. A purpose-built platform just gives you more confidence and control.

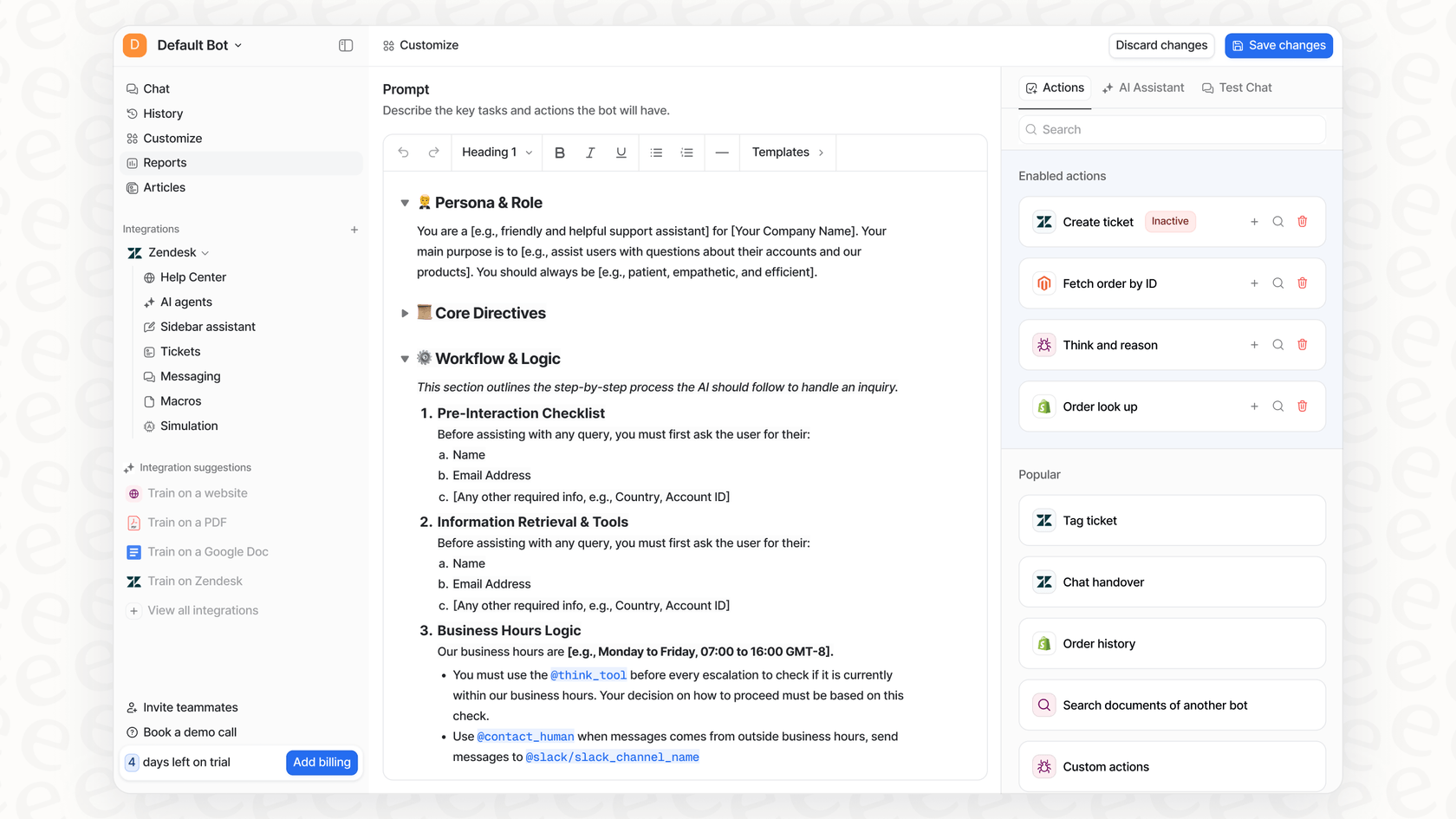

For example, a tool like eesel AI helps you sidestep the detection game entirely:

- It learns to sound just like you. Generic AI writes generic text, which is exactly what detectors are built to sniff out. eesel AI is different: it trains on your company's actual knowledge, including your past support tickets, help docs from places like Confluence or Google Docs, and internal guides, so it picks up your unique brand voice right away.

- You’re in the driver’s seat. No more guessing what your AI might say. With eesel AI's fully customizable workflow engine, you can set up the AI's persona, its tone, and exactly what it can and can't do. You can even limit its knowledge to specific topics so it doesn't go off-script and start answering questions it shouldn't.

- You can test it safely. Forget running text through a public detector and crossing your fingers. eesel AI has a simulation mode that lets you test your AI agent on thousands of your past tickets. You can see precisely how it would have replied and get solid forecasts on its performance before it ever talks to a real customer.
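The general idea behind scoping and simulation can be sketched in a few lines. To be clear, this is not eesel AI's API or configuration format: `AgentPolicy`, `draft_reply`, `simulate`, and the sample tickets are all hypothetical, and the keyword matching is a deliberately crude stand-in for real topic classification. It shows two things: a policy that restricts which topics the agent may answer (everything else is escalated to a human), and a replay over past tickets that estimates how many the agent would have handled.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class AgentPolicy:
    """Hypothetical governance settings for a support agent."""
    persona: str
    tone: str
    allowed_topics: dict[str, list[str]] = field(default_factory=dict)  # topic -> keywords

    def topic_for(self, ticket: str) -> str | None:
        """Return the first allowed topic whose keywords appear in the ticket, else None."""
        text = ticket.lower()
        for topic, keywords in self.allowed_topics.items():
            if any(kw in text for kw in keywords):
                return topic
        return None

def draft_reply(policy: AgentPolicy, ticket: str) -> str | None:
    """Answer only in-scope tickets; return None to escalate to a human."""
    topic = policy.topic_for(ticket)
    if topic is None:
        return None
    return f"[{policy.persona}, {policy.tone}] Draft answer about {topic}"

def simulate(policy: AgentPolicy, past_tickets: list[str]) -> float:
    """Replay past tickets and return the share the agent would have answered."""
    answered = sum(draft_reply(policy, t) is not None for t in past_tickets)
    return answered / len(past_tickets)

policy = AgentPolicy(
    persona="Ava from Support",  # hypothetical persona
    tone="friendly",
    allowed_topics={
        "billing": ["invoice", "refund", "charge"],
        "login": ["password", "log in", "2fa"],
    },
)
past_tickets = [
    "I was charged twice this month",
    "How do I reset my password?",
    "Can you give me legal advice about my contract?",  # out of scope: escalated
    "Where is my refund?",
]
print(f"Would have answered {simulate(policy, past_tickets):.0%} of past tickets")
```

The point of the design is that the out-of-scope question never gets an AI answer at all, and you learn the coverage rate from historical data before anything reaches a customer.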

The verdict: Detection vs. direction

So, how do AI detectors work? They scan text for predictability (perplexity) and rhythmic variety (burstiness) to make an educated guess about whether a human or a machine wrote it.

And while they’re neat pieces of tech, their unreliability, biases, and inability to keep up with smarter AI make them a shaky foundation for any business. For professionals, especially in customer support, the goal isn't just to detect AI; it's to direct it.

Take control of your customer support AI

Stop worrying about whether your AI sounds human and start making sure it sounds like your brand.

With eesel AI, you can build an autonomous AI agent that’s trained on your company’s knowledge, fits your exact workflows, and can be tested and trusted before it goes live. You can go from signing up to having a working AI agent in just a few minutes.

See the difference for yourself and start a free trial today.

Frequently asked questions

AI detectors analyze text for statistical patterns, attempting to identify differences between the predictable, smooth content generated by machines and the varied, often "messier" style of human writing. They achieve this by using machine learning models trained on vast datasets of both human- and AI-produced text.

These tools primarily look for low perplexity, which indicates predictable word choices, and low burstiness, signifying a lack of sentence length variety. These characteristics are common in AI-generated content, contrasting with the high perplexity and burstiness typically found in human writing.

Their primary limitations include frequent false positives (flagging human text as AI) and false negatives (missing AI-generated text), making them inherently unreliable. They also struggle to keep up with rapidly evolving AI models that are designed to sound more human, leading to a constant "cat-and-mouse" game.

A significant ethical concern is their potential bias against non-native English speakers. Since most detectors are trained on standard English texts, they can inaccurately flag unique sentence structures or word choices from non-native writers as AI-generated, leading to unfair assessments.

Understanding their limitations, such as inaccuracy, bias, and the reactive nature of detection, highlights why simply spotting AI isn't sufficient for business needs. Proactive AI governance allows companies to guide AI outputs from the start, ensuring they align with brand voice, accuracy, and ethical guidelines, which is more reliable than merely checking after the fact.

Businesses should realistically expect AI detectors to provide only a probability score, not definitive proof of AI authorship. Due to their inherent flaws and the rapid advancement of AI models, consistent, high accuracy cannot be guaranteed, making them a risky foundation for critical business decisions.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.