So, OpenAI just rolled out its newest and most advanced speech-to-speech model, gpt-realtime, and its Realtime API is now generally available. If you work with voice AI in any capacity, this is more than a minor update; it's a pretty big shift in how these tools work. The new model is built to make voice interactions faster, more natural-sounding, and reliable enough for actual business use.

The main idea is that we're finally moving past the slow, clunky voice processing we're all used to. Instead of chaining together different systems for speech-to-text, thinking, and then text-to-speech, gpt-realtime does it all in one go. OpenAI’s goal was to build a model for "reliability, low latency, and high quality to successfully deploy voice agents in production." For the rest of us, that just means conversations with AI might finally feel less like talking to a robot and more like talking to a person.

What is OpenAI's gpt-realtime update?

This update isn't just a new model; it's a combination of a smarter AI and a more capable API. Together, they open up some new and interesting possibilities for developers and businesses. Let’s get into what’s new and why it’s worth paying attention to.

A look at OpenAI's official introduction on its gpt-realtime update.

From clunky pipelines to seamless conversation

You know that awkward pause you get when talking to a voice assistant? That frustrating little delay before it responds? That's usually because the AI is juggling a few different tasks behind the scenes. Traditionally, it has to convert your speech into text, send that text to a language model to figure out a response, and then turn that response back into speech. Each step adds a bit of lag, creating those unnatural gaps in conversation.

The gpt-realtime model handles this differently with a direct speech-to-speech approach. It processes the audio directly, cutting out the middle steps. This drastically cuts down on latency and, just as importantly, preserves the things that make speech human (tone, emotion, and rhythm), which often get lost when everything is converted to text. The result is a conversation that flows a lot more smoothly.
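
To make the latency argument concrete, here is a rough Python sketch of a latency budget. The stage names mirror the chained pipeline described above, but every millisecond figure is a made-up placeholder for illustration, not a measurement of gpt-realtime or any other system.

```python
# Illustrative latency budget: chained pipeline vs. one speech-to-speech model.
# All millisecond values are hypothetical placeholders, not benchmarks.

CASCADED_STAGES_MS = {
    "speech_to_text": 300,
    "language_model": 500,
    "text_to_speech": 250,
}

# Hypothetical figure for a single model that takes audio in and emits audio out.
DIRECT_SPEECH_TO_SPEECH_MS = 400


def total_latency_ms(stages: dict[str, int]) -> int:
    """Stages run one after another, so their delays add up."""
    return sum(stages.values())


def describe_budget() -> str:
    """Summarize both budgets and the gap between them."""
    cascaded = total_latency_ms(CASCADED_STAGES_MS)
    saved = cascaded - DIRECT_SPEECH_TO_SPEECH_MS
    return (
        f"cascaded: {cascaded} ms, direct: {DIRECT_SPEECH_TO_SPEECH_MS} ms, "
        f"difference: {saved} ms"
    )


if __name__ == "__main__":
    print(describe_budget())
```

The point is only structural: in a chained design the delays are summed, so trimming any one stage helps less than removing the handoffs altogether.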

Key performance boosts

OpenAI didn't just make the model faster; they also made it quite a bit smarter. The improvements are mainly in three areas: intelligence, following instructions, and using tools (what they call function calling).

Here’s a quick look at the before-and-after:

| Metric | Benchmark | Previous Model (Dec 2024) | gpt-realtime (New) | What It Means |
|---|---|---|---|---|
| Intelligence | Big Bench Audio | 65.6% | 82.8% | Better reasoning |
| Instruction Following | MultiChallenge (Audio) | 20.6% | 30.5% | More precise control |
| Function Calling | ComplexFuncBench (Audio) | 49.7% | 66.5% | More reliable tool use |
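
If you want to see the size of each jump, a few lines of Python will do the arithmetic. The scores are copied from the table above; the helper name `gains` is just a label chosen for this sketch.

```python
# Scores copied from the table above: (previous model, gpt-realtime), in percent.
SCORES = {
    "Big Bench Audio": (65.6, 82.8),
    "MultiChallenge (Audio)": (20.6, 30.5),
    "ComplexFuncBench (Audio)": (49.7, 66.5),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent), rounded to 0.1."""
    points = after - before
    relative = points / before * 100
    return round(points, 1), round(relative, 1)


if __name__ == "__main__":
    for benchmark, (before, after) in SCORES.items():
        points, relative = gains(before, after)
        print(f"{benchmark}: +{points} points ({relative}% relative)")
```

Instruction following shows the smallest point gain (9.9) but the largest relative one (about 48%), simply because it starts from the lowest baseline.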

What this means in the real world is that the AI is just better at its job. Higher intelligence helps it understand complex, multi-part questions. Better instruction following means you can tell it to stick to specific brand guidelines or read a legal disclaimer word-for-word. And more accurate function calling lets it reliably connect to other tools to do things like check an order status or process a refund.
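
Here is a minimal Python sketch of what the order-status example might look like as a function call. The tool name `get_order_status`, its parameters, and the fake order table are hypothetical. The event shapes (`session.update`, `function_call_output`, `response.create`) follow the Realtime API documentation as we understand it, so double-check them against the current reference before relying on them. The code only builds JSON payloads and makes no network calls.

```python
import json

# Hypothetical tool definition; the name and parameters are made up for illustration.
ORDER_STATUS_TOOL = {
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of a customer's order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}

# Stand-in for a real order system.
FAKE_ORDERS = {"A100": "shipped", "A101": "processing"}


def session_update_event() -> dict:
    """Client event that registers the tool (field names assumed from the docs)."""
    return {
        "type": "session.update",
        "session": {
            "type": "realtime",
            "tools": [ORDER_STATUS_TOOL],
            "tool_choice": "auto",
        },
    }


def get_order_status(order_id: str) -> dict:
    """Look the order up in the fake table; unknown IDs get status 'unknown'."""
    return {"order_id": order_id, "status": FAKE_ORDERS.get(order_id, "unknown")}


def handle_function_call(name: str, call_id: str, arguments_json: str) -> list[dict]:
    """Run the requested tool and build the events that hand the result back."""
    if name == "get_order_status":
        args = json.loads(arguments_json)
        result = get_order_status(args["order_id"])
    else:
        result = {"error": f"unknown tool: {name}"}
    return [
        {
            "type": "conversation.item.create",
            "item": {
                "type": "function_call_output",
                "call_id": call_id,
                "output": json.dumps(result),
            },
        },
        # Ask the model to continue the conversation using the tool result.
        {"type": "response.create"},
    ]
```

In a real agent, `handle_function_call` would be triggered when the server reports a completed function call, and the returned events would be sent back over the same connection.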

New production-ready features

Along with the new model, the Realtime API received some important upgrades that make it suitable for serious business applications.

- SIP (Session Initiation Protocol) Support: This is a big one. SIP support lets the AI connect directly to phone networks. This means you can build AI agents that make and receive actual phone calls, which opens the door to things like fully automated phone support or appointment scheduling.

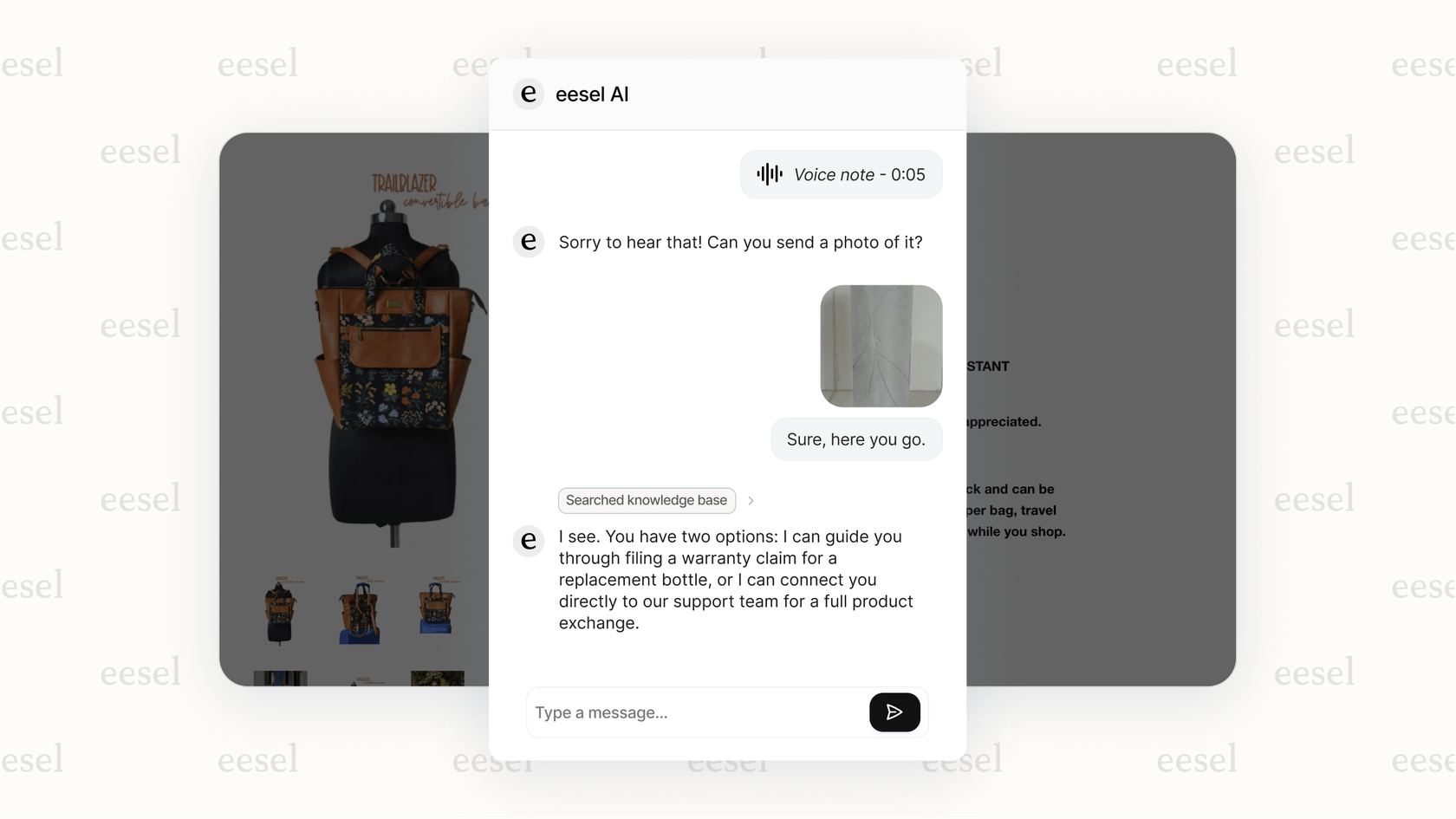

- Image Inputs: Conversations don't have to be limited to voice anymore. Users can now send images, photos, or screenshots during a voice chat. This creates a multimodal experience where a customer could, for instance, send a picture of a broken part or an error code and ask the AI for help.

- Remote MCP Server Support: This feature makes it simpler for developers to connect external tools and services. Instead of writing a bunch of custom code for every integration, you can just point the API to a server that handles tool calls. This allows your AI to access payment systems, booking platforms, or internal databases more easily.
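
Two of the features in the list above, remote MCP servers and image inputs, come down to fairly small JSON payloads. The Python sketch below builds both. The field names (`"type": "mcp"`, `server_label`, `server_url`, `require_approval`, `input_image`, `image_url`) reflect our reading of OpenAI's published examples and should be verified against the current API reference. The server label, URL, and image bytes are placeholders, and nothing is actually sent.

```python
import base64
import json


def mcp_tool(server_label: str, server_url: str) -> dict:
    """Tool entry that points the session at a remote MCP server."""
    return {
        "type": "mcp",
        "server_label": server_label,
        "server_url": server_url,
        # Assumption for this demo: skip per-call approval prompts.
        "require_approval": "never",
    }


def session_with_mcp(server_label: str, server_url: str) -> dict:
    """Client event that attaches the MCP server to the session."""
    return {
        "type": "session.update",
        "session": {
            "type": "realtime",
            "tools": [mcp_tool(server_label, server_url)],
        },
    }


def image_message(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """User message carrying an image as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [
                {
                    "type": "input_image",
                    "image_url": f"data:{mime_type};base64,{encoded}",
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder URL and bytes; neither refers to a real server or image.
    print(json.dumps(session_with_mcp("payments", "https://mcp.example.com"), indent=2))
    print(json.dumps(image_message(b"not-a-real-png"), indent=2))
```

SIP is left out here because it involves telephony setup on top of the API, which doesn't fit in a short snippet.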

Who gpt-realtime affects: The impact on customer support and developers

While the tech itself is powerful, its real impact depends on how easily businesses can actually put it to work. A raw API is a fantastic starting point for developers, but turning it into a helpful, on-brand customer support agent is a whole other challenge. This is where you see the split between using a raw API and an integrated platform.

A new era for automated customer support

There's a lot of potential for gpt-realtime to change how customer support works. It’s easy to imagine AI phone agents that sound natural, understand tricky problems, and actually solve them without putting you on hold. It’s an exciting idea, but getting there isn't as simple as plugging in an API key.

Building a production-ready voice agent from the ground up takes a lot of development time, continuous maintenance, and a solid grasp of conversational design. You have to manage the infrastructure, teach the AI about your specific business, figure out the logic for when to hand off a conversation to a human, and much more.

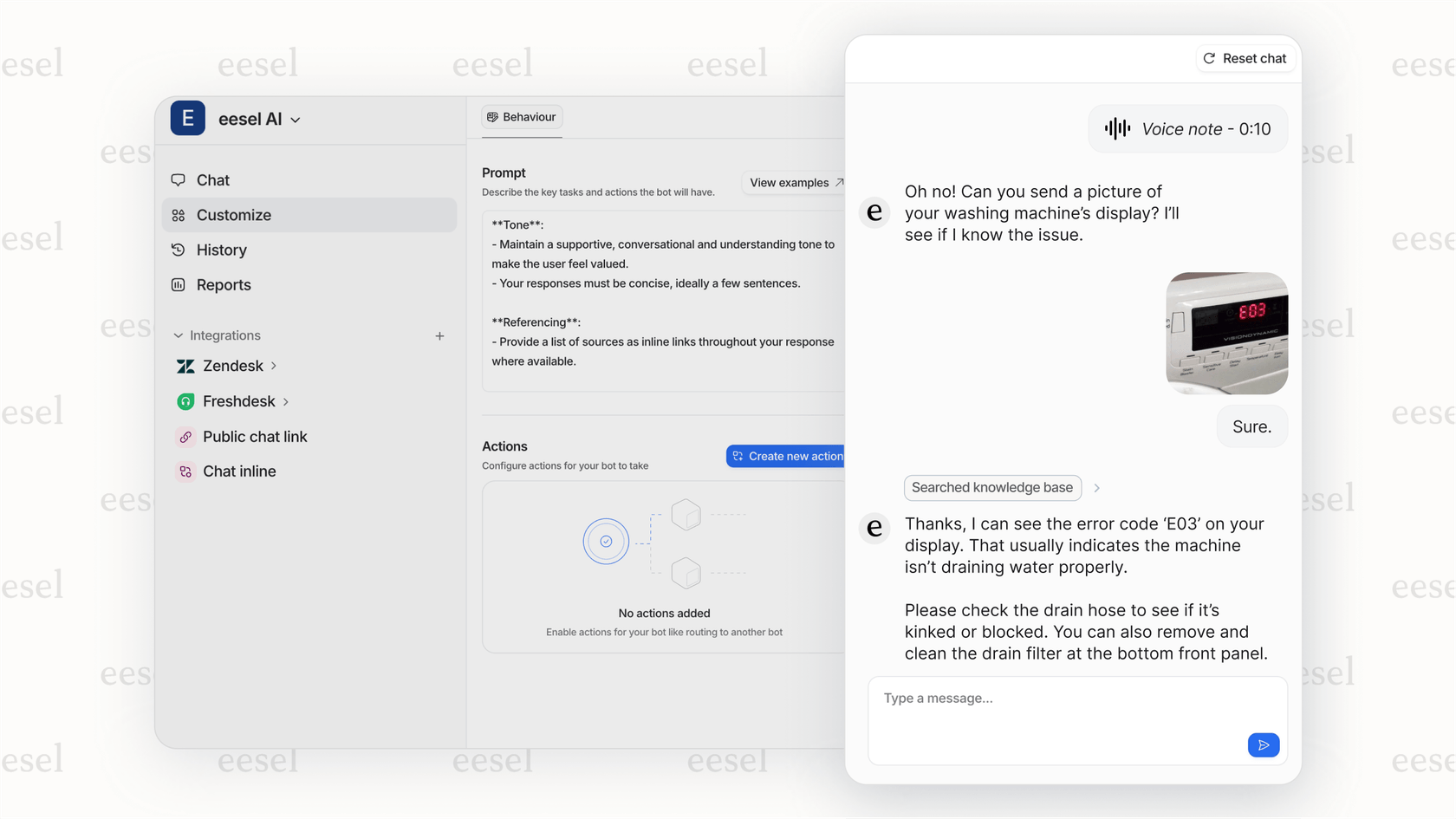

This is the gap that a platform like eesel AI is designed to fill. It uses the power of models like gpt-realtime but handles all the underlying complexity, letting you get an agent up and running in minutes instead of months.

- No "rip and replace": eesel AI integrates directly into the help desks you already use, like Zendesk, Freshdesk, and Intercom, so you don't have to migrate to a whole new system.

- True self-serve setup: You can get started and build a capable AI agent without ever having to sit through a sales demo. This is a pretty different approach compared to many competitors that require long, drawn-out onboarding.

- Risk-free simulation: One of the toughest parts of deploying AI is the uncertainty of how it will perform. eesel AI has a simulation mode that lets you test your AI on thousands of your past support tickets. You can see exactly how it would have replied, get solid forecasts on resolution rates, and feel confident before it ever interacts with a live customer.

What gpt-realtime means for developers and new apps

Outside of customer support, these advances open up some cool possibilities for developers building new voice-first applications. We’ll probably start seeing a new wave of innovation in a few areas:

- Smarter personal assistants for smart homes that are more responsive and less frustrating.

- Interactive educational tools that can adapt to a student's pace and learning style in real time.

- Better real-time translation and accessibility apps that can help close communication gaps.

What's next for gpt-realtime: Challenges and the future of voice AI

As cool as this technology is, it’s not perfect. The raw model is just one part of the equation, and there are still some hurdles to clear before voice AI becomes a seamless part of our daily lives.

Lingering gpt-realtime challenges and developer feedback

Early feedback from developers on forums like Hacker News and Reddit has pointed out some of the current limitations. For example, some users with heavy accents have mentioned that the model sometimes misidentifies the language they're speaking. It shows there's still work to do in making the tech truly robust for everyone.

There’s also an ongoing conversation in the developer community about the risks of depending on a closed-source API from a single company. While OpenAI's models are powerful, building a core piece of your business on a platform you don't control creates a level of vendor lock-in that makes some developers a bit nervous.

The future of gpt-realtime isn't just a better model; it's a better system

Think of a powerful AI model like gpt-realtime as a high-performance engine. It's an amazing piece of tech, but on its own, it can't really get you anywhere. To have a useful vehicle, you need the rest of the car: the chassis, steering wheel, brakes, and a dashboard.

In the world of AI support, platforms like eesel AI provide that complete system. The model is the engine, but eesel AI adds all the other parts that turn that raw power into something your business can actually use.

- Unified Knowledge: The smartest AI is useless if it doesn't have the right information. eesel AI connects to all your knowledge sources (your help center, past tickets, Confluence, Google Docs, and more) to give the AI the context it needs to provide accurate answers.

- Customizable Workflow Engine: You get full control over how the AI behaves. You can set its tone of voice, give it a persona, and create custom actions that let it do things like look up order details in Shopify or tag a ticket in your help desk.

- Actionable Reporting: eesel AI’s analytics dashboard does more than just track usage. It shows you where your knowledge base might have gaps and points out trends in customer issues, giving you a clear path to improving your entire support operation.

Start building with gpt-realtime today

OpenAI's gpt-realtime is a major step forward for voice AI, making it more powerful and natural than what we’ve had before. But for businesses that want to use this tech, an API key is only the first step. The real value comes from building a complete, intelligent system around the model.

Platforms like eesel AI offer a fast and safe way to implement advanced AI support. They take care of the technical heavy lifting, so you can focus on what actually matters: improving your customer experience and making your support team's life easier.

Pro Tip: If your team is looking to see what kind of impact voice AI could have, start with a tool that has a strong simulation mode. It lets you test everything on your own data and build a business case without any risk to your customers.

Ready to see what the future of voice AI can do for your business? Start your free eesel AI trial and see what's possible.

Frequently asked questions

The biggest difference is its direct speech-to-speech processing. This cuts out the intermediate steps of converting speech to text and back again, which drastically reduces lag and makes conversations feel much more natural and fluid.

While you can use the raw API, a simpler approach is to use a platform like eesel AI. These platforms handle all the technical complexity, allowing you to build and deploy a voice agent powered by the model in minutes, not months.

Yes, that's exactly what SIP support enables. By integrating with standard telephony protocols, voice agents built with the API can connect directly to phone networks to manage real calls for things like customer support or appointment scheduling.

Yes, some early developer feedback has noted challenges, like the model occasionally misidentifying the language of speakers with heavy accents. As with any new technology, there are still areas for improvement to make it robust for all users.

The Realtime API allows for multimodal input, meaning a user in a voice chat session could also send a file like a photo or screenshot. For example, a customer could send a picture of a broken part or an error code to the AI agent for faster troubleshooting.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.