Figuring out Google Gemini’s pricing can feel like solving a puzzle with half the pieces missing. With separate costs for the API, personal subscriptions, and business plans, it’s honestly a bit of a headache for teams that just want to know what they’ll actually end up paying.

If you’re trying to budget for an AI project or simply want to know which plan makes sense for you, you’ve probably noticed that the costs are scattered all over the place.

This guide is here to clear things up. We’re going to lay out a simple, complete breakdown of every bit of Gemini pricing in 2025. We'll demystify the token-based API costs, the per-user subscription fees, and everything in between. By the end, you'll know exactly which option fits your needs, whether you're a developer, a business user, or a support leader.

What is Google Gemini?

At its core, Gemini is Google's latest family of AI models. It's "multimodal," which is just a fancy way of saying it’s built to understand and work with more than just text. It can process code, images, audio, and even video, making it incredibly flexible.

To get a grip on its pricing, you first need to understand its different tiers. Each one is designed for a specific type of job and, you guessed it, comes with a different price tag.

- Gemini 2.5 Pro: This is the top-of-the-line model. It's built for heavy-duty, complex tasks that need some serious reasoning power, like writing advanced code, doing deep analysis, or generating long articles.
- Gemini 2.5 Flash: Think of this as the balanced, everyday workhorse model. It’s tuned for speed and cost-efficiency, making it a great all-rounder for most common business tasks.
- Gemini 2.5 Flash-Lite: This is the most budget-friendly and fastest model in the lineup. It’s designed for situations where you need to handle a huge volume of tasks quickly without running up a massive bill.

You can get your hands on these models in three main ways: through the API for building your own stuff, as part of a subscription plan for business or personal use, or through special tools for developers. Each route has its own pricing structure, which we’ll dive into right now.

Gemini pricing for developers: The API model

For developers and businesses looking to build custom applications on top of Gemini, the API offers the most control and power. It runs on a pay-as-you-go model, which is fantastic for scaling up but can also lead to some surprisingly high bills if you're not keeping a close eye on things.

Understanding how token costs work in Gemini pricing

Before we jump into the numbers, we need to talk about "tokens." In the world of AI models, a token is basically a piece of a word. For example, the word "pricing" might be split into two tokens, "pric" and "ing." You get charged for the number of tokens you send to the model (your input) and the number of tokens the model sends back (its output).

Gemini's API pricing is usually listed per one million tokens. To make things a little more confusing, newer models like Gemini 2.5 also generate "thinking tokens" during their background reasoning, and those are billed at the output rate. Because the number of thinking tokens varies from request to request, it's tricky to forecast your budget with 100% accuracy.
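To make the arithmetic concrete, here's a minimal Python sketch of how a single request's bill adds up. It assumes thinking tokens are billed at the output rate, as described above, and that you already know the token counts for the call. The function name `request_cost` and the token counts in the example are hypothetical, made up for illustration; the rates are the Gemini 2.5 Flash text rates from the pricing table below.

```python
def request_cost(input_tokens: int, output_tokens: int, thinking_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate the USD cost of one API call from token counts and per-1M rates."""
    # Thinking tokens are billed like output tokens, so price them together.
    billed_output = output_tokens + thinking_tokens
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = billed_output / 1_000_000 * output_price_per_m
    return input_cost + output_cost


# Hypothetical call at Gemini 2.5 Flash text rates ($0.30 in, $2.50 out per 1M tokens).
cost = request_cost(input_tokens=2_000, output_tokens=500, thinking_tokens=1_500,
                    input_price_per_m=0.30, output_price_per_m=2.50)
print(f"${cost:.4f}")  # prints $0.0056
```

With these made-up numbers, the 1,500 thinking tokens cost three times as much as the 500-token visible answer, which is exactly why output spend is hard to predict.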

API pricing tiers

Here’s a straightforward breakdown of the costs for the main API models so you can see how they stack up.

| Model | Input Price (per 1M tokens) | Output Price (per 1M tokens, incl. thinking tokens) | Best For |
|---|---|---|---|
| Gemini 2.5 Pro | $1.25 (prompts ≤200k tokens), $2.50 (prompts >200k tokens) | $10.00 (prompts ≤200k tokens), $15.00 (prompts >200k tokens) | Complex reasoning, coding, long-form content |
| Gemini 2.5 Flash | $0.30 (text/image/video), $1.00 (audio) | $2.50 | High-volume tasks needing speed and quality |
| Gemini 2.5 Flash-Lite | $0.10 (text/image/video), $0.30 (audio) | $0.40 | At-scale, cost-sensitive, and low-latency tasks |
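If you want to compare the models side by side, the table can be turned into a small cost estimator. This is a Python sketch, not an official calculator: the model keys, the `Rates` class, and `estimate_cost` are our own naming, it only covers the text/image/video input rates, and it assumes the 200k threshold for Gemini 2.5 Pro is measured on the prompt (input) size and switches both the input and output rate for that request.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rates:
    input_per_m: float                         # USD per 1M input tokens (text/image/video)
    output_per_m: float                        # USD per 1M output tokens, thinking included
    long_input_per_m: Optional[float] = None   # input rate once the prompt exceeds the threshold
    long_output_per_m: Optional[float] = None  # output rate once the prompt exceeds the threshold
    long_prompt_threshold: int = 200_000


# Rates copied from the pricing table; the model keys are illustrative labels.
PRICES = {
    "gemini-2.5-pro": Rates(1.25, 10.00, long_input_per_m=2.50, long_output_per_m=15.00),
    "gemini-2.5-flash": Rates(0.30, 2.50),
    "gemini-2.5-flash-lite": Rates(0.10, 0.40),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request, applying long-prompt rates where a model has them."""
    r = PRICES[model]
    long_prompt = r.long_input_per_m is not None and input_tokens > r.long_prompt_threshold
    in_rate = r.long_input_per_m if long_prompt else r.input_per_m
    out_rate = r.long_output_per_m if long_prompt else r.output_per_m
    return input_tokens / 1_000_000 * in_rate + output_tokens / 1_000_000 * out_rate


# Hypothetical workload: 10,000 requests of 3,000 input and 800 output tokens each.
for name in PRICES:
    total = 10_000 * estimate_cost(name, 3_000, 800)
    print(f"{name}: ${total:,.2f}")
```

With this made-up workload, Pro comes to about $117.50, Flash to $29.00, and Flash-Lite to $6.20, a spread of nearly 19x for the same traffic.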

Other factors affecting API costs

On top of those per-token rates, a few other features can sneak onto your final bill:

- Context Caching: This lets you store and reuse parts of your prompts to save on input costs, but it comes with its own small storage fee.
- Grounding with Google Search: This allows the model to pull in live information from the web. You get some free requests each day, but after you hit the limit, it costs $35 for every 1,000 requests.
- Batch Mode: If your tasks aren't urgent, you can process them in batches and get a 50% discount.
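The grounding and batch adjustments are simple enough to sketch too. This Python is illustrative only: it assumes grounding overage is prorated per request at $35 per 1,000, and that the batch discount is a flat 50% off the standard token cost. The daily free allowance is left as a parameter; the `1_000` in the example is a hypothetical placeholder, so check Google's pricing page for the real figure. Context caching is left out because its storage fee isn't quoted above.

```python
def grounding_cost(requests: int, free_requests: int, price_per_1000: float = 35.0) -> float:
    """USD cost of Grounding with Google Search for one day's requests.

    Only requests beyond the free daily allowance are billed; the overage is
    prorated per request (an assumption for this sketch).
    """
    billable = max(0, requests - free_requests)
    return billable / 1000 * price_per_1000


def batch_cost(standard_cost: float, discount: float = 0.50) -> float:
    """Token cost of a job run in Batch Mode, given its standard (real-time) cost."""
    return standard_cost * (1 - discount)


FREE_GROUNDING_REQUESTS = 1_000  # hypothetical placeholder; check Google's pricing page

print(grounding_cost(3_000, FREE_GROUNDING_REQUESTS))  # 2,000 billable requests -> 70.0
print(batch_cost(29.00))                                # half of $29.00 -> 14.5
```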

This level of complexity is a real challenge for teams that need predictable budgets. While developers might be able to juggle these features to optimize costs, support teams just need a solution that works without all the hassle. Instead of forcing you to manage token consumption, platforms like eesel AI offer simple, interaction-based plans. You get all the power of models like Gemini without the billing surprises, because there are no per-resolution fees.

Gemini pricing: Subscription plans for business and individuals

If you're not trying to build a custom app from scratch, Google's subscription plans are a much simpler way to get access to Gemini. These plans have a fixed cost per user each month, making them way easier to budget for.

Google Workspace plans

Google has started weaving Gemini features directly into its Google Workspace plans for businesses. The idea is to give your internal teams an AI boost inside the tools they already use every day.

The pricing is pretty simple, billed per user, per month:

- Business Starter: $8.40/user/month
- Business Standard: $16.80/user/month
- Business Plus: $26.40/user/month

With these plans, your team gets AI help right inside Gmail, Docs, Sheets, and Meet for things like drafting emails, summarizing meetings, and creating presentations.
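Because Workspace is billed per seat, budgeting is plain multiplication. Here's a quick Python sketch for a hypothetical 25-person team, assuming the monthly per-user prices listed above with no taxes, discounts, or seat changes during the year.

```python
WORKSPACE_PER_USER_MONTHLY = {
    "Business Starter": 8.40,
    "Business Standard": 16.80,
    "Business Plus": 26.40,
}


def workspace_cost(plan: str, users: int, months: int = 12) -> float:
    """Total USD for `users` seats on `plan` over `months` months, rounded to cents."""
    return round(WORKSPACE_PER_USER_MONTHLY[plan] * users * months, 2)


for plan in WORKSPACE_PER_USER_MONTHLY:
    print(f"{plan}: ${workspace_cost(plan, users=25):,.2f} per year")
```

For 25 users, that works out to $2,520.00, $5,040.00, and $7,920.00 per year respectively. Rounding to cents keeps floating-point noise out of the totals.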

These are great for making your internal employees more productive. But they’re designed to assist your team, not automate their work. For customer-facing automation that actually resolves support tickets without an agent ever touching them, you need a different kind of tool. eesel AI plugs right into your helpdesk to provide an autonomous AI Agent, which is something a standard Workspace license just can’t do.

For individuals: The Google One AI Pro plan

For solo users, freelancers, or anyone who just wants a powerful AI assistant, Google offers the Google One AI Pro plan.

- Cost: $19.99/month
- Features: This plan gives you access to the top-tier Gemini 2.5 Pro model, plus 2 TB of Google Drive storage and other perks from Google One.

It’s worth noting that this plan is tied to a personal Google account, so it doesn’t have the admin controls, security features, or team collaboration tools that businesses rely on. It’s a solid choice for personal use, but not really cut out for a team environment.

For developers and ops: Code Assist

For the more technical folks, Google has Gemini Code Assist, a separate subscription built to speed up the whole software development process.

- Standard Plan: $19/user/month (with an annual commitment)
- Enterprise Plan: $45/user/month (with an annual commitment)

This tool gives developers AI-powered code suggestions, chat help, and other features right inside their coding environment. The pricing is predictable, but it’s an add-on cost that’s focused squarely on developer productivity, not on powering the AI features in your final product.
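Since both Code Assist tiers are quoted with an annual commitment, the useful number is the full-year spend for your team. A minimal Python sketch, assuming the commitment is simply the monthly rate times 12 per developer (no taxes or volume discounts) and a hypothetical team of 10:

```python
CODE_ASSIST_PER_USER_MONTHLY = {"Standard": 19.00, "Enterprise": 45.00}
COMMITMENT_MONTHS = 12  # both tiers are quoted with an annual commitment


def committed_spend(plan: str, developers: int) -> float:
    """Total USD committed for one year of seats on the given plan."""
    return CODE_ASSIST_PER_USER_MONTHLY[plan] * developers * COMMITMENT_MONTHS


for plan in CODE_ASSIST_PER_USER_MONTHLY:
    print(f"{plan}: ${committed_spend(plan, 10):,.2f}")

upgrade = committed_spend("Enterprise", 10) - committed_spend("Standard", 10)
print(f"Enterprise premium for 10 developers: ${upgrade:,.2f}")
```

For 10 developers, that's $2,280.00 a year on Standard versus $5,400.00 on Enterprise, a $3,120.00 difference.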

A complete Gemini pricing comparison

With all these different options, it can still be tough to see which one is the right fit. This table puts all the Gemini pricing models in one place to help you decide.

| Plan/Product | Target User | Pricing Model | Price | Key Use Case |
|---|---|---|---|---|
| Gemini API | Developers, Businesses | Pay-as-you-go (per token) | Varies ($0.10 - $15.00 / 1M tokens) | Building custom AI applications |
| Google Workspace | Businesses, Teams | Per user, per month | $8.40 - $26.40+ | Internal productivity and collaboration |
| Google One AI Pro | Individuals, Power Users | Per user, per month | $19.99 | Personal AI assistant and cloud storage |
| Gemini Code Assist | Developers, IT Ops | Per user, per month | $19 - $45 | Speeding up software development |


As you can see, Google's pricing really makes you choose between a complicated, variable API model or a fixed-price license for internal tools. Neither one is really built for automating frontline support. eesel AI fills that gap by offering a platform designed specifically for customer service with simple, predictable pricing. You can go live in minutes, not months, without needing a team of developers just to manage API costs.

How to choose the right Gemini pricing plan

Google's Gemini pricing is split up for different kinds of users, and the right choice really just boils down to your goal.

- Use the API if you're building a custom application from the ground up and need all the flexibility you can get.
- Choose Google Workspace to give your team's internal productivity a boost within the Google ecosystem.
- Go for Google One if you want a powerful personal AI assistant for yourself.

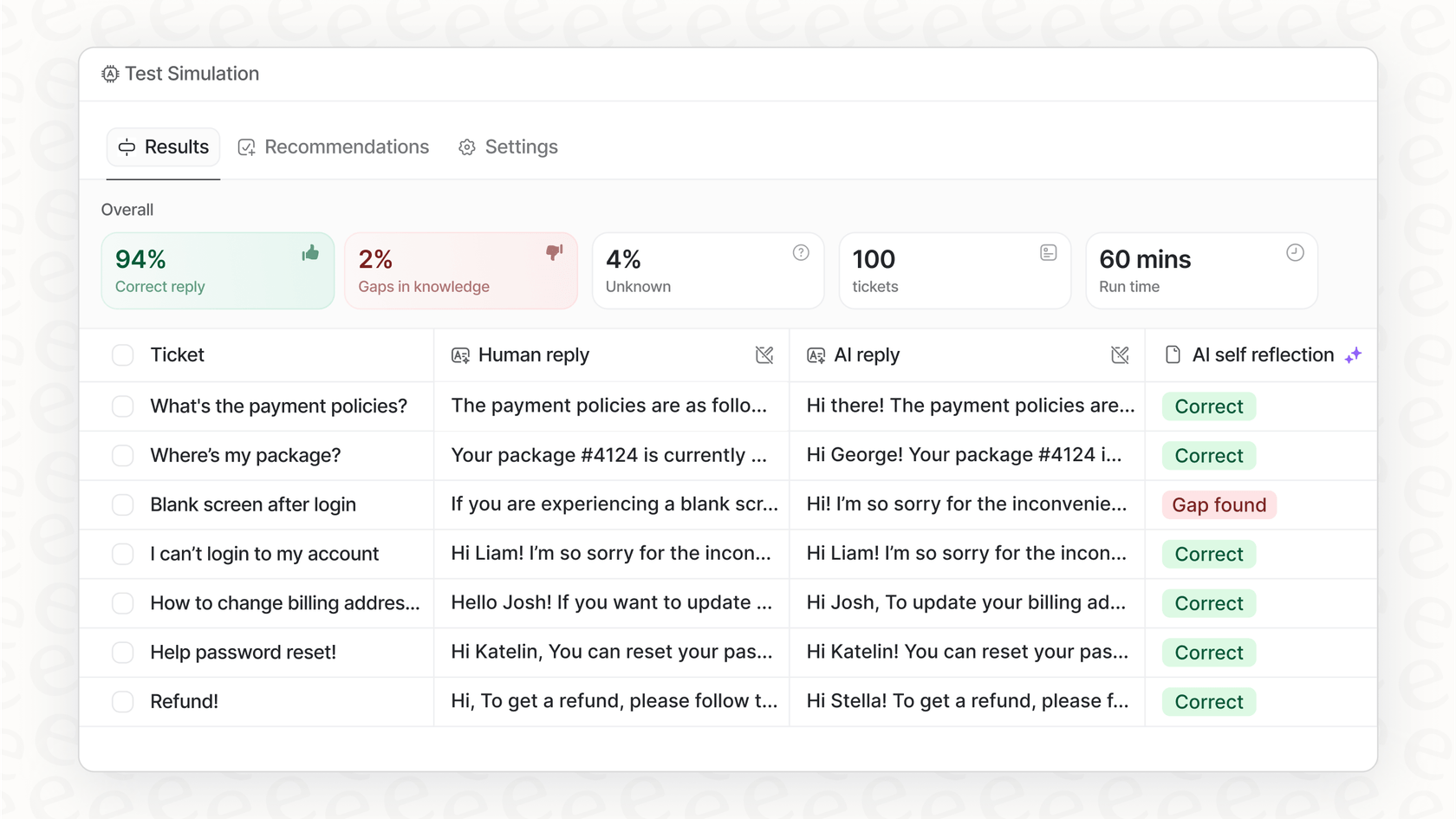

But for support, ITSM, and internal help desk teams, wrestling with Gemini's pricing and setup can be a major distraction from the main goal: solving problems for users faster. eesel AI offers a much smarter path. Our platform pulls together all your knowledge sources and plugs into your existing helpdesk to automate support right away. With our powerful simulation mode, you can see your exact ROI before you even commit to anything.

Try eesel AI for free and see just how simple and predictable AI for support can be.

Frequently asked questions

Gemini pricing varies depending on how you access the models. It's structured around pay-as-you-go API costs for developers, fixed monthly subscriptions for business and individual users, and specific plans for coding assistance.

For API users, Gemini pricing is based on tokens, which are small pieces of words. You're charged for both input (what you send) and output (what the model returns), including "thinking tokens" for newer models. This token-based system can make budgeting tricky without careful monitoring.

Gemini pricing for API models varies significantly. Gemini 2.5 Pro is the most expensive, suited for complex tasks, while Flash offers a balance of speed and cost. Flash-Lite is the most budget-friendly and fastest, designed for high-volume, low-latency tasks.

Subscription plans, such as Google Workspace, offer a fixed monthly cost per user for integrated AI features, making them predictable for budgeting. The API, however, uses a variable pay-as-you-go model based on token consumption, which can fluctuate depending on usage.

For individuals, Gemini pricing through the Google One AI Pro plan is a flat $19.99/month. This subscription provides access to the top-tier Gemini 2.5 Pro model, along with 2 TB of Google Drive storage and other Google One benefits.

While Google offers some fixed-price subscriptions for internal productivity and developer tools, the underlying API Gemini pricing can be highly variable due to token consumption. For predictable support automation without managing tokens, platforms like eesel AI offer interaction-based plans.


Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.