Let's be real: AI is everywhere in customer service now. It's no longer some futuristic concept; it's helping your team answer tickets, automate tedious tasks, and, hopefully, make customers happier. But as we all rush to adopt these new tools, there's a huge elephant in the room: customer trust.

A recent IAPP survey found that a whopping 57% of consumers see AI as a major threat to their privacy. That stat can be a little jarring, right? It puts support leaders in a tough spot. You need to innovate and use AI to keep up, but you can't afford to freak out the very customers you're trying to help.

So, how do you find that sweet spot between AI-powered efficiency and the growing demand for data privacy? That's the big question for 2025, and this guide is here to walk you through it. We'll break down the essential data privacy updates for AI in customer service in 2025, get into the nitty-gritty of what customers are worried about, and give you a practical game plan for building an AI strategy that is both compliant and trustworthy.

Defining AI data privacy in customer service

At its core, AI data privacy in customer service is all about keeping your customers' information safe and secure when you're using AI tools. And we're not just talking about the basics like names and email addresses. It covers every single piece of data an AI might interact with: full conversation histories, what they've bought in the past, and even the personal feedback they share with you.

A few years ago, this mostly meant making sure your help desk or CRM was locked down. But with generative AI arriving on the scene, the playing field has gotten way bigger and a lot more complicated. Now, privacy also covers how your customer data is used to train AI models, how AI-generated responses are created, and whether you're being open and honest about the whole process.

The thing is, for AI tools to be truly helpful, they often need to learn from huge amounts of your historical support data. This is what makes them smart, but it also raises the stakes. If that data isn't handled correctly, the potential for misuse or a breach is massive, and the fallout can be devastating. According to the Cisco 2025 Data Privacy Benchmark Study, 95% of customers said they wouldn't buy from a company if they didn't feel their data was protected. That's not a number you can ignore. Privacy isn't just a legal checkbox anymore; it's a fundamental part of the customer experience.

The 2025 legal landscape: Key regulatory updates

Trying to keep up with the tangled web of AI and privacy laws can feel like a full-time job. In 2025, several major regulations are kicking into high gear, and customer service teams are right on the front lines, whether they know it or not. The National Conference of State Legislatures reports that at least 45 states introduced over 550 AI-related bills in the last legislative session alone. Staying on top of this isn't just a good idea; it's a must.

The EU AI Act and GDPR

The European Union has been setting the global standard for a while, and its approach to AI is no different. It's basically a two-pronged strategy.

First, there's the EU AI Act. It uses a risk-based system, and most AI tools used in customer service (like your average chatbot) will probably be classified as "limited-risk." This sounds fine, but it comes with a big string attached: you have to be crystal clear with your users that they're talking to an AI, not a human. No more pretending your bot is a person named "Brenda."

At the same time, the General Data Protection Regulation (GDPR) still governs any personal data these AI systems touch. Core GDPR principles you're likely familiar with now apply directly to how AI models are trained and used. These include collecting only the data you absolutely need (data minimization) and using it only for the purposes you disclosed (purpose limitation). This also shines a spotlight on data residency. GDPR doesn't flatly ban moving EU customers' data abroad, but transfers outside the EU/EEA need a legal mechanism such as an adequacy decision or Standard Contractual Clauses. Keeping that data within the EU is the simplest way to avoid those transfer questions. That's why an AI solution that offers EU data residency, like eesel AI, isn't just a nice-to-have; it can make GDPR compliance much more straightforward.
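Data minimization is easier to enforce when it lives in code rather than only in a policy document. The sketch below is a minimal illustration that assumes a hypothetical `Ticket` record and an assumed allow-list of the fields your AI agent actually needs. It also masks email addresses in the message body with a simple regex. None of these names come from GDPR or from any vendor's API, and a single regex is not a complete PII filter.

```python
import re
from dataclasses import dataclass

# Hypothetical ticket record; real helpdesk exports will have different fields.
@dataclass
class Ticket:
    ticket_id: str
    subject: str
    body: str
    customer_email: str
    customer_phone: str
    billing_address: str

# Assumption: these are the only fields the AI needs to draft a reply.
FIELDS_NEEDED_FOR_AI = ("ticket_id", "subject", "body")

# Simple email pattern; illustrative only, not an exhaustive PII detector.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def minimize(ticket: Ticket) -> dict[str, str]:
    """Keep only allow-listed fields and mask email addresses in the body."""
    payload = {name: getattr(ticket, name) for name in FIELDS_NEEDED_FOR_AI}
    payload["body"] = EMAIL_RE.sub("[email removed]", payload["body"])
    return payload

ticket = Ticket(
    ticket_id="T-1001",
    subject="Refund request",
    body="Please send the refund confirmation to jane@example.com",
    customer_email="jane@example.com",
    customer_phone="555-0100",
    billing_address="1 Main St",
)
print(minimize(ticket))
```

Allow-listing the fields the AI may see, rather than block-listing the ones you know are sensitive, means any field added to your helpdesk later stays out of the AI payload by default.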

U.S. state privacy laws

While the U.S. still lacks a comprehensive federal privacy law, a growing number of states are taking matters into their own hands. In 2025, new privacy laws are going into effect in places like Delaware, Iowa, New Hampshire, New Jersey, and Nebraska. They're joining the ranks of states with established regulations, like California, Colorado, and Virginia.

For customer service teams, this patchwork of laws can be a headache, but they generally boil down to three key themes you need to watch for:

- Broader definitions of "sensitive data." This often requires you to get explicit, opt-in consent from a customer before you can process certain types of information.
- The right for consumers to opt out. Customers are getting more rights to say "no" to their data being used for profiling or in automated decision-making processes.
- Mandatory data protection assessments. You may be required to conduct formal risk assessments for "high-risk" activities, and deploying certain types of AI can easily fall into that category.
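The opt-in and opt-out rules above translate naturally into a gate that runs before any automated step. Here is a minimal sketch. It assumes you already store a per-customer consent record and have some way to tag which sensitive-data categories appear in a ticket. `ConsentRecord`, `can_automate`, and the category names are hypothetical, and what counts as sensitive differs from state to state.

```python
from dataclasses import dataclass

# Hypothetical per-customer consent record; field names are illustrative only.
@dataclass(frozen=True)
class ConsentRecord:
    opted_out_of_profiling: bool = False
    # Sensitive-data categories the customer has explicitly opted in to.
    sensitive_opt_ins: frozenset[str] = frozenset()

def can_automate(consent: ConsentRecord, sensitive_categories: set[str]) -> bool:
    """Return True only if an AI may process this ticket without a human."""
    # Honor an opt-out from profiling / automated decision-making first.
    if consent.opted_out_of_profiling:
        return False
    # Every sensitive category found in the ticket needs an explicit opt-in.
    return sensitive_categories <= consent.sensitive_opt_ins

print(can_automate(ConsentRecord(), {"health"}))  # prints False
```

When a category has no recorded opt-in, the gate defaults to "not allowed." That keeps the failure mode conservative: the ticket goes to a person instead of the model.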

Here’s a quick look at how some of these states compare:

| U.S. State | Right to Opt Out of Profiling | Data Protection Assessment Required | Examples of Sensitive Data |
|---|---|---|---|
| California (CPRA) | Yes | Yes | Precise geolocation, genetic data, biometric information. |
| Colorado (CPA) | Yes | Yes | Data revealing racial or ethnic origin, religious beliefs, health diagnosis. |
| Virginia (CDPA) | Yes | Yes | Data revealing racial or ethnic origin, religious beliefs, health diagnosis. |
| Delaware (DPDPA) | Yes | Yes | Status as transgender or nonbinary, data of a known child. |

Just a heads-up, this table is a simplified overview. You'll want to consult the specific state laws for all the details.

Global data privacy trends

This isn't just a U.S. and EU issue. Other countries are getting in on the action, too. Canada has proposed its Artificial Intelligence and Data Act, and the U.K. is carving out its own regulatory path. The message is clear: governments all over the world are moving to put guardrails on AI. A rigid, one-size-fits-all compliance checklist isn't going to cut it. You need a strategy that can adapt.

Top consumer privacy concerns

Getting the legal stuff right is one half of the equation. The other half, which is arguably more important, is earning and keeping your customers' trust. To do that, you first need to understand what they're actually afraid of when it comes to AI. According to the Pew Research Center, a staggering 81% of people are worried companies will use their data in ways they're not comfortable with. When you throw AI into that mix, that fear gets dialed up to eleven.

"Where is my data going?": A core consumer concern

This is probably the biggest fear for most customers. They imagine their private support chats about a sensitive issue being fed into some giant, faceless AI model that hundreds of other companies can learn from. And honestly, it’s a valid concern. Many AI vendors are frustratingly vague about their data handling policies. A user on a Microsoft Q&A forum expressed confusion over a 30-day data retention policy for abuse monitoring, which just goes to show how a simple lack of clarity can quickly destroy trust.

This is a problem that modern AI platforms have to solve head-on, and some tools, like eesel AI, are built specifically to address it. They guarantee that your data is never used to train general models for anyone else. It's used exclusively to power your company's AI agents, ensuring your business context and your customers' private data stay completely siloed and secure.

"Is my data safe?": A key security question

AI systems create a new, shiny, and very tempting target for hackers. The average cost of a data breach has now climbed to $4.88 million, according to IBM. A breach at a single AI vendor could instantly expose sensitive customer data from hundreds or even thousands of their clients.

This makes your choice of vendor incredibly important. You have to do your homework and partner with companies that take security seriously. That means looking for strong encryption, SOC 2 reports covering both the vendor and its subprocessors, and clear, robust security protocols you can actually read and understand.

"Is this fair?": Addressing algorithmic bias

Another common worry is that an AI might be unfair. Customers are concerned that an algorithm could deny their valid refund request or offer them a smaller discount based on hidden biases lurking in the training data. These "black box" AI models, where it's nearly impossible to understand why a particular decision was made, are a huge problem. It's not just a trust issue; it could also land you in legal hot water under regulations like GDPR. GDPR restricts solely automated decisions that significantly affect people and requires giving them meaningful information about the logic involved (often described as a "right to an explanation").

How to implement a privacy-first AI strategy

Building trust isn't about slapping a lengthy privacy policy on your website and calling it a day. It's about the choices you make every day, from the tools you pick to the workflows you design. Here’s a simple framework to help you put privacy at the heart of your AI strategy.

Choose a transparent vendor: A non-negotiable step

Think of your AI vendor as your partner in privacy. You need to choose one you can trust completely.

-

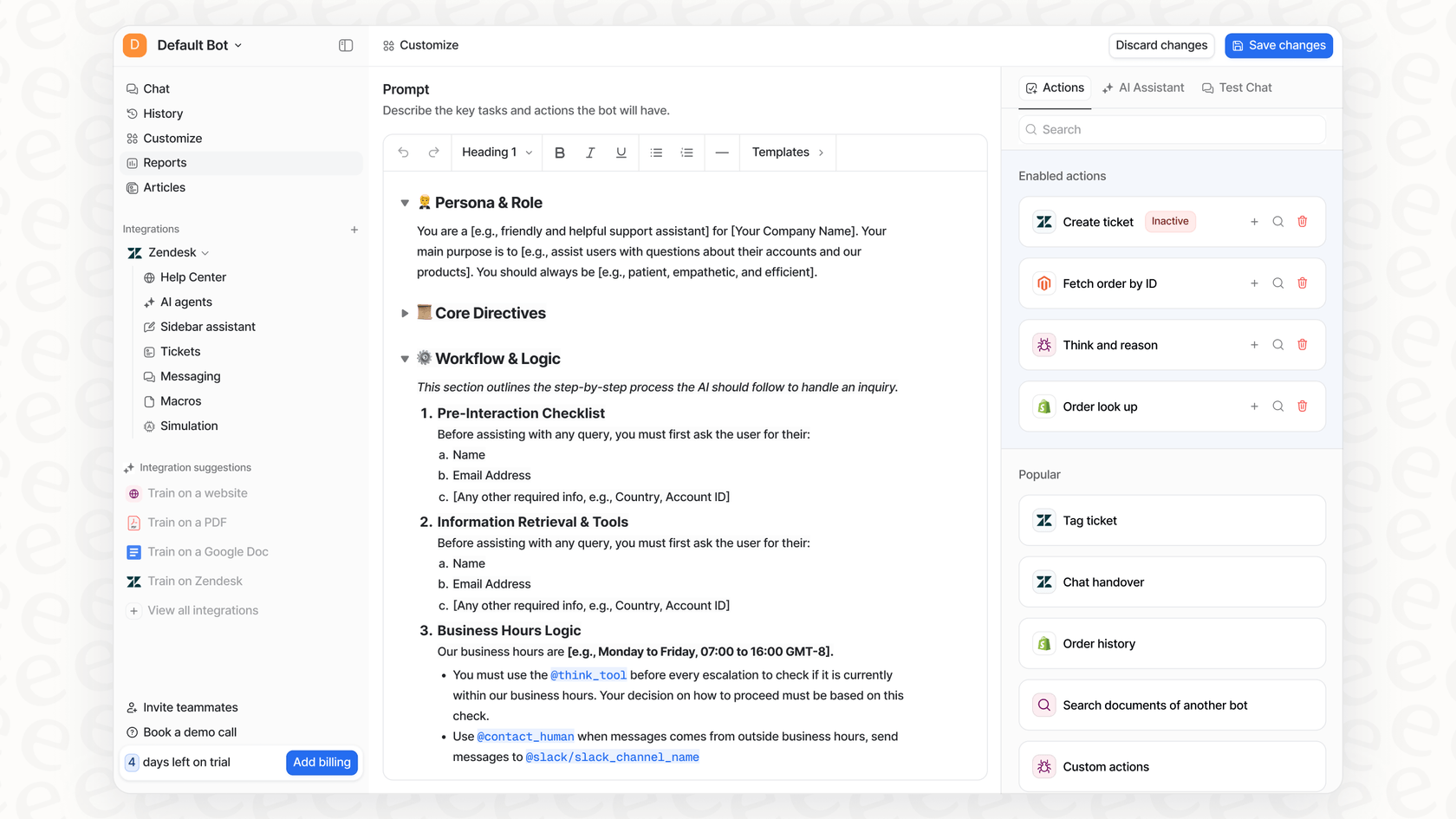

Look for a self-serve platform. Platforms that force you into long sales calls and demos just create more opportunities for your data to be handled by more people. A self-serve tool like eesel AI lets you connect your helpdesk and get an AI agent running in minutes, all by yourself. You control the process from start to finish.

-

Insist on data isolation. Your vendor needs to give you a contractual guarantee that your customer data will never be used to train models for other companies. This should be a non-negotiable.

-

Check their security credentials. Don't just take their word for it. Verify that they use SOC 2 Type II-certified subprocessors and offer essential features like EU data residency if you have customers there.

Maintain human oversight: A critical requirement

A lot of AI tools out there promise "full automation" right out of the box. While that sounds great, it can be a risky approach. It removes human judgment from the equation, which is especially dangerous when dealing with sensitive customer issues.

A much smarter approach is to use AI that allows for selective automation. A platform like eesel AI gives you a powerful workflow engine to decide exactly which types of tickets the AI should handle. You can easily create rules to automatically escalate any conversation that contains sensitive information (like a credit card number) or deals with a complex issue straight to a human agent. This gives you the best of both worlds: efficiency and complete control.
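The escalation rule described above (send anything containing a credit card number to a human) can be sketched in a vendor-neutral way. This is not eesel AI's workflow engine or API. It is a minimal illustration that assumes tickets arrive as plain text. It flags any run of 13 to 19 digits that passes the Luhn checksum used by payment card numbers. The `route_ticket` function and its "human"/"ai" labels are hypothetical.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        n = int(char)
        if position % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0

def contains_card_number(text: str) -> bool:
    """True if any digit run in the text looks like a valid card number."""
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            return True
    return False

def route_ticket(text: str) -> str:
    """Hypothetical routing rule: card numbers always go to a human agent."""
    return "human" if contains_card_number(text) else "ai"

print(route_ticket("My card 4111 1111 1111 1111 was charged twice"))  # human
print(route_ticket("Where is order 12345?"))  # ai
```

The Luhn check cuts down false positives from order or phone numbers. However, pattern matching alone will miss card numbers typed in unusual formats, so treat a rule like this as one layer of protection rather than the whole thing.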

Test before you go live

Would you launch a new feature for your customers without testing it first? Of course not. The same rule should apply to your AI. Deploying a new AI bot without thorough testing is a surefire way to create privacy blunders and terrible customer experiences.

A much better way is to use a simulation environment. For example, eesel AI has a simulation mode that lets you test your AI setup on thousands of your own historical tickets in a completely safe, sandboxed environment. You can see exactly how it would have responded, spot any gaps in its knowledge, and get a forecast of its performance before a single customer ever interacts with it. This lets you launch with confidence, knowing your AI is both helpful and safe from day one.
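The general idea behind a simulation run can be shown without any particular product. You replay past tickets through an agent offline, measure how many it would have answered, and list the ones it could not handle as knowledge gaps. The sketch below assumes a hypothetical `Agent` callable that returns a draft reply, or `None` when it would escalate. `keyword_agent` is a toy placeholder, not how eesel AI's simulation mode or a real model works, and nothing in it reaches a customer.

```python
from typing import Callable, Optional

# A hypothetical agent: returns a draft reply, or None if it would escalate.
Agent = Callable[[str], Optional[str]]

def keyword_agent(knowledge: dict[str, str]) -> Agent:
    """Toy stand-in for an AI agent that answers from a keyword lookup."""
    def agent(question: str) -> Optional[str]:
        lowered = question.lower()
        for keyword, answer in knowledge.items():
            if keyword in lowered:
                return answer
        return None
    return agent

def simulate(past_questions: list[str], agent: Agent) -> dict:
    """Replay historical tickets offline and report coverage and gaps."""
    drafts: list[tuple[str, str]] = []
    gaps: list[str] = []
    for question in past_questions:
        draft = agent(question)
        if draft is None:
            gaps.append(question)  # likely knowledge gap to fix before launch
        else:
            drafts.append((question, draft))
    coverage = len(drafts) / len(past_questions) if past_questions else 0.0
    return {"coverage": coverage, "drafts": drafts, "gaps": gaps}

history = [
    "How do I get a refund?",
    "I forgot my password",
    "Can I change my billing address?",
]
agent = keyword_agent({
    "refund": "Refunds are issued to the original payment method.",
    "password": "You can reset it from the login page.",
})
report = simulate(history, agent)
print(f"coverage: {report['coverage']:.0%}")
print("gaps:", report["gaps"])
```

In practice you would also compare the drafts against the replies your human agents actually sent. Even so, a coverage number and a gap list like these show where your knowledge base needs work before launch.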

Privacy is your competitive advantage

The world of data privacy and AI in customer service is definitely getting more complex in 2025. It's easy to look at all these new rules and regulations and see them as a roadblock to innovation. But that’s the wrong way to think about it. This is a massive opportunity to build deeper, more meaningful trust with your customers.

By taking the time to understand the legal landscape, listening to your customers' concerns, and choosing tools that are built with transparency and control in mind, you can build an AI strategy that's not just efficient, but also deeply trustworthy. In today's world, protecting customer data isn't just about avoiding fines; it's the very foundation of a modern, resilient, and successful customer experience.

Ready to build an AI support system that's both powerful and private? eesel AI lets you go live in minutes, not months, while giving you total control over your data and workflows. Try it for free today or book a demo to see how our simulation mode can de-risk your entire AI implementation.

Frequently asked questions

What are the most significant data privacy updates for AI in customer service in 2025, and what should I prioritize?

The most significant updates include the EU AI Act's transparency requirements, continued GDPR application to AI data, and new U.S. state privacy laws broadening "sensitive data" definitions and requiring consent. Prioritize transparency with customers, data minimization, and understanding data residency requirements.

How will these updates affect how my support team uses AI?

These updates will demand greater transparency, requiring clear disclosure that customers are interacting with AI. They also necessitate stricter controls over how historical customer data is used to train AI models and mandate explicit consent for processing sensitive information.

What should I look for in a privacy-compliant AI vendor?

Look for vendors that offer contractual guarantees of data isolation (your data isn't used to train models for others), strong security credentials (like SOC 2 compliance), and features such as EU data residency if applicable. Transparency in their data handling policies is crucial.

Do customers have specific privacy concerns about AI in customer service?

Yes. The main concerns are where their data is going, whether it's truly safe from breaches, and whether AI systems might make unfair or biased decisions based on their information. Addressing these fears is vital for building trust.

How can I balance AI efficiency with data privacy?

Balance efficiency by implementing selective automation, allowing AI to handle simple, high-volume tasks while escalating sensitive or complex issues to human agents. Maintaining robust human oversight ensures both compliance and customer satisfaction.

Why should I test my AI before deploying it?

Thorough testing in a simulation environment before deployment is critical. It allows you to identify potential privacy issues, ensure accurate responses, and forecast performance without exposing real customer data, thereby de-risking your AI implementation.

Can a privacy-first AI strategy become a competitive advantage?

Absolutely. Proactively building a privacy-first AI strategy can significantly enhance customer trust and loyalty. Demonstrating a commitment to data protection differentiates your brand and transforms compliance from a burden into a strong competitive advantage.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.