If you work in support, you know the feeling. Ticket volumes are climbing, and everyone’s talking about how AI can help. But you've also probably seen generic chatbots that cause more headaches than they solve. They fail because they don’t have a clue about your company’s products, policies, or what your customers are actually dealing with. It’s like throwing a new hire on the phones with zero training.

This is where Retrieval-Augmented Generation (RAG) comes into the picture. It’s an AI approach that lets a model draw on your company’s own knowledge (your help center, internal docs, even past support tickets) to answer questions. It's the difference between a bot giving a vague, unhelpful reply and one that gives a specific, context-aware answer.

For teams with developers on standby, LangChain is a popular open-source tool for putting these RAG pipelines together from scratch. In this post, we'll walk through the concepts behind building a support RAG pipeline with LangChain. But more importantly, we'll look at a more direct, powerful, and self-serve path for support teams who just want a solution that works right out of the box.

What you'll need for a DIY support RAG pipeline

Before we get into the nitty-gritty, let's get real about what it takes to build one of these from the ground up. This isn't a casual weekend project; it's a serious engineering effort that requires a proper investment.

Here’s a quick rundown of what you’ll need to have ready:

- Developer firepower: You’ll need engineers who are comfortable with Python, APIs, and the often-tricky world of AI frameworks. This isn't a job for someone just dipping their toes into code.
- LLM API keys: You'll need an account with a large language model (LLM) provider like OpenAI. This means paying for usage, both for generating answers and for creating the "embeddings" that power your AI's search.
- A vector database: This is a special kind of database where your knowledge gets stored so the AI can search it quickly. Options like Pinecone, ChromaDB, or FAISS are common, but they all need to be set up, configured, and looked after.
- Hosting infrastructure: Your pipeline needs a server to run on. That means setting up and paying for cloud hosting (like AWS or Google Cloud) to keep it online 24/7.
- A lot of time and patience: This is the big one. Building, testing, and fine-tuning a RAG pipeline is a major project that can easily take weeks or even months to get right.

Building a support RAG pipeline with LangChain: A step-by-step guide

Let's break down the main stages of building a RAG pipeline. For each step, we’ll look at how you'd do it with LangChain and then see how it compares to a more streamlined, no-code approach designed for support teams.

Step 1: Loading and indexing your support knowledge

The LangChain way:

First things first, you have to get your data into the system. In LangChain, this is done with "Document Loaders." This means writing Python code to connect to each of your knowledge sources. You might use a "WebBaseLoader" to scrape your public help docs, a "GoogleDriveLoader" for internal guides kept in Google Docs, or build a custom loader to pull info from your help desk's API.

This process often means wrangling API keys, dealing with different file formats, and writing scripts to make sure the information stays up to date. If you decide to add a new knowledge source later on, you have to go back to the code and build a whole new loader.
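To make the loading step concrete, here is a minimal sketch in plain Python rather than LangChain's actual loader classes. The `Document` dataclass, the two loader functions, and the input shapes are all assumptions made for illustration; the point is only that each source needs its own code to normalize content into text plus metadata.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """One piece of loaded knowledge plus a record of where it came from."""
    text: str
    metadata: dict = field(default_factory=dict)


def load_help_center(articles):
    # Hypothetical input: a list of {"title", "body", "url"} dicts,
    # for example the parsed result of a help center export.
    return [
        Document(
            text=f"{a['title']}\n\n{a['body']}",
            metadata={"source": "help_center", "url": a["url"]},
        )
        for a in articles
    ]


def load_tickets(tickets):
    # Hypothetical input: resolved tickets as {"id", "question", "resolution"}.
    return [
        Document(
            text=f"Q: {t['question']}\nA: {t['resolution']}",
            metadata={"source": "tickets", "ticket_id": t["id"]},
        )
        for t in tickets
    ]


def load_all(articles, tickets):
    # Every new knowledge source means another loader function wired in here.
    return load_help_center(articles) + load_tickets(tickets)


docs = load_all(
    articles=[{
        "title": "Reset your password",
        "body": "Go to Settings, then Security.",
        "url": "https://example.com/help/reset",
    }],
    tickets=[{
        "id": 101,
        "question": "Where is my invoice?",
        "resolution": "Invoices are under Billing.",
    }],
)
for d in docs:
    print(d.metadata["source"], "->", d.text[:30])
```

Keeping the source in the metadata is a deliberate choice in this sketch: it is what later lets you trace an answer back to the document it came from.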

A simpler alternative:

Now, what if you could skip all that? A platform like eesel AI offers one-click integrations with over 100 sources. Instead of writing code, you just securely connect your accounts. Want the AI to learn from your Zendesk tickets, Confluence wiki, and internal Google Docs? Just click a few buttons to authorize access, and you're done. A task that could take an engineer days is finished in minutes from a simple dashboard.

Step 2: Splitting documents into chunks and creating embeddings

The LangChain way:

Once your documents are loaded, you can't just hand them over to the AI. They're way too long. You need to break them down into smaller, more manageable pieces, a process called "chunking." LangChain gives you tools for this, like the "RecursiveCharacterTextSplitter," but it’s on you to figure out the best way to do it. You'll likely spend a good amount of time fiddling with settings like "chunk_size" and "chunk_overlap" to see what works.
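To show what "chunk_size" and "chunk_overlap" actually control, here is a simplified fixed-window splitter in plain Python. It is not how "RecursiveCharacterTextSplitter" works internally (that splitter also tries to break on paragraph and sentence boundaries); the function name `split_text` and the sample values are assumptions for illustration.

```python
def split_text(text, chunk_size=200, chunk_overlap=40):
    """Split text into windows of at most chunk_size characters, where
    consecutive windows share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be at least 0 and below chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
        start += step
    return chunks


article = "To reset your password, open Settings. " * 10
chunks = split_text(article, chunk_size=120, chunk_overlap=20)
print(len(chunks), "chunks; first one starts with:", chunks[0][:40])
```

A larger overlap makes it less likely that a sentence is cut in half between two chunks, at the cost of storing and embedding more duplicated text.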

After chunking, each piece is sent to an embedding model, which turns the text into a list of numbers (a vector) that captures its meaning. These vectors are then stored in that vector database you set up earlier.
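The shape of this step can be sketched without any external service. The `toy_embed` function below is a stand-in assumption, not a real embedding model: it hashes words into buckets, so it captures word overlap rather than meaning. The list `vector_store` likewise stands in for a real vector database.

```python
import hashlib
import math
import re

DIM = 64  # toy dimensionality; real embedding models use far more dimensions


def toy_embed(text, dim=DIM):
    """Hash each word into one of `dim` buckets, then L2-normalize the counts."""
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(word.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# In-memory stand-in for a vector database: (vector, chunk text) pairs.
vector_store = []
for chunk in [
    "Refunds are issued within 5 business days.",
    "Reset your password from the Security page.",
]:
    vector_store.append((toy_embed(chunk), chunk))

print(len(vector_store), "vectors of length", len(vector_store[0][0]))
```

In a real pipeline, the call to `toy_embed` would be replaced by a request to your embedding provider, which is where the per-chunk usage cost mentioned earlier comes from.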

A smarter, automated approach:

This is another big technical job that a managed platform just handles for you. eesel AI automates the entire chunking and embedding process, using methods that are fine-tuned for support-style content. You don't have to think about any of the technical details; it just works.

Even better, eesel AI can actually train on your past tickets. It digs through thousands of your historical support chats and emails to learn your brand's tone of voice, figure out common customer issues, and see what a successful resolution looks like, all on its own. This is something you simply can't get from standard LangChain tools without months of custom development work.

Step 3: Setting up the retriever

The LangChain way:

The "Retriever" is the part of your pipeline that searches the vector database. When a customer asks a question, the retriever’s job is to find the most relevant bits of text to show the LLM. In LangChain, you set this up in your code by pointing it to your vector database. Its performance depends heavily on how well you handled the chunking and embedding steps and on the search algorithm you're using.
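As a rough illustration of what a retriever does, the sketch below ranks chunks by cosine similarity to the question and returns the top `k`. The `Retriever` class is hypothetical, not LangChain's interface, and the word-count `embed` function is a stand-in for a real embedding model.

```python
import math
import re
from collections import Counter


def embed(text):
    # Stand-in for an embedding model: a sparse word-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Retriever:
    def __init__(self, chunks):
        # Index once up front: keep each chunk next to its vector.
        self.index = [(embed(c), c) for c in chunks]

    def search(self, question, k=2):
        q = embed(question)
        ranked = sorted(self.index, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


retriever = Retriever([
    "To reset your password, open Settings and choose Security.",
    "Refunds are issued to the original payment method.",
    "Invoices can be downloaded from the Billing page.",
])
top = retriever.search("How do I reset my password?", k=1)
print(top)
```

This version scans every stored vector on each query. Vector databases exist largely to avoid that linear scan once you have many thousands of chunks.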

Unifying knowledge for better retrieval:

A major weakness of a basic LangChain setup is that the retriever usually searches a single knowledge base, so covering several sources takes extra engineering. But a real support team's knowledge is spread all over the place. A customer's issue might be touched on in a help article, discussed in a private Slack channel, and fully documented in a Google Doc.

eesel AI gets around this by bringing all your connected sources together into one unified knowledge layer. When a question comes in, it can pull context from a help desk macro, a past Slack conversation, and a technical doc all at once to give the best possible answer. You can also easily scope knowledge to create different AI agents for different jobs. For instance, you could have an internal IT bot that only uses your IT docs, while your public-facing bot is limited to your help center.

Step 4: Generating answers and taking action

The LangChain way:

The final step is putting it all together. You create a "Chain" (like "RetrievalQA") that takes the user's question and the retrieved text, bundles them into a prompt, and sends it off to the LLM to write an answer.
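Stripped of the framework, a question-answering chain does roughly what the sketch below does. The prompt wording, the function names, and the `fake_retrieve` and `fake_llm` stand-ins are all assumptions so that the example runs without an LLM account; in practice `llm` would wrap a call to your provider.

```python
PROMPT_TEMPLATE = """You are a support assistant. Answer using only the context below.
If the context does not contain the answer, say you don't know.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question, retrieved_chunks):
    # Join the retrieved chunks with a visible separator between them.
    context = "\n---\n".join(retrieved_chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question)


def answer(question, retrieve, llm):
    """retrieve: question -> list of chunks; llm: prompt -> completion text."""
    chunks = retrieve(question)
    return llm(build_prompt(question, chunks))


# Hypothetical stand-ins so the sketch runs without a provider account.
def fake_retrieve(question):
    return ["Refunds are issued within 5 business days."]


def fake_llm(prompt):
    return f"(model reply to a {len(prompt)}-character prompt)"


print(answer("How long do refunds take?", fake_retrieve, fake_llm))
```

Telling the model to answer only from the supplied context is one common way to reduce made-up answers, though it does not eliminate them.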

But what if you want the AI to actually do something, like tag a ticket, look up an order number, or escalate to a human agent? In LangChain, this means building complicated "Agents" that use "Tools." This is a huge leap in complexity and really requires a deep understanding of AI development to pull off correctly.
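The core idea behind tools can be sketched as a dispatch table: the model emits a structured request, and your code decides whether and how to run it. Everything below is hypothetical, including the tool functions and the assumed JSON format `{"tool": ..., "args": {...}}`; LangChain's agent classes add planning and multi-step loops on top of this idea.

```python
import json


def tag_ticket(ticket_id, tag):
    # Hypothetical action; a real one would call your help desk's API.
    return f"ticket {ticket_id} tagged '{tag}'"


def escalate(ticket_id, team):
    # Hypothetical action; a real one would reassign the ticket.
    return f"ticket {ticket_id} escalated to {team}"


# Allow-list of actions the model may trigger.
TOOLS = {"tag_ticket": tag_ticket, "escalate": escalate}


def run_tool_call(raw):
    """Parse a model's JSON tool request and run it only if the tool is allowed."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return "error: model output was not valid JSON"
    if not isinstance(call, dict):
        return "error: tool request must be a JSON object"
    tool = TOOLS.get(call.get("tool"))
    if tool is None:
        return f"error: unknown tool {call.get('tool')!r}"
    try:
        return tool(**call.get("args", {}))
    except TypeError as exc:
        return f"error: bad arguments ({exc})"


print(run_tool_call('{"tool": "tag_ticket", "args": {"ticket_id": 42, "tag": "Billing Issue"}}'))
print(run_tool_call('{"tool": "delete_account", "args": {}}'))
```

Much of the complexity lives in the error paths: model output is untrusted input, so each request is validated against the allow-list before anything runs.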

A no-code, customizable workflow engine:

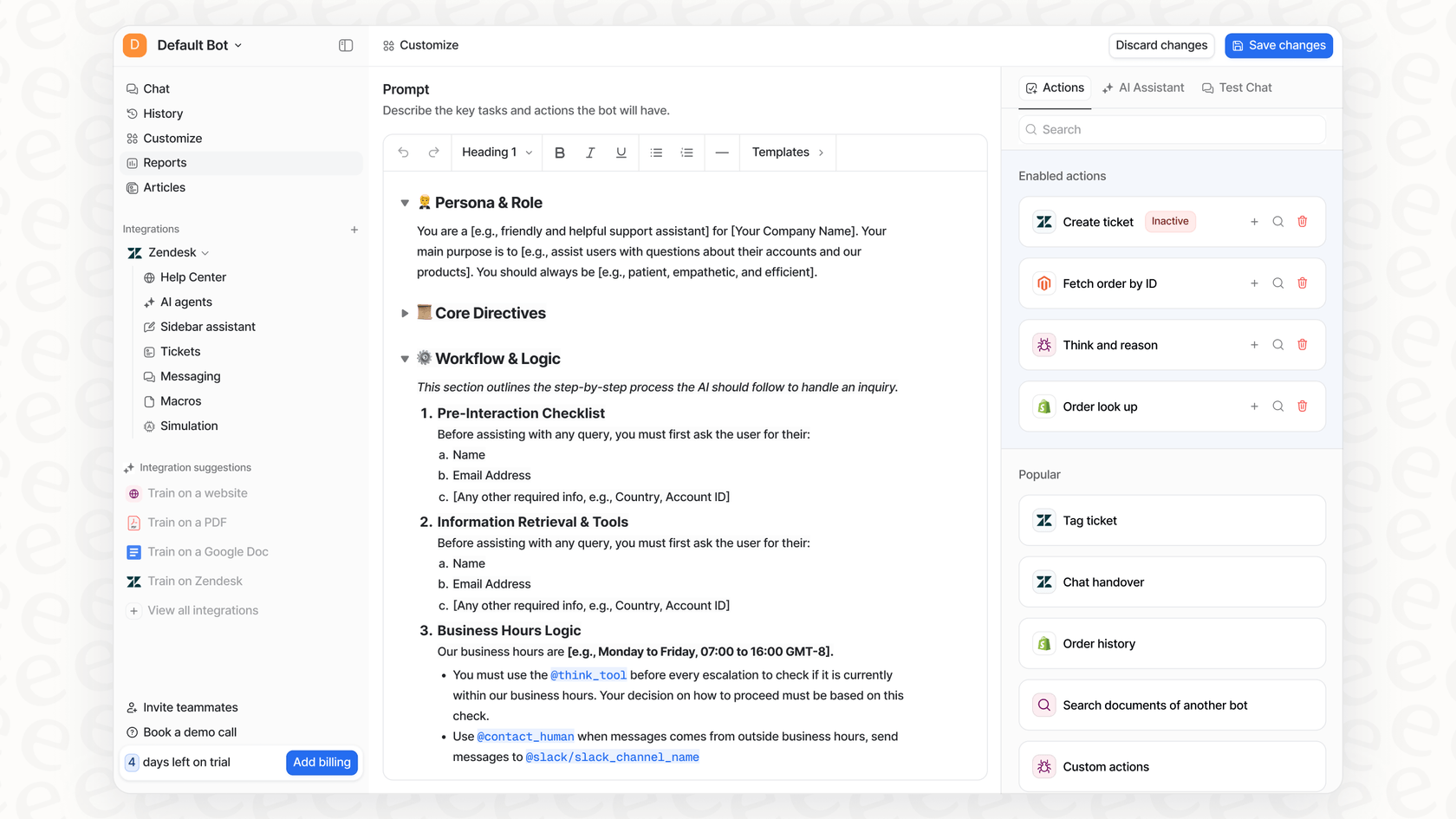

This is where a dedicated support platform pulls way ahead. With eesel AI, you use a simple, visual prompt editor to define your AI's personality, tone, and rules for when it should hand things over to a person.

Better yet, you can give it custom actions without writing a single line of code. You can set it up to "tag ticket as 'Billing Issue'," "look up order status from Shopify," or "create a Jira ticket and escalate to Tier 2." This level of control lets you automate whole workflows, not just answer questions. It turns your bot from a simple Q&A machine into an autonomous agent that can actually resolve issues.

Common pitfalls when building a support RAG pipeline with LangChain

When you're building a support RAG pipeline with LangChain, it's easy to hit snags that can stall the whole project. Here are a few common ones and how a managed solution helps you dodge them completely.

| Pitfall with DIY LangChain Pipeline | How eesel AI Solves It |
|---|---|
| AI making things up ("hallucinations") | Trains on your actual past tickets and unified knowledge, so answers are highly relevant. Scoped knowledge also keeps it from going off-topic. |
| Hard to know if it actually works | A powerful simulation mode tests the AI on thousands of your old tickets, giving you accurate resolution forecasts before you ever turn it on. |
| A huge time and money sink up front | Goes live in minutes, not months. The platform is designed to be self-serve, so you don't need a dedicated AI engineering team to get it running. |
| Costs that spiral out of control | Offers clear, predictable pricing. You won't get hit with a surprise bill based on ticket volume, unlike some pay-per-resolution competitors. |

Building a support RAG pipeline with LangChain: Build vs. buy for your support team

LangChain is a fantastic, powerful framework. For developers building very specific, custom AI applications from the ground up, it’s an amazing tool.

But for a support team, the goal isn't to become an AI infrastructure company. The goal is to solve customer problems faster, cut down on agent burnout, and make customers happier. The DIY route with LangChain brings a ton of complexity, long development timelines, and a maintenance headache that pulls focus from what really matters: delivering great support.

The fastest path to intelligent support automation

eesel AI gives you all the power of a custom-built RAG pipeline without any of the headaches. It’s an enterprise-grade platform built for support teams, not just developers. It unifies your knowledge, learns from your team's past conversations, and gives you the tools to automate entire workflows in just a few minutes.

Ready to automate your frontline support with an AI that actually understands your business? Sign up for a free eesel AI trial and see it in action yourself.

Frequently asked questions

You'll need experienced developers proficient in Python and AI frameworks, access to LLM API keys for model usage and embeddings, a configured vector database, and robust cloud hosting infrastructure to maintain the pipeline.

Companies often choose LangChain for its flexibility and open-source nature, especially if they have dedicated AI engineering teams and specific, niche requirements that pre-built solutions cannot address.

In LangChain, this involves developing custom Python code using "Document Loaders" for each data source. These scripts manage different file formats, API integrations, and ensure information is periodically refreshed.

Major challenges include mitigating AI hallucinations, optimizing document chunking, effectively integrating disparate knowledge sources, and the substantial time and expertise required for initial development, fine-tuning, and ongoing maintenance.

Yes, enabling custom actions like tagging tickets or escalating issues is possible, but it dramatically increases complexity. It requires implementing LangChain's "Agents" and "Tools," demanding advanced AI development skills.

Evaluating performance with a DIY LangChain setup typically relies on extensive manual testing and iterative adjustments. Without specialized tools, it can be challenging to obtain accurate forecasts of resolution rates or systematically identify and address hallucination patterns.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.