OpenAI's Sora 2 has made a huge splash in AI video generation, creating some incredibly realistic clips from just a few lines of text. But if you're a professional video producer, filmmaker, or part of a marketing team, you know that the raw footage is just the beginning. The real work starts when you pull that AI-generated content into a post-production powerhouse like Adobe After Effects.

This guide explains why pairing Sora 2 with After Effects matters for getting a polished, high-quality result. We’ll cover the creative possibilities, but also get real about the practical workflow and the bumps you might hit along the way. Working through this process also says a lot about the future of plugging powerful AI into the professional tools we use every day, whether you're in a creative studio or on a customer support team.

Understanding Sora 2 and After Effects

Before we jump into the workflow, let's do a quick refresh on what makes these two tools so powerful on their own.

What is Sora 2?

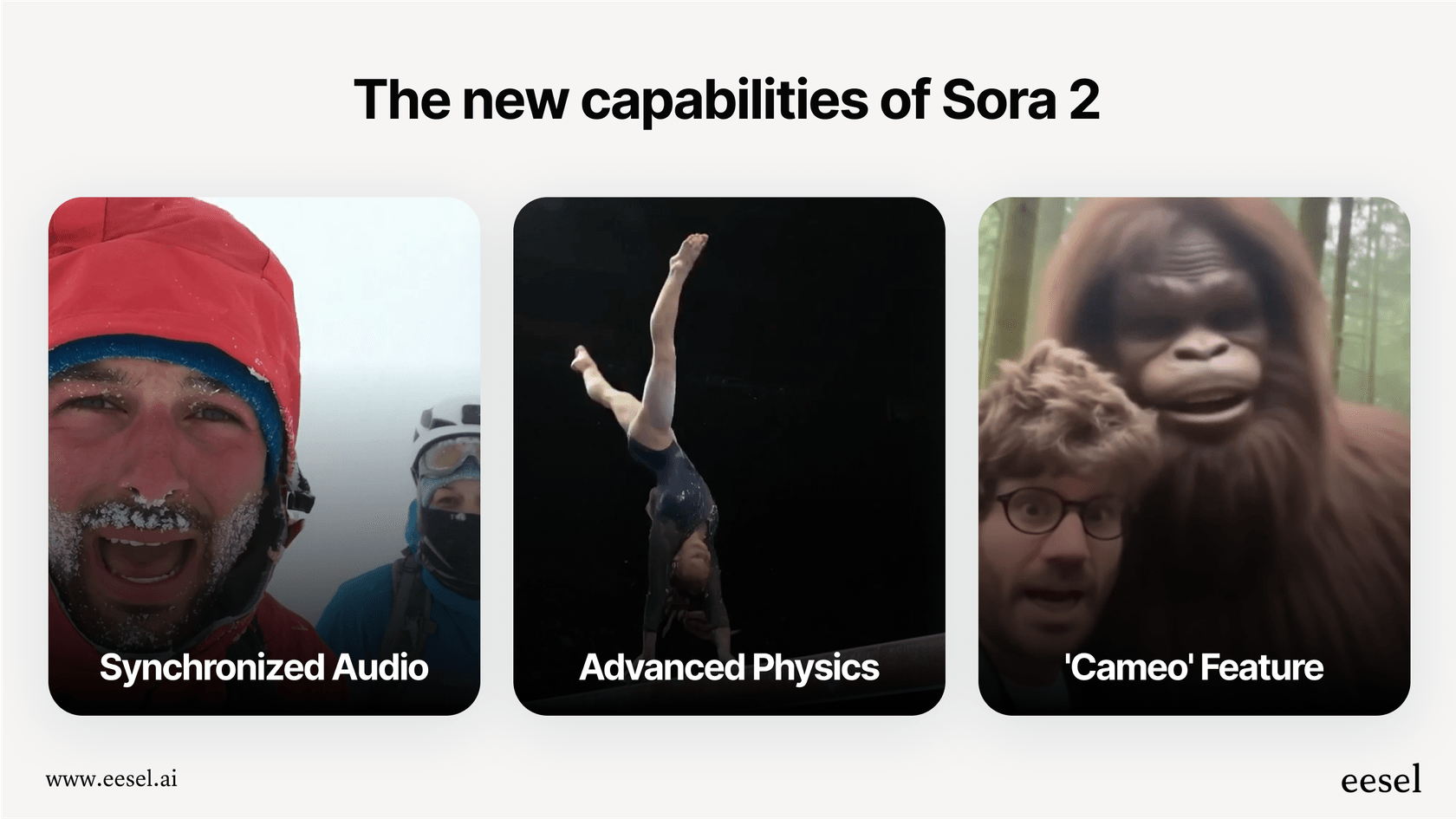

Sora 2 is OpenAI’s newest text-to-video model. Its headline feature is a surprisingly good grasp of the physical world, a clear step up from earlier AI video tools. In practice, it can create clips with:

- Believable physics: Objects interact with their environment the way you'd expect, like a basketball bouncing off a rim or water splashing realistically.
- Consistency: Characters and objects don’t morph into something else mid-shot, which is a must-have for any kind of storytelling.
- Synchronized audio: Sora 2 can generate sound that syncs with the picture, from background noise to dialogue that matches a character's lip movements.

OpenAI is even calling it an early version of a "general-purpose simulator of the physical world," which gives you an idea of where this is all heading.

What is After Effects?

Adobe After Effects is industry-standard software for motion graphics, visual effects (VFX), and compositing. For years, it's been the go-to tool for filmmakers, animators, and designers who need to:

- Add titles, graphics, and animations to video.
- Create wild visual effects like explosions or magical elements.
- Seamlessly blend different visual pieces together.
- Handle advanced color correction and grading.

Basically, if Sora 2 is your camera, After Effects is the entire post-production studio where you turn raw footage into a finished piece.

Why integrate Sora 2 with After Effects?

Here’s why this combination is so important:

- Cleaning up the AI weirdness: Let's be honest: AI video still has its quirks. You might run into flickering, unnatural character movements, or physics that are slightly off. After Effects has the tools to fix these things, whether that means stabilization to smooth out a jittery camera move or masking to paint out a small visual glitch.
- Adding the human element: A raw AI clip doesn't have your branding, a story, or a call to action. With After Effects, you can add text overlays, logos, motion graphics, and everything else that turns a generic video into a finished ad or a scene for a short film.
- Getting that cinematic feel: Color grading is central to setting the mood in professional video. After Effects lets you do advanced color correction and apply stylistic looks that go well beyond what a text prompt can do right now, so the final video actually matches your brand’s look and feel.
- Building a world: You could use Sora 2 to generate an amazing background, then use After Effects to place live-action actors or 3D models into that scene. This hybrid approach gives you the speed of AI generation with the precision of traditional VFX work.

This video demonstrates various techniques for combining AI-generated content with After Effects to create unique motion graphics and composites.

A high-level look at the production workflow

While we won't get into a super deep technical tutorial, it helps to understand the main steps in a real production workflow. This isn't just about exporting from Sora and importing into After Effects; it takes some planning, a lot like a traditional film production.

You can think about the process in three main stages:

- Pre-production and generating footage: This is where you map out your story, make a shot list, and start crafting your prompts for Sora 2. The idea is to get as close as you can to your final vision right from the start. This means tweaking prompts to get the right camera moves, lighting, and character actions. You might end up generating dozens of clips just to find the one that feels right.
- Reviewing and picking your shots: Once you have a bunch of raw clips, you'll go through and pick the best takes. This is also when you might do some light cleanup. If a Sora 2 clip has a small issue, you have to decide whether it’s faster to "fix it in post" or go back and try for a better result with a new prompt.
- Post-production in After Effects: Here's where the heavy lifting happens. You import your selected clips into After Effects for color grading, stabilization, adding motion graphics, sound design, and putting all the final pieces together. This is where your artistic vision really takes over.

It's a powerful workflow, but as you can tell, it has quite a few steps and requires someone who knows their way around both AI prompt engineering and traditional post-production.

Current roadblocks and the bigger picture for AI

Even with all this potential, making professional content with Sora 2 and After Effects isn't exactly a walk in the park. The hurdles we're seeing here point to a bigger theme in the AI world: getting these amazing models to play nicely with existing professional tools is often a complicated and expensive process.

Here are some of the main limitations right now:

- It’s hard to get access: At the time of writing, the Sora 2 API isn't open to everyone. Access is mostly limited to an invite-only app and a few enterprise partners, which makes it tough for most teams to build any kind of reliable, automated pipeline.
- The workflow is manual and clunky: The process we just walked through is very hands-on. You’re generating clips on one platform, downloading them, and then importing them into another. This creates a lot of friction and can really slow down the creative flow, especially when you need to go back and regenerate a clip.
- It requires a unique skillset: This workflow demands an unusual mix of skills. You need to be a good prompt engineer to get what you want out of Sora 2 and a talented After Effects artist to make it look polished. Finding someone who is great at both is rare, and probably not cheap.

This problem of plugging powerful AI into existing tools isn't just a creative-field thing. Over in the customer support world, teams are asking a similar question: how do you use AI without having to throw out your current help desk (like Zendesk or Freshdesk) and rebuild your whole operation from scratch?

A lot of AI support tools demand that you rip out your old system or spend months on a complicated setup. But some platforms are built to avoid that headache. Take eesel AI, for example. It’s designed to plug right into your existing help desk and knowledge sources with one-click integrations. You can get it running in minutes, not months, because it's built to be incredibly self-serve. Instead of a messy, manual process, you get a system that learns from your team's past tickets and starts automating answers right away, all inside the software your team already uses.

The costs of Sora 2 and After Effects

If you're looking to set up this workflow, you'll need to factor in the costs of both platforms.

Sora 2 Pricing: A full public pricing model for the API isn't out yet, but OpenAI has mentioned it will likely be a pay-per-second model.

- Sora 2 Standard: Free to use through the app with some limits, which is great for just playing around.
- Sora 2 Pro: Comes with a ChatGPT Pro subscription on sora.com. API access is expected to be priced per second of video you generate, maybe around $0.10/sec for standard quality and up to $0.50/sec for high resolution.

Adobe After Effects Pricing: After Effects is part of the Adobe Creative Cloud subscription.

- Single App: Runs about $22.99/month.
- All Apps Plan: Gets you After Effects, Premiere Pro, Photoshop, and more for about $59.99/month.

And of course, these prices don't include the cost of the time and talent needed to use both of these tools well.
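Because Sora 2 is expected to bill per second, budgeting comes down to simple arithmetic: total seconds generated (including discarded takes) times the per-second rate, plus the After Effects subscription. The Python sketch below assumes the unconfirmed rates quoted above ($0.10/sec standard, $0.50/sec high resolution) and the approximate Creative Cloud prices; the function names and the 30-clip scenario are hypothetical, and labor is left out on purpose.

```python
# Rough monthly budget for a Sora 2 + After Effects workflow.
# ASSUMPTION: per-second rates are the speculative figures quoted in this
# article, not confirmed API pricing. Labor costs are deliberately excluded.

SORA_RATE_PER_SEC = {"standard": 0.10, "high_res": 0.50}      # USD, assumed
AE_PLAN_PER_MONTH = {"single_app": 22.99, "all_apps": 59.99}  # USD, approximate


def generation_cost(clips: int, seconds_per_clip: float, quality: str) -> float:
    """Cost of generating every clip, including takes you end up discarding."""
    return clips * seconds_per_clip * SORA_RATE_PER_SEC[quality]


def monthly_estimate(clips: int, seconds_per_clip: float,
                     quality: str, plan: str) -> float:
    """Generation cost plus one month of the chosen After Effects plan."""
    total = generation_cost(clips, seconds_per_clip, quality) + AE_PLAN_PER_MONTH[plan]
    return round(total, 2)


if __name__ == "__main__":
    # Hypothetical month: 30 ten-second takes to find a handful of keepers.
    for quality in ("standard", "high_res"):
        print(quality, monthly_estimate(30, 10, quality, "single_app"))
```

The point of counting every take, not just the keepers, is that iterating on prompts is where generation costs pile up: the same 30 takes cost five times as much at the assumed high-resolution rate.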

The future of integrating Sora 2 with After Effects

The combination of Sora 2 and After Effects is a pretty exciting leap for video creation. It shows that AI's biggest strength might not be in replacing creative professionals, but in giving them tools that help them bring their ideas to life faster. The raw power of AI generation, mixed with the fine-tuned control of professional post-production software, creates a workflow where the end result is way better than what either tool could do on its own.

But the current clunkiness of it all is a good lesson. The best AI tools of the future will be the ones that slide right into the platforms we already use, cutting down on the hassle and letting us do our best work without needing a degree in AI.

If you like the sound of that same seamless, powerful AI integration for your customer support team, you might want to check out eesel AI. You can set up an AI agent that learns from your existing knowledge and works right inside your help desk in just a few minutes.

Frequently asked questions

What is the main benefit of using After Effects with Sora 2?

The main benefit is gaining professional control over AI-generated content. It allows artists to refine, enhance, and personalize raw Sora 2 footage, transforming it into polished, high-quality video that matches specific creative or branding requirements.

Can After Effects fix common flaws in Sora 2 footage?

Yes, After Effects is crucial for addressing common AI quirks. It provides tools for stabilizing jittery footage, correcting unnatural movements, masking glitches, and performing advanced color grading to ensure a cinematic and consistent look.

What does the Sora 2 to After Effects workflow look like?

The workflow typically involves pre-production planning and prompt crafting in Sora 2, reviewing and selecting the best AI-generated clips, and then extensive post-production in After Effects for refinement. Currently, this process is largely manual, requiring downloading and importing between platforms.

What are the current roadblocks?

Current roadblocks include limited access to the Sora 2 API, a manual and somewhat clunky workflow, and the need for a rare blend of prompt engineering and After Effects artistic skills. These factors can slow down the production process significantly.

What skills do you need to combine Sora 2 and After Effects?

Combining Sora 2 and After Effects effectively requires a dual skillset: strong prompt engineering to guide Sora 2's output, and After Effects proficiency for post-production tasks like compositing, motion graphics, and color grading. Finding people skilled in both areas can be challenging.

How much does this workflow cost?

Costs include an expected pay-per-second model for Sora 2 (possibly around $0.10 to $0.50/sec once the API is public) and an Adobe Creative Cloud subscription for After Effects (about $22.99/month for the single app or $59.99/month for all apps). These figures do not include the significant investment in skilled labor.

Will Sora 2 and After Effects replace creative professionals?

Combining Sora 2 with After Effects is more likely to empower creative professionals than to replace them. AI generates raw content quickly, but the human element of artistic vision, storytelling, and precise refinement in After Effects remains essential for achieving professional-grade results.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.