An honest OpenAI Frontier review: The future of enterprise AI agents?

Kenneth Pangan

Katelin Teen

Last edited February 6, 2026

On February 5, 2026, OpenAI made a significant announcement: the launch of Frontier. It's their new enterprise platform for building and managing AI agents, which they refer to as "AI co-workers."

The stated promise is to close the "opportunity gap," the space between what powerful AI models are capable of and what most businesses can reliably implement.

The question is whether this launch marks the point where AI moves from a novel technology to an integral part of business operations.

This OpenAI Frontier review will cover what it is, its main features, its intended audience, its potential drawbacks, and what it means for the future of AI in business.

What is OpenAI Frontier?

At its core, OpenAI Frontier is a platform that acts as an "intelligence layer" for an entire company. It functions less like a single tool and more like an operating system that links various systems, data, and applications.

The main idea is to give all your AI agents a shared brain. Instead of a group of separate bots operating independently, Frontier creates a "semantic layer" for the business. This means every agent has the same understanding of how the company operates, where to find information, what the key goals are, and how to communicate with other systems. It is designed to provide one source of truth so AI can handle complicated tasks that cross different departments and tools.

A notable aspect is OpenAI's open approach. Frontier is built on open standards, designed to work with existing software and manage AI agents built on competitor models, not just OpenAI's. The goal is to create a central system for all enterprise AI rather than a closed ecosystem.

Core features of OpenAI Frontier

OpenAI has organized Frontier around four main pillars. Let's take a look at what each one does.

Shared business context

This is the foundation of the entire platform. Frontier is built to connect to various systems in a company: data warehouses, CRMs like Salesforce, ticketing systems like Zendesk, and internal applications. By tapping into these systems, it creates what OpenAI calls "durable institutional memory."

In simple terms, it learns how your business works. This shared context allows all the AI agents on the platform to work together cohesively. They all pull from the same knowledge base, so they can collaborate on tasks smoothly.

Building this company-wide "semantic layer" is a significant project that can take months or longer, representing a long-term commitment. For teams looking for solutions with a faster implementation, an AI teammate like eesel AI offers a different approach. It learns business context by connecting to existing tools like a help desk, Confluence, or Google Docs, without requiring platform engineering.

Agent execution environment

Once the AI has a grasp of the business, it needs a place to perform tasks. That's where the "agent execution environment" comes in. This is the workspace where AI agents use their intelligence to tackle real business problems.

This could involve working with files, running code, using tools for data analysis, or solving complex issues. As agents complete these tasks, they build up "memories," which are records of past actions that provide useful context for the future. This helps them improve over time by learning from their own experiences.

Evaluation and optimization

To bridge the gap between AI demonstrations and real-world performance, this feature acts as the quality control system for your AI agents. It gives both human managers and other AI co-workers a way to see what's working and what isn't.

This feedback loop is key. It helps agents understand what a "good" result looks like, so they can improve based on actual performance and become reliable AI teammates rather than impressive demos.

Identity and governance

For AI agents to operate within company systems, strong guardrails are necessary. This pillar manages all the security and control elements. Every AI agent gets its own identity, complete with specific permissions and boundaries. This is vital for using AI with confidence, particularly in regulated fields.

Every action an agent takes is tracked and can be audited through built-in monitoring and detailed logs, so you always have a clear record. Frontier is also designed to meet enterprise compliance standards like SOC 2 Type II and ISO/IEC 27001, which is a requirement for many large companies.
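To make the idea concrete, here is a minimal Python sketch of per-agent identities, permission boundaries, and an audit trail. It is an illustration only, not Frontier's API: the names `AgentIdentity`, `AuditLog`, and `perform`, along with the action labels, are hypothetical and chosen for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentIdentity:
    """A hypothetical per-agent identity with an explicit allow-list of actions."""
    agent_id: str
    allowed_actions: frozenset


@dataclass
class AuditLog:
    """Append-only record of every attempted action, allowed or not."""
    entries: list = field(default_factory=list)

    def record(self, agent_id, action, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "allowed": allowed,
        })


def perform(agent, action, log):
    """Check the agent's permissions, log the attempt, and return whether it was allowed."""
    allowed = action in agent.allowed_actions
    log.record(agent.agent_id, action, allowed)
    return allowed


# Hypothetical agent that may read tickets and draft replies, nothing else.
support_agent = AgentIdentity("support-agent-1", frozenset({"read_ticket", "draft_reply"}))
log = AuditLog()

perform(support_agent, "draft_reply", log)   # inside its boundaries
perform(support_agent, "issue_refund", log)  # outside its boundaries, but still logged
```

The design point is that denied attempts are recorded alongside permitted ones, so an auditor can see what an agent tried to do as well as what it did.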

Who is OpenAI Frontier for?

So, who is this really meant for? While the technology is exciting, it is not designed for the average small business or startup.

A platform for large enterprises

Frontier is aimed directly at large enterprises. The list of early customers includes HP, Intuit, Oracle, State Farm, and Uber. These are large companies with the budget and technical staff to handle a project of this scale.

The recent $200 million partnership with Snowflake further solidifies its enterprise focus. This deal brings OpenAI's models directly into the Snowflake AI Data Cloud, showing a clear plan to integrate deeply within the existing enterprise data ecosystem.

Integration with existing tools

As mentioned, Frontier is not meant to replace your current software. It's built on open standards and is designed to integrate with what you have. OpenAI is also creating an ecosystem of "Frontier Partners," specialized AI companies like Abridge and Harvey, who will build new solutions on the platform. The goal is to create a central hub where top-tier AI can connect with enterprise systems.

The forward deployed engineer approach

To manage the implementation, OpenAI provides its own "Forward Deployed Engineers" (FDEs) to work alongside customer teams. These specialists oversee the entire deployment, from the initial prototype to the final production system.

The existence of this specialized role indicates a comprehensive implementation process. This model differs from solutions like eesel AI, where a non-technical manager can set up an AI teammate to begin drafting customer replies quickly, without requiring code.

Limitations and market impact of OpenAI Frontier

Frontier is a powerful platform, but it has its challenges and is already affecting the market.

The software stock shake-up

When OpenAI and Anthropic recently announced their AI agent plans, the market reacted immediately. Shares of software companies like ServiceNow, Workday, and Thomson Reuters declined.

The concern is that if one powerful AI agent can handle complex tasks across many different applications, it could change the role of specialized SaaS tools. There is a worry that autonomous agents could commoditize sophisticated software, which would put pressure on the subscription revenue models that most SaaS vendors rely on.

Platform complexity

Frontier is a foundational platform, not a standalone application. A successful implementation requires a significant investment of time, money, and skilled engineering resources, a point underscored by the existence of the FDE role. This type of project represents a major strategic initiative.

Suitability for immediate business needs

This raises the question of the best approach for solving specific business problems. For teams whose primary goal is to address immediate operational challenges, such as reducing customer support wait times or automating repetitive tasks, a more targeted solution may be more direct.

An AI Agent from a company like eesel is designed to address these specific use cases. With features that allow users to run simulations on thousands of past tickets, teams can evaluate how the AI would perform and build confidence before deploying it in a live customer-facing environment.
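The general idea of simulating on past tickets can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not eesel AI's implementation: `PastTicket`, `simulate`, and `toy_agent` are hypothetical names, and a keyword router stands in for a real model.

```python
from dataclasses import dataclass


@dataclass
class PastTicket:
    question: str
    human_resolution: str  # label for how a human agent actually handled it


def simulate(tickets, draft_fn):
    """Replay past tickets through draft_fn and return the share that match the human outcome."""
    if not tickets:
        return 0.0
    matches = sum(1 for t in tickets if draft_fn(t.question) == t.human_resolution)
    return matches / len(tickets)


def toy_agent(question):
    """Stand-in for a real AI agent: simple keyword routing."""
    q = question.lower()
    if "refund" in q:
        return "refund_policy"
    if "password" in q:
        return "password_reset"
    return "escalate"


# Made-up historical tickets, for illustration only.
history = [
    PastTicket("How do I reset my password?", "password_reset"),
    PastTicket("I want a refund for my order", "refund_policy"),
    PastTicket("My invoice shows the wrong address", "update_billing"),
    PastTicket("Refund has not arrived", "escalate"),
]

match_rate = simulate(history, toy_agent)
print(f"Matched the human resolution on {match_rate:.0%} of past tickets")
```

Because the replay uses tickets that are already closed, a team can inspect every mismatch before any customer sees an AI-written reply.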

OpenAI Frontier pricing

If you're wondering what Frontier costs, OpenAI has not released any public pricing. The website just says "Contact sales."

Given the enterprise focus, custom deployments, and the hands-on work from Forward Deployed Engineers, Frontier is likely to be expensive. Pricing is probably based on custom quotes for multi-million-dollar deals, which would place it outside the budget of most companies.

An alternative approach: AI teammates

Frontier represents one vision for the future of AI at work: building a comprehensive platform from the ground up. An alternative approach involves deploying an AI teammate for a specific role without a large-scale engineering project.

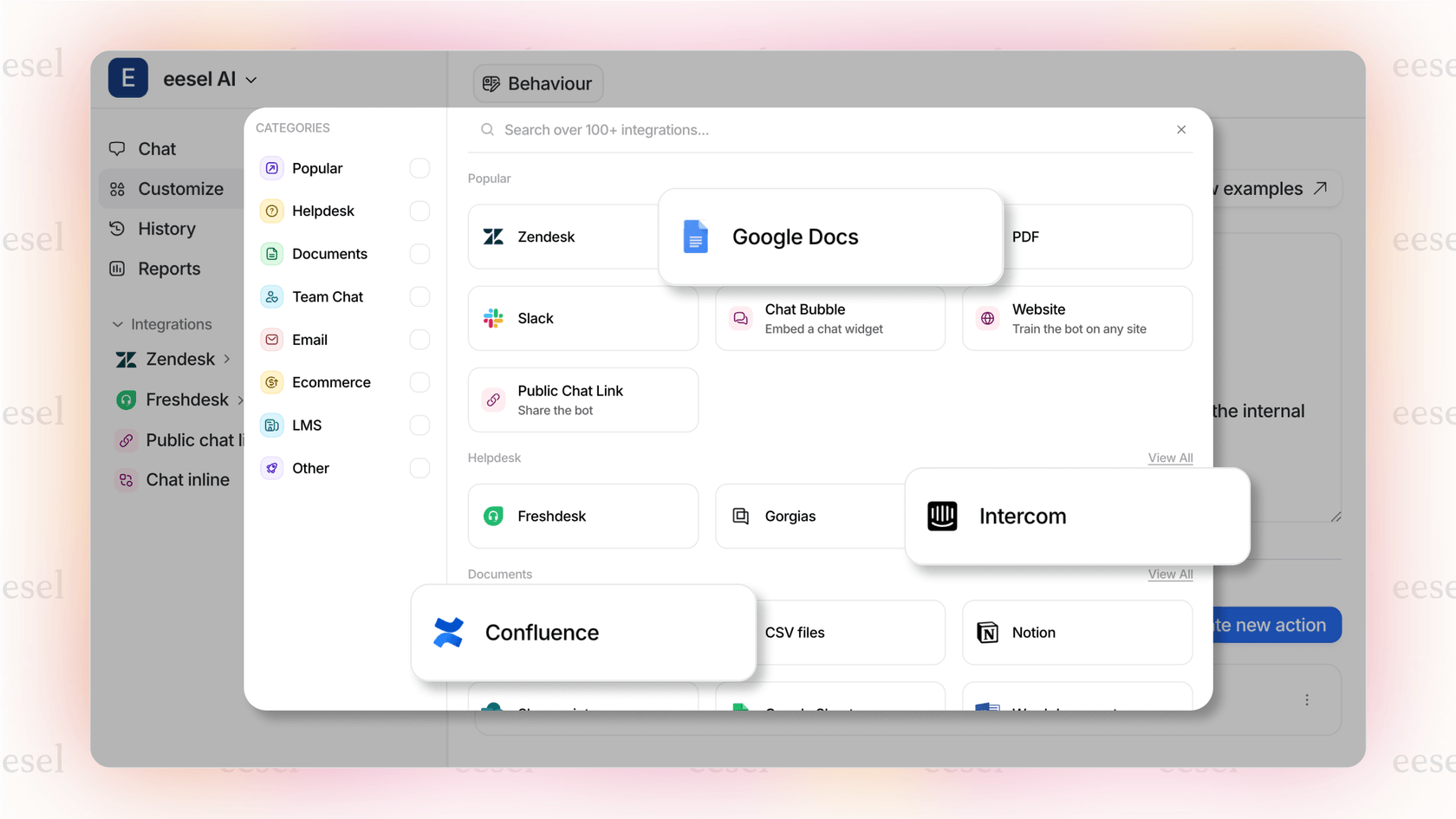

This is the model offered by eesel AI. It is onboarded rather than configured, learning from existing company knowledge. It can be set up in a short amount of time.

This approach allows teams to start with a tool like an AI Copilot that assists human agents by drafting replies. Based on its performance, it can later be transitioned to a more autonomous role, handling tickets on its own.

A demonstration of the eesel AI Copilot in this OpenAI Frontier review, showing an AI-drafted reply for a human agent to review.

| Feature | OpenAI Frontier | eesel AI |
|---|---|---|
| Target User | Large Enterprise (with dev teams) | Support, Sales, & Ops Teams |
| Setup Time | Months (with FDEs) | Minutes (no-code) |
| Go-Live Model | Platform deployment | Progressive rollout (Copilot → Agent) |
| Pre-launch Check | Built-in evaluation tools | Simulate on thousands of past tickets |
| Pricing | Custom (undisclosed) | Transparent, from $239/mo |

To better understand the competitive landscape and the broader implications of platforms like Frontier, the following video provides an analysis of the major players pushing the boundaries of AI, including OpenAI and Anthropic.

A new frontier, but not for everyone

OpenAI Frontier is a powerful and visionary platform that lays the groundwork for a future where AI co-workers are an integral part of the enterprise landscape. It is an ambitious project that moves the industry forward.

However, it is a comprehensive solution designed for large companies with the technical teams and budgets required for a significant implementation.

For businesses focused on solving immediate problems, like managing high ticket volumes or improving agent efficiency, a more targeted solution may be a more direct starting point. The future of work may involve fleets of AI agents, and for many, that journey can begin with a single AI teammate.

To learn how an AI teammate can assist with customer service, you can see eesel AI in action.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.