OpenAI Assistants API: A guide to the 2025 deprecation and better alternatives

Kenneth Pangan

Katelin Teen

Last edited October 14, 2025

Expert Verified

When OpenAI first dropped its Assistants API, it felt like a pretty big deal for developers. The idea was simple enough: give us a way to build smart, stateful AI agents without having to juggle all the tricky bits like conversation history ourselves. A lot of us in the support and dev world were genuinely excited.

But like many shiny new tools, the reality turned out to be a bit more complicated. While the API certainly lowered the barrier to entry, it came with its own set of headaches, especially for anyone trying to build something that could handle real-world use. We’re talking about unpredictable costs that could spiral out of control, clunky performance, and a feeling that you weren't really in the driver's seat.

And now, there's a new development: OpenAI is officially deprecating the Assistants API. It's being replaced by a new "Responses API," with the old one getting switched off for good in 2026.

So, what does all this mean for you? In this guide, we'll walk through what the OpenAI Assistants API was, why it's on its way out, the problems developers kept running into, and what’s next. More importantly, we'll show you a more direct, stable, and powerful way to build the AI agents your business actually needs.

What was the OpenAI Assistants API?

The main point of the OpenAI Assistants API was to give developers a toolkit to build AI assistants right into their own apps. Before it came along, if you wanted a chatbot that could actually remember what you just talked about, you had to build that memory system from the ground up. The Assistants API promised to take care of that for you.

Its biggest selling point was that it was a "stateful" API. This just means it managed the conversation history (which it called "threads"), gave you access to pre-built tools like Code Interpreter and File Search (a basic take on Retrieval-Augmented Generation, or RAG), and let you call your own functions to connect to other tools.

Think of it as a starter kit for building a chatbot. It handed you some key components, like memory and tools, so you didn't have to start from zero. This made it incredibly fast to spin up a proof-of-concept, which is exactly why it got so much attention from developers and teams looking to experiment.

It was all built around three main ideas: Assistants, Threads, and Runs. Let's dig into what those are and the problems they created.

The core components and challenges of the OpenAI Assistants API

To really get why the API is being replaced, you first have to understand how it worked, and where it kept falling short.

Assistants, threads, and runs: The building blocks of the OpenAI Assistants API

The whole system was based on a simple, if a bit inflexible, structure:

- Assistant: This was the AI's brain. You’d set it up with a specific model (like GPT-4o), give it some instructions ("You are a friendly support agent for a shoe company"), and tell it which tools it was allowed to use.

- Thread: This was just a single conversation with a user. Every time a user sent a message, you’d add it to the thread. The API stored the entire back-and-forth here.

- Run: This was the action of telling the assistant to read the thread and come up with a response. You'd kick off a "run," wait for it to finish, and then grab the new message from the thread.
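To make the relationship between the three pieces concrete, here is a toy, in-memory sketch in Python. It is not the OpenAI SDK: the class names mirror the concepts above, while `add_message`, `execute`, and the `generate` callback are hypothetical stand-ins we made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Assistant:
    """The 'brain': a model, its instructions, and the tools it may use."""
    model: str
    instructions: str
    tools: List[str] = field(default_factory=list)

@dataclass
class Thread:
    """One conversation; every message is appended and kept."""
    messages: List[Dict[str, str]] = field(default_factory=list)

    def add_message(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

@dataclass
class Run:
    """One request for the assistant to read the thread and reply."""
    assistant: Assistant
    thread: Thread
    status: str = "queued"

    def execute(self, generate: Callable[[Assistant, List[Dict[str, str]]], str]) -> None:
        # `generate` is a hypothetical stand-in for the model call.
        self.status = "in_progress"
        reply = generate(self.assistant, self.thread.messages)
        self.thread.add_message("assistant", reply)
        self.status = "completed"

assistant = Assistant(
    model="gpt-4o",
    instructions="You are a friendly support agent for a shoe company",
)
thread = Thread()
thread.add_message("user", "Do you sell size 12?")

run = Run(assistant, thread)
run.execute(lambda a, msgs: f"({a.model}) You asked: {msgs[-1]['content']}")
print(run.status, len(thread.messages))
```

Note how the reply only exists once the run has finished and written it back into the thread. That design choice is where the waiting and cost issues described later come from.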

This all sounds logical on paper, but in practice, it created some serious roadblocks for anyone building a real application.

The hidden costs: How token usage gets out of control

The biggest, and by far the most painful, issue with the OpenAI Assistants API was its pricing. Every single time a user sent a message, the API would re-process the entire conversation thread, including the full, unabridged text of any files you'd uploaded.

Let's make that real. Imagine a customer uploads a 20-page PDF to ask a few questions about your product. If they ask five questions, you aren't just paying for the tokens in their five questions and the AI's five answers. You are paying to process that entire 20-page PDF five separate times, on top of a conversation history that keeps getting longer.
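Here is a rough back-of-the-envelope version of that scenario in Python. The numbers are assumptions chosen for illustration (about 500 tokens per page, 50 tokens per question, 200 tokens per answer), not measurements, and `cumulative_input_tokens` is a hypothetical helper that simply models "re-send the document plus the whole thread on every turn."

```python
def cumulative_input_tokens(doc_tokens: int, turns: int,
                            question_tokens: int, answer_tokens: int) -> int:
    """Total input tokens when every turn re-processes the document
    plus the full conversation so far."""
    total = 0
    history = 0  # tokens of earlier questions and answers in the thread
    for _ in range(turns):
        history += question_tokens     # the new question joins the thread
        total += doc_tokens + history  # whole document + whole thread is processed
        history += answer_tokens       # the answer joins the thread for the next turn
    return total

# Assumed figures, for illustration only
PAGES, TOKENS_PER_PAGE = 20, 500
doc_tokens = PAGES * TOKENS_PER_PAGE   # 10,000 tokens for the PDF

total = cumulative_input_tokens(doc_tokens, turns=5,
                                question_tokens=50, answer_tokens=200)
print(total)            # 52750
print(5 * doc_tokens)   # 50000 of those come from re-reading the PDF
```

Under these assumptions, the five questions add up to just 250 tokens, yet roughly 50,000 of the 52,750 input tokens come from processing the same PDF again and again.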

Developers found this out the hard way.

This made it almost impossible for businesses to predict their costs, turning what should have been a helpful tool into a financial guessing game. A much better way to go is a platform with predictable costs. For example, a solution like eesel AI offers transparent pricing based on your overall usage. You don't get hit with per-resolution fees that effectively penalize you for having longer, more helpful conversations with your customers.

The waiting game: Lack of streaming and reliance on polling

The other major headache was performance. When it launched, the Assistants API didn't support streaming, the tech that gives modern chatbots that live, word-by-word typing effect.

Instead, developers had to use a clunky workaround called polling. You’d start a "run" and then have to repeatedly ping the API, basically asking, "Are you done yet? How about now?" Only when the entire response was generated could you finally grab it and show it to the user.
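The polling pattern looks roughly like the sketch below. It is deliberately decoupled from any SDK: `poll_until_done` is a hypothetical helper, and `get_status` is whatever function fetches the current run status (in a real integration it would wrap the run-retrieval call). The example drives it with a simulated sequence of statuses instead of a live API.

```python
import time
from typing import Callable, Tuple

# Run states after which there is nothing left to wait for
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete"}

def poll_until_done(get_status: Callable[[], str],
                    interval_s: float = 1.0,
                    max_attempts: int = 60,
                    sleep: Callable[[float], None] = time.sleep) -> Tuple[str, int]:
    """Ask 'are you done yet?' until the run reaches a terminal state.

    Returns the final status and how many checks it took.
    """
    for attempt in range(1, max_attempts + 1):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status, attempt
        sleep(interval_s)  # the user sees nothing while we wait here
    raise TimeoutError(f"run still not finished after {max_attempts} checks")

# Simulated run: two status checks in limbo before the answer is ready
_fake_statuses = iter(["queued", "in_progress", "in_progress", "completed"])
status, attempts = poll_until_done(lambda: next(_fake_statuses),
                                   sleep=lambda _seconds: None)
print(status, attempts)  # completed 4
```

Every pass through that loop is a network round trip that returns no text for the user, which is why the experience feels so sluggish. A production version would also need to handle runs that pause to wait for tool outputs, which this sketch ignores.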

For a customer waiting for help, this feels slow and broken. They're just staring at a blank screen or a spinning icon, wondering if anything is happening. It's a world away from the instant, interactive feel of tools like ChatGPT. This was a known issue, but it was a deal-breaker for anyone trying to build a quality, real-time chat experience.

Modern AI support platforms are built for the kind of instant interaction customers now expect. The AI agents and chatbots from eesel AI are designed for streaming from the ground up, hiding all these technical details so you can just focus on giving your users a great experience.

A major shift: Why the OpenAI Assistants API is being replaced

Given all these challenges, it’s not too surprising that OpenAI decided to head back to the drawing board. The result is a complete overhaul and a new path forward for developers.

From the OpenAI Assistants API to Responses: What's changing?

According to OpenAI's official documentation, the Assistants API is now deprecated and will be completely shut down on August 26, 2026. It's being replaced by the new Responses API.

This is more than just a name change; it's a completely different way of building. Here’s a quick look at how the old concepts map to the new ones:

| Before ("Assistants" API) | Now ("Responses" API) | Why the Change? |
|---|---|---|
| "Assistants" | "Prompts" | Prompts are easier to manage, version-control through a dashboard, and keep separate from your app's code. |
| "Threads" | "Conversations" | Conversations are more flexible. They can store different kinds of "items" like messages and tool calls, not just plain text. |
| "Runs" | "Responses" | This moves to a much simpler request/response model. You send inputs, you get outputs back, and you have way more control. |

From OpenAI's view, the new model is simpler and gives developers more flexibility. But it also comes with a pretty big catch.

What this means for developers building on the OpenAI Assistants API

While the new API gives you more power, it also puts more work back on your plate. The original promise of the Assistants API, to handle all the conversation state stuff for you, is gone. Now, it's on you to manage the conversation history and orchestrate the back-and-forth for tool use.
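As a rough idea of what "managing the history yourself" can look like, here is a minimal sketch. It assumes a client object that exposes `responses.create(model=..., instructions=..., input=...)` and returns an object with an `output_text` attribute, which is our understanding of the OpenAI Python SDK's shape. `ManualConversation` and `ask` are hypothetical names, and error handling and tool calls are left out.

```python
from typing import Any, Dict, List

class ManualConversation:
    """Keeps the message history in your own code and re-sends it each turn."""

    def __init__(self, client: Any, model: str, instructions: str) -> None:
        self.client = client          # assumed to expose responses.create(...)
        self.model = model
        self.instructions = instructions
        self.history: List[Dict[str, str]] = []

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        response = self.client.responses.create(
            model=self.model,
            instructions=self.instructions,
            input=list(self.history),  # send a copy of everything said so far
        )
        reply = response.output_text
        # Storing the reply is now your job; skip it and the model forgets.
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

With a real client this would be used as `ManualConversation(client, model="gpt-4o", instructions="...")` followed by repeated `ask(...)` calls. The point is that storage, trimming, and persistence of `history` now live in your application.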

This creates a huge risk for any business that built a production system on the Assistants API. They're now looking at a major migration project just to keep their application from breaking. This kind of platform instability is a massive red flag for any company that relies on a third-party API for a core part of its business.

This is where having a layer of abstraction really shows its value. A platform like eesel AI acts as a stable buffer between your business and the constantly shifting world of foundational APIs. When OpenAI makes a breaking change like this, eesel AI handles the migration work behind the scenes. Your AI agents keep running smoothly without you ever having to rewrite a line of code, giving you stability on top of a volatile foundation.

A closer look at OpenAI Assistants API pricing and features

Even before the deprecation was announced, the cost and the limitations of the built-in tools were a common source of frustration for developers.

Understanding the pricing for OpenAI Assistants API tools

On top of the wild token costs, the Assistants API had separate fees for its special tools:

- Code Interpreter: Priced at $0.03 per session. A session lasts for an hour, which sounds simple but can get complicated and expensive to manage when you have hundreds or thousands of people talking to your bot at once.

- File Search (Storage): Priced at $0.10 per GB per day, with the first gigabyte free. This cost is for the vectorized data, not the original file size, making it yet another expense that's tough to predict.

When you added these fees to the runaway token costs from re-processing the conversation history, you ended up with a pricing model that was anything but business-friendly.
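To see how the two tool fees combine, here is a small estimate in Python using the prices listed above. The workload (1,000 Code Interpreter sessions and 5 GB of vectorized data held for 30 days) is an assumed example, and `tool_fees` is a hypothetical helper. Token costs are not included.

```python
CODE_INTERPRETER_PER_SESSION = 0.03  # USD per session
FILE_SEARCH_PER_GB_DAY = 0.10        # USD per GB of vector storage per day
FILE_SEARCH_FREE_GB = 1.0            # first gigabyte is free

def tool_fees(sessions: int, vector_gb: float, days: int) -> float:
    """Estimated tool fees in USD, excluding all token charges."""
    code_interpreter = sessions * CODE_INTERPRETER_PER_SESSION
    billable_gb = max(vector_gb - FILE_SEARCH_FREE_GB, 0.0)
    storage = billable_gb * FILE_SEARCH_PER_GB_DAY * days
    return round(code_interpreter + storage, 2)

# Assumed workload, for illustration only
print(tool_fees(sessions=1000, vector_gb=5, days=30))  # 42.0
```

The arithmetic itself is easy. The hard part is that both inputs are hard to forecast: session counts depend on how users behave, and the vectorized size is not the size of the files you uploaded.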

Limitations of tools like File Search

The File Search tool was supposed to be an easy way to give an assistant knowledge from your documents. In reality, it came with some serious limitations:

- You had no control over how your documents were chunked, embedded, or retrieved.

- It couldn't parse images inside your documents.

- It didn't support structured files like CSVs or JSON.

In practice, this meant you were stuck with a black box. If the retrieval quality was bad, there was nothing you could do to fix it. If your company's knowledge was sitting in spreadsheets, the tool was basically useless.

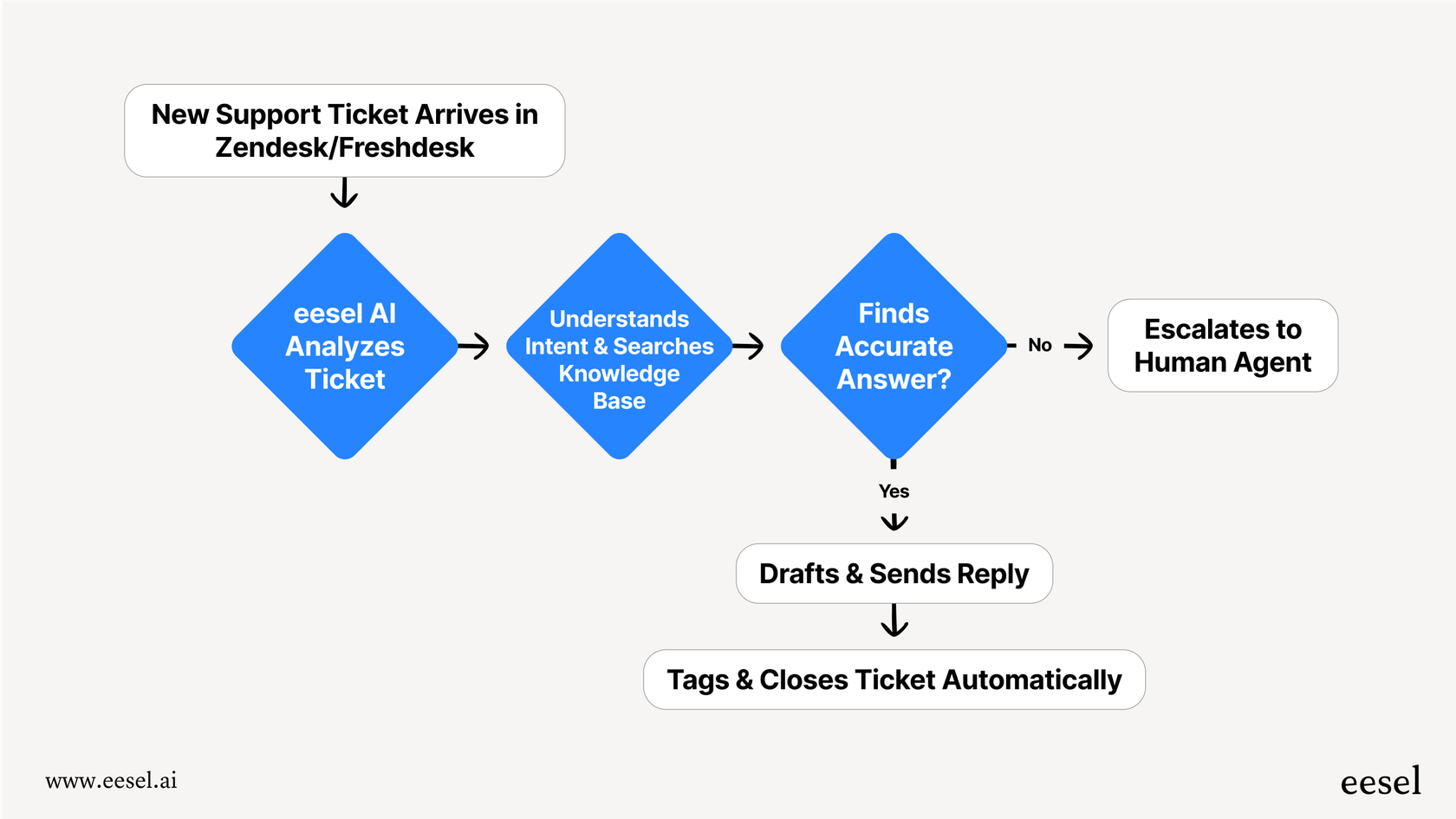

This is a huge contrast to a purpose-built solution. eesel AI is designed to handle the messy reality of business knowledge. It can be trained directly on your past support tickets from help desks like Zendesk or Freshdesk. It connects seamlessly to your wikis in Confluence and Google Docs. It can even pull product info from e-commerce platforms like Shopify, giving you a unified and fully controllable knowledge source for your AI.

The better way to build production-ready AI agents beyond the OpenAI Assistants API

Building directly on a low-level API like OpenAI's is great for a weekend project or a quick experiment. But when you're building an AI agent for a real business function like customer support, you need more than that. You need stability, control, and a clear path to getting a return on your investment.

This is where the idea of an "AI workflow engine" comes into play. It's not just about getting an answer from a model; it's about what you do with that answer. The right solution should have a few key things going for it:

- It should be incredibly simple to set up. You should be able to connect it to your helpdesk and knowledge sources in minutes, not months, without needing a whole team of developers.

- It should give you total workflow control. You should be able to define exactly which tickets the AI handles, what actions it can take (like tagging a ticket, updating a field, or escalating to a human), and its specific tone of voice.

- It should let you deploy without risk. It must let you simulate how the AI would perform on your past data. This lets you see your potential automation rate and build confidence before it ever talks to a real customer.

This is exactly why we built eesel AI. It's a powerful, self-serve workflow engine that solves these challenges. It lets you deploy reliable, customized AI agents without getting stuck managing API changes, unpredictable costs, and complicated state management.

Moving beyond the OpenAI Assistants API

The OpenAI Assistants API was an interesting experiment. It pointed toward a future where creating AI agents is more accessible, but its flaws in cost and performance, and now its retirement, highlight the risks of building important business tools on a moving target.

The shift to the new Responses API gives developers more power, but it also hands them more responsibility. For most businesses, that's not a trade-off worth making. The goal isn't to become an expert in managing OpenAI's ever-changing APIs; it's to solve a business problem.

For companies that need to deploy reliable, cost-effective, and highly customized AI for their support teams, a dedicated platform that handles all the underlying complexity is the smarter, more sustainable way to go.

Get started with a production-ready AI agent

Ready to move past the complexity and build an AI agent that actually delivers results? With eesel AI, you can connect your helpdesk and knowledge sources to launch a fully customizable AI agent in just a few minutes.

Simulate its performance on your own data and see your potential automation rate today.

Frequently asked questions

The OpenAI Assistants API is being replaced primarily due to issues like unpredictable costs, clunky performance (lack of streaming), and limited control for developers. OpenAI is moving to a new "Responses API" that offers more flexibility but shifts more state management responsibility back to developers.

The primary cost concern was that the API re-processed the entire conversation thread, including full document content, with every user message. This led to token usage scaling rapidly and unpredictably, making costs difficult for businesses to manage.

No, not at launch. The OpenAI Assistants API initially did not support real-time streaming, so developers had to use a polling mechanism, repeatedly asking the API for updates until the full response was generated, which led to slower user experiences.

Developers need to plan for a migration project as the OpenAI Assistants API will be completely shut down by August 26, 2026. They should familiarize themselves with the new Responses API and consider stable, abstracted platforms like eesel AI to mitigate future API changes.

Yes, the File Search tool had significant limitations, including no control over document chunking or embedding, inability to parse images, and lack of support for structured files like CSVs. This often resulted in poor retrieval quality with no developer recourse.

The new Responses API shifts from stateful management to a simpler request/response model. While it offers more control, developers are now responsible for managing conversation history and orchestrating tool use, which the old API handled.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.