The AI space is getting crowded, fast. Just when you think you’ve got a handle on the big names, another one like Together AI pops up. If you're scratching your head wondering what it is, who it's for, and if it's the magic bullet for your customer support team, you've landed in the right spot.

This is a straightforward, no-nonsense guide to the Together AI platform. We’ll cover what it does, its main features, how the pricing works, and who it’s really for. More importantly, we’ll highlight the massive difference between a powerful AI infrastructure platform for developers and a ready-to-use tool that can actually start solving your business problems today.

What exactly is Together AI?

Let’s get straight to it. Together AI is a cloud platform for developers and AI researchers who want to build, train, and run open-source generative AI models. They call themselves an "AI Acceleration Cloud," which is a fancy way of saying they provide the raw computing power (specifically, graphics processing units, or GPUs) and tools needed for heavy-duty AI work.

Founded in 2022 and backed by big names like Nvidia and General Catalyst, Together AI has quickly become a popular resource for the deeply technical crowd. It gives them access to a library of over 200 open-source models they can use as a foundation for their own projects.

Here's the best way to think about it: Together AI is like a massive, professional-grade workshop. It’s stocked with every high-tech tool, raw material, and piece of heavy machinery you could dream of. But here's the catch: you still have to be the master craftsperson who draws up the plans, assembles all the parts, and builds the final product from scratch. It's a world away from just being handed a finished, ready-to-use product.

Key features of the Together AI platform

So what’s under the hood? Together AI packs a lot of power, but all its features are aimed squarely at a technical audience with serious machine learning and software engineering chops.

A vast library of open-source models

One of the platform’s biggest selling points is its access to over 200 pre-trained models, including well-known ones like Meta's Llama, DeepSeek, and Mixtral. You can think of these as super-smart, "blank slate" AI brains. They’re incredibly capable but know absolutely nothing about your company, your products, or your customers out of the box. Developers grab these models and start building on top of them.

The challenge, of course, is that picking the right model for a job like customer support, then testing and managing it, takes a ton of expertise. This is a huge contrast to a tool like eesel AI, which completely removes the guesswork. It uses models that are already purpose-built for support and automatically connects to your company's knowledge from places like your help center, past tickets, and internal docs. The result is an AI that gives accurate, relevant answers from day one, no AI research team needed.

Together AI GPU clusters and cloud infrastructure

This is the real heart of Together AI's service. They rent out access to high-performance NVIDIA GPUs, which are the specialized computer chips you absolutely need to run large AI models without waiting forever. This lets companies tap into massive computing power without the cost and hassle of buying and maintaining their own server racks.

But even with a cloud provider, managing these GPU clusters isn't a walk in the park. It requires dedicated DevOps or MLOps engineers to handle all the configuration, scaling, and upkeep. That’s another layer of complexity and cost that many companies aren't ready for. On the other hand, eesel AI is a fully managed, self-serve platform. You never have to waste a single thought on servers or infrastructure. You can connect your helpdesk, whether it's Zendesk or Freshdesk, in a single click and be up and running in minutes, not months.

Together AI fine-tuning and inference APIs

Together AI gives developers the tools for two critical AI processes: fine-tuning and inference.

- Fine-tuning is how you take a general model and customize it with your own data. It’s like teaching the AI to become an expert on something specific, like your company's product catalog.

- Inference is the act of actually using that trained model to do something, like generate an answer to a customer's question.
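To make "inference" a bit more concrete, here's a minimal sketch of what a single request might look like from a developer's side. It assumes an OpenAI-style chat completions endpoint; the URL, the model ID, and the response shape mentioned afterwards are assumptions for illustration, so check the current API docs before relying on any of them.

```python
import json
import urllib.request

# Assumptions (not taken from this article): the endpoint URL and model ID.
API_URL = "https://api.together.xyz/v1/chat/completions"
MODEL = "meta-llama/Llama-3.3-70B-Instruct-Turbo"


def build_request(question: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for one customer question."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are a helpful support agent."},
            {"role": "user", "content": question},
        ],
        "max_tokens": 200,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With a real API key, `send(build_request("How do I reset my password?", key))` would return the model's reply as JSON, typically under `choices[0].message.content` on OpenAI-compatible APIs. Notice that nothing here knows about your products or policies yet; that part is still on you.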

These are powerful capabilities, but fine-tuning a model on a platform like Together AI is a full-blown engineering project. You have to gather, clean, and format thousands of data examples and then write code to manage the whole training process. eesel AI gets you an even deeper level of customization in a much simpler way. It learns directly from the knowledge you already have, like your old support tickets, help center articles, and internal wikis in Confluence or Google Docs, without needing a formal, painful training project. You just connect your sources, and it starts learning.
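For a feel of the "gather, clean, and format" step on the do-it-yourself path, here's a rough sketch that turns old tickets into training examples. It assumes a JSONL file of chat-style `messages`, which is a common convention for fine-tuning data; the ticket fields (`question`, `answer`) and the function name are hypothetical, and the exact format your platform expects may differ.

```python
import json


def tickets_to_jsonl(tickets, system_prompt):
    """Convert ticket dicts into JSONL chat examples, dropping incomplete ones."""
    lines = []
    for ticket in tickets:
        # Hypothetical field names; real helpdesk exports will look different.
        question = (ticket.get("question") or "").strip()
        answer = (ticket.get("answer") or "").strip()
        if not question or not answer:
            continue  # cleaning step: skip tickets missing either side
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)
```

Real projects need a lot more than this: removing personal data, deduplicating, filtering out bad agent replies, and holding back a test set. That extra work is where most of the engineering time goes.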

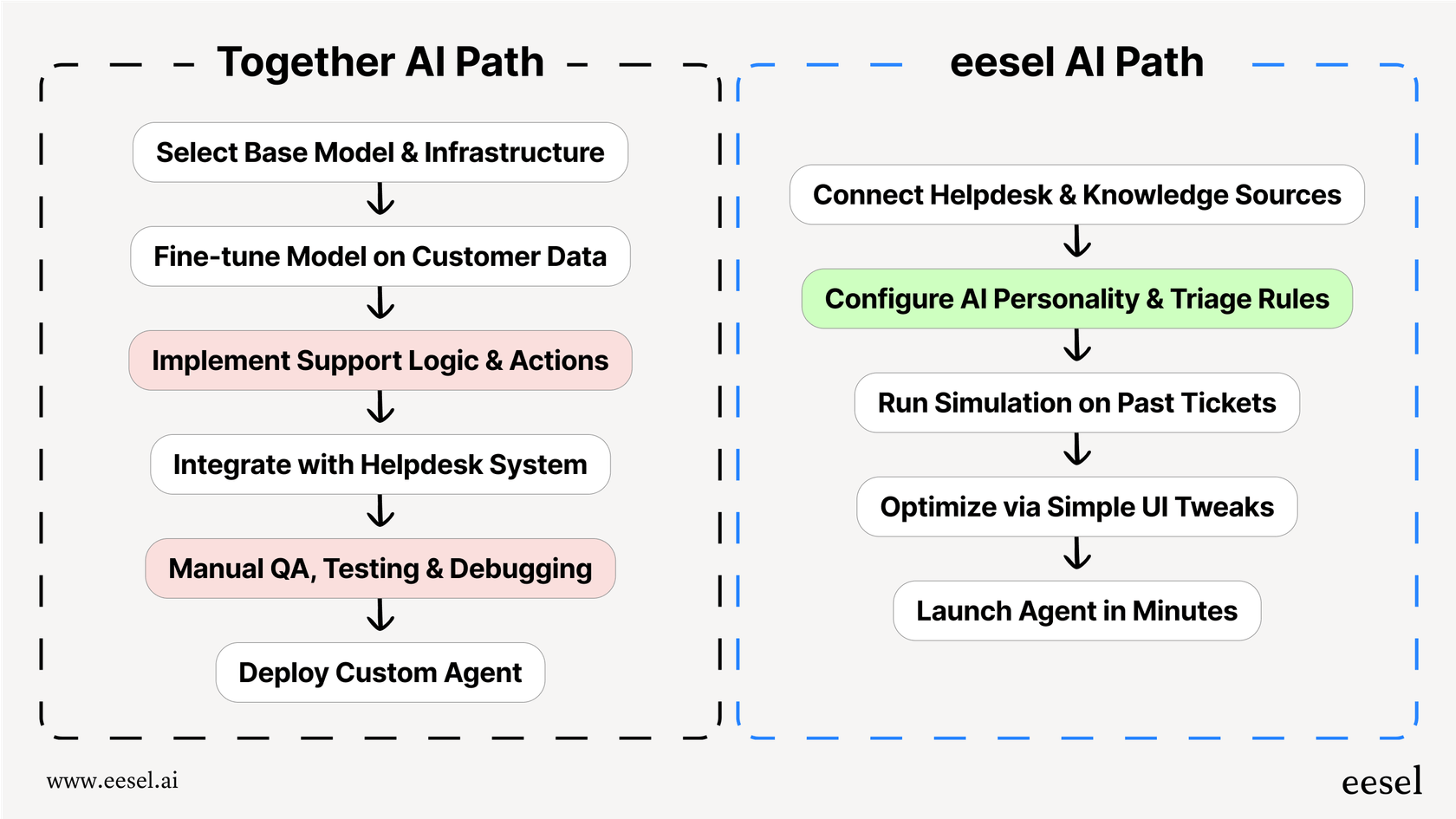

The journey to launching an AI support agent looks completely different depending on the path you take. With Together AI, you're signing up for a multi-step, resource-heavy development cycle. With eesel AI, you're just a few clicks away.

Who is Together AI for (and who is it not for)?

Figuring out who a platform is built for is everything. Together AI is a fantastic tool for a certain kind of user, but it's completely the wrong choice for many others, especially people in non-technical roles.

Who is the ideal Together AI user?

- AI Researchers and Developers: These are the folks building brand-new AI apps from the ground up. They need direct access to the underlying models and hardware to do their work.

- Companies with Dedicated AI/ML Teams: Big organizations that have their own in-house experts who can handle the technical heavy lifting of AI infrastructure and build custom software.

- Anyone Needing Raw GPU Power: If your main goal is just to rent high-performance computing for large-scale AI training or research, and you're not looking for a finished application, it’s a great fit.

Why Together AI is probably not for your support team

- The Technical Hurdle: This isn't a "switch it on and go" kind of solution. You can't just activate a support bot. It demands a complete development project, from picking a model all the way to deploying a functional application.

- The Long Wait: The time it takes to get a custom AI agent running with Together AI is measured in months, and it requires a serious investment in engineering hours and salaries.

- The "Blank Slate" Problem: Together AI gives you the engine, but you still have to build the entire car around it. That means you’re on the hook for creating all the support-specific logic yourself: how to triage tickets, when to escalate to a human, what the reports should look like, and how to integrate with tools like Shopify to check on an order.
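To give a sense of what "support-specific logic" means in practice, here's a deliberately tiny sketch of one escalation rule. Everything in it is hypothetical: the `Ticket` fields, the keyword list, and the confidence score (which you would also have to produce yourself somehow).

```python
from dataclasses import dataclass

# Hypothetical topics that should always go to a human.
ESCALATION_KEYWORDS = ("refund", "chargeback", "legal", "cancel my account")


@dataclass
class Ticket:
    subject: str
    body: str
    model_confidence: float  # hypothetical 0-1 score for the drafted answer


def route(ticket: Ticket, min_confidence: float = 0.7) -> str:
    """Decide whether the AI may reply or a human should take over."""
    text = f"{ticket.subject} {ticket.body}".lower()
    if any(keyword in text for keyword in ESCALATION_KEYWORDS):
        return "escalate_to_human"
    if ticket.model_confidence < min_confidence:
        return "escalate_to_human"
    return "auto_reply"
```

A production version would also need tagging, reporting, helpdesk and order-system integrations, and a way to test rule changes safely, and none of that ships with raw model access.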

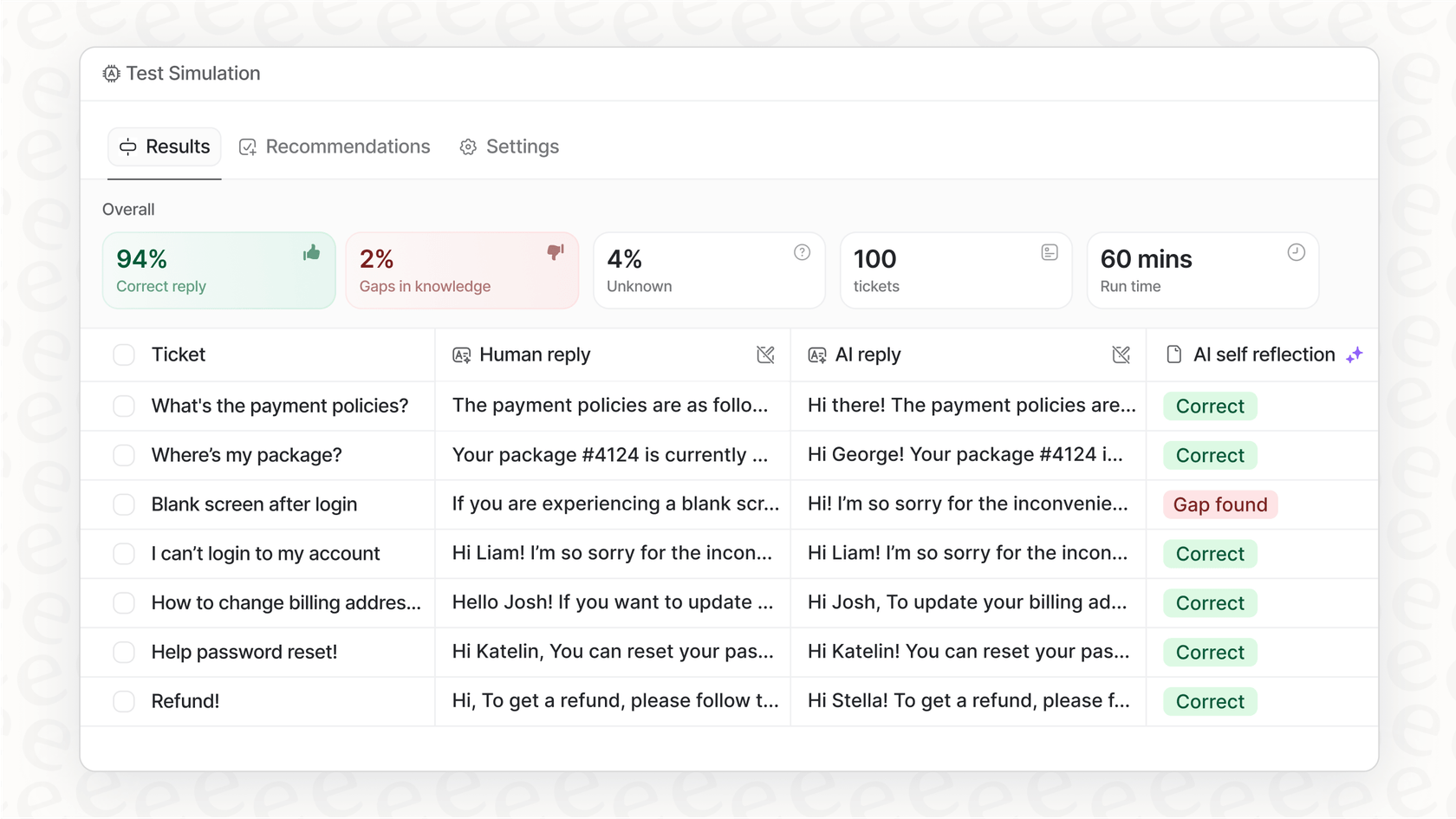

This is precisely where a purpose-built platform like eesel AI comes into its own. It was designed from day one for support and IT teams. Instead of a blank slate, you get a complete workflow engine. It includes a no-code prompt editor to shape your AI's personality, customizable actions to triage tickets or talk to external APIs, and a powerful simulation mode to test your setup on old tickets before your customers ever see it.

| Feature | Building with Together AI | Deploying with eesel AI |
|---|---|---|
| Time to Launch | Months | Minutes |
| Required Skills | AI/ML Engineers, DevOps | None (Self-serve) |
| Helpdesk Integration | Custom development required | 1-Click (Zendesk, Freshdesk, etc.) |
| Core Functionality | Raw model APIs | Pre-built agent, copilot, triage & chatbot |
| Testing | Manual QA & custom scripts | Built-in simulation on past tickets |
| Cost Structure | Unpredictable (compute + salaries) | Predictable, transparent subscription |

This video provides a clear overview of the Together AI cloud platform and how it's designed for building and running generative AI models.

Together AI pricing explained

Alright, let's talk money. Together AI’s pricing is all about "pay-as-you-go," broken down into a few different buckets. This is great for developers who want flexibility, but it can be a real headache for anyone trying to stick to a budget.

Together AI serverless inference pricing

This is what you pay to use the pre-trained models. You get charged by the "token," which is a small chunk of text, often a short word or a piece of a longer one. You pay for the tokens you send in (input) and the tokens the model sends back (output), and the rates differ a lot from model to model: in the table below, output on DeepSeek-R1 costs roughly eight times what it does on Llama 4 Maverick.

| Model | Input Price (per 1M tokens) | Output Price (per 1M tokens) |
|---|---|---|
| Llama 4 Maverick | $0.27 | $0.85 |
| Llama 3.1 405B Instruct Turbo | $3.50 | $3.50 |
| DeepSeek-R1 | $3.00 | $7.00 |
| Mixtral 8x7B Instruct v0.1 | $0.60 | $0.60 |
| Qwen 2.5 72B | $1.20 | $1.20 |
| Kimi K2 Instruct | $1.00 | $3.00 |
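To see how per-token pricing turns into a monthly bill, here's a small worked sketch using two rows from the table above. The traffic numbers (10,000 conversations a month, 1,500 input and 300 output tokens each) are made-up assumptions, so plug in your own.

```python
# (input $, output $) per 1M tokens, copied from the table above.
PRICES = {
    "Llama 4 Maverick": (0.27, 0.85),
    "DeepSeek-R1": (3.00, 7.00),
}


def inference_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost for a given number of input and output tokens."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical monthly traffic.
conversations = 10_000
monthly_input = conversations * 1_500   # 15M input tokens
monthly_output = conversations * 300    # 3M output tokens

for model in PRICES:
    cost = inference_cost(model, monthly_input, monthly_output)
    print(f"{model}: ${cost:.2f} per month")
# Llama 4 Maverick: $6.60 per month
# DeepSeek-R1: $66.00 per month
```

Same traffic, ten times the bill, purely from the choice of model. It's also a reminder that the bill scales linearly with usage, so a busy month costs proportionally more.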

Together AI fine-tuning pricing

This is the cost to train a model on your own data. The bill is based on the total number of tokens that get processed during the training job.

| Model Size | LoRA Fine-Tuning (per 1M tokens) | Full Fine-Tuning (per 1M tokens) |
|---|---|---|
| Up to 16B | $0.48 | $0.54 |
| 17B-69B | $1.50 | $1.65 |
| 70B-100B | $2.90 | $3.20 |
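Here's a rough way to estimate a training bill from the table above. It rests on two assumptions that aren't spelled out in this article: that the listed rates are per 1M tokens, and that "tokens processed" means dataset tokens multiplied by the number of epochs (passes over the data).

```python
# (largest model size in billions of parameters, LoRA $, full fine-tuning $),
# assumed to be per 1M tokens processed.
RATES = [
    (16, 0.48, 0.54),
    (69, 1.50, 1.65),
    (100, 2.90, 3.20),
]


def fine_tune_cost(model_size_b: float, dataset_tokens: int, epochs: int,
                   full: bool = False) -> float:
    """Estimate the dollar cost of one fine-tuning job."""
    processed = dataset_tokens * epochs  # assumption: every epoch is billed
    for max_size, lora_rate, full_rate in RATES:
        if model_size_b <= max_size:
            rate = full_rate if full else lora_rate
            return processed * rate / 1_000_000
    raise ValueError("model size is not covered by the pricing table")


# Hypothetical job: a 5M-token dataset trained for 3 epochs with LoRA.
print(f"8B model:  ${fine_tune_cost(8, 5_000_000, 3):.2f}")   # $7.20
print(f"70B model: ${fine_tune_cost(70, 5_000_000, 3):.2f}")  # $43.50
```

The compute line item is often the small part. Preparing those 5M tokens of clean training data is usually where the real cost sits.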

Together AI GPU cluster pricing

This is the cost to rent the hardware, billed by the hour for each GPU. Prices change based on the power of the chip and how long you commit to renting it.

| Hardware | Hourly Rate (per GPU) |
|---|---|
| NVIDIA HGX H100 Inference | $2.39 |
| NVIDIA HGX H100 SXM | $2.99 |
| NVIDIA HGX H200 | $3.79 |
| NVIDIA H100 (Reserved) | Starting at $1.75 |
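Hourly rates look small until you multiply them out. This sketch prices a hypothetical 8-GPU setup running around the clock for a 30-day month, using rates from the table above. The cluster size and duration are assumptions, and the reserved figure is only the "starting at" price, so treat it as a lower bound.

```python
# Per-GPU hourly rates copied from the table above.
H100_SXM_RATE = 2.99
H100_RESERVED_STARTING_RATE = 1.75


def cluster_cost(hourly_rate: float, gpus: int, hours: float) -> float:
    """Return the dollar cost of renting `gpus` GPUs for `hours` hours each."""
    return hourly_rate * gpus * hours


gpus = 8
hours = 24 * 30  # one 30-day month, always on

print(f"On-demand H100 SXM: ${cluster_cost(H100_SXM_RATE, gpus, hours):,.2f}")
# On-demand H100 SXM: $17,222.40
print(f"Reserved H100:      ${cluster_cost(H100_RESERVED_STARTING_RATE, gpus, hours):,.2f}")
# Reserved H100:      $10,080.00
```

Unlike serverless inference, this bill keeps running whether or not anyone is using the cluster, which is why idle GPUs are such a common source of surprise costs.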

And here’s the part they don’t put in big, bold letters: these prices are only for the raw infrastructure. They don’t include the six-figure salaries for the engineering team you’ll need to actually build and maintain something useful with it. This pay-as-you-go model can easily lead to surprise bills that skyrocket with usage.

That’s a totally different world from eesel AI's pricing, which is designed to be simple, transparent, and predictable. You get all the features, from the AI Agent to the AI Copilot and Triage, in one straightforward plan with no hidden per-resolution fees. This makes it easy to budget and scale your support automation without any nasty financial surprises.

Build with Together AI or deploy a ready-made solution?

So, what's the bottom line?

If you have a team of AI developers ready to build something custom from the ground up, Together AI is a fantastic and affordable platform. It gives them the fundamental building blocks they need to innovate.

But for most business teams, especially in customer support or IT, trying to build your own solution is a recipe for a long, expensive project that might never see the light of day. The goal is to solve a business problem, like overwhelming ticket queues or slow response times, not to launch a whole new software development department. For that, a purpose-built, integrated platform will deliver results faster, more reliably, and at a much lower total cost.

If your goal is to level up your customer support with AI, a solution like eesel AI is the clear answer. It delivers all the power of modern generative AI in a platform you can set up yourself in just a few minutes. You can connect your knowledge sources, automate resolutions, and give your agents superpowers, all without writing a single line of code.

Frequently asked questions

What is Together AI?

Together AI is a cloud platform providing computing power (GPUs) and tools for developers and AI researchers. Its primary offering is an "AI Acceleration Cloud" for building, training, and running open-source generative AI models.

Who is Together AI best suited for?

It's ideal for AI researchers, developers building custom AI applications, and companies with dedicated AI/ML teams needing raw GPU power. It is generally not suitable for non-technical business teams seeking ready-to-use solutions like customer support platforms.

How does building with Together AI compare to using a pre-built solution?

Using Together AI involves a multi-month development project requiring significant technical expertise and resources to build a custom solution from scratch. A pre-built solution like eesel AI, conversely, offers a complete, ready-to-use platform that can be deployed in minutes with no coding.

What are the main costs of using Together AI?

The main costs include serverless inference (billed per token for input/output), fine-tuning (billed per token processed during training), and GPU cluster rental (hourly rates for hardware). These costs do not include the salaries for the engineering teams required to build and maintain solutions.

Can a non-technical customer support team implement an AI solution with Together AI?

No, a non-technical customer support team cannot directly implement an AI solution using Together AI. It requires extensive AI/ML engineering and DevOps skills to develop, fine-tune, and deploy any functional application, making it unsuitable for direct business team usage.

Which models does Together AI provide access to?

Together AI provides access to a vast library of over 200 pre-trained open-source models. These include popular models like Meta's Llama, DeepSeek, and Mixtral, which developers can use as a foundation for their projects.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.