Remember when OpenAI first dropped those Sora demos? The internet pretty much broke. Seeing photorealistic videos pop up from just a few lines of text felt like we'd skipped ahead a few chapters in a sci-fi novel. Well, that hype is starting to become something real for developers, with the preview release of the Sora 2 API. It’s making the jump from a mind-blowing tech demo to a tool people can actually start building with.

So, let's cut through the noise. This is our honest review of what you can expect from the Sora 2 API. We’ll get into its core features, who it's actually for, what it'll cost you, and the very real limitations you need to know about before you dive in. Because while generative video is an exciting new playground, it's worth remembering that other kinds of AI are already solving some very practical business problems today, and we'll touch on that too.

What is OpenAI's Sora 2?

In a nutshell, Sora 2 is OpenAI's latest and greatest model for creating video and audio from text prompts or even just a single image. It's a big leap from the first version, building on that jaw-dropping visual quality with a few key improvements.

The official word from OpenAI is that the big new features are synchronized audio, better physics, and the ability to create several consistent shots in one go. The first Sora gave us silent movies; Sora 2 creates videos with dialogue, sound effects, and background noise that actually match what’s happening on screen. OpenAI is aiming for a "general-purpose simulator of the physical world," and this is a huge step in that direction.

Just to be clear, this is OpenAI's video tool. It has zero connection to other tech products with the same name, like the WebRTC server Sora from the Japanese company Shiguredo. And while Sora 2 is an incredibly powerful creative engine, it's only available through a technical API right now. That means you’ll need some coding skills to get it to do anything.

Core capabilities

Okay, so what can you actually do with the Sora 2 API? It’s a lot more than just typing a sentence and getting a video. The API gives developers a surprising amount of control over the final product.

Better physical realism and consistency

One of the biggest giveaways of early AI video was that things just felt… wrong. Objects would float weirdly, physics would take a vacation, and items would morph into something else for no reason. Sora 2 really works on fixing this. In its own demos, OpenAI shows a basketball missing a shot and realistically bouncing off the backboard instead of just sort of teleporting into the net.

This is a big deal for developers. It means you can create more believable product demos, architectural fly-throughs, or training simulations where the world acts like it's supposed to. The improved object permanence and cause-and-effect just make the videos feel more grounded and professional.

Synchronized audio and dialogue generation

This might be the most important update. The Sora 2 API can generate a complete soundscape for your video, from spoken dialogue and sound effects to ambient noise. If your prompt describes a busy café, you won't just see the people, you'll hear the murmur of conversation, clinking cups, and the hiss of the espresso machine.

Honestly, this is a massive time-saver. For a lot of projects, it completely gets rid of the need for a separate audio editing step. You can generate a short clip that’s ready to share, sound and all, straight from the API.

Finer control and better prompt-following

Sora 2 isn't just for one-off shots. The API lets you write detailed, multi-part prompts that spell out camera movements ("start wide, then dolly in on the character's face"), shot sequences, and specific visual styles. Whether you want something gritty and cinematic or a bright, anime-inspired look, you can steer the model with your words.

What's really key is that it's much better at keeping things consistent across those shots. If you describe a character in shot one, they're far more likely to look the same in shot two, right down to their clothes and surroundings. This finally opens the door to crafting short narratives and more complex stories that were basically impossible with older models.
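To make the multi-shot idea a bit more concrete, here's a minimal sketch of how you might assemble that kind of prompt in code before sending it anywhere. Everything in it is our own assumption for illustration: the `Shot` class, the `build_prompt` helper, and the "Style / Character / Shot N" layout are hypothetical and not a format OpenAI documents. It only builds a string and never calls the API.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    """One shot in a multi-shot prompt (hypothetical structure, for illustration only)."""
    description: str
    camera: str = ""  # optional camera direction, e.g. "start wide, then dolly in"


def build_prompt(style: str, character: str, shots: list[Shot]) -> str:
    """Join a shared style and character description with numbered shot lines.

    Repeating the same character description up front is our guess at a sensible
    way to encourage consistency across shots; it is not an official recipe.
    """
    if not shots:
        raise ValueError("at least one shot is required")
    lines = [f"Style: {style}", f"Character: {character}"]
    for number, shot in enumerate(shots, start=1):
        line = f"Shot {number}: {shot.description}"
        if shot.camera:
            line += f" Camera: {shot.camera}"
        lines.append(line)
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_prompt(
        style="gritty and cinematic",
        character="a courier in a red rain jacket",
        shots=[
            Shot("She waits at a crossing in the rain.",
                 camera="start wide, then dolly in on the character's face"),
            Shot("She crosses the street and enters a cafe."),
        ],
    )
    print(prompt)
```

Keeping the style and character in one place means you can tweak a single shot without accidentally rewording the parts you want to stay the same.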

Image-to-video and the "cameo" feature

The API isn't just for text-to-video. You can feed it a static image to get the ball rolling, bringing a photo or illustration to life.

Even more interesting is the "cameo" feature. This lets you insert a real person's face and voice into a generated scene. OpenAI seems to be treading carefully here, building it on a consent-first framework, which they detail in their guide to launching Sora responsibly. You have to verify your identity and get to decide who can use your cameo, giving you full control over your digital self. It’s a wildly personal way to create content, but it also shines a light on the safety tightrope that comes with this tech.

Practical use cases: Who is the API for?

With these features, it’s pretty clear the Sora 2 API is aimed at industries that live and breathe visual content.

- Filmmaking and entertainment: For filmmakers, Sora 2 could be an amazing pre-visualization tool. You can storyboard entire scenes, test out camera angles, and create moving concept art before you even think about rolling a real camera.

- Advertising and marketing: Agencies can now mock up video ad ideas in minutes instead of days. Wondering what a car commercial on a futuristic street would look like? Just prompt it. This helps teams brainstorm and iterate much faster for social media campaigns.

- E-learning and education: Creating dynamic explainer videos or historical simulations just got a lot easier. A teacher could generate a short animation to explain a tricky scientific concept without needing any animation software or skills.

It's important to draw a line here, though, between creative content generation and business process automation. Sora 2 is fantastic for making visuals, but it’s not built to manage your company’s internal workflows. For things like answering customer support tickets, handling IT requests, or helping employees find company info, you need a totally different kind of AI.

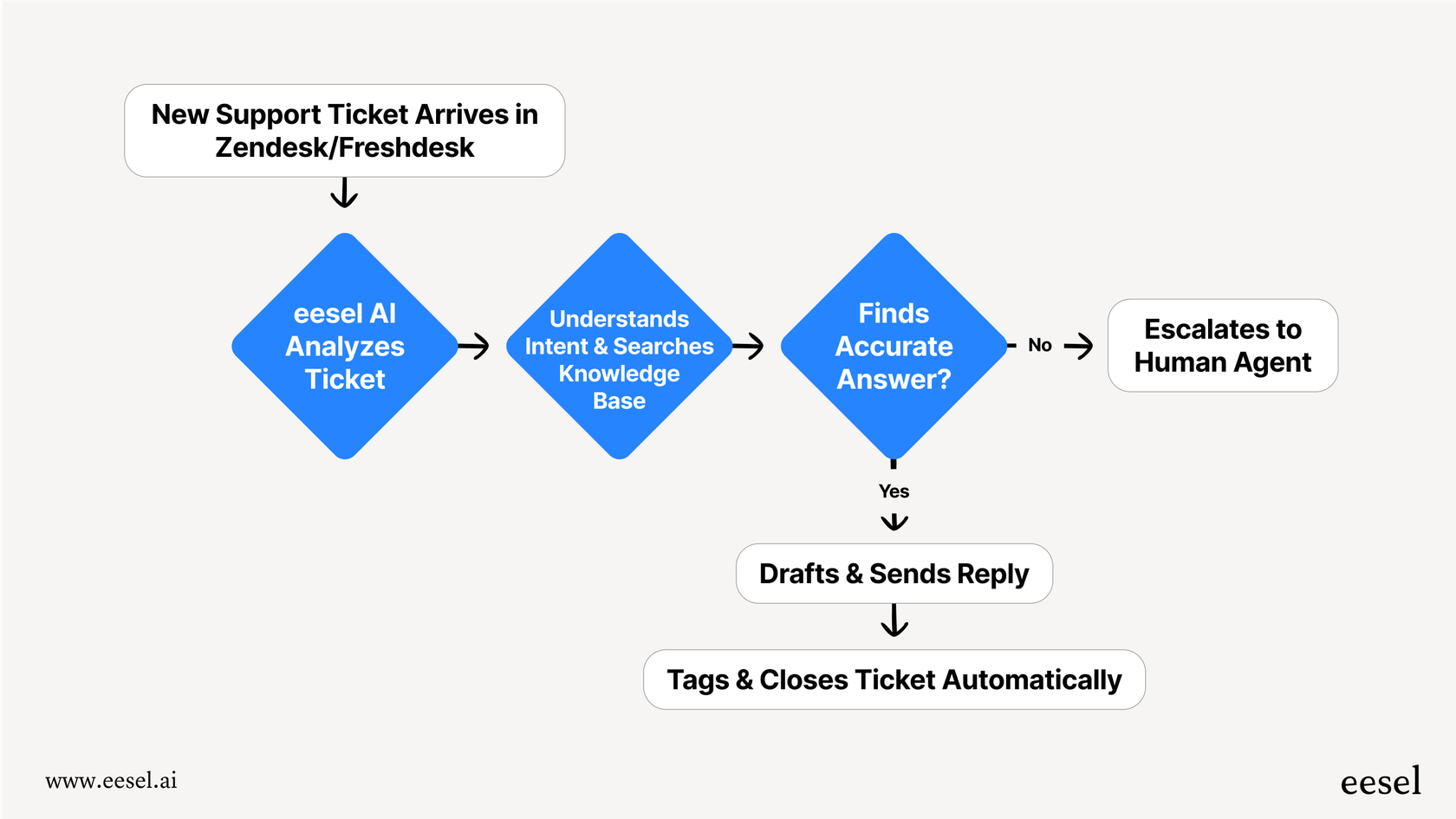

A tool like eesel AI is designed for exactly that. It offers an AI Agent that connects directly to your helpdesk (like Zendesk or Freshdesk) and learns from your past support tickets and knowledge base articles. It’s all about providing instant, accurate, text-based answers to automate your support, focusing on efficiency, not video production.

Sora 2 API pricing: What we learned from reviews

Alright, let's talk money. This kind of power doesn't come for free. OpenAI's pricing for the Sora 2 API depends on the model you use, the resolution, and the length of the video you're making. From what we've gathered from early reviews, here’s the breakdown:

| Model | Resolution | Cost per Second | Example: 10-Second Video |
|---|---|---|---|
| Sora 2 | 720p (1280x720 or 720x1280) | $0.10 | $1.00 |
| Sora 2 Pro | 720p (1280x720 or 720x1280) | $0.30 | $3.00 |
| Sora 2 Pro | High Res (1792x1024 or 1024x1792) | $0.50 | $5.00 |

This pay-per-second model means costs can stack up fast, especially if you're making high-res videos or trying out a lot of different prompts. A single minute of high-res video from the Sora 2 Pro model will set you back $30 (60 seconds at $0.50 per second), and every retry of a prompt is billed again. That makes it a tool for high-value creative work where you can justify the cost, not for high-volume, everyday business tasks.
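If you want to sanity-check a budget before you start prompting, the per-second math is easy to script. Here's a rough sketch that uses the rates from the table above. The `estimate_cost` helper and the dictionary keys are our own labels for illustration, not official API identifiers, and the rates themselves are the ones reported in early reviews, so treat the output as an estimate.

```python
# Per-second rates in USD, copied from the pricing table above.
# The (model, resolution) keys are illustrative labels, not official identifiers.
RATES_PER_SECOND = {
    ("sora-2", "720p"): 0.10,
    ("sora-2-pro", "720p"): 0.30,
    ("sora-2-pro", "high-res"): 0.50,
}


def estimate_cost(model: str, resolution: str, seconds: float, attempts: int = 1) -> float:
    """Estimate the USD cost of generating `attempts` clips, each `seconds` long."""
    if seconds <= 0 or attempts < 1:
        raise ValueError("seconds must be positive and attempts must be at least 1")
    try:
        rate = RATES_PER_SECOND[(model, resolution)]
    except KeyError:
        raise ValueError(f"no rate listed for {model!r} at {resolution!r}") from None
    # Round to cents so floating-point noise does not leak into the estimate.
    return round(rate * seconds * attempts, 2)


if __name__ == "__main__":
    print(estimate_cost("sora-2", "720p", 10))
    print(estimate_cost("sora-2-pro", "high-res", 60))
    print(estimate_cost("sora-2-pro", "720p", 10, attempts=8))
```

The second call reproduces the $30 figure for a minute of high-res Pro video. The `attempts` parameter is there because you'll rarely nail a prompt on the first try, and each retry costs the same as the first.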

This is a totally different world from the predictable pricing you see with automation platforms. For example, eesel AI's pricing is a flat monthly fee for a certain number of AI conversations. There are no hidden fees for "higher resolution answers," which means a business can scale up its automated support without getting a scary bill at the end of the month.

Limitations and challenges

The demos are incredible, but hands-on reviews of the Sora 2 API have uncovered a few practical limitations and headaches that developers should know about.

Technical flaws and quirks

The model isn't perfect. Not yet, anyway. A common problem that popped up in early tests is its struggle with generating readable text. If you ask for a sign in the background or words on a t-shirt, it often comes out as gibberish.

Character consistency can also still be an issue in longer videos. Small details, like a watch or an earring, might just vanish between shots. On top of that, generation times can be slow. A 20-second clip can take 3 to 5 minutes to render, which can really drag down a creative workflow when you're trying to iterate quickly.
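To put that wait in perspective, here's a quick back-of-the-envelope sketch of how render time adds up over a session. It assumes the 3 to 5 minute range reported above for a 20-second clip and that you run attempts one after another. The `waiting_time_range` helper is hypothetical, and real render times will vary.

```python
# Reported render time for one 20-second clip, in minutes (from early tests).
MIN_RENDER_MINUTES = 3
MAX_RENDER_MINUTES = 5


def waiting_time_range(attempts: int) -> tuple[int, int]:
    """Return (best case, worst case) total minutes of waiting for sequential attempts."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return attempts * MIN_RENDER_MINUTES, attempts * MAX_RENDER_MINUTES


if __name__ == "__main__":
    best, worst = waiting_time_range(10)
    print(f"10 attempts: between {best} and {worst} minutes of rendering")
```

Ten rounds of tweaking a 20-second clip works out to somewhere between 30 and 50 minutes of pure waiting, before you count the time spent rewriting prompts.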

Limited access and developer roadblocks

You can't just sign up and start playing with the Sora 2 API today. It’s currently in a limited preview, which means you have to apply for access and get in line. This is a pretty big hurdle for developers who want to experiment or build a proof-of-concept right now.

This is a far cry from truly self-serve platforms. With a tool like eesel AI, you can sign up and have a working AI agent hooked up to your helpdesk in a few minutes. There's no waitlist and no mandatory sales call. You can just get started on your own time.

Safety, IP, and ethical minefields

Using the Sora 2 API comes with a lot of responsibility. The potential for creating convincing deepfakes, the need to protect minors, and the murky legal questions around generating copyrighted characters are all very real issues.

OpenAI has built-in safety filters and that consent-based framework for its "cameo" feature, but at the end of the day, it's on the developer to make sure their app is being used ethically and legally. That adds a layer of governance and legal work that can be tricky to navigate. For a business function like customer support, that level of risk just isn't acceptable. A platform like eesel AI gives you complete control by letting you limit its knowledge to only your approved documents. You can even run a simulation on your past tickets before you go live, so you can be sure every answer is safe, on-brand, and accurate.

A powerful creative tool, but not for every job

There's no question that the Sora 2 API is a huge step forward for generative AI. For anyone in a creative field, it opens up possibilities that were pure science fiction a year ago. It’s an exciting, powerful tool that will absolutely change how visual content gets made.

But for a lot of businesses, its high cost, technical hurdles, limited access, and creative focus make it the wrong tool for solving day-to-day operational problems. It’s a specialized instrument for a very specific type of work. Companies that need a fast, reliable, and affordable AI solution to automate workflows should probably look at platforms designed for exactly those challenges.

If you want to see how AI can automate your support, cut down on ticket volume, and be live in minutes, try eesel AI for free.

Frequently asked questions

The main improvements include synchronized audio, better physical realism and consistency in generated videos, and enhanced prompt-following for finer control over shots and styles. It also introduces an image-to-video feature and the "cameo" option.

Pricing for Sora 2 is based on a pay-per-second model, varying by resolution and model type (Sora 2 vs. Sora 2 Pro). This means costs can accumulate quickly, especially for longer, high-resolution videos, making it suitable for high-value creative work.

Yes, common issues include difficulty generating readable text, potential inconsistencies in character details over longer videos, and slow rendering times. A 20-second clip can take 3 to 5 minutes to generate.

It's best suited for creative industries like filmmaking (pre-visualization), advertising (video ad mock-ups), and e-learning (dynamic explainer videos). It excels at generating visual content rather than automating business processes.

Currently, access is limited to a preview program. Developers must apply and get approved, which means there's a waitlist and it's not immediately available for self-serve experimentation.

Yes, the blog highlights concerns around deepfakes, IP rights, and protecting minors. OpenAI includes safety filters and a consent-based framework for features like "cameo" to address these issues.

Sora 2 is designed for creative content generation and visual storytelling, whereas tools like eesel AI focus on automating business processes such as customer support or internal knowledge retrieval. They serve fundamentally different purposes and have distinct pricing models.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.