It seems like everyone is talking about OpenAI's release of Sora 2. The buzz is that it's the "GPT-3.5 moment for video," and honestly, the leap in quality and control is pretty wild. But after the initial "wow" factor, the practical question for developers and businesses pops up: how do we actually use this thing?

If you're thinking about building apps or workflows with this new model, you're in the right spot. This guide will walk you through what Sora 2 is, what it can do, and what it really takes to access it through an API today. We'll also get into the pricing you can expect and the limitations you should probably know about.

While a full-blown public API from OpenAI is still on the horizon, you don't have to just wait around. There are a few clever ways to start building with Sora 2's power right now.

What is Sora 2?

At its core, Sora 2 is OpenAI’s newest and most capable text-to-video model. It's a huge step up from the original Sora, with videos that feel much closer to real-world footage. The most impressive part? It can create clips with synchronized audio, like dialogue and sound effects, that actually match what's happening on screen.

OpenAI is rolling this out through a new standalone iOS app called "Sora" and has confirmed API access is on the roadmap. This is a pretty clear sign they see Sora 2 as more than a creative toy; it’s meant to be a platform for a new wave of media.

One of the most talked-about additions is "Cameos," a feature that lets you drop your own face and voice into the videos you create. It's not just a party trick, either. It’s built with user consent in mind, giving you full control over your digital likeness.

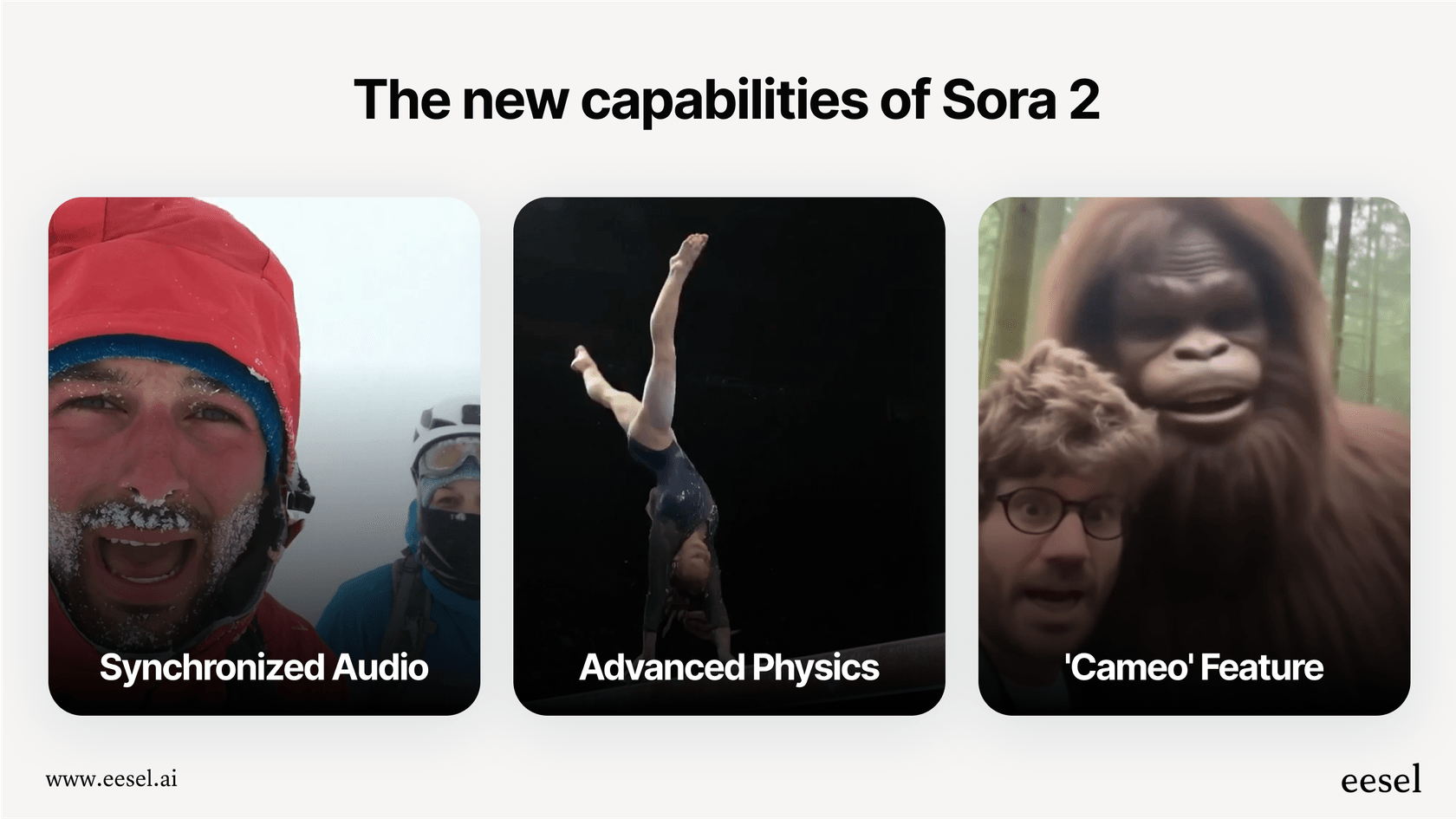

Core features of Sora 2

Sora 2 isn't just a small update; it's a different beast altogether in how it understands and mimics the real world. Let's dig into the features that make it so impressive.

Advanced world simulation and physics

Getting physics right has always been a huge headache for video generation models. Objects would often do weird things, like morphing into something else or just teleporting across the screen. Sora 2 seems to have a much better grasp of how things work in the real world.

OpenAI shared a great example: if you prompted an older model to show a basketball player missing a shot, the ball might just decide to go in the hoop anyway. With Sora 2, if the player misses, the ball will realistically bounce off the backboard. It gets concepts like cause and effect, momentum, and even how things float in water, making its videos far more believable.

Synchronized audio and dialogue

This is where things get really interesting. The original Sora, and most of its rivals, basically made silent movies. Sora 2 generates the whole audio-visual package. It can whip up complex background noises, specific sound effects, and even dialogue that syncs up with a character's lip movements. This single ability turns it from a simple animation tool into something you could actually use for short-form storytelling.

Enhanced controllability and the 'Cameo' feature

Sora 2 gives you way more control over the final product. You can give it detailed instructions for camera moves, shot composition, and visual styles, whether you want a hyper-realistic cinematic look or something more like stylized anime.
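
To make that kind of direction repeatable, it can help to keep the subject, camera, composition, and style as separate fields and join them into one prompt. The sketch below is only an illustration: `ShotSpec` and its labels are hypothetical names, not an official Sora 2 prompt format, and the model simply receives the final text.

```python
from dataclasses import dataclass


@dataclass
class ShotSpec:
    """Hypothetical container for the parts of a video prompt."""
    subject: str
    camera: str = ""
    composition: str = ""
    style: str = ""

    def to_prompt(self) -> str:
        # Start with the subject, then append only the fields that were filled in.
        parts = [self.subject]
        if self.camera:
            parts.append(f"Camera: {self.camera}")
        if self.composition:
            parts.append(f"Composition: {self.composition}")
        if self.style:
            parts.append(f"Style: {self.style}")
        # Strip trailing periods so the joined text doesn't end up with "..".
        return ". ".join(p.rstrip(".") for p in parts) + "."


shot = ShotSpec(
    subject="A golden retriever sitting on a rainy street",
    camera="slow dolly-in",
    style="cinematic",
)
print(shot.to_prompt())
```

Keeping the fields apart makes it easy to swap one camera move or style for another while the rest of the scene stays fixed.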

The "Cameo" feature is where this control gets personal. You can record a short video and audio clip of yourself and then stick that likeness into any scene you generate. The model does a surprisingly good job of capturing your look and voice. OpenAI also built a solid consent-based system around this, so you’re the one who decides who can use your cameo, and you can pull the plug on it at any time.

How to get API access to Sora 2

Alright, the big question: how do you actually get API access to Sora 2? As of late 2025, OpenAI hasn't just opened the floodgates with a public API you can plug and play. But that doesn’t mean you're completely stuck. There are three main ways developers are getting access right now.

The Sora app: A human-in-the-loop approach

The most straightforward way in is through the invite-only iOS app and the sora.com website. For a lot of teams, this effectively becomes a "human-in-the-loop" API. Basically, a person on your team manually types in prompts, generates the video, and downloads the file to use in your project. It’s not automated, sure, but for things like prototyping, storyboarding, or creating a few specific assets, it's a perfectly good way to get started without writing a single line of code. It's not glamorous, but it works.

Through the Azure OpenAI preview

For larger companies, Microsoft is offering a limited preview of Sora 2 on its Azure AI platform. It isn't a synchronous API that hands back a video in the response. Instead, it works asynchronously: you submit a generation request, and your system then polls a status endpoint until the job finishes and the video is ready to download. Access is pretty restricted and depends on your Azure setup, but it’s the most "official" way for enterprise customers to integrate it. You can find the nitty-gritty details in the Microsoft Learn documentation.
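
The submit-then-poll pattern can be sketched in a few lines. This is a generic illustration, not Azure's actual client: `wait_for_job`, the `fetch_status` callable, and the status names are all assumptions, so swap in whatever the Microsoft Learn documentation specifies for the real endpoint and job states.

```python
import time
from typing import Callable, Dict

# Assumed terminal status names; check the provider's docs for the real ones.
TERMINAL_STATES = {"succeeded", "failed", "cancelled"}


def wait_for_job(
    fetch_status: Callable[[], Dict],
    interval_s: float = 5.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict:
    """Call fetch_status() repeatedly until the job is finished or time runs out."""
    deadline = clock() + timeout_s
    while True:
        job = fetch_status()
        if job.get("status") in TERMINAL_STATES:
            return job
        if clock() >= deadline:
            raise TimeoutError(f"job still {job.get('status')!r} after {timeout_s}s")
        sleep(interval_s)


# Stand-in for real HTTP calls: three canned responses from a pretend status endpoint.
fake_responses = iter([
    {"status": "queued"},
    {"status": "running"},
    {"status": "succeeded", "id": "job-123"},
])
job = wait_for_job(lambda: next(fake_responses), interval_s=0, timeout_s=5)
print(job["status"])
```

In a real integration, `fetch_status` would wrap an authenticated GET to the job's status URL. Passing `sleep` and `clock` in as parameters keeps the loop easy to test without actually waiting.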

Using third-party API providers

Seeing the huge demand from developers, a few third-party platforms have jumped in to offer early API access to Sora 2. Services like Replicate and CometAPI have wrapped the model in their own APIs, giving you a clean, programmatic way to generate videos. For many startups and individual developers, this is the most practical option available today.

Getting started is usually pretty simple. For instance, with Replicate's Python client, you can generate a video in just a few lines of code. It's surprisingly straightforward:

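```python
import replicate  # the client reads your key from the REPLICATE_API_TOKEN environment variable

# Submit the prompt and wait for the generation to finish
output = replicate.run(
    "openai/sora-2",
    input={
        "prompt": "A dramatic shot of a golden retriever pondering its life choices on a rainy day, cinematic style.",
        "seconds": 10,
        "aspect_ratio": "landscape",
    },
)

# Show what the client returned for the generated video
print(output)
```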
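
Printing the result only shows you a reference to the video, so the next step is usually saving it to disk. Here is a minimal sketch of that step. `save_video` is a hypothetical helper, and it assumes the client returns either a file-like object with a `read()` method (as recent Replicate client versions appear to do) or a plain URL string, possibly wrapped in a list.

```python
import urllib.request
from pathlib import Path


def save_video(output, dest="sora_clip.mp4"):
    """Write a generation result to disk and return the path (hypothetical helper)."""
    # Some models return a list of outputs; take the first item if so.
    if isinstance(output, (list, tuple)):
        output = output[0]

    path = Path(dest)
    if hasattr(output, "read"):
        # File-like result: read the bytes directly.
        path.write_bytes(output.read())
    else:
        # Otherwise assume the result is a URL and download it.
        with urllib.request.urlopen(str(output)) as resp:
            path.write_bytes(resp.read())
    return path
```

You would call it as `save_video(output)` right after `replicate.run(...)` returns.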

Sora 2 API pricing and availability

Let's talk money. Cost is always a big piece of the puzzle when you're thinking about using a new tool. Here’s a look at how Sora 2's pricing shakes out depending on how you access it.

OpenAI's direct pricing

- Sora 2 (Standard): If you get an invite, you can use the base model for free through the Sora app and sora.com, and the usage limits are pretty generous.
- Sora 2 Pro: If you're a ChatGPT Pro subscriber, you get access to a higher-quality "Pro" version of the model on sora.com, and it's included in your subscription fee.

OpenAI has hinted that they might add paid plans for heavy users if their servers start getting overwhelmed, but for now, this is the deal.

Third-party API pricing

The platforms that give you API access charge for it, usually based on the length of the video you generate. This makes the costs predictable and easy to manage for developers.

| Platform | Model | Price | Notes |
|---|---|---|---|
| OpenAI | Sora 2 Pro | Included with ChatGPT Pro | Access via sora.com |
| OpenAI | Sora 2 | Free (with limits) | Access via Sora app |
| Replicate | Sora 2 | $0.10 / second | Programmatic API access |
| CometAPI | Sora 2 | $0.16 / second | Aggregator API access |
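
Because billing is per second, budgeting is simple multiplication. The sketch below uses the per-second prices from the table. The `estimate_cost` helper is just an illustration, and the rates are a snapshot that providers may change.

```python
# Per-second prices in USD, copied from the table; treat them as a snapshot.
PRICE_PER_SECOND = {
    "replicate": 0.10,
    "cometapi": 0.16,
}


def estimate_cost(provider: str, seconds: float, clips: int = 1) -> float:
    """Estimated spend in USD for `clips` videos of `seconds` length each."""
    rate = PRICE_PER_SECOND[provider.lower()]
    return round(rate * seconds * clips, 2)


print(estimate_cost("replicate", 10))           # 1.0
print(estimate_cost("cometapi", 10, clips=50))  # 80.0
```

So a single 10-second clip costs about a dollar on Replicate, while a batch of fifty 10-second clips on CometAPI comes to roughly $80.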

Safety, limitations, and responsible use

With a tool this powerful, you have to talk about the responsible side of things. OpenAI has been pretty forward about building in safety features from the get-go, but it's still important to know what it can and can't do.

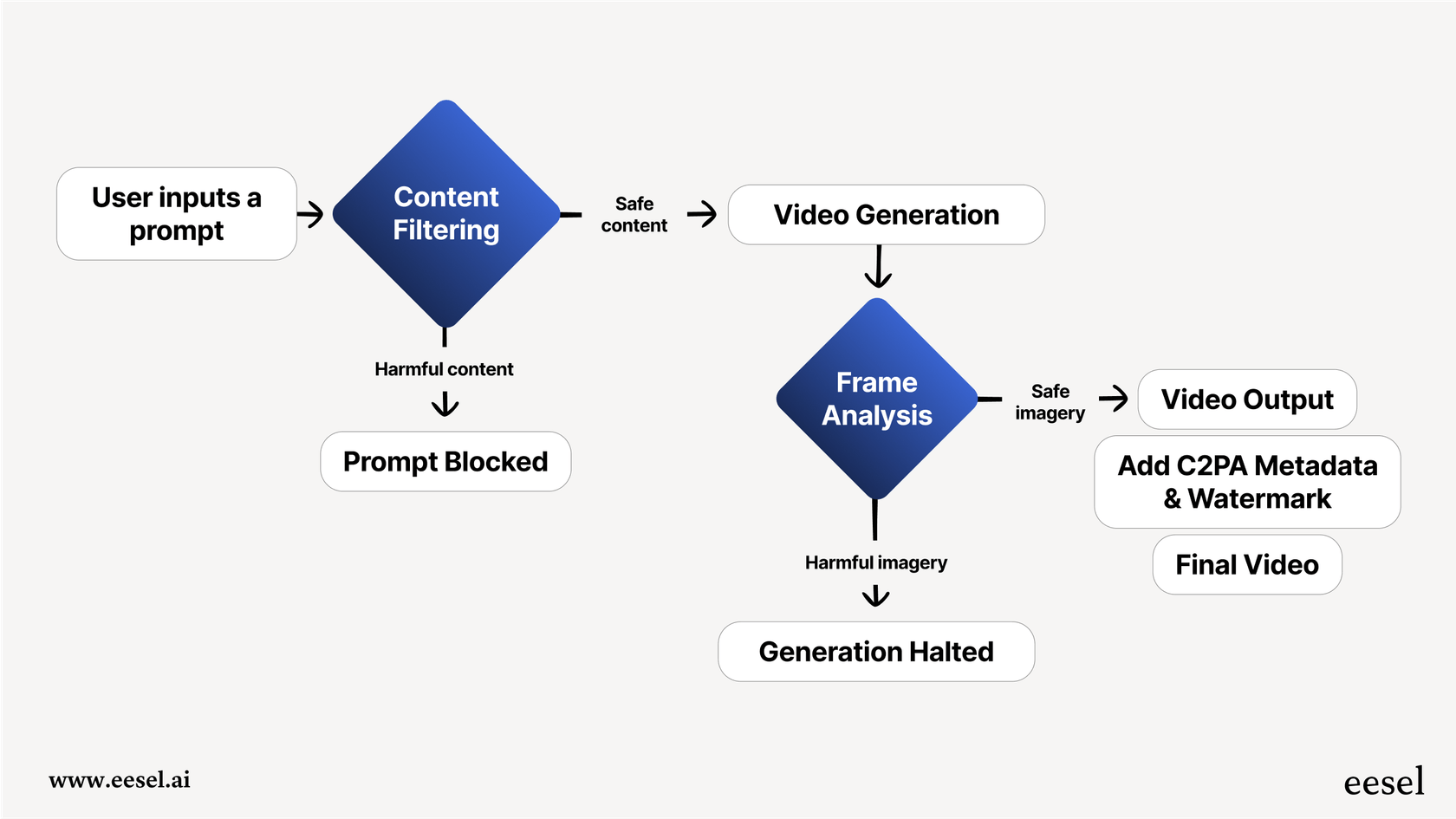

Built-in safety and provenance features

OpenAI has put a few layers of protection in place to help people use Sora 2 responsibly and tell the difference between AI content and real videos.

- C2PA Metadata: Every video made with Sora 2 comes with C2PA metadata. Think of it as a digital birth certificate: a cryptographically signed record of where the video came from, which lets compatible tools confirm it was generated by AI.
- Visible Watermarks: For now, all videos have a visible watermark, so it’s obvious where they came from.
- Content Filtering: The system automatically scans both text prompts and the video frames it creates to block harmful stuff like graphic violence, explicit content, and hateful imagery.
- Consent-Based Likeness: The "Cameo" feature won't let you create a video of someone unless they've given you explicit permission, which helps prevent misuse.

Known limitations and potential risks

Sora 2 is amazing, but it’s not perfect. The model still messes up sometimes, creating videos where the physics are a bit off or objects strangely change from one moment to the next.

On a bigger scale, the potential for misuse is a real concern. The ability to create such realistic videos opens the door for convincing deepfakes or non-consensual content. OpenAI's safety systems are there to fight this, but as the tech gets better, everyone using it will need to stay vigilant.

The future of Sora 2 and practical business AI

Sora 2 is genuinely exciting and has set a new bar for AI video. It has the potential to shake up creative fields from filmmaking to marketing. But let's be real: for most businesses, integrating a specialized tool like Sora 2 through an API is a heavy lift. It requires a lot of engineering effort and is aimed at a very specific creative niche.

This is a world away from the immediate, practical AI needs that most businesses are facing, like getting a handle on customer support. While creative teams are playing with the future of video, support and IT teams can get real value right now from a platform built for their daily grind.

That's where a tool like eesel AI fits in. It’s a dead-simple, self-serve platform that connects to your existing help desks like Zendesk and Freshdesk in minutes. Instead of wrestling with an experimental API, you can just connect your knowledge sources, let an AI agent learn from your past support tickets, and start automating answers almost immediately. eesel AI even lets you run a simulation on thousands of your past tickets, so you get a clear, data-driven look at how well it will work before it ever talks to a real customer.

Ready to put AI to work in your business without a massive engineering project? While the creative world explores what's next with Sora 2, you can solve your customer support headaches today. Start your free eesel AI trial and see for yourself how quickly you can automate support and give your team a boost.

Frequently asked questions

How can developers access Sora 2 through an API right now?

You can access Sora 2 programmatically through the Azure OpenAI preview for enterprise customers, or via third-party API providers like Replicate and CometAPI. The invite-only Sora app also offers a "human-in-the-loop" workflow.

What can Sora 2 do?

Sora 2 generates highly realistic videos with advanced world simulation, accurate physics, and synchronized audio, including dialogue. It also offers more control over camera moves and visual styles, plus a "Cameo" feature for personalized content.

How much does Sora 2 cost?

OpenAI offers free access via the Sora app and sora.com (with generous limits), and a "Pro" version is included with a ChatGPT Pro subscription. Third-party API providers typically charge per second of video generated, with examples ranging from $0.10 to $0.16 per second.

What are Sora 2's limitations, and what safety measures are in place?

Sora 2 can occasionally make errors with physics or object consistency. OpenAI has implemented C2PA metadata, visible watermarks, content filtering, and consent-based likeness for "Cameos" to encourage responsible use and help identify AI-generated content.

Is there an official public Sora 2 API from OpenAI?

While OpenAI has confirmed that API access is on the roadmap, a full public API for Sora 2 isn't generally available as of late 2025. Current programmatic access is primarily through limited previews or third-party aggregators.

Can I put my own likeness into Sora 2 videos?

Yes, the "Cameo" feature in Sora 2 lets you record a short video and audio clip of yourself and embed your likeness into generated scenes. It includes a consent-based system that gives you full control over your digital representation.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.