So, you're trying to get your ServiceNow AI agent to do more than just answer basic questions. You want it to handle real, multi-step tasks, and that's where you run into subflows. If you're like many developers and admins, you’ve probably found the process to be a bit of a maze, with results that can be, well, unpredictable.

You're not alone. The idea of an AI agent that can automatically resolve complex issues is fantastic, but the reality of building that automation in ServiceNow can be a bit of a headache.

This guide is here to help clear things up. We'll walk through what ServiceNow AI Agent Subflows are, how they actually work, their biggest limitations, and a much more straightforward way to get powerful ITSM automation done without all the heavy lifting.

What are ServiceNow AI Agent Subflows?

Alright, let's quickly get on the same page with the terminology. It's actually pretty straightforward when you look at the pieces.

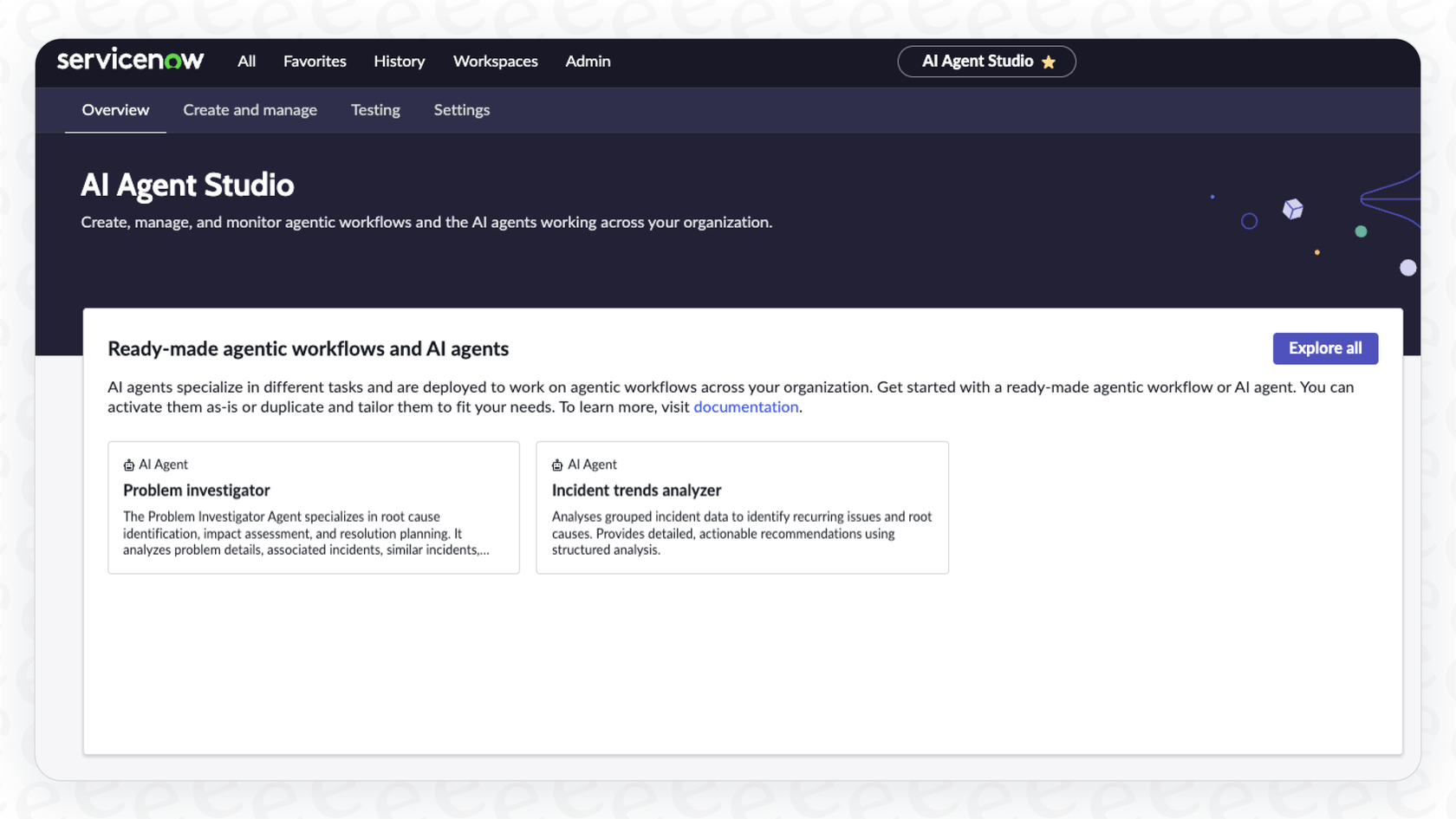

A ServiceNow AI Agent is the bot that lives inside the Now Platform. It's powered by Now Assist and is built to handle tasks on its own, whether that's answering a user's question or starting a whole workflow. Think of it as a digital teammate ready to pick up a task.

A Subflow is a reusable sequence of actions you create in ServiceNow's Workflow Studio or Flow Designer. It’s like a mini-playbook for a specific job. Instead of building the same logic from scratch every time, you can just call on this pre-built sequence when you need it.

Put them together, and ServiceNow AI Agent Subflows are basically a "tool" you hand to your AI agent. When the agent understands that a user's request requires a complex action, it triggers the right subflow to execute the task. This is what turns your agent from a chatbot into a real doer. Instead of just explaining how to create a knowledge base article, it can actually go and create one for you.
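To make the "tool" idea concrete, here is a minimal Python sketch. It is not ServiceNow code: real subflows are built in Workflow Studio or Flow Designer and scripted in server-side JavaScript. Every name below (`SubflowTool`, `create_kb_draft`, `explain_kb_steps`, the intent strings) is hypothetical. The sketch also assumes the agent's language model has already reduced the user's request to an intent label. A registry then maps that label to the subflow that does the work, which is the difference between a chatbot that explains and an agent that acts.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SubflowTool:
    """A named, reusable action the agent may invoke (hypothetical stand-in)."""
    name: str
    description: str
    run: Callable[[dict], dict]


def create_kb_draft(inputs: dict) -> dict:
    # Stand-in for a subflow that would create a draft knowledge article.
    return {"status": "draft_created", "title": inputs["title"]}


def explain_kb_steps(inputs: dict) -> dict:
    # Stand-in for the "chatbot" path: it only answers, nothing is created.
    return {"status": "answered", "text": "Here is how to create a KB article..."}


# The tools "handed" to the agent, keyed by the intent each one serves.
TOOLS: Dict[str, SubflowTool] = {
    "create_kb_article": SubflowTool(
        "create_kb_article", "Create a draft KB article", create_kb_draft
    ),
    "explain_kb_process": SubflowTool(
        "explain_kb_process", "Explain how to write a KB article", explain_kb_steps
    ),
}


def handle_request(intent: str, inputs: dict) -> dict:
    """Run the tool for an intent the agent has already recognized."""
    tool = TOOLS.get(intent)
    if tool is None:
        return {"status": "no_tool", "intent": intent}
    return tool.run(inputs)
```

For example, `handle_request("create_kb_article", {"title": "VPN reset"})` returns `{"status": "draft_created", "title": "VPN reset"}`. An intent with no registered tool falls back to a `"no_tool"` result instead of acting.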

How ServiceNow AI Agent Subflows work

If you've ever felt like setting up a subflow is overly complicated, you're not imagining things. Let's peel back the layers to see why. The whole process works a bit like a game of telephone inside a big company, with multiple handoffs before the real work gets done.

The multi-step orchestration process

Here’s a typical journey from a user’s request to a finished action:

- The AI Agent gets the request: A user asks for something in the Now Assist panel. The AI Agent figures out what they want and realizes it needs to run a specific task, which has been assigned to it as a "Script Tool" or "Subflow Tool" in the AI Agent Studio.

- The call to the Gateway: The agent’s script usually doesn't trigger the final action directly. Instead, it calls a generic "Integration Gateway" subflow. This gateway acts as a central router, taking in the request and figuring out where to send it next.

- The check-in with the Decision Table: The gateway then consults a Decision Table. This table is a set of rules that maps different kinds of requests to the right workflow. Based on certain details, like the feature or service name, it decides which specific subflow needs to run.

- The Final Subflow runs: At last, the Decision Table points to the actual subflow containing the business logic. This is the part of the process that finally makes the API call through Integration Hub, runs a script, or updates a record.
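The routing chain above can be modeled in a few lines. This Python sketch is an illustration under stated assumptions, not ServiceNow's API. In the platform, the gateway is itself a subflow, the Decision Table is configured in Workflow Studio, and the final step would typically go through Integration Hub. Here, the Decision Table is a list of rows matched on hypothetical `feature` and `service` inputs, `None` acts as a wildcard, the first matching row wins, and each "subflow" is a plain function with made-up names.

```python
from typing import Callable, Dict, List, Optional, Tuple


# Step 4: hypothetical final subflows that hold the business logic.
def reset_password(payload: dict) -> str:
    return f"password reset for {payload['user']}"


def create_kb_draft(payload: dict) -> str:
    return f"KB draft created from {payload['incident']}"


SUBFLOWS: Dict[str, Callable[[dict], str]] = {
    "reset_password": reset_password,
    "create_kb_draft": create_kb_draft,
}

# Step 3: the decision table, as (feature, service) -> subflow name.
# None matches anything; rows are checked top to bottom.
DECISION_TABLE: List[Tuple[Optional[str], Optional[str], str]] = [
    ("identity", "active_directory", "reset_password"),
    ("knowledge", None, "create_kb_draft"),
]


def decide(feature: str, service: str) -> Optional[str]:
    """Return the subflow named by the first matching row, or None."""
    for rule_feature, rule_service, subflow in DECISION_TABLE:
        if rule_feature in (None, feature) and rule_service in (None, service):
            return subflow
    return None


def integration_gateway(feature: str, service: str, payload: dict) -> str:
    """Step 2: the generic router the agent's tool calls instead of the final subflow."""
    subflow_name = decide(feature, service)
    if subflow_name is None:
        raise LookupError(f"no rule for feature={feature!r}, service={service!r}")
    return SUBFLOWS[subflow_name](payload)
```

Calling `integration_gateway("knowledge", "itsm", {"incident": "INC0010001"})` returns `"KB draft created from INC0010001"`. Even in this toy version, each hop is a lookup that can miss. A mistyped feature name or a missing row raises `LookupError` instead of running anything.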

While this structure is robust and built for enterprise control, it adds a lot of steps and complexity, especially for what should be simple tasks. Each step is another place where something could go wrong and another screen a developer has to configure and manage.

This is where a more direct approach can save a ton of headaches. A platform like eesel AI was designed to cut through this kind of complexity. It lets you define custom API actions and workflows right from a single dashboard. You can connect your agent to any internal or external tool you need without getting tangled up in gateways and decision tables. What takes four or five steps in ServiceNow can often be done in just one, letting you build and launch powerful automation much faster.

Common use cases and implementation challenges

Let's be fair: when these subflows are up and running properly, they can do some pretty cool things. But getting there can be a real struggle.

Powerful use cases

First, let's look at what’s possible. Here are a few valuable examples that show the potential:

- Automated KB creation: An agent could take the resolution notes from a complicated incident, package them up with an update set, and automatically create a draft article for your knowledge base. This keeps your documentation fresh without anyone having to do it manually.

- Proactive problem investigation: When a P1 incident comes in, an agent could trigger a subflow that runs diagnostic scripts across several CIs, pulls logs, and adds all that information to the ticket before an engineer even lays eyes on it.

- Complex HR actions: An employee could ask the AI agent to "start my parental leave request." The agent could then kick off a subflow that notifies their manager, creates the HR case, and sends them the relevant policy documents automatically.
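The HR example shows what "multi-step" means in practice. Here is a Python sketch under stated assumptions. The step names, the case ID format, and the log messages are hypothetical. Real steps would write HR case records and send notifications through the platform. The sketch treats a subflow as an ordered list of actions that share one context, so a later step (sending policy documents) can use what an earlier step produced (the case ID).

```python
from typing import Callable, List

Step = Callable[[dict], None]


def notify_manager(ctx: dict) -> None:
    # A real subflow would send a notification; here we only record it.
    ctx["log"].append(f"notified {ctx['manager']}")


def create_hr_case(ctx: dict) -> None:
    # Hypothetical case ID; a real subflow would insert a record.
    ctx["case_id"] = f"HRC-{ctx['employee']}-leave"
    ctx["log"].append(f"created {ctx['case_id']}")


def send_policy_docs(ctx: dict) -> None:
    # Depends on the case created by the previous step.
    ctx["log"].append(f"sent parental leave policy for {ctx['case_id']}")


PARENTAL_LEAVE_SUBFLOW: List[Step] = [notify_manager, create_hr_case, send_policy_docs]


def run_subflow(steps: List[Step], ctx: dict) -> dict:
    """Run each step in order against a shared context and return it."""
    ctx.setdefault("log", [])
    for step in steps:
        step(ctx)
    return ctx
```

Running `run_subflow(PARENTAL_LEAVE_SUBFLOW, {"employee": "e123", "manager": "m456"})` leaves three entries in `ctx["log"]`, one per step, in order. Because `run_subflow` doesn't care which steps it gets, the same runner can be reused for any other step list. This is the "mini-playbook" idea in miniature.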

The challenges you'll actually face

The use cases sound great, but turning them into reality often means hitting some frustrating roadblocks. If you've ever felt like you're "poking around blindly" to get things to work, you're definitely not the first.

Here's why it can be so tough:

- You're constantly jumping between tools: To build a single automated workflow, you have to navigate completely different parts of the platform. You're in the AI Agent Studio for the agent, then the Workflow Studio for the subflow, over to Integration Hub for the API connection, and then to the Decision Table for the routing logic. It feels disjointed and makes building and troubleshooting a real chore.

- This isn't a job for just anyone: Let's be honest, this is not a low-code task. Making subflows work the way you want requires a developer who is deeply familiar with ServiceNow's scripting, flow logic, and API integrations. This creates a bottleneck where IT and support teams have great ideas for automation but have to get in a long developer queue to see them come to life.

- How do you even test this thing? With so many moving pieces, it's tough to test your agent with any real confidence. There isn't a great simulation environment to see how your agent would handle real-world questions and scenarios. You basically have to build it, deploy it, and hope for the best, which is a risky way to roll out new automation to your users.

This is exactly why a self-serve platform designed for simplicity can make such a difference. eesel AI is built to be set up in minutes, not months. It brings the whole automation workflow, from connecting your knowledge sources to defining custom actions, into one intuitive place. This gives your support and IT teams the power to build the automation they need without having to wait on developer resources.

Limitations of the native ServiceNow approach

Here’s a fundamental issue with the native ServiceNow approach: it’s built to live inside its own world. That’s great for some things, but not so great for how most companies actually operate today.

The platform-centric knowledge gap

ServiceNow’s AI is incredibly capable when it's working with data and knowledge that already live inside the Now Platform. But what happens when your team's most helpful troubleshooting guides are in Confluence, your official procedures are in Google Docs, and your engineers are sharing critical fixes in a Slack channel?

Getting all of that external information to your ServiceNow AI agent isn't easy. It typically requires a separate, and often complicated, integration project for each and every source. Your agent ends up with massive blind spots, unable to access the very information it needs to solve problems correctly.

This is the core problem eesel AI was built to solve. It plugs right into over 100 of the tools your team already uses. You can instantly connect your help desk, wikis, and chat platforms to give your AI a complete picture. Instead of forcing you to move all your documentation into one system, eesel AI learns from where your team already works, ensuring your agents are always using the most current information.

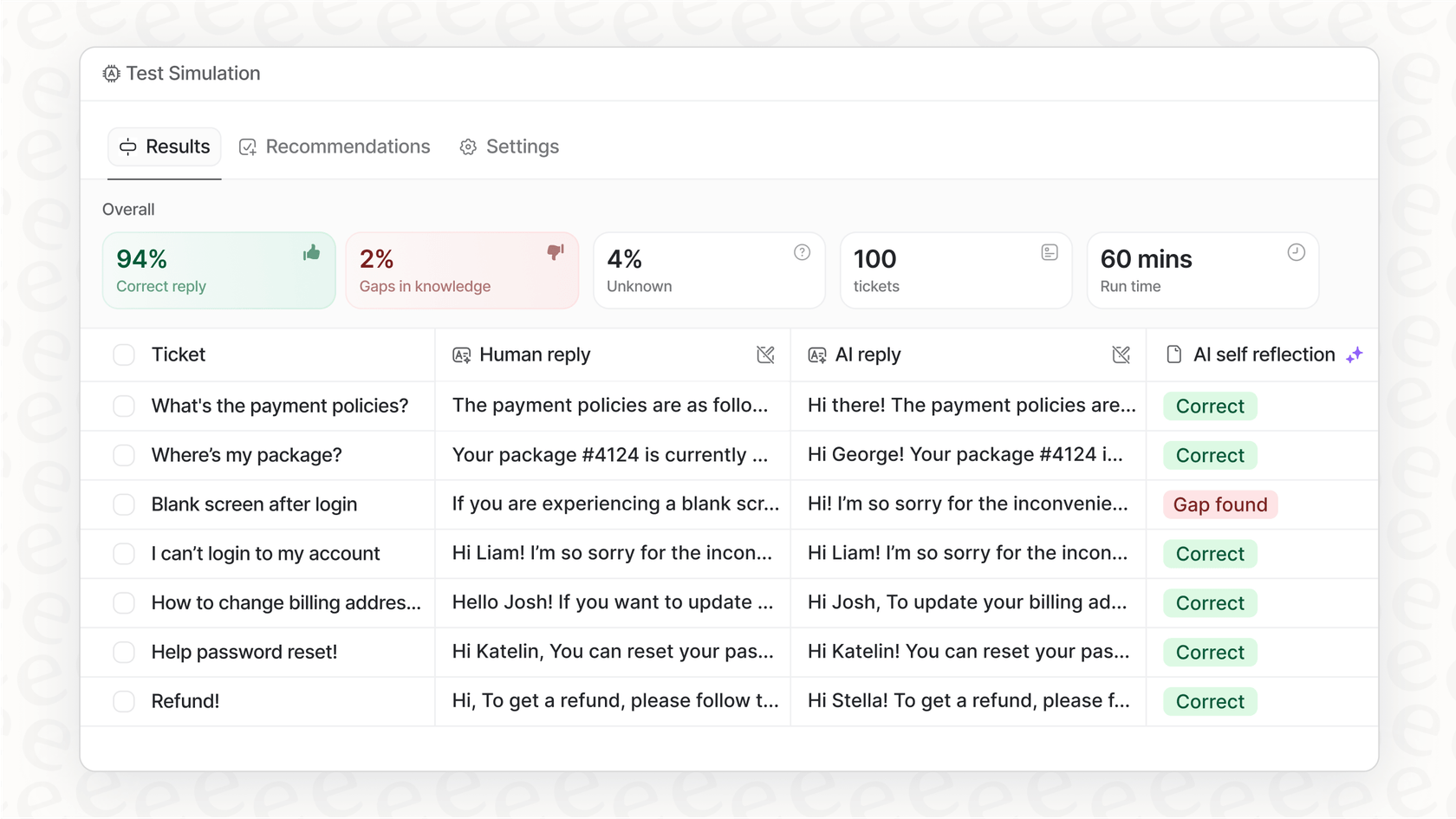

The risk of deploying without simulation

This might be the biggest headache of all: the lack of a proper test environment. You can spend weeks building an agent and a subflow, but how do you know it will actually work under pressure? How can you be sure it won't stumble on an edge case or give a wrong answer to an important question?

Without the ability to simulate how your AI agent would have handled thousands of past tickets, you're essentially deploying blind. You can't accurately predict its resolution rate, find its weak spots before your customers do, or calculate the potential return on investment. You're left to just cross your fingers.

This is where eesel AI’s powerful simulation mode really changes things. Before your AI agent ever speaks to a live user, you can run it against your historical ticket data in a safe, sandboxed environment. You'll get a detailed report showing exactly how it would have responded, which tickets it would have solved, and where you have knowledge gaps that need to be filled. This risk-free testing lets you fine-tune your agent's performance and deploy with total confidence, armed with a clear understanding of its business impact from day one.
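Stripped to its core, replaying historical tickets measures something simple. This Python sketch is a generic illustration, not eesel AI's or ServiceNow's implementation. `HistoricalTicket`, the keyword-matching `toy_agent`, and the rule that a ticket counts as "resolved" whenever the agent returns an answer are all hypothetical simplifications. The sketch replays each past ticket through a candidate agent offline, computes a resolution rate, and collects the tickets it couldn't handle as candidate knowledge gaps.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# An agent returns an answer, or None when it would escalate to a human.
Agent = Callable[[str], Optional[str]]


@dataclass
class HistoricalTicket:
    ticket_id: str
    question: str


def simulate(agent: Agent, tickets: List[HistoricalTicket]) -> dict:
    """Replay past tickets offline and summarize how the agent would have done."""
    resolved: List[str] = []
    gaps: List[str] = []
    for ticket in tickets:
        if agent(ticket.question) is not None:
            resolved.append(ticket.ticket_id)
        else:
            gaps.append(ticket.ticket_id)
    rate = len(resolved) / len(tickets) if tickets else 0.0
    return {"resolution_rate": rate, "resolved": resolved, "knowledge_gaps": gaps}


# Toy agent that only "knows" about VPN and password issues.
KNOWN_ANSWERS = {
    "vpn": "Reinstall the VPN client profile.",
    "password": "Use the self-service reset page.",
}


def toy_agent(question: str) -> Optional[str]:
    for keyword, answer in KNOWN_ANSWERS.items():
        if keyword in question.lower():
            return answer
    return None
```

With three past tickets about a dropping VPN, a forgotten password, and a jammed printer, `simulate(toy_agent, tickets)` reports a resolution rate of 2/3 and flags the printer ticket as a knowledge gap. A real evaluation would also need to judge whether each answer was actually correct, not just whether one was produced.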

Choosing the right approach for your team

So, what's the bottom line? It's really about choosing the right tool for your team's reality.

ServiceNow AI Agent Subflows are powerful, no doubt. But they ask for a lot in return: significant developer resources, time spent navigating a clunky toolchain, and a bit of a leap of faith when you go live. If you have a dedicated ServiceNow dev team and long project timelines, that might be a perfectly fine trade-off.

But if you're a team that needs to solve problems now, unify knowledge from all the tools you already use, and actually know how your AI will perform before it talks to a single user, you probably need a different approach. eesel AI provides a radically simple, self-serve platform that plugs directly into your existing ITSM workflows. It offers powerful simulation, instant knowledge integration, and a clear path to automating with confidence.

Ready to see how much simpler it can be? Go live with eesel AI in minutes, not months.

Frequently asked questions

ServiceNow AI Agent Subflows are pre-defined sequences of actions (subflows) that a ServiceNow AI agent can trigger to perform complex, multi-step tasks. They empower the AI agent to move beyond basic answers and execute automated workflows, turning it into a "doer."

The process involves the AI agent calling a generic Integration Gateway, which then consults a Decision Table to route the request to the specific subflow containing the business logic. This final subflow then executes the desired action, often via Integration Hub.

They can be used for automated knowledge base article creation from incident notes, proactive problem investigation by running diagnostics, or triggering complex HR actions like parental leave requests. These automations streamline operations and reduce manual effort.

Key challenges include constantly jumping between different tools (AI Agent Studio, Workflow Studio, Integration Hub), the requirement for deep developer expertise, and the difficulty in testing due to a lack of a comprehensive simulation environment.

Natively, ServiceNow lacks a robust simulation environment, often leading to a "build it and hope for the best" approach. This makes it challenging to predict performance or identify weak spots without live deployment.

The native approach often struggles with external knowledge, requiring separate and complex integration projects for each non-ServiceNow source. This can lead to knowledge gaps for the AI agent.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.