AI agents are all the rage, promising to do way more than just spit out canned answers. The idea is they’ll automate whole workflows, think through problems on their own, and act like another member of your team. It’s a pretty compelling picture. But if you’re the one signing the checks, you’ve probably got a question in the back of your mind: "This sounds amazing, but how do I know it will actually work before I unleash it on real customer tickets?"

That's what testing is for. But testing generative AI isn't like your standard software QA. AI is probabilistic, which is a fancy way of saying it doesn't always follow the same script. You can give it the same prompt twice and get two slightly different, but still correct, answers. So, you’re not really hunting for bugs in the code. You’re trying to build confidence that the agent will behave the way you expect it to.
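To make that concrete, here is a minimal sketch of what "different but still correct" can look like in a check. Instead of comparing a reply to one exact string, it looks for the facts a good reply must contain. The function name, the sample replies, and the term lists are all made up for illustration; none of this is part of ServiceNow's tooling.

```python
def meets_expectations(reply: str, required_terms: list[str], forbidden_terms: list[str]) -> bool:
    """Return True if the reply mentions every required term and no forbidden one."""
    text = reply.lower()
    has_required = all(term.lower() in text for term in required_terms)
    has_forbidden = any(term.lower() in text for term in forbidden_terms)
    return has_required and not has_forbidden


# Two differently worded replies to the same prompt (hypothetical examples).
reply_a = "I reset your VPN password. Please sign in again and confirm it works."
reply_b = "Your VPN password has been reset, so try signing in once more."

required = ["vpn", "password", "reset"]
forbidden = ["i cannot help"]

# An exact-match test would fail here, because the wording differs.
print(reply_a == reply_b)  # False

# A property-style check accepts both, because both carry the right facts.
results = [meets_expectations(r, required, forbidden) for r in (reply_a, reply_b)]
print(results)  # [True, True]
```

Keyword checks like this are crude, but they show the shift in mindset: you test for acceptable behavior, not for one canned string.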

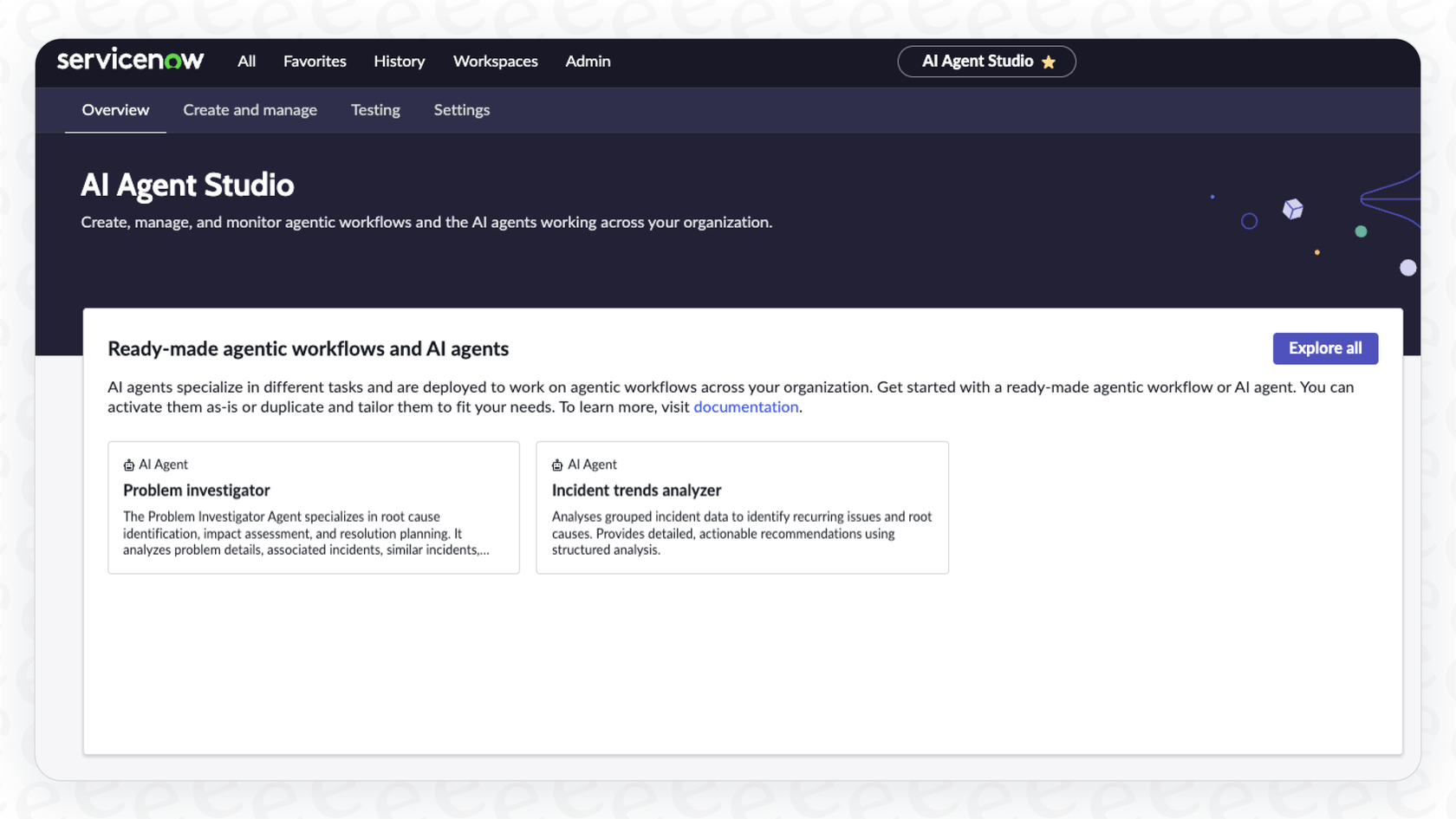

This guide will walk you through the testing features inside ServiceNow’s AI Agent Studio. It’s written for IT and support leaders who are kicking the tires on the platform. We'll break down what the studio offers, how the testing works, and some practical limits you should know about.

What is ServiceNow AI Agent Studio Testing?

First, let's get our bearings. The ServiceNow AI Agent Studio is a low-code space for building, managing, and, most importantly, testing AI agents that live and breathe inside the ServiceNow platform. Testing isn't an afterthought here; it's a key feature meant to help you prove that your agents are on the right track, following instructions, and using their tools correctly.

Based on ServiceNow’s own docs, you've got two main ways to test things in the studio:

- Unit Testing (The Testing Tab): This is more for developers. It lets you run a single agent or workflow through a specific scenario in a controlled space. It’s like a quick sanity check to debug your instructions and see if the basic logic holds up.

- Scale Testing (Evaluation Runs): This is for when you want to see how an agent handles a whole bunch of past scenarios at once. The goal is to measure performance and reliability on a bigger scale before you even think about a full rollout.

The whole point of these features is to build trust. You need to feel good that when you flip that switch, your AI agent is going to handle tasks the right way and not cause any headaches.

The challenge of ServiceNow AI Agent Studio Testing in a complex enterprise platform

Testing an AI agent isn't as straightforward as making sure 2 + 2 equals 4. It’s more like trying to figure out why the agent thought 4 was the right answer in the first place. This creates a few unique problems, especially in a huge, interconnected system like ServiceNow.

The first big one is the "black box" issue. Large language models (LLMs) are incredibly complicated, and it can be hard to see their exact thought process. ServiceNow gives you a "decision log" that tries to show the agent's line of reasoning, but making sense of those logs is a technical skill. You're not just checking the final answer; you have to confirm the logic it used to get there.

Second, an AI agent is only as smart as the information it can access. Inside ServiceNow, that means its success is tied directly to the quality of your knowledge base, incident history, and other data. If your help articles are outdated or your ticket data is a mess, the agent's performance will take a hit, both in your tests and in the real world. You know the old saying: garbage in, garbage out.

Finally, you have to deal with the reality of a platform that's always changing. A small ServiceNow upgrade or a change to a required plugin, like Now Assist or the Generative AI Controller, could quietly change how your agent behaves. This makes regression testing super important, but it can be a huge time sink to manually re-check every single workflow after every update.
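If you keep a simple record of which test scenarios passed before an upgrade, spotting regressions afterwards becomes a comparison rather than a memory exercise. The sketch below assumes you track results yourself as scenario-name-to-pass/fail pairs; the scenario names and the `find_regressions` helper are hypothetical, not something ServiceNow provides.

```python
def find_regressions(baseline: dict[str, bool], current: dict[str, bool]) -> list[str]:
    """Return scenarios that passed in the baseline but fail (or are missing) now."""
    return sorted(
        name
        for name, passed in baseline.items()
        if passed and not current.get(name, False)
    )


# Hypothetical results recorded by hand before and after a platform upgrade.
baseline = {"password_reset": True, "vpn_access": True, "laptop_request": False}
after_upgrade = {"password_reset": True, "vpn_access": False, "laptop_request": False}

regressions = find_regressions(baseline, after_upgrade)
print(regressions)  # ['vpn_access']
```

Note that `laptop_request` is not reported: it was already failing before the upgrade, so it is a known issue rather than a regression.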

For teams that don't have ServiceNow developers or AI experts on speed dial, working through these testing issues can easily tack weeks or even months onto a project.

How ServiceNow AI Agent Studio Testing works

So, how does ServiceNow actually help you with these challenges? Let's get into the specifics of the two main testing methods and what they look like in practice, plus a few of their limitations that aren't always mentioned in the brochure.

Manual validation with the ServiceNow AI Agent Studio Testing tab

Think of the testing tab as a developer's personal sandbox. It's where an admin can manually put an agent through its paces. You give it a task, something like, "Help me resolve Incident INC00123," and watch it work through the process step-by-step in a simulated environment.

When you run a test, you'll see a few things on your screen:

- A simulated chat: You can follow the back-and-forth conversation between a fake user and the AI agent.

- An execution map: This is a flowchart that shows how the AI coordinates different agents and tools to complete the task.

- A decision log: This is the nitty-gritty, step-by-step record of the agent's reasoning, showing which tools it looked at and the choices it made along the way.

This is helpful for debugging, but it has some real-world drawbacks:

- It’s all done by hand, one test at a time. Each run covers a single scenario, so it can't give you a clear picture of how the agent performs across hundreds of different ticket types.

- It only tests the agent's logic, not what starts it. For instance, the test won't tell you if the agent correctly kicks into gear when a new ticket is created. You have to test that separately somewhere else.

- You need to be a technical user. To run these tests and understand the results, you need an admin with the "sn_aia.admin" role who gets the underlying workflows and knows how to read those detailed decision logs.
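Reading a decision log mostly comes down to one question: did the agent use the right tools in a sensible order? Here is a rough sketch of that check, assuming you have copied the steps of a log into a plain list yourself. The field names (`step`, `tool`, `note`) and the tool names are invented for illustration and do not reflect ServiceNow's actual log format.

```python
def tools_called_in_order(decision_log: list[dict], expected_tools: list[str]) -> bool:
    """True if expected_tools appear in the log in that order (other steps may sit between them)."""
    # A single shared iterator means each search resumes where the previous one stopped.
    remaining = iter(step.get("tool") for step in decision_log)
    return all(tool in remaining for tool in expected_tools)


# Hypothetical decision log, transcribed by hand from a single test run.
log = [
    {"step": 1, "tool": "lookup_incident", "note": "Fetch incident details"},
    {"step": 2, "tool": None, "note": "Decide next action"},
    {"step": 3, "tool": "search_knowledge", "note": "Find a matching article"},
    {"step": 4, "tool": "update_incident", "note": "Post the resolution"},
]

print(tools_called_in_order(log, ["lookup_incident", "update_incident"]))  # True
print(tools_called_in_order(log, ["update_incident", "lookup_incident"]))  # False
```

The check is deliberately loose about extra steps, since an agent can reasonably take a detour and still reach the right outcome.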

Testing at scale with evaluation runs

When you’re ready to move past one-off tests, ServiceNow has "Evaluation Runs." This is their way of doing automated, large-scale testing. You can run an agent against a big set of historical tasks to see how well it does.

Getting it set up, however, is pretty involved:

- Define an "Evaluation Method": You first have to decide what you want to measure. Are you looking at "Task Completeness" (did it finish the job?) or "Tool Performance" (did it use the right tools correctly?).

- Create a dataset: This is the hard part. To build a dataset, you have to pull from past AI agent execution logs. This leads to a classic chicken-and-egg problem: you can't test your agent until it has already run on a bunch of tasks and created the very logs you need for testing.

- Run the evaluation: Once you finally have a dataset, you can run the test and get a report with performance numbers.

This is where the complexity of a big enterprise platform can really bog you down. Building and managing these datasets isn't a simple point-and-click task; it's a technical job that requires a lot of prep and an existing history of agent activity.
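To get a feel for what an evaluation report boils down to, here is a simplified sketch that scores a handful of past runs on the two measures named above. The `Record` structure, the sample data, and the scoring rules are assumptions made for illustration. ServiceNow's own calculations for "Task Completeness" and "Tool Performance" may work quite differently.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One past agent run (a hypothetical structure, not ServiceNow's schema)."""
    task_id: str
    completed: bool
    expected_tools: list[str]
    used_tools: list[str]


def summarize(records: list[Record]) -> dict[str, float]:
    """Share of runs that finished, and share that used exactly the expected tools."""
    total = len(records)
    if total == 0:
        return {"task_completeness": 0.0, "tool_performance": 0.0}
    completed = sum(r.completed for r in records)
    tools_ok = sum(r.used_tools == r.expected_tools for r in records)
    return {
        "task_completeness": completed / total,
        "tool_performance": tools_ok / total,
    }


# Made-up dataset of four historical runs.
records = [
    Record("T1", True, ["lookup"], ["lookup"]),
    Record("T2", True, ["lookup", "update"], ["lookup"]),
    Record("T3", False, ["lookup"], ["search"]),
    Record("T4", True, ["update"], ["update"]),
]

summary = summarize(records)
print(summary)  # {'task_completeness': 0.75, 'tool_performance': 0.5}
```

Even in this toy version, the two numbers tell different stories: run T2 finished its task while skipping a tool it should have used, which is exactly the kind of gap a single pass/fail figure would hide.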

The hidden costs and limitations of ServiceNow AI Agent Studio Testing

Beyond the technical side of testing, there are some bigger, strategic things to think about before you go all-in on the ServiceNow AI ecosystem.

The steep learning curve and the need for specialists

Building and testing agents in ServiceNow is not a job for a casual user. It demands administrators who have a deep knowledge of the platform's architecture, from managing plugins and user roles to building workflows in Flow Designer and finding your way around the Now Assist Admin Console.

Some guides might say you can build a custom agent in under an hour, but a production-ready agent that can reliably handle real-world tickets takes a lot more effort. You have to define roles, write very specific instructions, connect different tools like subflows and scripts, and then put it all through that multi-step testing process. This usually means you'll need dedicated developer time or expensive consultants.

In contrast, solutions like eesel AI are designed so anyone can use them. You can connect your helpdesk, train the AI on your knowledge sources, and set up an agent in minutes from a simple dashboard, no special ServiceNow certifications needed.

The 'all-or-nothing' platform commitment

ServiceNow's AI tools are powerful, but they work best inside the "walled garden" of the ServiceNow world. To really get your money's worth, your ITSM, CSM, and HR workflows should already be running on the platform.

For the thousands of companies using other popular helpdesks like Zendesk, Freshdesk, or Intercom, using ServiceNow's AI agents would mean a massive and expensive move to a whole new platform.

This is where a tool that works with what you already have makes a huge difference. eesel AI plugs right into your existing helpdesk with one-click integrations. There’s no need to rip out and replace the tools your team is already comfortable with.

Unclear pricing and licensing

Finally, let's talk about money. ServiceNow doesn't publish its pricing for the required licenses. To even get started with AI Agent Studio, you need a "Now Assist Pro+ or Enterprise+" license, but you have to contact their sales team to find out the cost. This lack of transparency makes it tough to budget for and figure out your potential return on investment ahead of time.

Clear pricing is just a better way to do business. With eesel AI, you know exactly what you're paying for.

| Plan | Monthly price (billed monthly) | Key features |
|---|---|---|
| Team | $299 | Up to 1,000 AI interactions/mo, train on docs/websites, AI Copilot for agents. |
| Business | $799 | Up to 3,000 AI interactions/mo, train on past tickets, AI Actions (triage/API), bulk simulation. |
| Custom | Contact Sales | Unlimited interactions, advanced actions, multi-agent orchestration, custom integrations. |

Most importantly, eesel AI has no per-resolution fees. Our plans come with predictable costs that don't punish you for having a busy support month, which is a common and frustrating surprise you might find with other vendors.
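For budgeting, it can help to turn the plan prices into a rough cost per interaction. The quick calculation below uses only the figures from the table and assumes you use the full monthly allowance; if you use fewer interactions, the effective cost per interaction is higher.

```python
# (monthly price in USD, included AI interactions per month), taken from the table above.
plans = {
    "Team": (299, 1000),
    "Business": (799, 3000),
}

# Cost per interaction, assuming the whole monthly allowance is used.
cost_per_interaction = {
    name: round(price / interactions, 3)
    for name, (price, interactions) in plans.items()
}

print(cost_per_interaction)  # {'Team': 0.299, 'Business': 0.266}
```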

Final thoughts: A simpler way to test and deploy

ServiceNow AI Agent Studio has a powerful set of tools for building and testing AI agents. But that power comes with trade-offs: a lot of complexity, a need for specialized resources, lock-in to a single platform, and unclear pricing.

At the end of the day, the goal of testing is to feel confident enough to launch a solution that actually helps your team and your customers. For many teams, a more direct and less resource-heavy path isn't just a nice-to-have; it's the only way to get started with AI.

If you’re looking for an AI platform that lets you test with confidence and go live in minutes, not months, it might be worth exploring a solution built for speed and simplicity. With powerful, easy-to-use simulation and direct integration into the tools you already use, eesel AI offers a faster way to see the real value of AI in your support workflows.

Frequently asked questions

What is the primary goal of ServiceNow AI Agent Studio Testing?

The primary goal of ServiceNow AI Agent Studio Testing is to build confidence that your AI agent will behave as expected and correctly handle tasks before it's deployed to real-world scenarios. It helps ensure the agent follows instructions and uses its tools effectively.

What testing methods does ServiceNow AI Agent Studio provide?

ServiceNow provides two main methods for ServiceNow AI Agent Studio Testing: Unit Testing via the 'Testing Tab' for single-scenario debugging, and Scale Testing using 'Evaluation Runs' to measure performance across a large set of historical tasks.

Do you need technical expertise to use ServiceNow AI Agent Studio Testing?

Yes. Using ServiceNow AI Agent Studio Testing effectively, especially understanding decision logs and setting up complex evaluation runs, typically requires an admin with the "sn_aia.admin" role and a deep understanding of the platform's architecture.

What is the main challenge with creating datasets for evaluation runs?

A significant challenge with ServiceNow AI Agent Studio Testing datasets is the "chicken-and-egg" problem: you need to pull from past AI agent execution logs to create them. This means your agent must have already processed a substantial number of tasks to generate the necessary data for testing.

How much does ServiceNow AI Agent Studio Testing cost?

ServiceNow does not publicly list pricing for the required "Now Assist Pro+ or Enterprise+" licenses needed for ServiceNow AI Agent Studio Testing. You generally need to contact their sales team directly to get specific pricing and understand the potential investment.

Can you use ServiceNow AI agents if your team runs on another helpdesk?

While ServiceNow's AI tools are powerful, they are designed to work best within the ServiceNow ecosystem. If your organization uses other helpdesks like Zendesk or Freshdesk, adopting ServiceNow AI agents would likely mean a massive and expensive migration to the ServiceNow platform.


Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.