ServiceNow's AI Agents are exciting, promising to automate all sorts of complex workflows. But what happens when they go off-script? Trying to figure out what went wrong with an autonomous system can feel like searching for a needle in a haystack. Suddenly, debugging in ServiceNow AI Agent Studio becomes a huge time sink, pulling your team away from more important work.

This guide will walk you through how to tackle debugging in AI Agent Studio, step by step. We'll cover some smart habits, essential tools, and common mistakes to help you solve problems faster. We’ll also look at a more modern way to build AI agents that helps you catch bugs before they even happen, so you can launch with a lot more confidence.

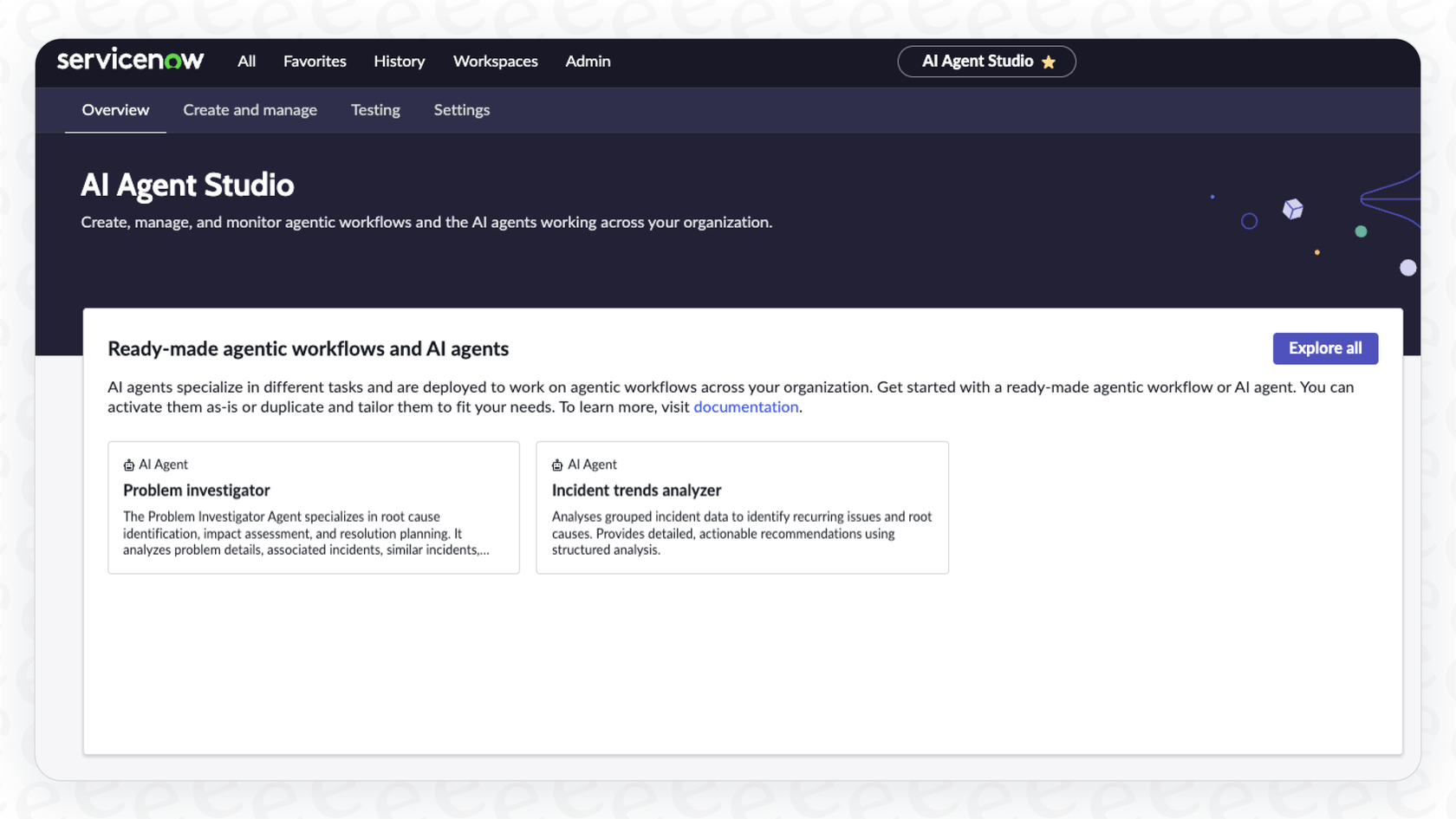

What is ServiceNow AI Agent Studio?

Before we get into fixing things, let's quickly cover what AI Agent Studio is. Think of it as ServiceNow's workshop for building and testing AI agents that can handle multi-step jobs, often called "agentic workflows."

Here’s a simple way to think about it:

- Agentic Workflow: This is the big-picture process you want to automate, like resolving an IT ticket from start to finish or onboarding a new hire. It’s the "what."

- AI Agent: This is the digital team member you build to do a specific part of that job, like gathering initial ticket details, creating a record, or asking for an approval. It's the "who."

- Tools: These are the specific actions an agent can perform, like running a script, making an API call, or kicking off a Flow Designer workflow. This is the "how."
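
To make the what/who/how relationship concrete, here is a minimal conceptual sketch in Python. It is not ServiceNow's actual data model: the class names, the `kind` labels, and the sample agents are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    """The "how": one concrete action, such as a script or an API call."""
    name: str
    kind: str  # e.g. "script", "api_call", "flow" (labels invented for this sketch)


@dataclass
class Agent:
    """The "who": handles one part of the job using its tools."""
    name: str
    tools: list[Tool] = field(default_factory=list)


@dataclass
class AgenticWorkflow:
    """The "what": the end-to-end process, made up of agents."""
    name: str
    agents: list[Agent] = field(default_factory=list)

    def tool_names(self) -> list[str]:
        # Flatten every tool the workflow can reach, in agent order.
        return [tool.name for agent in self.agents for tool in agent.tools]


# Hypothetical example workflow, loosely based on the IT ticket scenario.
workflow = AgenticWorkflow(
    name="Resolve IT ticket",
    agents=[
        Agent("Intake", [Tool("Gather ticket details", "script")]),
        Agent("Approvals", [Tool("Request approval", "flow")]),
    ],
)
print(workflow.tool_names())
```

Seeing the pieces nested this way hints at why debugging gets tricky: a failure in one tool can surface as a problem in the agent or in the workflow as a whole.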

The Studio gives you a dashboard to manage all these pieces, but with so many interconnected parts, it’s easy to see how things can get tangled.

Why ServiceNow AI Agent Studio Debugging can be such a headache

While AI Agent Studio is powerful, it definitely adds new layers of complexity. If you've ever found yourself completely stuck trying to trace an issue, you're not the only one. The frustration usually boils down to a few things:

- A steep learning curve: There are just so many moving parts, and without a clear map, it’s easy to get lost.

- Jumping between tools and logs: Proper ServiceNow AI Agent Studio Debugging often means you have one tab open for the AI Agent Studio, another for the Script Debugger, and you're digging through session logs on the side. It feels like you’re trying to solve a puzzle without the box, piecing together clues from a dozen different places.

- Documentation that’s hard to use: The official ServiceNow documentation is thorough, but it can be very dense and developer-heavy. Sometimes it's even locked behind a login, making it tough for a business user or new admin to find a quick, straightforward answer.

All this adds up to more time spent fixing things and a slower journey to reliable automation.

A straightforward guide to ServiceNow AI Agent Studio Debugging

Even though it can be tricky, ServiceNow does give you several ways to troubleshoot. Here’s a practical approach to finding and fixing common problems, starting with the basics and moving to the more advanced stuff.

Good habits to avoid ServiceNow AI Agent Studio Debugging

The easiest bug to fix is the one that never happens. Before you even start building, it's a good idea to follow these simple practices:

- Map it out on paper first: Seriously, grab a whiteboard or a notebook and chart out your entire workflow. This helps you clarify the steps, spot dependencies, and get everyone on the same page before a single piece of the agent is built.

- Duplicate before you debug: This is a big one. Never, ever edit a live agentic workflow. Always make a copy to work on. Deactivate the original, publish your changes to the duplicate, and test it there.

- Keep it simple at first: Start with a small, manageable task. If you limit the number of agents and tools in your first workflow, it’ll be much easier to see what’s going on. You can always add more complexity later.

Using built-in tools for ServiceNow AI Agent Studio Debugging

AI Agent Studio has some handy built-in features that should be your first port of call when something seems off.

- AI Agent Studio Testing Page: This lets you run an agent through its paces and watch the conversation, the AI's "thought process," and any errors in real time. It's great for figuring out if a single agent is misbehaving.

- Chat Test Window: When you're testing a topic, the chat window has a few debug tabs like Variables, Context, and Logs. These are super helpful for spotting things like a variable holding the wrong value or an unexpected context switch.

- AI Agent Analytics Dashboard: This isn't a direct debugging tool, but it helps you see the bigger picture. If you notice a sudden drop in successful tasks or a spike in how long they take, it’s a good signal that something is wrong and needs a closer look.
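
If you export task outcomes for your own analysis, the "sudden drop in successful tasks" signal can be checked with a few lines of Python. This is a rough sketch, not a dashboard feature: the `(day, succeeded)` record shape, the 0.2 threshold, and the sample data are all assumptions for illustration.

```python
from collections import defaultdict


def daily_success_rates(tasks):
    """Return {day: fraction of tasks that succeeded} for (day, succeeded) pairs."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for day, succeeded in tasks:
        totals[day] += 1
        if succeeded:
            successes[day] += 1
    return {day: successes[day] / totals[day] for day in totals}


def flag_drops(rates, threshold=0.2):
    """Return days whose success rate fell by more than `threshold` versus the previous day."""
    days = sorted(rates)  # ISO date strings sort chronologically
    return [
        today
        for yesterday, today in zip(days, days[1:])
        if rates[yesterday] - rates[today] > threshold
    ]


# Made-up sample data: two steady days, then a bad one.
sample = [
    ("2024-05-01", True), ("2024-05-01", True), ("2024-05-01", True), ("2024-05-01", False),
    ("2024-05-02", True), ("2024-05-02", True), ("2024-05-02", True), ("2024-05-02", False),
    ("2024-05-03", True), ("2024-05-03", False), ("2024-05-03", False), ("2024-05-03", False),
]
rates = daily_success_rates(sample)
print(flag_drops(rates))
```

A day-over-day comparison is the simplest possible choice here. With noisy real data, comparing against a rolling average would likely produce fewer false alarms.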

Key logs and tables for advanced ServiceNow AI Agent Studio Debugging

When the built-in tools aren't giving you the answer, it's time to roll up your sleeves and get into the platform's logs. This is a bit more technical, but it gives you the most detailed view of what’s happening behind the scenes.

For serious ServiceNow AI Agent Studio Debugging, these are the tables you'll want to get familiar with:

| Table Name | What It's For |
|---|---|
| "sys_gen_ai_log_metadata" | This is the main log for everything generative AI. It's the best place to start your investigation. |
| "sn_aia_execution_plan" | Shows the plan of which agents will run and in what order. Super helpful for orchestration issues. |
| "sn_aia_execution_task" | Tracks the status and result of each individual AI agent task in a workflow. |
| "sn_aia_tools_execution" | Gives you logs specifically for the tools your agent uses, like flows or scripts, to see if they're failing. |
| "sys_cs_message" | Contains the raw chat messages between the user and the agent, perfect for tracing the conversation. |
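
If you would rather pull recent rows from these tables than click through list views, one option is the ServiceNow REST Table API. The sketch below only builds the request URLs and sends nothing. It assumes your account has the roles and ACLs needed to read these tables over REST, which may not be the case on your instance. The instance name `example` and the helper `recent_records_url` are placeholders invented for this example.

```python
from urllib.parse import urlencode

# Table names come from the table above, in a suggested investigation order.
DEBUG_TABLES = [
    "sys_gen_ai_log_metadata",
    "sn_aia_execution_plan",
    "sn_aia_execution_task",
    "sn_aia_tools_execution",
    "sys_cs_message",
]


def recent_records_url(instance, table, limit=20):
    """Build a Table API URL for the newest `limit` rows of `table`."""
    params = {
        # sys_created_on is a standard system field; sort newest first.
        "sysparm_query": "ORDERBYDESCsys_created_on",
        "sysparm_limit": str(limit),
    }
    return f"https://{instance}.service-now.com/api/now/table/{table}?{urlencode(params)}"


# "example" is a placeholder instance name, not a real one.
for table in DEBUG_TABLES:
    print(recent_records_url("example", table))
```

The query sorts on `sys_created_on` only, because the other columns in these tables are not described here. Check each table's dictionary on your instance before filtering on anything more specific.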

Common ServiceNow AI Agent Studio Debugging problems and fixes

A lot of headaches come from just a few common setup issues. Here’s a quick-reference table to help you out.

| Issue | Possible Cause & Solution |
|---|---|
| "No agents are available" error | This is often due to wrong agent proficiency settings, disabled AI Search, or inactive agents/tools. Go back and double-check all your configurations. |
| Agent output isn't visible to the user | Almost always a permissions problem. Make sure the user's role has read access to the tables and fields the agent is trying to show. |
| Agentic workflow trigger not firing | Your trigger conditions might be too vague or conflicting with something else. Try making them more specific and check that the "run-as" user has the right permissions. |
| Inconsistent agent behavior | This is just the nature of generative AI sometimes. You can get more consistent results by writing very specific prompts and instructions. Clearly define the agent's role and the exact steps you want it to take. |
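
Some teams like to keep a checklist like this next to their runbooks. As a small sketch, the table above can be encoded as a lookup that returns the checks to run for a reported symptom. The symptom keys and the `checks_for` helper are invented for this example and simply paraphrase the table.

```python
# Symptom -> checks, paraphrased from the table above.
TRIAGE = {
    "no agents are available": [
        "Review agent proficiency settings",
        "Confirm AI Search is enabled",
        "Confirm agents and tools are active",
    ],
    "output not visible": [
        "Check the user's role has read access to the tables and fields shown",
    ],
    "trigger not firing": [
        "Make trigger conditions more specific",
        "Check the run-as user's permissions",
    ],
    "inconsistent behavior": [
        "Tighten prompts and instructions",
        "Define the agent's role and exact steps",
    ],
}


def checks_for(symptom):
    """Return the checks whose key appears in the symptom text (case-insensitive)."""
    text = symptom.lower()
    return [check for key, checks in TRIAGE.items() if key in text for check in checks]


print(checks_for('Error: "No agents are available"'))
```

Substring matching is deliberately naive. It only works when the report contains one of the exact key phrases, so treat it as a starting point rather than a diagnosis.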

A simpler alternative to ServiceNow AI Agent Studio Debugging

While ServiceNow gives you a deep set of tools, the debugging process is often reactive and complicated. What if you could spend less time reacting to problems and more time preventing them? This is where a more modern approach comes in, especially for teams that need to move fast without a dedicated developer.

Go from setup to live in minutes and avoid complex debugging

Instead of wrestling with a complex setup of plugins and configurations, you could use a platform designed for simplicity. For example, eesel.ai is built to be self-serve, with one-click integrations for helpdesks like Zendesk and even ServiceNow. This means you can connect an AI agent to your existing tools in minutes, not months, without a massive implementation project. You can build, test, and launch an agent on your own time, no sales calls required.

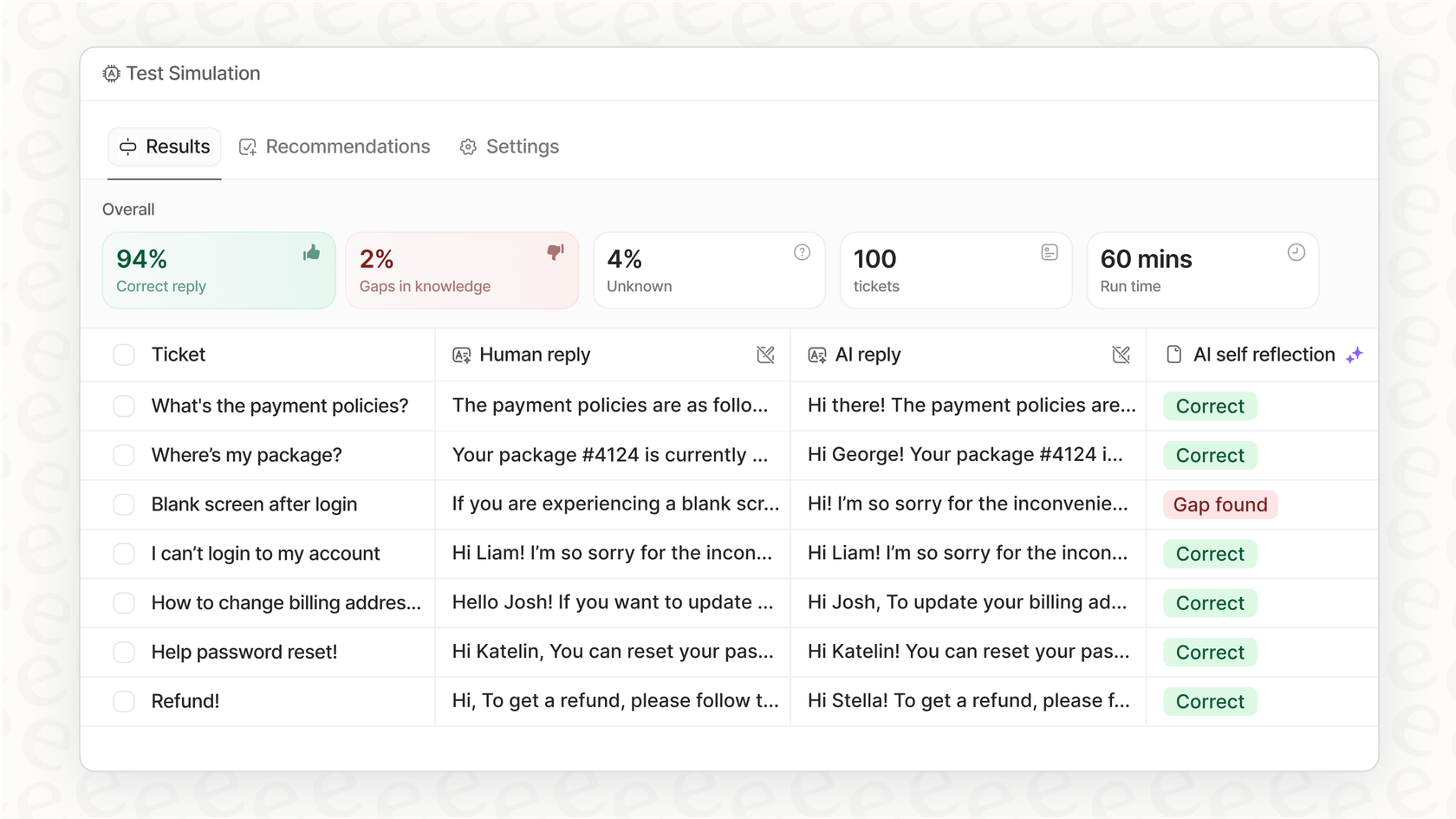

Proactive testing to reduce ServiceNow AI Agent Studio Debugging

The biggest challenge with ServiceNow AI Agent Studio Debugging is that you usually find problems after they’ve already happened. The best way to debug is to stop bugs from ever getting to your users in the first place.

With eesel AI's simulation mode, you can test your AI agent on thousands of your company's real historical tickets before it ever talks to a live user. The simulation shows you exactly how the AI would have responded and what actions it would have taken, and it even gives you an accurate forecast of your automation rate. This lets you tweak your agent's behavior in a safe environment, which massively cuts down on the need for post-launch fire drills. You can see what kinds of tickets are easy to automate, adjust the AI's prompts with confidence, and roll it out when you're ready.

A single dashboard vs. complex debugging

Instead of digging through multiple ServiceNow tables like "sys_gen_ai_log_metadata" and "sn_aia_execution_plan", a unified dashboard puts everything you need in one spot. Tools like eesel.ai provide reports that tell you more than just what happened. They show you where the gaps are in your knowledge base, point out common topics the AI struggled with, and even suggest new automation opportunities. It turns analytics from a chore into a clear roadmap for improvement.

Focus on automation, not just ServiceNow AI Agent Studio Debugging

ServiceNow AI Agent Studio offers a powerful, developer-focused toolkit for troubleshooting agentic workflows. But getting good at debugging with it takes a lot of platform knowledge and time spent digging through logs and tables.

For most teams, the real goal is to get reliable automation up and running quickly. By using a platform that focuses on proactive testing and simple management, you can switch from reacting to problems to preventing them. Tools like eesel AI plug right into your existing systems (including ServiceNow) and give you powerful simulation and clear reporting. You end up spending less time fixing things and more time delivering value.

Ready to build, test, and deploy AI agents without all the complexity? Try eesel AI for free and see how easy automating your support can be.

Frequently asked questions

Debugging in AI Agent Studio can be complex due to its steep learning curve, the necessity of jumping between multiple tools and logs, and sometimes dense documentation. This combination makes tracing issues difficult and time-consuming for many users.

Your first stop should be the AI Agent Studio Testing Page, which allows real-time observation of an agent's thought process and errors. Additionally, the Chat Test Window's 'Variables', 'Context', and 'Logs' tabs are invaluable for identifying immediate issues.

For deeper investigations, focus on tables like "sys_gen_ai_log_metadata" for general AI logs, "sn_aia_execution_plan" for orchestration, and "sn_aia_tools_execution" for tool-specific failures. These provide granular detail on agent operations and can pinpoint underlying problems.

To minimize debugging, always map out your entire workflow on paper first to clarify steps and dependencies. It's also critical to duplicate agents before making changes and to start with simple, manageable tasks to catch potential issues early.

A common issue is the "No agents are available" error, often caused by incorrect agent proficiency settings, disabled AI Search, or inactive agents/tools. The solution involves meticulously double-checking all agent, tool, and AI Search configurations to ensure they are correctly enabled and configured.

Platforms like eesel.ai offer a simpler alternative, with self-serve setup and a proactive simulation mode. You can test agents on thousands of historical tickets before deployment, which significantly reduces post-launch debugging effort.

Debugging efficiently in ServiceNow AI Agent Studio, or avoiding the need for it altogether, directly speeds up the deployment of reliable automation. With less time spent on reactive fixes and troubleshooting, teams can launch agents faster and focus on delivering value rather than resolving errors.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.