AI agents are popping up everywhere in big companies, taking on jobs from IT support to customer service right inside platforms like ServiceNow. They promise to make things way more efficient, but just letting them run wild without any rules is asking for trouble. Without proper oversight, you could end up with a compliance mess, confusing experiences for users, and costs that get out of hand.

This is where governance comes in. It’s basically the rulebook that keeps your AI on a leash. This guide will walk you through the ServiceNow AI Agent Governance framework, dig into some of the real-world headaches it can cause, and show you a more flexible way to manage AI agents, especially if you need to move fast without getting tangled up in a huge, complicated system.

What is ServiceNow AI Agent Governance?

ServiceNow AI Agent Governance is the company's official playbook for managing AI agents on its platform. It’s a mix of policies, tools, and workflows that aim to make sure AI is used responsibly, transparently, and in a way that lines up with company rules. The whole point is to give large organizations the confidence to use AI across different departments without losing control.

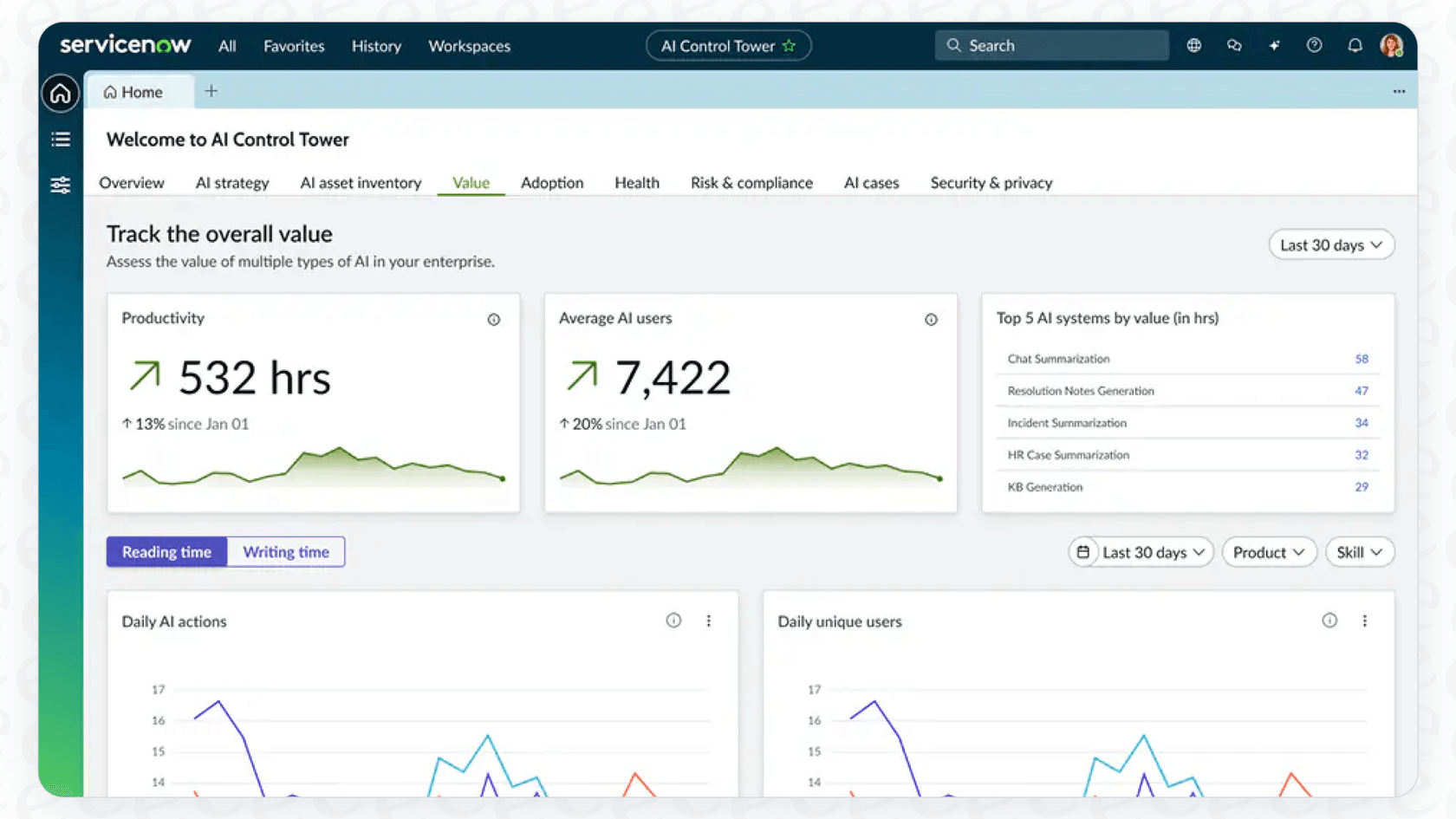

The main component of this strategy is the ServiceNow AI Control Tower. Think of it as the command center for every AI project you have going on. It links your company's big-picture goals with the day-to-day work of your AI, making sure everything is secure, compliant, and actually helping the business. The goal is to let big companies use AI on a massive scale while keeping the risks as low as possible. It’s built for enterprises with complex needs that have to manage AI across many different teams.

The core pillars of the ServiceNow AI Agent Governance framework

ServiceNow’s way of thinking about governance is built on a few key ideas, all designed to give big companies tight control over their AI inside the platform's ecosystem.

1. A central hub for everything AI

The AI Control Tower is the brain of the whole operation. It's the place you go to see how your AI is doing, manage the entire life of your AI agents, and check if your AI projects are actually paying off. It’s meant to be a single place for the truth, giving you a clear picture of not just the AI agents built inside ServiceNow, but also any third-party agents you might be using. This creates one dashboard for all your AI stuff, which is a pretty big deal for large organizations trying to keep everything straight.

2. Built-in ethical and responsible AI rules

ServiceNow talks a lot about what it calls "responsible AI". This just means their AI is designed to be human-focused, fair, and transparent. In the real world, this looks like built-in guardrails that can spot and block things like offensive language or prompt injection attacks, where someone tries to fool the AI into doing something it’s not supposed to. These features are there to make sure the AI agents act in a way that fits your company’s values, but as we’ll get into, they can also make things a bit inflexible.

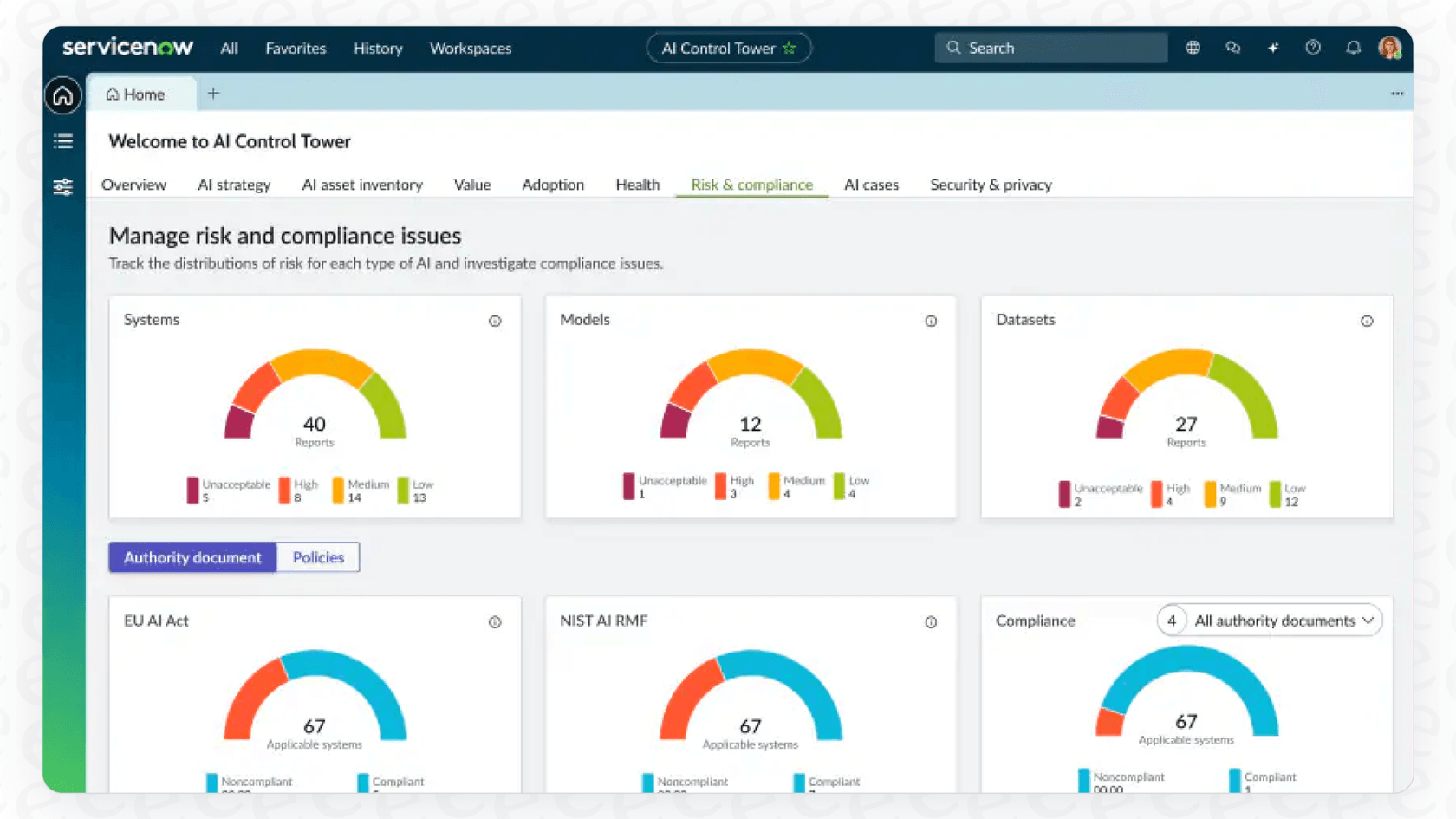

3. Integrated risk and compliance tools

One of the main draws of the ServiceNow ecosystem is how all its pieces fit together. Their AI governance is no different and is closely tied to their Governance, Risk, and Compliance (GRC) products. This link allows for automated compliance checks, ongoing risk monitoring, and easier audit reporting. For businesses that have to follow strict regulations like GDPR, this means AI activities can be checked continuously against legal and internal policies, which helps reduce the risk of some pretty hefty fines.

Practical challenges of ServiceNow AI Agent Governance

While a big, all-in-one governance framework sounds great in theory, it often comes with some serious baggage in practice. For teams that don't have the time or money for a massive, company-wide project, this kind of system can feel more like a roadblock than a help.

1. It takes a long, long time to set up

Let’s be honest, enterprise software is never plug-and-play, and ServiceNow is no exception. Setting up its AI governance framework is a huge project that involves a whole crew: IT, legal, compliance, and usually a team of pricey consultants. It’s a process that can drag on for months, which is basically a lifetime in the fast-paced world of AI. For support teams that need to be nimble, try out new ideas, and show results quickly, this slow, deliberate process can completely stall their progress and shut down innovation.

2. The framework can be too rigid

A "one-size-fits-all" approach to governance rarely fits anyone just right. The strict rules you might need for a critical IT system that handles sensitive data are probably way too much for a simple customer service bot that just answers questions about shipping. This is where rigid frameworks start to cause problems. They often box you into a single set of rules for every situation, making it hard to just use common sense. It also makes it tough to roll out AI in small, bite-sized pieces. Many enterprise platforms want an all-or-nothing commitment, which means you can’t easily dip your toes in the water, learn from a small pilot, and grow from there.

3. There’s no truly risk-free way to test

Most enterprise platforms offer some kind of testing environment, but they often don't give you what you really need for AI. What you actually need is a way to see how your AI will behave with your real data before it ever talks to a customer. The only way to launch with any real confidence is to run a simulation on thousands of your past support tickets to get a solid forecast of resolution rates, accuracy, and how much you’ll save. Without that, you're pretty much flying blind, and every new AI launch becomes a high-stakes bet.
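To make the idea concrete, here is a minimal sketch of what replaying past tickets through an agent could look like. Everything in it is an assumption made for illustration: the `PastTicket` and `SimulationReport` types, the `simulate` function, the toy agent, and the exact-match comparison are hypothetical and not taken from ServiceNow or any other product. Resolution rate is defined here as matched answers over all tickets, and accuracy as matched answers over the tickets the agent chose to answer.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class PastTicket:
    subject: str
    human_resolution: str  # what the human agent actually did (hypothetical field)


@dataclass
class SimulationReport:
    total: int      # all historical tickets replayed
    attempted: int  # tickets the agent chose to answer
    matched: int    # answers that agreed with the human resolution

    @property
    def resolution_rate(self) -> float:
        # Share of ALL tickets the agent would have resolved correctly.
        return self.matched / self.total if self.total else 0.0

    @property
    def accuracy(self) -> float:
        # Share of ATTEMPTED tickets where the agent was right.
        return self.matched / self.attempted if self.attempted else 0.0


def simulate(
    tickets: List[PastTicket],
    agent: Callable[[str], Optional[str]],
    is_match: Callable[[str, str], bool],
) -> SimulationReport:
    """Replay past tickets offline; nothing is ever sent to a customer."""
    attempted = matched = 0
    for ticket in tickets:
        answer = agent(ticket.subject)
        if answer is None:  # agent declined, so a human would handle it
            continue
        attempted += 1
        if is_match(answer, ticket.human_resolution):
            matched += 1
    return SimulationReport(len(tickets), attempted, matched)


def toy_agent(subject: str) -> Optional[str]:
    # Stand-in for a real agent: only handles anything mentioning passwords.
    if "password" in subject.lower():
        return "Send password reset link"
    return None


history = [
    PastTicket("Password reset please", "Send password reset link"),
    PastTicket("Forgot my password", "Send password reset link"),
    PastTicket("Refund for order", "Issue refund"),
    PastTicket("Password locked after update", "Escalate to IT security"),
]

report = simulate(history, toy_agent, lambda a, b: a == b)
print(f"resolution rate: {report.resolution_rate:.0%}, accuracy: {report.accuracy:.0%}")
```

In a real setup the exact-match check would probably be replaced by a human review or a softer similarity score, and the history would be thousands of tickets rather than four. The point of the sketch is just that the forecast comes from your own data before anything goes live.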

A simpler, more flexible approach to ServiceNow AI Agent Governance

What if governance wasn't about locking things down, but about giving teams the confidence to move forward? There's a different way to think about AI oversight, one where you can start small, keep total control, and build trust in your AI before you decide to scale it up.

This modern approach is built on a few simple principles:

-

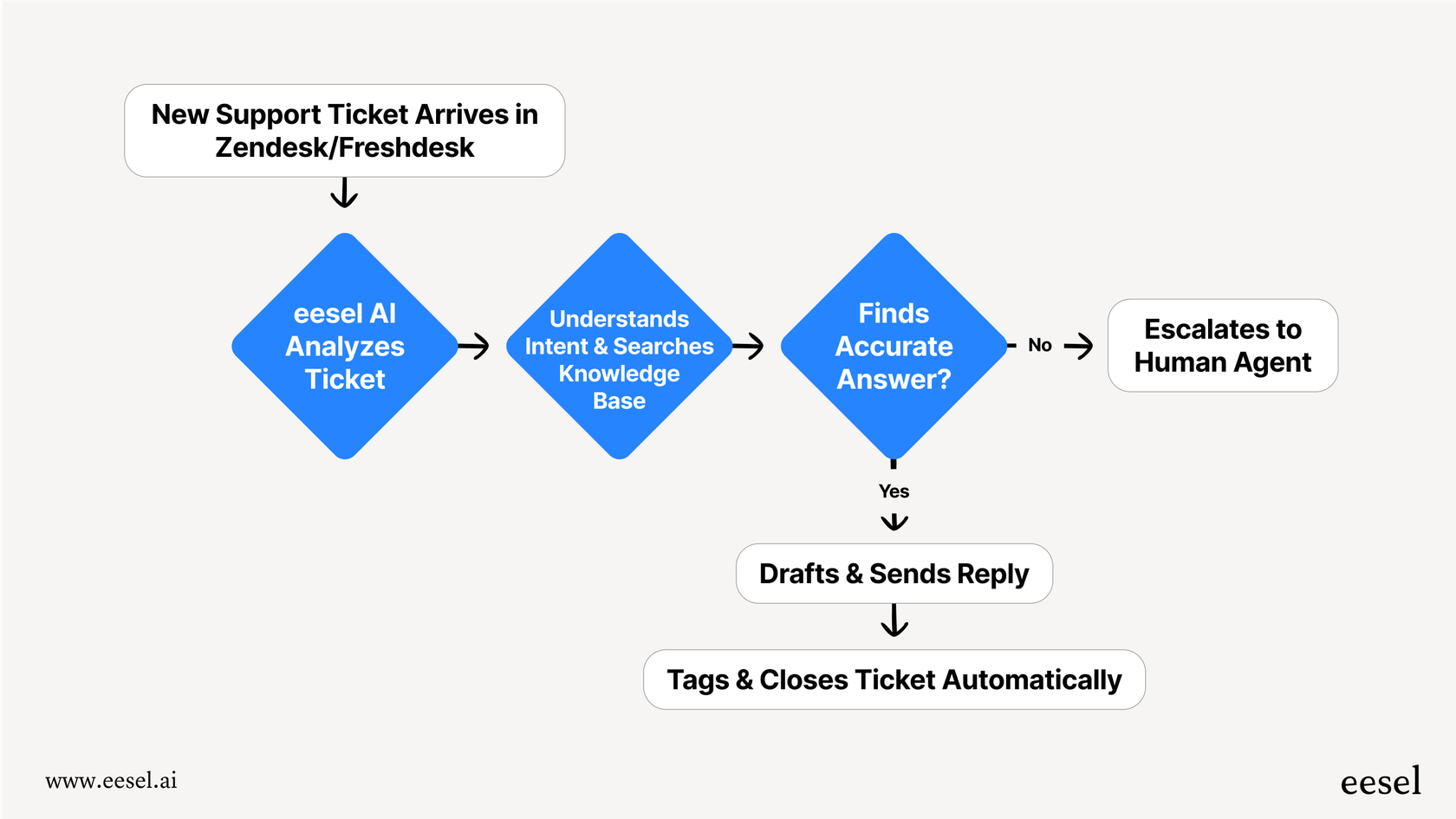

Get started in minutes, not months. You shouldn't have to sit through endless sales calls and mandatory demos just to try something out. Modern tools are built to be self-serve. For instance, eesel AI lets you connect your helpdesk with a single click and get a basic AI agent running in just a few minutes. It lets you skip the months-long buying and setup processes that are so common with enterprise software.

- Control you can fine-tune. You know your support operations better than anyone, so you should be the one calling the shots on what your AI does. Instead of being stuck with a rigid, company-wide set of rules, a tool like eesel AI gives you a simple workflow engine to define exactly which tickets the AI should touch. You can start with something easy, like password resets, and have the AI pass everything else to a human. This gives you complete control over the agent's scope from day one.

- Risk-free simulation and a gradual rollout. This is the part that really gives you peace of mind. Imagine being able to test your AI agent on thousands of your company's past tickets in a safe, contained environment. The simulation mode in eesel AI does just that, giving you a real forecast of resolution rates and performance before you go live. You can see how it’ll do, adjust its responses, and then roll it out to handle just one specific type of ticket. As you get more comfortable, you can gradually give it more to do.
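The "start small, then widen the scope" idea from the list above can be sketched in a few lines. This is only an illustration of the principle, not eesel AI's actual workflow engine or API: the `AgentScope` class, its `route` and `expand` methods, and the category names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class AgentScope:
    # Ticket categories the AI agent is allowed to handle (hypothetical names).
    allowed_categories: Set[str] = field(default_factory=set)

    def route(self, category: str) -> str:
        # Anything outside the allowlist goes to a human by default.
        return "ai_agent" if category in self.allowed_categories else "human"

    def expand(self, category: str) -> None:
        # Gradual rollout: add one category at a time as trust grows.
        self.allowed_categories.add(category)


incoming = ("password_reset", "billing", "shipping_status")

# Day one: the agent only touches password resets.
scope = AgentScope({"password_reset"})
day_one = [scope.route(c) for c in incoming]

# Later, after the results look good, give it one more ticket type.
scope.expand("shipping_status")
later = [scope.route(c) for c in incoming]

print(day_one)
print(later)
```

The design choice worth noticing is the default: a ticket the rules don't mention goes to a human, so widening the agent's scope is always an explicit decision.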

ServiceNow AI Agent Governance: Comparing pricing and accessibility

The way a company talks about its price tag tells you a lot about who it's for. Enterprise platforms often keep their pricing a secret, which can be a huge barrier for teams that just want to get going.

ServiceNow’s pricing is classic enterprise. It's not listed publicly, and getting a quote means going through a long sales process. This usually involves a big upfront cost and long-term contracts, making it tough for individual teams to experiment or run a small pilot.

On the other hand, eesel AI offers a transparent and predictable pricing model. There are no hidden per-resolution fees that can lead to surprise bills at the end of the month, and the plans are easy to understand. You can even start with a monthly plan and cancel anytime, which takes away the risk of getting locked into a long-term contract.

| Plan | Monthly Price (Billed Monthly) | Key Features |
|---|---|---|
| Team | $299 | Train on docs, Slack integration, basic reporting. |
| Business | $799 | Train on past tickets, MS Teams, AI Actions, bulk simulation. |
| Custom | Contact Sales | Advanced multi-agent orchestration and integrations. |

Choose governance that fits your team's speed

ServiceNow offers a powerful, detailed governance framework that’s built for huge, top-down AI projects in large corporations. If you have the time, budget, and organizational buy-in to go through that process, it can be a very solid solution.

But for most teams today, speed and flexibility are the name of the game. They need to experiment, learn, and deliver value without getting stuck in months of red tape. For them, a modern approach to AI governance is a much better fit. It’s about having the control to start small, the confidence to test safely, and the freedom to scale at your own pace.

If you're looking for a simpler, more controlled way to bring AI into your support workflows, it might be time to try a different approach. Start your free trial of eesel AI and see your automation potential in minutes.

Frequently asked questions

ServiceNow AI Agent Governance is the company's official framework of policies, tools, and workflows designed to manage AI agents responsibly on its platform. It's crucial for large organizations to ensure compliance, maintain control, and use AI transparently across departments, minimizing risks.

The framework is built on three core pillars: a central AI Control Tower for oversight, built-in ethical and responsible AI rules, and integrated risk and compliance tools. These components aim to provide a unified view and control over all AI activities within the ServiceNow ecosystem.

Practical challenges include the lengthy setup time, often involving months of work with multiple teams and consultants, which can stall innovation. The framework can also be too rigid, applying a one-size-fits-all approach that doesn't suit all AI use cases or agile teams.

The setup for ServiceNow AI Agent Governance typically takes months, requiring significant internal resources and often external consultants. In contrast, modern, flexible tools like eesel AI can be connected and have a basic agent running in minutes, bypassing lengthy sales and implementation cycles.

Yes, the framework can be quite rigid due to its "one-size-fits-all" approach, which might be overkill for simpler AI applications or pilot projects. This rigidity can hinder the ability to experiment, learn, and roll out AI in small, incremental steps.

Absolutely. More flexible approaches prioritize quick setup, fine-tuned control over agent scope, and risk-free simulation on past data. Tools like eesel AI offer these capabilities, allowing teams to start small, build confidence, and scale AI gradually.

Large enterprises with complex, company-wide AI initiatives, significant compliance requirements, and the resources (time, budget, personnel) for a substantial implementation project are best suited. It's ideal when a top-down, integrated approach across many departments is essential.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.