Everyone’s buzzing about generative AI, but let's be honest, there's a big elephant in the room: trust. Especially when you're talking about customer data. How do you use these powerful new tools without putting privacy on the line? It's a valid concern, and one that customers share. In fact, nearly three-quarters of them are worried about companies using AI in unethical ways.

The Salesforce Einstein Trust Layer is Salesforce’s attempt to solve this problem. It’s a framework built to make their native AI features safe enough for big companies, wrapping them in a protective layer of security and data controls.

But what does it actually do day-to-day? And more importantly, is it the right choice for your entire support team? This guide will walk you through the Trust Layer, what it is, its main features, its real-world limitations, and its confusing pricing. By the end, you'll have a clear picture to help you decide if it’s the solution you need, or if you'd be better off with a more flexible tool.

What is the Salesforce Einstein Trust Layer?

Put simply, the Salesforce Einstein Trust Layer is a security blanket for all the AI tools built into the Salesforce platform. It’s not a separate add-on you buy; it’s the background architecture that’s always on when you use tools like Einstein Copilot or Prompt Builder.

Its main job is to act as a secure go-between. It sits between the prompt you enter and the Large Language Model (LLM) that comes up with a response. In that position, it intercepts your data, runs a bunch of safety checks, and makes sure your sensitive company and customer info never leaves the Salesforce environment. Think of it as a bouncer for your AI. It stands between your request and the AI model, checking your data's ID and making sure nothing sensitive gets past the velvet rope.

graph TD A[User enters a prompt in Salesforce] --> B{Einstein Trust Layer}; B --> C[1. Secure Data Retrieval from CRM]; C --> D[2. Data Masking for PII]; D --> E[3. Prompt Defense & Toxicity Check]; E --> F[LLM Partner e.g., OpenAI]; F --> G{Einstein Trust Layer}; G --> H[4. Toxicity Check on Response]; H --> I[5. Un-masking Data]; I --> J[User receives a secure, relevant response]; style F fill:#ADD8E6,stroke:#333,stroke-width:2px style B fill:#90EE90,stroke:#333,stroke-width:2px style G fill:#90EE90,stroke:#333,stroke-width:2px

Key features of the Salesforce Einstein Trust Layer

The Trust Layer isn’t a single thing; it’s a handful of components working together to keep your AI interactions secure. To really get what it does, you have to look at each piece. Here’s a breakdown of its most important features.

Secure data retrieval and dynamic grounding

One of the biggest letdowns of using a general-purpose AI like ChatGPT is that it knows absolutely nothing about your business. Ask it to write a customer email, and you'll get a template that sounds like it was written by a robot. This is where dynamic grounding helps. The Trust Layer feeds the AI real-time context from your CRM data, which makes its answers much more relevant and personalized.

It manages this with secure data retrieval. The Trust Layer double-checks that the AI only pulls from data that the user has permission to see. It automatically respects all your existing Salesforce user permissions and field-level security, so an agent can't accidentally use information from a record they shouldn't be able to access.

Data masking for private information

This one is a huge deal. Before any prompt gets sent to an outside LLM, the Trust Layer’s data masking feature automatically finds and hides sensitive information. It scans for details like names, email addresses, phone numbers, and credit card information and swaps them out with generic placeholders.

It uses two ways to do this: pattern-based detection (for things in a standard format, like a phone number) and field-based detection (which uses the data classifications you’ve already set up in Salesforce). After the LLM sends its response back, the Trust Layer un-masks the data, putting the real information back in. The user sees the correct details, but the LLM never saw the private stuff.

Zero-data retention policy

A common worry with third-party AI models is what happens to your data after you’ve used them. Salesforce tackles this with its zero-data retention policy. They have deals with their LLM partners, like OpenAI, to guarantee that your data is never stored or used to train their models.

This means that after the AI gives you a response, your prompt and all the data in it are wiped from the LLM’s servers. Nothing is kept for review, training, or anything else, which is a key part of keeping your data private.

Toxicity detection and prompt defense

Let’s face it, AI models can sometimes generate biased, inappropriate, or just plain weird responses (people often call these "hallucinations"). The Trust Layer has toxicity detection that scans both the user's prompt and the AI's response for anything harmful or offensive, flagging anything that seems out of line.

It also uses prompt defense, which is basically a set of instructions that tells the LLM how to behave. These guardrails keep the AI on a leash, reducing the odds of it going off-topic and protecting against attacks where someone tries to trick the AI into breaking its own safety rules.

Audit trail and monitoring

To keep everyone accountable, the Trust Layer maintains a detailed audit trail. Every prompt, every response, and every piece of user feedback gets logged and stored securely within Salesforce Data Cloud. This gives admins a way to see how AI is being used, review specific conversations, and make sure everything stays compliant with company policies.

Limitations of the Salesforce Einstein Trust Layer

While the Salesforce Einstein Trust Layer does a pretty good job of securing AI conversations that happen inside the Salesforce platform, the fact that it's built-in creates some real problems for most teams today.

The problem: A walled garden

The biggest issue is that the Einstein Trust Layer is only designed to protect data moving in and out of Salesforce. But your company’s knowledge doesn’t live in just one place. Most support teams depend on a bunch of different tools. Your help articles might be in a Zendesk help center, your internal process docs in Confluence, and the latest product updates in a bunch of Google Docs.

Salesforce’s AI can’t get to that external knowledge easily or securely. This creates knowledge silos and forces your AI to come up with answers based on a tiny slice of your company’s total wisdom. The result? Incomplete answers and unhappy customers.

Complicated and slow setup

Getting started with Einstein is not as easy as flipping a switch. To even be eligible, you have to be on one of Salesforce's most expensive plans, and then you have to buy a pricey add-on. The whole thing involves a long sales cycle and a drawn-out implementation project.

This feels completely out of step with how modern software should work. Teams want tools they can try out and set up themselves, usually in a few minutes. Being forced to "contact your Salesforce account executive" just to get started is a huge roadblock that slows things down and adds a layer of frustrating bureaucracy.

The anxiety of a "big bang" rollout

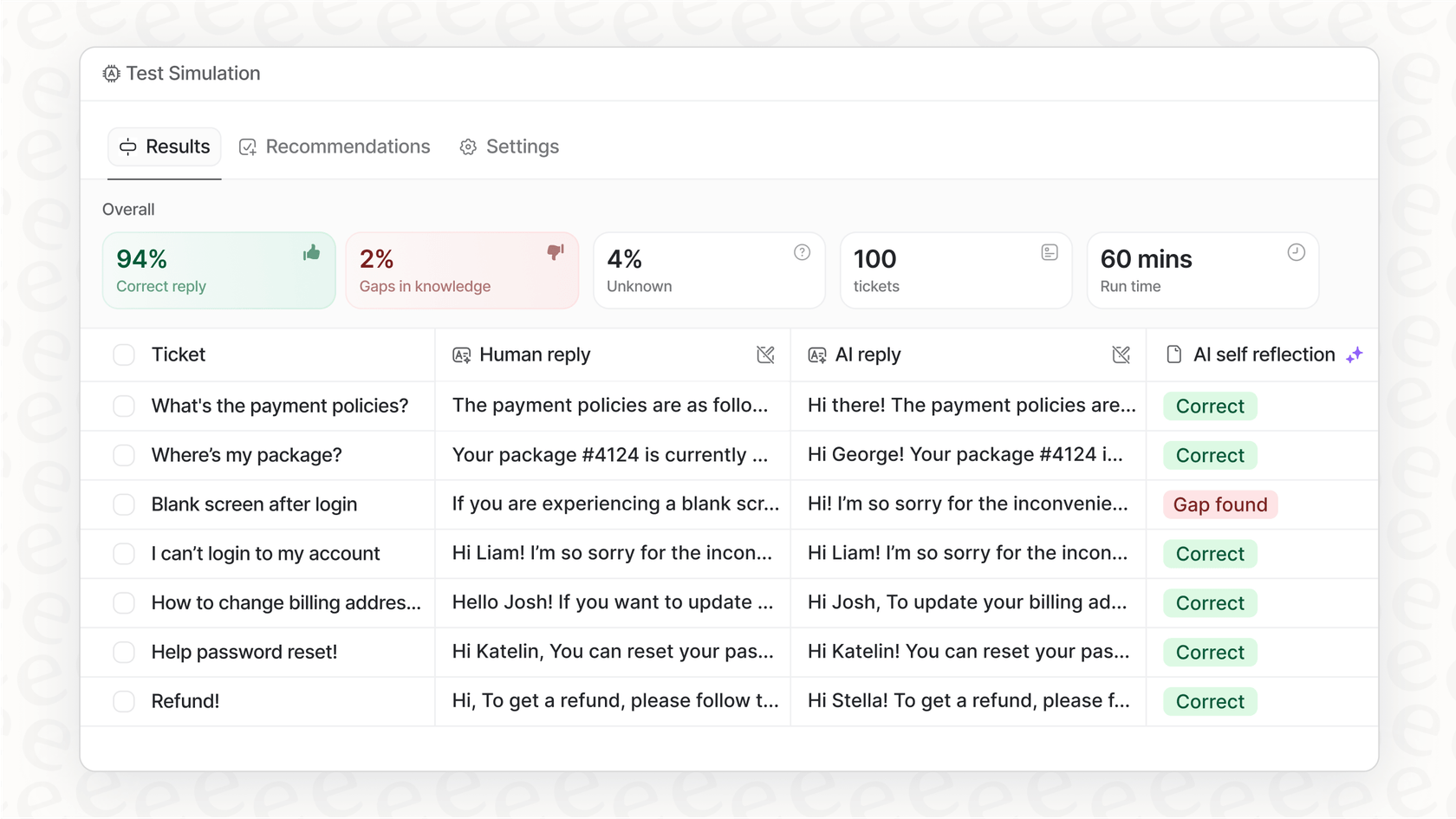

Testing a new AI system on live customer chats is a nerve-wracking idea. While Salesforce has some tools for this, it doesn't offer a simple way to simulate how the AI would perform on thousands of your actual past tickets. Without that kind of historical test run, it’s nearly impossible to know how well the AI will do or how many tickets it will actually be able to resolve.

This lack of a good simulation mode makes it tough for support leaders to roll out automation with any confidence. You’re often pushed into an "all or nothing" launch that can mess up your team's workflow and lead to a terrible customer experience if the AI isn't quite ready for prime time.

Salesforce Einstein Trust Layer pricing and packaging

If you’re trying to find a simple price for Salesforce's generative AI, you’re out of luck. The pricing is confusing and anything but transparent.

According to Salesforce's own documentation, their generative AI is only available if you're on an Enterprise, Performance, or Unlimited plan. On top of that, you have to buy one of their add-ons, like "Einstein for Service" or "Einstein 1 Service." And what do those cost? You guessed it: you have to "contact your Salesforce account executive" to get a quote.

Here’s what that really means for you:

-

A high cost of entry: You need to already be paying for a top-tier Salesforce plan before you can even think about their AI tools.

-

Surprise costs: The price of the add-on is a mystery. This leads to unpredictable expenses negotiated in private, making it impossible to budget for.

-

Vendor lock-in: This pricing model is built to pull you deeper into the Salesforce world, making it harder and more expensive to switch to other tools if your needs change later on.

The alternative: A flexible AI layer for all your tools

For teams that need an AI solution to work with all their tools, not just one, there's a better way. eesel AI was built to be that flexible AI layer, designed to solve the problems of closed platforms like Salesforce.

Go live in minutes, not months

Forget about the long sales calls and implementation projects that Salesforce requires. eesel AI is completely self-serve. You can sign up, connect your help desk like Zendesk or Freshdesk with a single click, and have a working AI Copilot in minutes, no mandatory demo or sales pitch required.

Better yet, eesel AI has a powerful simulation mode that lets you test your setup on thousands of your past support tickets. This gives you a solid forecast of your automation rate and cost savings before you go live, taking all the guesswork out of your rollout.

Connect all your knowledge, not just Salesforce data

eesel AI connects to all of your knowledge sources, not just a single platform. It integrates with over 100 tools, including wikis like Confluence and Google Docs, chat apps like Slack, and even e-commerce platforms like Shopify. By looking at your past tickets and macros alongside your help articles and internal docs, eesel AI builds a truly complete knowledge base. This ensures your AI gives accurate, context-aware answers every time, no matter where the information lives.

Full control and clear pricing

With eesel AI, you get a fully customizable workflow engine, so you can decide exactly which tickets the AI should handle and what it can do, from simple triage to looking up order information.

And when it comes to cost, there are no games. eesel AI offers transparent, predictable pricing plans with no per-resolution fees. You can start on a flexible monthly plan and cancel anytime, giving you the freedom to scale up or down without getting stuck in a long-term contract.

| Feature | Salesforce Einstein Trust Layer | eesel AI |

|---|---|---|

| Setup Time | Weeks to months; requires sales | Minutes; fully self-serve |

| Knowledge Sources | Primarily Salesforce data | 100+ integrations (Help desks, wikis, etc.) |

| Simulation | Limited and complex to configure | Built-in; simulate on thousands of past tickets |

| Pricing Model | Opaque; requires enterprise plans + add-ons | Transparent, flat monthly fees; no hidden costs |

| Flexibility | Locks you into the Salesforce ecosystem | Works with your existing tools |

Trust in AI means more than a single platform

The Salesforce Einstein Trust Layer is a solid and necessary security framework if your company lives and breathes Salesforce. It offers important features like data masking, zero-retention policies, and an audit trail that are table stakes for any enterprise-level AI.

However, its greatest strength, being deeply woven into Salesforce, is also its biggest weakness. It creates a walled garden that just doesn't match how modern teams actually work, with knowledge spread across dozens of different tools. In today's world, a trustworthy AI needs to be more than just secure; it needs to see everything.

For most support teams, a truly helpful AI solution has to be flexible, easy to set up, and able to connect all your knowledge, wherever it happens to be. Building real trust means giving your team an AI that has the full picture, not just a tiny piece of it.

Next steps

If you're looking for a secure AI solution that works with all the tools you already have, not just one, then you're ready for a more flexible approach.

Get started with eesel AI for free and see how quickly you can automate support by connecting to the tools your team already loves.

Frequently asked questions

The Salesforce Einstein Trust Layer is a security architecture built into Salesforce's native AI features. Its primary function is to act as a secure intermediary between user prompts and Large Language Models, ensuring sensitive company and customer data remains protected within the Salesforce environment.

It uses a data masking feature that automatically identifies and replaces sensitive details like names and credit card information with generic placeholders before sending the prompt to an external LLM. After the LLM provides a response, the Trust Layer un-masks the data. It also employs a zero-data retention policy, ensuring your data is never stored or used to train third-party models.

A significant limitation is that the Salesforce Einstein Trust Layer is primarily designed to protect and utilize data exclusively within the Salesforce platform. This means it struggles to access or secure knowledge stored in other tools like Zendesk, Confluence, or Google Docs, leading to knowledge silos and potentially incomplete AI responses.

Access to Salesforce's generative AI, which includes the Salesforce Einstein Trust Layer, is only available for customers on Enterprise, Performance, or Unlimited plans. Additionally, users must purchase a separate, often unlisted, add-on like "Einstein for Service," with specific pricing requiring direct consultation with a Salesforce account executive.

The Salesforce Einstein Trust Layer uses dynamic grounding to feed the AI real-time context from your CRM data, making responses more relevant and personalized. It also includes toxicity detection and prompt defense mechanisms to scan for inappropriate content and guardrails to keep the AI on topic, reducing the likelihood of irrelevant or harmful outputs.

Setting up the Salesforce Einstein Trust Layer is generally not a quick process, often involving a long sales cycle and an extended implementation project. Eligibility requires being on one of Salesforce's most expensive plans, followed by the purchase of an additional, costly add-on, making it less of a self-serve solution.

Share this post

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.