It seems like everyone in the Salesforce world is talking about AI, especially with new tools like Agentforce arriving on the platform. The promise of automating away the boring stuff and making teams more productive is huge. But let's be honest, the pressure to get it right is just as big.

While companies are racing to adopt AI, the real roadblocks aren't about the tech itself. They're about trust, security, and having some control over what you're building. Most people are (rightfully) a little nervous about deploying AI. How do you actually give an AI agent access to your company data and let it talk to customers without a solid governance plan in place?

This guide is here to help you cut through that noise. We’ll walk through what Salesforce AI Governance looks like, cover the real-world challenges you'll face, and map out a much simpler path for teams who just want to automate support safely and get on with their day.

What is Salesforce AI governance?

Salesforce AI Governance is basically Salesforce's set of rules, tools, and best practices for using AI responsibly on its platform. Think of it as the instruction manual that helps you get the good stuff from AI while keeping the risks under control.

And those risks are no joke. We’re talking about data breaches, AI models going off-script with biased decisions, damage to your brand's reputation, or even ending up on the wrong side of major regulations like the EU’s AI Act.

The whole concept is based on Salesforce's commitment to "Trusted AI", which is all about ensuring AI is accurate, safe, and transparent. It's a great idea in theory, but putting it into practice is where things start to get complicated.

The core parts of the native Salesforce AI governance framework

Salesforce has put together a suite of powerful, connected tools to help you build a governance framework. It’s an impressive setup, but it’s crucial to understand what each piece does and, more importantly, what it doesn’t do.

The data foundation: Data Cloud and Shield

At the center of Salesforce's strategy is Data Cloud. Its job is to pull all of your customer data into one unified profile. The idea is to give your AI a single, clean source of information to draw on.

Layered on top of that is Salesforce Shield, which adds a heavy dose of security. It gives you Platform Encryption to lock down sensitive data and Event Monitoring to see what users are up to. Related add-ons like Data Mask scramble information in your test environments.

But here’s the catch: this entire setup is designed to manage data that lives inside the Salesforce ecosystem. It’s fantastic for that specific job, but it completely misses all the critical knowledge your company has stored elsewhere.

Agent deployment with Agentforce

Agentforce is Salesforce's platform for building your own custom AI agents to handle tasks and chats. To keep them on a leash, Salesforce gives you built-in controls like the Agentforce Testing Center, which lets you see how an agent will behave in a safe sandbox. You also get "instruction adherence checks" to help guide the AI's logic and keep it from wandering off track.

The problem? Building, testing, and managing agents this way demands some serious platform expertise and a whole lot of time. It effectively hitches your entire AI strategy to Salesforce's wagon, which can be a slow, complex, and expensive journey that locks you into their world.

The Einstein Trust Layer

The Einstein Trust Layer is the security architecture running behind the scenes. It’s built to work with any AI model and has some really smart features, like automatically masking personal info (PII) before it gets sent to a large language model and enforcing a zero-data retention policy with providers like OpenAI.

These are excellent security measures. But they don't solve the biggest business problem: how do you know if an AI will actually be accurate and helpful before you unleash it on your customers? An audit trail is nice, but it won’t tell you if your new bot is about to annoy a third of your user base.
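To make the PII masking idea concrete, here is a minimal Python sketch of the general technique: swap sensitive values for placeholder tokens before a prompt leaves your systems, then restore them in the response. This is not how the Einstein Trust Layer is implemented. The patterns, token format, and function names are all assumptions made up for illustration, and real PII detection needs far broader coverage than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only. Real PII detection also has to
# handle names, addresses, account numbers, and locale-specific formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII with placeholder tokens and return the token-to-value map."""
    mapping: dict[str, str] = {}

    for label, pattern in PII_PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            # Tokens are numbered across all labels, e.g. [EMAIL_1], [PHONE_2].
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token

        text = pattern.sub(substitute, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into a model response."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

Only the masked text would be sent to the language model, while the mapping stays on your side so the reply can be restored before a customer or agent sees it.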

The real-world headaches of Salesforce AI governance

Salesforce gives you a solid set of tools, but for many teams (especially in customer support), trying to put this framework into action brings up some tough, practical problems.

The scattered knowledge problem

Let's be real for a second. The information needed to solve a customer's problem is almost never sitting neatly in your CRM. It’s spread out across Confluence pages, tucked away in Google Docs, mentioned in Slack threads, and buried in thousands of old support tickets in your helpdesk, which might be Zendesk or Intercom, not Service Cloud.

The native Salesforce AI Governance setup has no simple way to tap into this ocean of outside knowledge. This means your AI agents get an incomplete picture, give half-answers, and end up creating more work for your human agents.

This is exactly why a tool like eesel AI exists. It was designed to instantly unify all your different knowledge sources. It plugs into Salesforce, sure, but it also connects to Confluence, Google Docs, Notion, and over 100 other apps to create one comprehensive brain for your AI to use.

The complexity and "rip and replace" problem

Getting the full Salesforce AI Governance stack running isn't a casual afternoon project. It's a major undertaking that often requires specialized consultants and can take months of planning and work. It’s definitely not a switch you can just flip on.

Even worse, adopting these tools often means you have to overhaul your existing support workflows to fit the Salesforce way of doing things. If your team is already humming along nicely in another helpdesk, you’re suddenly looking at a painful "rip and replace" project just to get your AI started.

Compare that to eesel AI's self-serve approach. You can connect your helpdesk and knowledge bases in minutes, not months. The AI fits right into your current workflow without making you ditch the tools you and your team already know and use.

The risk of going live

This is the fear that keeps every support manager up at night. How can you be absolutely sure your shiny new AI agent won't say something weird or wrong to a real customer? Sandboxes and test environments are useful, but they can't fully predict the messy, random nature of actual customer conversations.

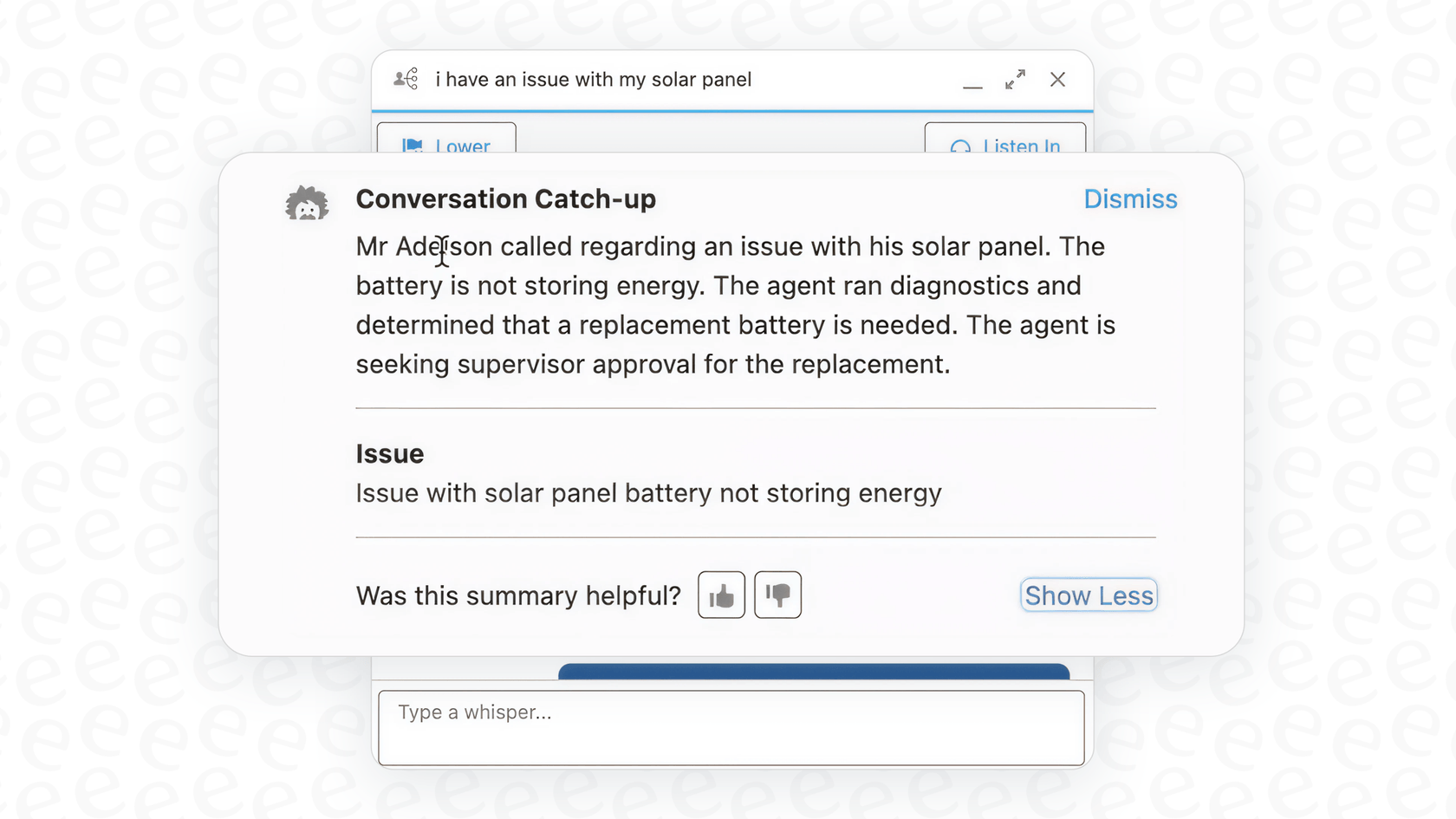

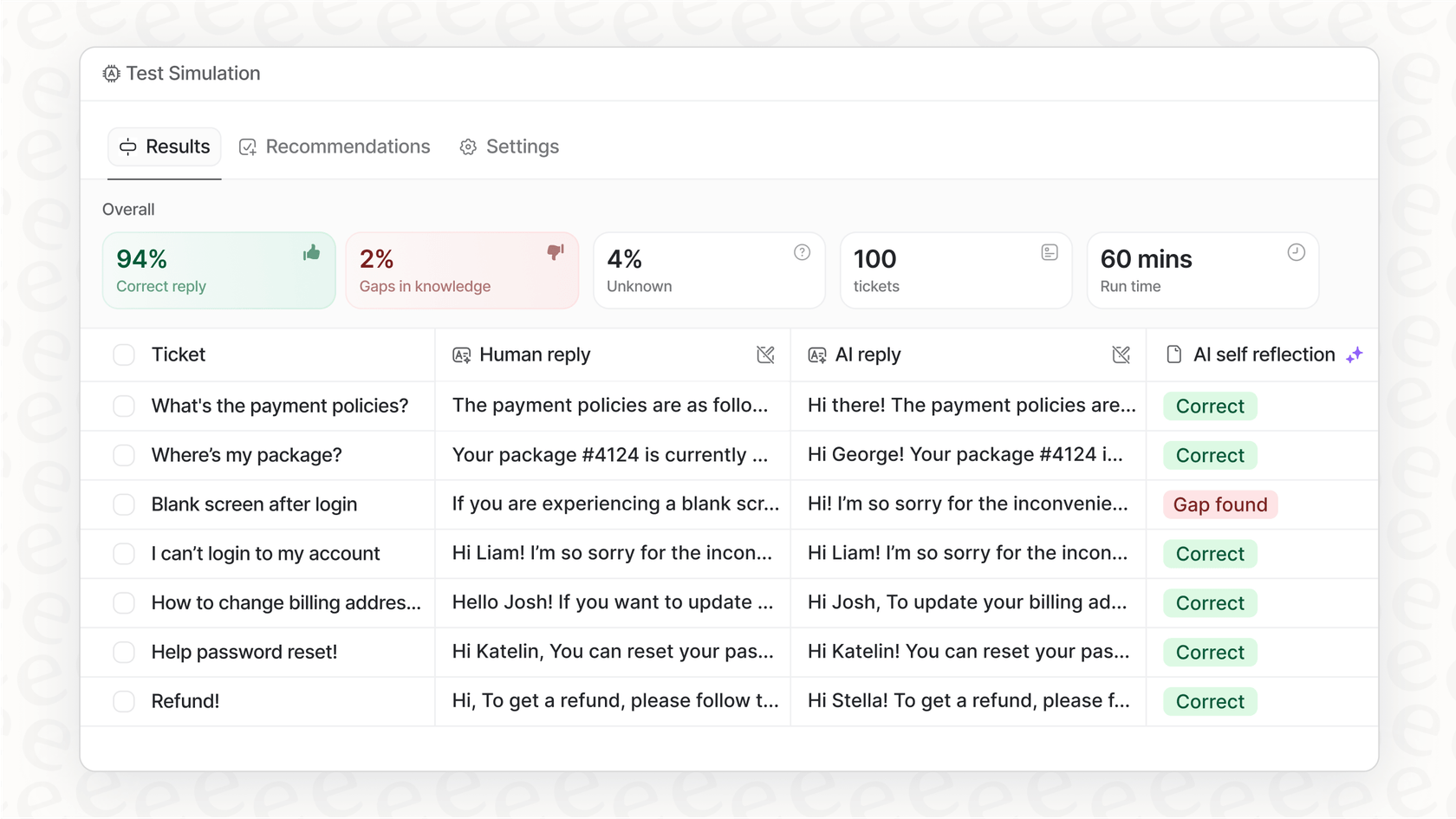

This uncertainty is why eesel AI’s simulation mode is so useful. Before you turn anything on, you can run the AI against thousands of your past support tickets. It shows you how the AI would have answered real customer questions, giving you a data-backed forecast of its resolution rate and potential cost savings. You can then tweak its behavior and launch with far more confidence.
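If you're wondering what "running the AI against past tickets" might look like in principle, here is a rough Python sketch. It is not eesel AI's implementation. The `Ticket` class, the `simulate` function, and the `answer_fn` callback are hypothetical names, and counting "the AI produced an answer" as a resolution is a deliberately crude proxy for a real quality review.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Ticket:
    question: str
    topic: str


def simulate(
    tickets: list[Ticket],
    answer_fn: Callable[[Ticket], Optional[str]],
) -> dict[str, float]:
    """Replay past tickets through answer_fn and return the answered share per topic."""
    totals: dict[str, int] = {}
    answered: dict[str, int] = {}
    for ticket in tickets:
        totals[ticket.topic] = totals.get(ticket.topic, 0) + 1
        # A return value of None stands for "hand this one to a human".
        if answer_fn(ticket) is not None:
            answered[ticket.topic] = answered.get(ticket.topic, 0) + 1
    return {topic: answered.get(topic, 0) / count for topic, count in totals.items()}


def toy_answer(ticket: Ticket) -> Optional[str]:
    """Stand-in for an AI agent: it only knows how to answer shipping questions."""
    canned = {"shipping": "Your order ships within two business days."}
    return canned.get(ticket.topic)
```

Breaking the rate down by topic is a design choice: it shows which question types look safe to automate first and which should stay with your human agents.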

A simpler, unified way to handle Salesforce AI governance for support

Instead of getting stuck in a complex, platform-specific framework, you can take a more practical approach to AI governance that gets you results faster and with way less risk.

Pull all your knowledge into one place

A trustworthy AI support agent needs to know everything your team knows, no matter where that information lives. eesel AI connects to your entire knowledge ecosystem without a fuss. It can even learn from your past tickets in platforms like Zendesk or Freshdesk, automatically picking up your brand's tone of voice and most common solutions from day one. This creates a single source of truth that is far more complete than what Salesforce can see on its own.

![A view of the eesel AI automated ticketing system dashboard showing one-click integrations with tools like Zendesk and [REDACTED].](/_next/image?url=https%3A%2F%2Fwebsite-cms.eesel.ai%2Fwp-content%2Fuploads%2F2025%2F08%2F03-Screenshot-of-integrations-available-in-the-eesel-AI-automated-ticketing-system.png&w=1680&q=100)

Stay in the driver's seat with a customizable workflow

Real governance means you're always in control. With eesel AI, you don’t have to go all-or-nothing on automation. You can set up specific rules that decide exactly which tickets the AI should handle (like simple "where is my order?" questions) and which ones it should immediately send to a human.

You can also use a simple prompt editor to shape the AI's personality, tone, and what it's allowed to do. Need it to pull order info from Shopify or tag a ticket in a certain way? You can set all of that up yourself without needing a developer.
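The routing rules described above boil down to a small decision function. Here is a minimal Python sketch of that idea, assuming simple keyword matching on the ticket subject. The rule lists, channel names, and the `route` function are invented for illustration and don't reflect how eesel AI or Salesforce configure routing.

```python
from dataclasses import dataclass


@dataclass
class SupportTicket:
    subject: str
    channel: str


# Hypothetical rule set: start narrow, then widen as you gain confidence.
AI_TOPICS = ("where is my order", "order status", "tracking")
ESCALATE_KEYWORDS = ("refund", "legal", "cancel", "complaint")
AI_CHANNELS = {"email"}


def route(ticket: SupportTicket) -> str:
    """Return "ai" or "human" for a ticket, defaulting to a human when unsure."""
    subject = ticket.subject.lower()
    # Sensitive keywords always win, even if the ticket also looks routine.
    if any(keyword in subject for keyword in ESCALATE_KEYWORDS):
        return "human"
    # Only channels that have been switched on for automation are eligible.
    if ticket.channel not in AI_CHANNELS:
        return "human"
    if any(topic in subject for topic in AI_TOPICS):
        return "ai"
    return "human"
```

With these sample rules, an email titled "Where is my order #1234?" goes to the AI, while the same question with a refund request attached, or arriving over chat, goes to a person. Defaulting to a human keeps anything unexpected out of the automated path.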

Launch with confidence, not guesswork

The ability to test before you trust is the ultimate governance tool. The simulation mode in eesel AI takes the guesswork and risk out of launching your AI. You get a clear preview of how it will perform before it ever chats with a single customer.

From there, you can roll it out slowly. Start by letting the AI handle just one type of common question, or turn it on for just one support channel. As you watch the reports and see the good results, you can confidently expand what it does at a pace that feels comfortable.

A practical path to trusted Salesforce AI governance

Salesforce has built a strong governance framework that’s a great fit for companies living entirely within its ecosystem. But for most support teams, it’s a complicated, slow, and walled-off approach that misses key knowledge and comes with a lot of risk when you finally go live.

A properly governed AI support strategy needs a tool that is easy to set up, connects all of your knowledge, and lets you deploy safely and with confidence. By adding a specialized AI platform built for the way modern support teams actually work, you can get trusted, effective automation up and running much faster and more safely.

Take control of your AI support automation

Ready to see how you can safely launch an AI support agent using your Salesforce data and all your other knowledge sources? Simulate eesel AI on your historical tickets and see your potential resolution rate within minutes.

Frequently asked questions

Salesforce AI Governance refers to the rules, tools, and best practices for responsible AI use within the Salesforce platform. It's crucial for managing risks like data breaches, biased AI decisions, and reputational damage, ensuring AI is accurate, safe, and compliant with regulations like the EU’s AI Act.

Salesforce provides several key components for AI governance, including Data Cloud for unifying customer data and Salesforce Shield for security like encryption and monitoring. Agentforce offers tools for building and controlling AI agents, while the Einstein Trust Layer handles behind-the-scenes security such as PII masking and zero-data retention.

A primary challenge is the "scattered knowledge problem," as crucial information often resides outside Salesforce in various apps. Additionally, the native setup can be complex and time-consuming, often requiring significant overhauls to existing workflows, leading to a "rip and replace" scenario.

The native Salesforce AI Governance framework is primarily designed to manage data within its own ecosystem. It struggles to tap into external knowledge sources like Confluence, Google Docs, or other helpdesks, which can lead to incomplete AI responses.

While Salesforce offers tools like the Agentforce Testing Center, fully predicting AI behavior in real customer conversations remains a concern. Solutions like simulation modes, which test AI against historical tickets, can provide data-backed forecasts of performance and help ensure confidence before deployment.

Implementing the full Salesforce AI Governance stack is often a major undertaking, requiring specialized expertise and potentially months of planning and work. It's not a simple switch and can lock organizations into a specific, often complex, ecosystem.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.