Rovo prompt best practices: A practical guide for Atlassian teams

Stevia Putri

Katelin Teen

Last edited November 2, 2025

Expert Verified

So, Atlassian Rovo is now included in all paid cloud plans. It’s integrated right into tools your team already uses, like Jira and Confluence, and it's meant to be your new AI teammate. But as you may have discovered, getting it to give you consistently good results isn't as simple as just asking a question.

The secret sauce is in the "prompting," which is just a fancy way of saying how you ask the AI for things. This guide will walk you through the Rovo Prompt Best Practices that actually work. We'll cover the basics for simple chats and then move on to the more detailed instructions needed for complex AI agents. We’ll also get real about some of the common headaches teams run into and how to build more reliable AI workflows.

What is Atlassian Rovo?

Atlassian Rovo is built to act like an AI teammate that has a solid grasp of your company's internal knowledge and processes. It works in three main ways:

- Rovo Search: This helps you find information scattered across your Atlassian tools and even connects to other apps like Slack, Google Drive, and Microsoft SharePoint.
- Rovo Chat: This is where you can have a conversation with the AI. Ask it to summarize a long Confluence page, brainstorm some ideas, or answer a quick question.
- Rovo Agents: These are like little virtual helpers you can build to handle repetitive tasks, like drafting release notes or tidying up your Jira backlog. You can create them with a no-code tool called Rovo Studio, or if you need something more custom, developers can use the Atlassian Forge platform.

Essentially, Rovo is trying to pull all your team’s knowledge together in one place, especially for those who live and breathe the Atlassian suite.

Getting started with Rovo Prompt Best Practices for chat and search

The foundation for making Rovo useful is pretty simple: clear communication. Whether you're looking for a document or asking for a quick summary, the quality of your prompt will make or break the answer you get back.

Be clear, contextual, and conversational

My best advice is to talk to Rovo less like you're typing into a search engine and more like you're giving instructions to a new team member. The more specific you are, the better the outcome.

- Use action words: Kick off your prompts with verbs like "Create," "Summarize," "Find," or "List." This tells Rovo exactly what you want it to do.
- Give it some context: Instead of a vague question like "What's the status?", try something more specific: "What is the status of the 'Q4 Security Initiative' project in Jira?"
- Write like a human: You don't need to stuff your prompts with keywords. Just ask your questions in a natural, conversational way, like you would if you were messaging a colleague.
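To make the first two habits concrete, here is a minimal Python sketch that assembles a chat prompt from an action verb, a specific target, and optional context. The `build_prompt` function and its parameters are hypothetical illustrations, not part of any Rovo API; Rovo Chat takes plain text, so the result is simply a string you would type or paste in.

```python
# Hypothetical helper that builds a plain-text prompt for Rovo Chat.
# Nothing here calls Rovo; it only shows the structure of a clear request.

ACTION_VERBS = {"Create", "Summarize", "Find", "List"}


def build_prompt(action: str, target: str, context: str = "") -> str:
    """Combine an action verb, a specific target, and optional context."""
    if action not in ACTION_VERBS:
        raise ValueError(f"Start with an action verb such as: {sorted(ACTION_VERBS)}")
    parts = [action, target.strip()]
    if context.strip():
        parts.append(context.strip())
    prompt = " ".join(parts)
    # Finish the sentence unless it already ends with punctuation.
    if not prompt.endswith((".", "?", "!")):
        prompt += "."
    return prompt


print(build_prompt(
    "Summarize",
    "the key decisions and action items",
    "from the comment thread on Jira ticket PROJ-123",
))
```

The verb list is only illustrative; the point is that the action, the exact object, and the surrounding context all appear in the request, rather than leaving Rovo to guess.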

Tweak your prompts as you go

Think of your first prompt as the start of a conversation, not the end. If the first answer isn't quite what you were looking for, just refine your request with a bit more detail. For example, if Rovo drafts a Jira ticket for you, you could follow up with, "Okay, now add acceptance criteria based on the technical brief on this Confluence page."

The big catch with prompt-based knowledge

Here's the thing about relying on prompts: even a perfectly written prompt can't fix a messy knowledge base. Rovo is only as good as the information it has access to. If your Confluence spaces are full of outdated documents or your files are a mess, Rovo will just serve up those same outdated, messy answers.

This points to a problem most of us are familiar with: keeping official documentation perfectly up-to-date is a never-ending battle. Often, the most current and valuable information is buried in the day-to-day conversations happening in support tickets or Slack threads. This is why some newer AI tools are designed to learn from more than just official docs. For instance, platforms that can train directly on thousands of your team's past conversations can pick up your brand voice and figure out the right answers without you needing to write the perfect prompt every time.

| Vague Prompt | Refined Prompt |
|---|---|
| "Plan the project" | "Draft a project plan in Confluence for the 'New Website Launch'. Add sections for a timeline, key stakeholders, objectives, and potential risks. Assign the 'Design' tasks to Sarah." |
| "Summarize the update" | "Summarize the key decisions and action items from the comment thread on Jira ticket PROJ-123." |

Advanced Rovo Prompt Best Practices for AI agents and automation

Once you move past basic chat and search, you can use Rovo Agents to automate workflows with multiple steps. This is where things can get a lot more complicated, and a solid understanding of Rovo Prompt Best Practices becomes even more important.

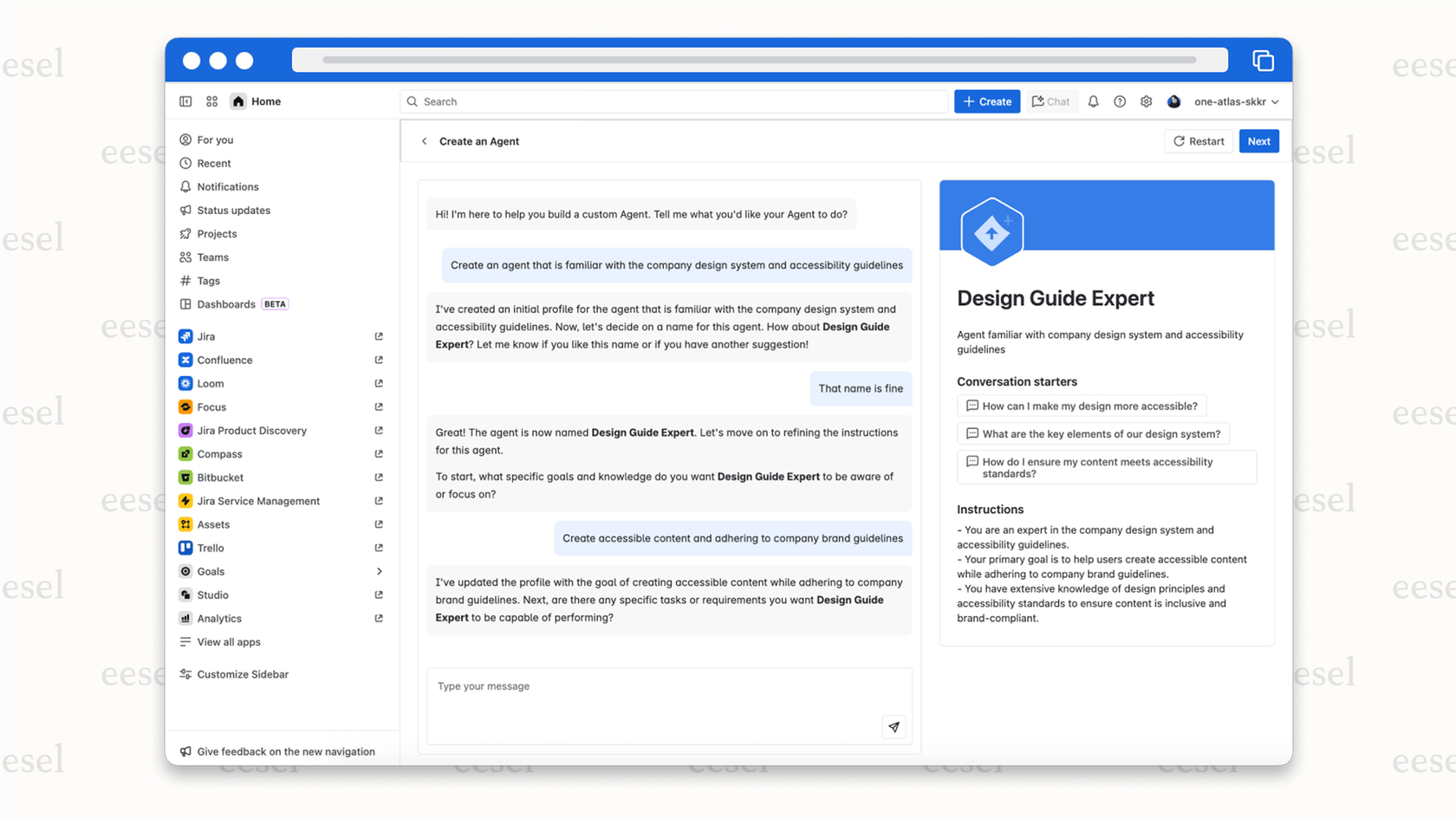

Building agents: No-code vs. developer-heavy

Atlassian gives you two options for building these agents:

- Rovo Studio: This is a no-code tool that lets just about anyone create agents for simple, repetitive tasks.
- Atlassian Forge: This is a full-on developer platform for building custom agents that are deeply integrated into your systems. It requires knowing your way around JavaScript, APIs, and manifest files.

Writing Rovo agent instructions

The "instructional prompt" is the brain of your agent. It’s a set of core instructions that defines what the agent is, what its goals are, and how it should behave in different scenarios. A solid instructional prompt might look something like this:

- Role and Goal: "You are a 'Release Notes Drafter'. Your main job is to create easy-to-read release notes from completed Jira issues."
- Process: "When you're activated, you need to look at all Jira issues in the 'Ready for Release' status. Then, group them by 'feature' and 'bug fix'."
- Rules and Logic: "If an issue doesn't have a clear summary, flag it for a human to look at. The final output should be a bulleted list on a new Confluence page."
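Keeping the role, process, and rules as separate pieces makes an instructional prompt easier to review and update. The following Python sketch assumes you maintain those parts yourself and join them into one plain-text block to paste into your agent's instructions; the `AgentInstructions` class and its field names are hypothetical and not part of Rovo Studio or Forge.

```python
from dataclasses import dataclass, field


@dataclass
class AgentInstructions:
    """Hypothetical container for the parts of an agent's instructional prompt."""
    role_and_goal: str
    process: list[str]
    rules: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the parts into one plain-text instruction block."""
        lines = ["Role and goal:", self.role_and_goal, "", "Process:"]
        # Number the steps so the agent (and human reviewers) can follow the order.
        lines += [f"{i}. {step}" for i, step in enumerate(self.process, start=1)]
        if self.rules:
            lines += ["", "Rules:"]
            lines += [f"- {rule}" for rule in self.rules]
        return "\n".join(lines)


drafter = AgentInstructions(
    role_and_goal=(
        "You are a 'Release Notes Drafter'. Your main job is to create "
        "easy-to-read release notes from completed Jira issues."
    ),
    process=[
        "Look at all Jira issues in the 'Ready for Release' status.",
        "Group them by 'feature' and 'bug fix'.",
    ],
    rules=[
        "If an issue doesn't have a clear summary, flag it for a human to look at.",
        "The final output should be a bulleted list on a new Confluence page.",
    ],
)
print(drafter.render())
```

Numbered steps and a separate rules section are a design choice, not a Rovo requirement; they simply make it obvious when a step or an edge-case rule is missing.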

A common problem: Things can get brittle

While the instructional prompt gives the agent its logic, you still have to hook it up to an automation trigger in Jira or Confluence. This is where things can get a bit wobbly. As some consultants like Atlas Bench have pointed out, teams have seen their automation rules break simply because the rules couldn't reliably read the AI agent's response.

You end up with a fragile system where one small, unexpected change in the AI's output can bring an entire workflow to a halt, sending you on a technical treasure hunt to figure out what went wrong. The root of the issue is that the AI's brain and the automation engine are two separate things, and the connection between them isn't always stable.
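One common, platform-independent mitigation is to ask the agent for a fixed output shape and validate it before anything downstream acts on it. The sketch below assumes, hypothetically, that the agent was instructed to reply with a JSON object containing `summary` and `category` fields; any reply that doesn't match is routed to human review instead of halting the workflow. It is plain Python, not Jira Automation syntax.

```python
import json

# Hypothetical contract: the agent was instructed to reply with JSON like
# {"summary": "...", "category": "feature"} or {"summary": "...", "category": "bug fix"}.
ALLOWED_CATEGORIES = {"feature", "bug fix"}


def parse_agent_reply(raw: str) -> dict:
    """Validate an agent reply; fall back to human review instead of failing."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {"status": "needs_review", "reason": "reply was not valid JSON"}
    if not isinstance(data, dict):
        return {"status": "needs_review", "reason": "reply was not a JSON object"}
    summary = data.get("summary")
    if not isinstance(summary, str) or not summary.strip():
        return {"status": "needs_review", "reason": "missing summary"}
    category = data.get("category")
    # Check the type first so unexpected values (e.g. lists) can't raise here.
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return {"status": "needs_review", "reason": f"unexpected category: {category!r}"}
    return {"status": "ok", "summary": summary.strip(), "category": category}
```

For example, a chatty reply such as "Sure! Here are your notes:" comes back as `needs_review` rather than silently breaking the next step.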

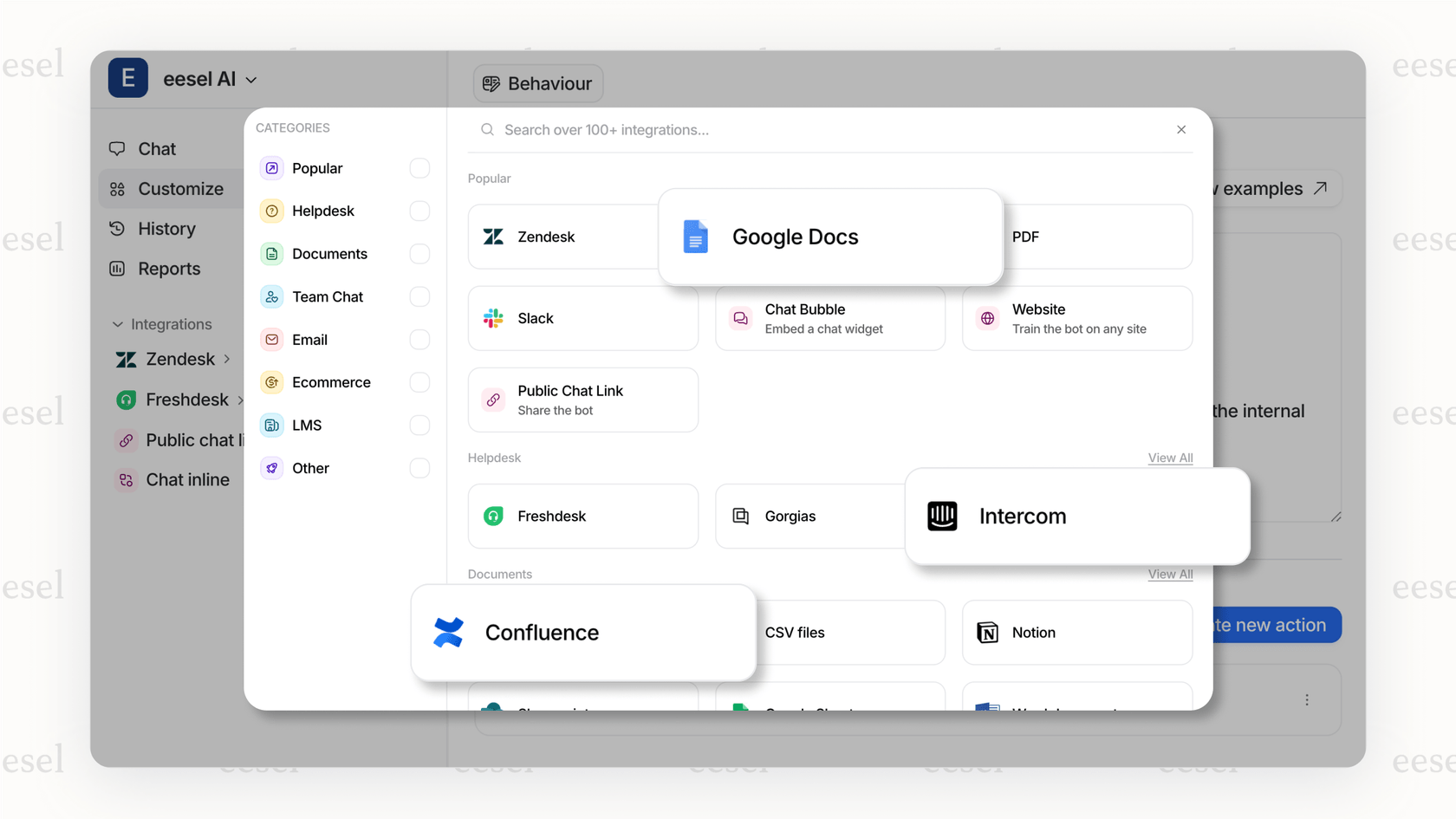

A more solid alternative: Integrated AI and workflows

Instead of fighting with platform-specific automation rules, a better approach is to use a tool where the AI and the workflow engine are built together from the ground up. Take eesel AI, for example. It lets you build powerful automations right inside its dashboard, without needing to write code or deal with complicated variables.

You can set up rules with just a few clicks, like:

- "Only respond to tickets that mention 'password reset' or 'billing inquiry'."
- "If the customer seems upset, escalate the ticket to a human agent right away."
- "When someone asks for an order status, use our Shopify integration to get the details and reply to them directly."

This approach gives you fine-grained control in a simple interface, resulting in a system you can actually rely on.
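To show what rules like these express, here is a minimal Python sketch of keyword filtering, escalation, and delegation to an order lookup. It is not eesel AI's implementation or configuration format; the `route` function, the keyword lists, the naive word-based "upset" check, and the `lookup_order` callback (standing in for a real Shopify integration) are all hypothetical.

```python
from typing import Callable

# Hypothetical rule inputs; a real system would configure these elsewhere
# and use proper sentiment detection instead of a word list.
IN_SCOPE_TOPICS = ("password reset", "billing inquiry", "order status")
UPSET_WORDS = ("angry", "furious", "unacceptable", "ridiculous")


def route(ticket_text: str, lookup_order: Callable[[str], str]) -> str:
    """Apply simple, ordered rules to a ticket and return the action taken."""
    text = ticket_text.lower()
    # Rule 1: escalate upset customers before anything else.
    if any(word in text for word in UPSET_WORDS):
        return "escalate_to_human"
    # Rule 2: only respond to tickets on topics the AI is allowed to handle.
    if not any(topic in text for topic in IN_SCOPE_TOPICS):
        return "ignore"
    # Rule 3: order-status questions are answered via the order lookup.
    if "order status" in text:
        return "reply: " + lookup_order(ticket_text)
    return "draft_ai_reply"
```

Checking escalation first is a deliberate ordering choice in this sketch: an upset customer reaches a human even if their ticket is outside the AI's scope.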

Testing, confidence, and launching

So you’ve built your agent. The big question now is: will it actually work when real people start using it? A wrong or unhelpful AI response can be frustrating and quickly make people lose trust in the tool.

The Rovo deployment path

For a custom Rovo Agent built with Forge, the deployment process is pretty standard for developers: you move it through development, staging, and finally to production. It’s a familiar process, but it can be slow. For agents built in the no-code Rovo Studio, the path is less defined. It usually involves testing it with a small group of users and crossing your fingers.

The risk of insufficient testing

When you can't test your AI against a lot of real-world scenarios before launch, you're essentially flying blind. You won't know how it will handle weird edge cases or tricky questions until it’s already live and interacting with your team or customers.

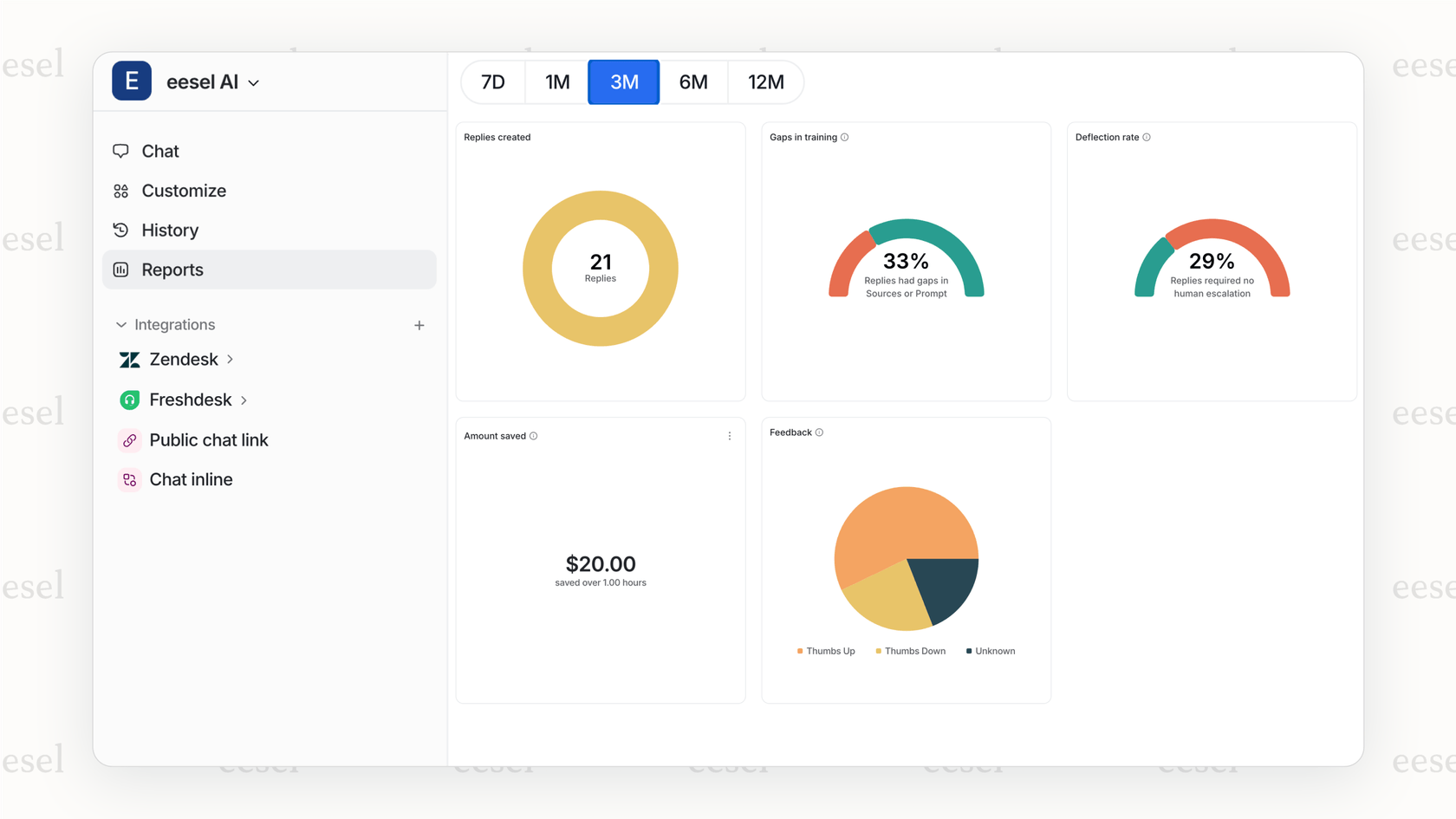

Building confidence with risk-free simulations

This is one area where a dedicated AI platform really shines. Tools like eesel AI come with a Simulation Mode. Before your AI agent goes anywhere near a real user, you can run it on thousands of your actual historical support tickets or internal requests.

The simulation report gives you a clear picture of what to expect:

- It shows you exactly how the AI would have replied in every single one of those cases.
- It gives you a data-backed forecast of your expected automation rate.
- It points out where you have gaps in your knowledge base that need to be filled.

This lets you tweak your prompts, add more knowledge, and adjust your workflows with total confidence, so you can have a smooth launch from day one.
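The core idea behind a simulation run can be sketched generically: replay past tickets through a candidate responder, count how many it could answer, and collect the ones it couldn't as likely knowledge gaps. The Python below is a hypothetical illustration of that calculation, not eesel AI's Simulation Mode; the `Ticket` type, the `respond` callback (returning `None` when there is no confident answer), and the toy knowledge base are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Ticket:
    ticket_id: str
    question: str


def simulate(tickets: list[Ticket], respond: Callable[[str], Optional[str]]) -> dict:
    """Replay historical tickets and summarize how the responder would have done."""
    replies: dict[str, Optional[str]] = {}
    gaps: list[str] = []
    for ticket in tickets:
        answer = respond(ticket.question)
        replies[ticket.ticket_id] = answer
        if answer is None:
            gaps.append(ticket.question)  # no answer: a likely knowledge gap
    answered = sum(1 for a in replies.values() if a is not None)
    rate = answered / len(tickets) if tickets else 0.0
    return {"replies": replies, "automation_rate": rate, "gaps": gaps}


# Toy knowledge base and responder, for illustration only.
KNOWLEDGE = {"reset password": "Use the 'Forgot password' link on the login page."}


def toy_respond(question: str) -> Optional[str]:
    for topic, answer in KNOWLEDGE.items():
        if topic in question.lower():
            return answer
    return None


history = [
    Ticket("T-1", "How do I reset password?"),
    Ticket("T-2", "Can I change my invoice address?"),
]
report = simulate(history, toy_respond)
print(report["automation_rate"])  # 0.5
print(report["gaps"])             # ['Can I change my invoice address?']
```

This rate only counts tickets that received some answer; a realistic forecast would also compare each simulated reply against what your team actually sent.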

Rovo pricing

The good news is that Atlassian Rovo is now included with all paid Atlassian Cloud plans: Standard, Premium, and Enterprise. While it's available to all paying customers, there are different usage limits depending on which tier you're on.

Moving beyond Rovo Prompt Best Practices with a truly self-serve AI platform

Getting the hang of Rovo Prompt Best Practices is a must for any team that wants to make Atlassian's AI useful. It takes a bit of thought, a willingness to iterate, and for the really advanced stuff, a fair amount of technical work.

But a truly helpful AI tool shouldn't put the entire burden on you to write the perfect prompt or debug fragile automation rules. The platform itself should be smart, flexible, and straightforward to manage.

eesel AI offers a much simpler path. Our platform learns from the conversations you're already having, has an intuitive workflow engine that anyone on your team can use, and lets you test everything with risk-free simulations. You can get a production-ready AI agent up and running in minutes, not months, and start seeing the benefits almost immediately.

Ready to see how simple and powerful an AI support agent can be? Try eesel AI for free or book a demo to learn more.

Frequently asked questions

Start by being clear and conversational. Use action words like "Summarize" or "Find," provide sufficient context, and talk to Rovo as you would a new team member. The more specific your request, the better the initial response.

Treat your first prompt as the beginning of a conversation. If the answer isn't what you need, refine your request by adding more detail or specifying what was missing or incorrect in the previous response. This iterative approach helps Rovo narrow down to the desired outcome.

While Rovo Prompt Best Practices aim for clarity, even perfect prompts can't compensate for a disorganized or outdated knowledge base. Rovo will only retrieve and process the information it has access to, meaning good data management is crucial for accurate AI responses, regardless of prompt quality.

Yes, when building Rovo Agents, you need an "instructional prompt" that defines the agent's role, goals, process, and specific rules. This is more complex than simple Chat or Search prompts, requiring a detailed, multi-part instruction set to guide multi-step workflows.

For Rovo Agents, focus on highly structured and explicit instructions that clearly define every step and expected output. Even then, because Rovo's AI and its automation engine are separate, agent workflows can still be fragile; this guide points to integrated AI platforms as a more stable alternative.

Testing is critical to building trust in your AI agent. Custom Forge agents follow the standard development lifecycle, but for Rovo Studio agents the path is less defined. Ideally, test against a wide range of real-world scenarios, which dedicated AI platforms often support through simulation modes.

The ultimate goal is to make Rovo a truly helpful AI teammate that improves team efficiency and knowledge access. While Rovo Prompt Best Practices are essential, some teams find integrated AI platforms like eesel AI offer a simpler, more robust path with intuitive workflows and built-in learning from existing conversations, reducing the reliance on perfect prompts.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.