Cisco's recent move to acquire Robust Intelligence for a reported $400M has put a huge spotlight on a corner of the tech world that’s quickly moving to center stage: AI security. For years, companies have been in a sprint to build and roll out AI, but the conversation around how to protect these powerful systems from new, almost invisible threats has been a few steps behind.

Robust Intelligence is a company focused on making AI systems safe from the inside out. But what does that really mean? In this post, we’ll break down what Robust Intelligence does, why its mission is so important for any business using AI, and what this trend means for you, whether you’re using AI for customer support, IT, or anything in between.

What is Robust Intelligence?

At its heart, Robust Intelligence is an AI security company. They’ve built a platform to protect AI models, and the apps they run on, through their entire lifecycle, from the first line of code to every customer interaction.

Founded by former Harvard professor Yaron Singer and his then-student Kojin Oshiba, the company was built on a simple but critical idea: AI models can fail, and they will. Their job is to prevent those failures by sniffing out vulnerabilities before they can be exploited and guarding against malicious attacks in real time.

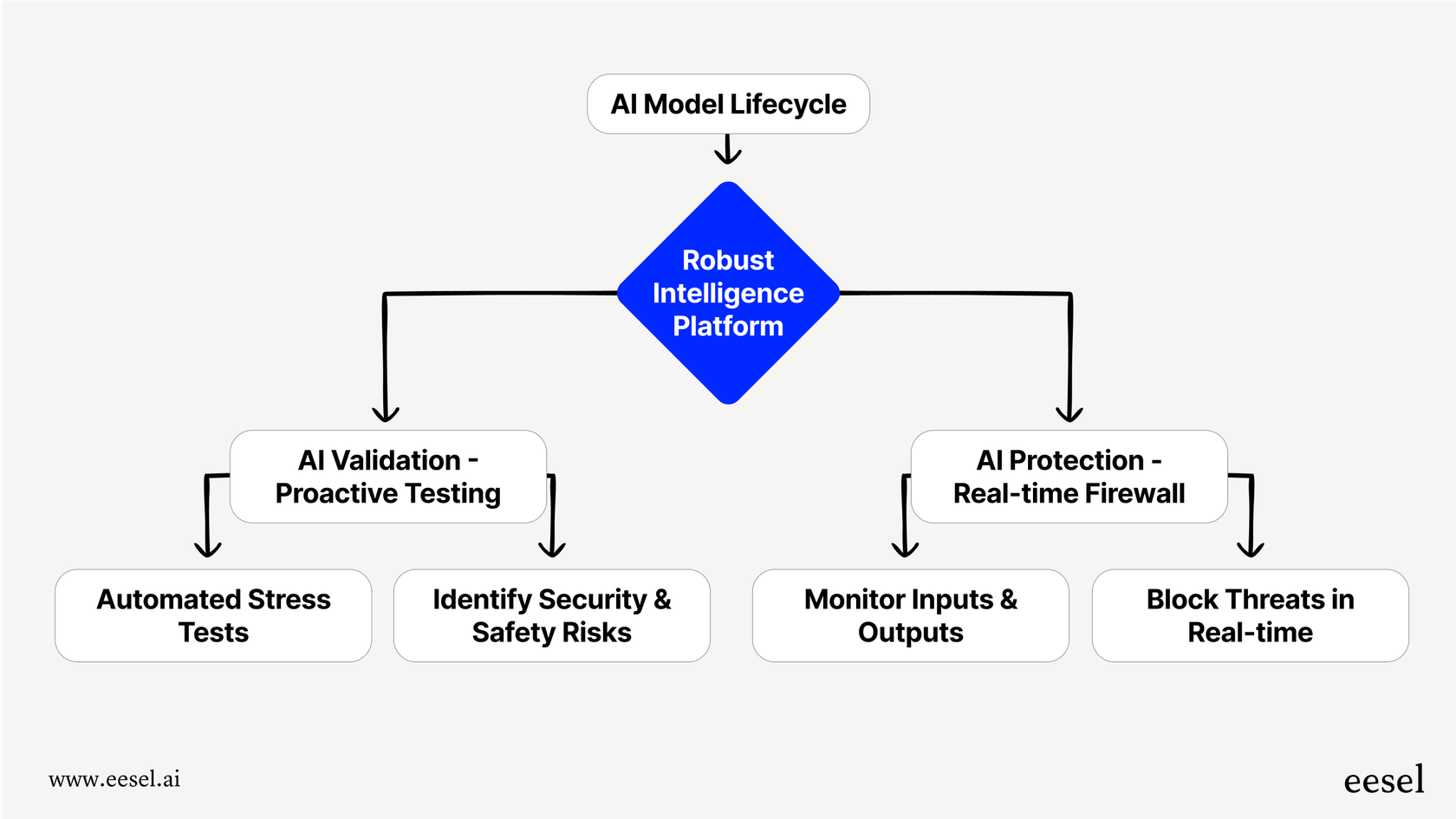

Their platform is split into two main parts:

-

AI Validation: This is the proactive stuff. Before an AI model ever sees the light of day, this tool automates the process of testing it for security holes and safety risks. Think of it as a stress test that finds the weak points before they can cause any real-world damage.

-

AI Protection (AI Firewall): This is the real-time defense. Once a model is up and running, the AI Firewall acts like a set of guardrails, constantly watching the inputs and outputs to block threats as they pop up.

They’re basically building the security immune system for a new generation of technology.

The core problem it solves: Why AI model security is so important

As companies rush to adopt AI, they're also opening the door to a new class of risks that their traditional cybersecurity tools just can't see. AI models aren't like regular software; they can be manipulated in some pretty strange and subtle ways.

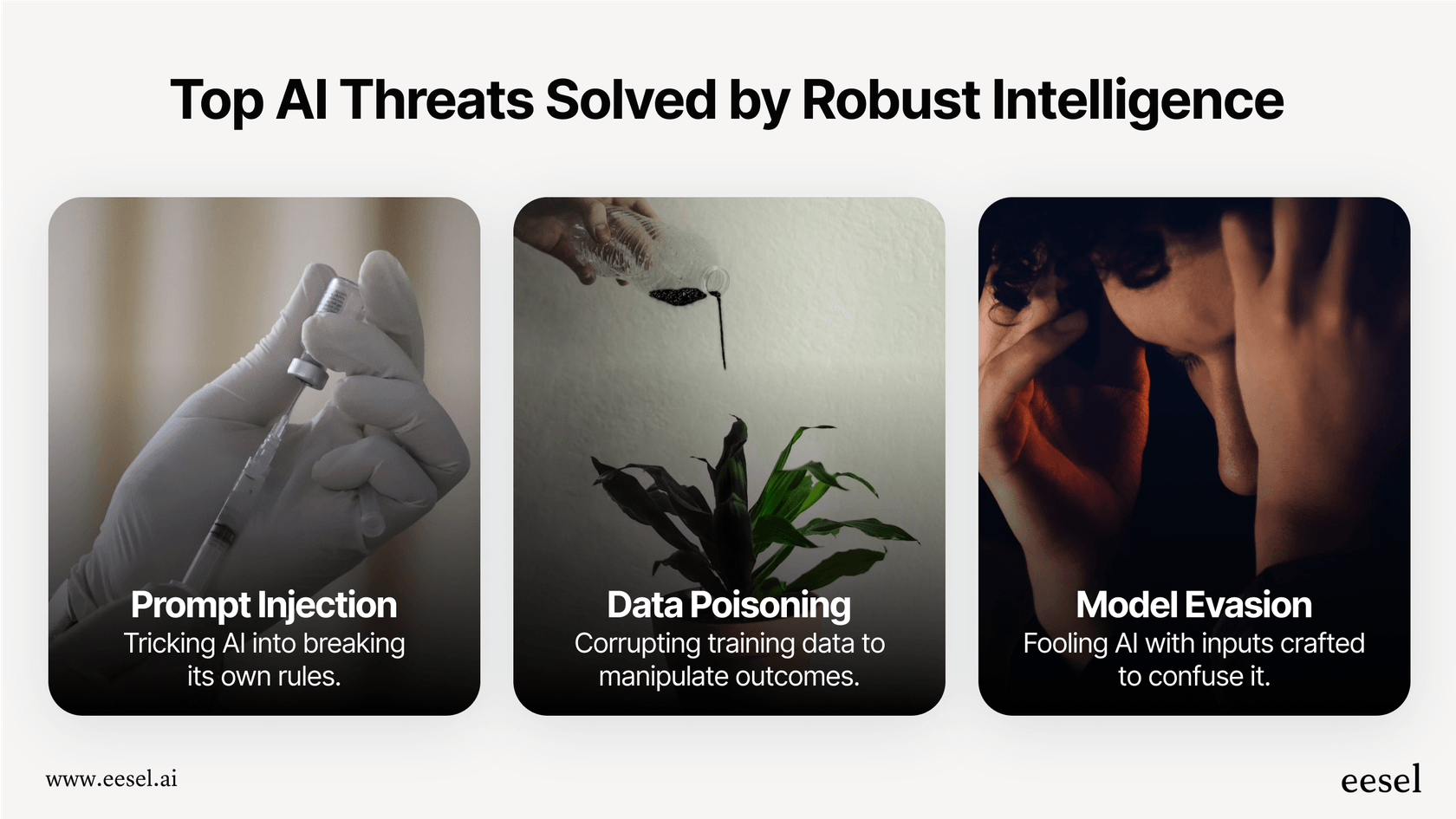

Robust Intelligence was built to defend against these unique threats. Here are a few of the big ones:

-

Prompt Injection & Jailbreaking: This is basically social engineering for AI. It involves tricking a bot into ignoring its own safety rules or giving up sensitive information it shouldn't. An attacker could, for instance, write a prompt that tricks a customer service bot into spilling another user's account details or generating harmful content.

-

Data Poisoning: An AI is only as good as the data it learns from. Data poisoning is when an attacker secretly feeds the AI bad data during its training phase. This can create hidden backdoors or introduce biases that cause the model to make terrible decisions later on, all without anyone noticing until it's too late.

-

Model Evasion: This is all about creating inputs that are specifically designed to confuse an AI and make it mess up. For example, an attacker might tweak an image in a way that’s invisible to a human but causes an AI to completely misidentify what it's seeing.

These aren't just theoretical problems. Researchers have shown again and again how vulnerable even the most advanced models can be. Securing them isn’t just a nice-to-have; it’s becoming a basic requirement for doing business.

The Cisco acquisition of Robust Intelligence: What it means for enterprise AI

When a giant like Cisco spends hundreds of millions on a specialized startup, you know the market is changing. This acquisition confirms that AI security is no longer a niche concern for a handful of data scientists but a top-level priority for every major company.

In the announcement blog post, Cisco’s SVP of Security, Tom Gillis, said it well: "The combination of Cisco and Robust Intelligence means that we can deliver advanced AI security processing seamlessly into the existing data flows..."

This is a big step. It means AI security is being built directly into the infrastructure that powers businesses. Launching an AI application without dedicated security is quickly becoming as unthinkable as running a corporate network without a firewall. This isn't just happening in boardrooms either; governments around the world, like the U.S. with its Executive Order on AI safety, are pushing for safer and more secure AI development.

This video discusses Cisco's acquisition of Robust Intelligence and its implications for the future of AI security.

Beyond Robust Intelligence and model security: The other half of the problem

While companies like Robust Intelligence focus on securing the foundational AI models, which you can think of as the engine of the car, businesses using AI applications have another big challenge. Securing the engine is one thing, but you also have to make sure the car drives safely, follows the rules of the road, and doesn't go off-script.

For teams using AI in customer support, IT service management, or for internal questions, this "application-layer" challenge is where things get real. The risks here are less about hackers and more about your brand's reputation and your customers' trust. An AI agent giving out wrong information, adopting a weird tone, or failing to pass a sensitive issue to a human can be just as damaging as a technical breach.

This is where you need a different kind of robust intelligence, one focused on control, reliability, and trust in the application itself.

This is a problem modern platforms are starting to solve. The best ones are built around a few key ideas:

First, you need granular control. You can't just flip a switch and let an AI run wild. Real control means you get to choose exactly which types of questions the AI automates. For example, tools like eesel AI let you start small by automating simple, repetitive topics while making sure everything else gets escalated to a human. This gives you complete command over your support workflow, so there are no nasty surprises.

Next is risk-free testing. How can you possibly trust an AI before it's talked to a single customer? The answer is simulation. The ability to test your AI setup on thousands of your own past support tickets is a huge advantage. It gives you an accurate forecast of how it will perform and what your cost savings will look like, all before you go live. This kind of powerful simulation is a core feature of eesel AI that many alternatives just don't offer.

Finally, there's seamless integration. The last thing anyone wants is a long, complicated setup that takes months of developer time. The best AI solutions don't force you to rip out your existing tools. Instead, they should plug directly into your helpdesk, whether it's Zendesk, Freshdesk, or Intercom, in just a few minutes. This simple, self-serve approach makes modern AI accessible to everyone, not just massive companies with huge IT budgets.

Robust Intelligence pricing

As you might expect with software aimed at large corporations, Robust Intelligence doesn't list its pricing publicly. To get a quote, you’ll need to schedule a demo and talk to their sales team.

This model makes sense for their kind of complex solution, but it can be a hurdle for teams that just want to get started quickly and see if something works. For many businesses, a transparent, predictable price is pretty important for planning and budgeting without getting stuck in a long sales process.

Building a complete AI safety plan with Robust Intelligence

The rise of Robust Intelligence and its acquisition by Cisco proves one thing for sure: AI security is now a non-negotiable part of any modern tech stack. It’s not an add-on; it’s a necessity.

But a complete strategy really has two parts. First, you need to secure the underlying AI models from technical threats, which is what companies like Robust Intelligence handle. Second, you have to ensure the AI applications you actually use are controlled, reliable, and trustworthy in every single interaction. This is where platforms like eesel AI come into the picture.

For teams in customer support, IT, or internal ops, having truly "robust intelligence" means using AI tools that give you transparency, fine-grained control, and the confidence to test and tweak things safely. It's about building a system you can actually trust, from the deep-down model all the way to the end customer.

Get started with AI you can trust

If you're looking to use AI for customer support without the risks, eesel AI offers a self-serve platform that you can set up in minutes. You can simulate its performance on your own historical tickets and get total control over what gets automated. Give it a try today.

Frequently asked questions

Robust Intelligence is an AI security company that develops a platform to protect AI models and applications throughout their entire lifecycle. It solves the critical problem of securing AI systems against unique, subtle vulnerabilities that traditional cybersecurity tools cannot detect, ensuring they perform safely and reliably.

Robust Intelligence employs a two-pronged approach: AI Validation, which proactively tests models for security flaws before deployment, and AI Protection (AI Firewall), which continuously monitors inputs and outputs in real-time to block threats once models are operational.

Yes, Robust Intelligence is specifically designed to defend against advanced AI threats such as prompt injection and jailbreaking, which trick AI into misbehaving, and data poisoning, where attackers secretly feed bad data to influence model behavior. It also guards against model evasion techniques.

The acquisition of Robust Intelligence by Cisco highlights that AI security is no longer a niche concern but a top-level priority for major companies. It signifies a move towards integrating advanced AI security directly into enterprise infrastructure, making it a fundamental requirement for deploying AI applications.

While Robust Intelligence secures the underlying AI models, a comprehensive strategy also requires managing the application layer to ensure AI behaves reliably in customer-facing scenarios. This involves granular control over AI actions, risk-free testing, and seamless integration with existing tools, as offered by platforms like eesel AI.

No, Robust Intelligence does not list its pricing publicly. Interested businesses need to schedule a demo and consult with their sales team to obtain a quote, as their solutions are typically tailored for large corporate needs.

Share this post

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.