The best Rebellions AI alternatives for business growth in 2025

Stevia Putri

Katelin Teen

Last edited November 14, 2025

Expert Verified

Introduction: The search for powerful and efficient Rebellions AI alternatives

Generative AI seemed to pop up out of nowhere, and now every business is scrambling to figure out how to use it. Underpinning this whole revolution is some incredibly specialized hardware, and the race is on to build the fastest, most efficient chips. You’ve probably heard of startups like Rebellions AI, which are doing some pretty cool things with energy-efficient chips to make AI faster and cheaper to run.

This has led a lot of people to search for the best Rebellions AI alternatives as they think about scaling up. But it brings up a huge question: is sinking a ton of money into custom AI hardware the only way to get ahead? Or is there a simpler, more direct way to get real results with AI right now?

In this guide, we'll look at the big names in the AI chip world. But more importantly, we’ll help you figure out which path actually makes sense for what you’re trying to accomplish.

What is Rebellions AI?

Before we jump into the alternatives, let's do a quick rundown of what Rebellions AI is all about. They're a "fabless" semiconductor company from South Korea. All that means is they design specialized computer chips and let other companies handle the actual manufacturing.

Their main gig is creating AI accelerator chips, like their ATOM and REBEL products. These aren't the kind of processors you have in your laptop; they're built for a very specific job: AI inference.

"Inference" is just the fancy term for an AI model doing its work. For instance, when a customer asks a chatbot a question, the brainpower needed to understand it and spit out an answer is inference. Rebellions AI designs its chips to handle that work super fast without guzzling electricity, which is a big deal for data centers running AI models 24/7.

Our criteria for selecting the top Rebellions AI alternatives

To give you a straight-up comparison, we've sized up the alternatives based on a few things that really matter when you're dealing with AI infrastructure:

- Performance: How fast can this stuff actually run AI tasks? You'll often see this measured in training speed or, for inference, tokens per second.
- Energy Efficiency: A huge part of Rebellions AI's appeal is how much performance it squeezes out of every watt. We looked at how competitors are doing, especially since electricity costs and environmental impact are on everyone's mind.
- Software Ecosystem: A powerful chip is just a fancy paperweight without good software. We checked how well each option is supported by popular frameworks and how easy it is for developers to actually build with it.
- Market Adoption & Accessibility: Is this a niche product for a select few, or can you easily access it through major cloud providers like AWS and Google Cloud?
- Scalability: How well does the solution grow? Can you go from one unit to a massive, interconnected system for the really heavy lifting?
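To make the first two criteria concrete, here is a minimal Python sketch of how throughput (tokens per second) and energy efficiency (tokens per joule) fall out of the same benchmark run. The function names and every measurement below are hypothetical, made up purely for illustration; they are not benchmark results for any real chip.

```python
def tokens_per_second(tokens_generated, elapsed_seconds):
    """Inference throughput: how many tokens the chip produced per second."""
    return tokens_generated / elapsed_seconds


def tokens_per_joule(tokens_generated, elapsed_seconds, avg_power_watts):
    """Energy efficiency: tokens produced per joule of energy consumed."""
    # Energy in joules = average power in watts x time in seconds.
    energy_joules = avg_power_watts * elapsed_seconds
    return tokens_generated / energy_joules


# Hypothetical measurements for two unnamed accelerators (illustrative only).
chip_a = {"tokens_generated": 12_000, "elapsed_seconds": 10.0, "avg_power_watts": 300.0}
chip_b = {"tokens_generated": 9_000, "elapsed_seconds": 10.0, "avg_power_watts": 150.0}

for name, m in {"chip A": chip_a, "chip B": chip_b}.items():
    tps = tokens_per_second(m["tokens_generated"], m["elapsed_seconds"])
    tpj = tokens_per_joule(m["tokens_generated"], m["elapsed_seconds"], m["avg_power_watts"])
    print(f"{name}: {tps:.0f} tokens/s, {tpj:.1f} tokens/joule")
```

In this made-up case, chip A is faster but chip B does more work per unit of energy, which is exactly the trade-off the criteria above are meant to surface.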

Comparison of the best Rebellions AI alternatives in 2025

Here’s a quick look at how the top AI hardware players stack up.

| Company | Key AI Chip/Architecture | Best For | Energy Efficiency Focus | Pricing Model |
|---|---|---|---|---|
| NVIDIA | Blackwell (B200/GB200) | General-purpose AI training & inference | High performance, with efficiency gains in newer models | Enterprise Purchase / Cloud Rental |
| AMD | Instinct (MI300 Series) | High-performance computing & AI | Competitive performance-per-watt | Enterprise Purchase / Cloud Rental |
| Groq | LPU™ Inference Engine | Ultra-low-latency AI inference | High speed and efficiency for inference tasks | Cloud API Access |
| AWS | Trainium & Inferentia | Optimized AI on the AWS cloud | Cost-efficiency within the AWS ecosystem | Cloud Usage-Based |
| Google Cloud | Tensor Processing Unit (TPU) | Large-scale model training & inference | Optimized for TensorFlow and Google's AI workloads | Cloud Usage-Based |
| Intel | Gaudi 3 | Enterprise GenAI training & inference | Price-performance for enterprise workloads | Enterprise Purchase |
| Cerebras | Wafer Scale Engine (WSE-3) | Training massive, single AI models | Solving large-scale compute challenges | System Purchase / Cloud Access |

The 7 best Rebellions AI alternatives

Each of these companies takes a different swing at powering AI. Let's get into the details.

1. NVIDIA

- Description: Let's face it, NVIDIA is the undisputed king of the hill. Their GPUs and the CUDA software that makes them tick are the industry standard for training and running just about every major AI model out there. Their latest Blackwell architecture looks set to keep them on top.
- Use Cases: AI model training, large-scale inference, scientific computing, and, of course, graphics.
- Pros: They have the most mature and complete software ecosystem, top-tier performance for training, and you can find them on every major cloud provider.
- Cons: The price tag is eye-watering. They use a lot of power, and high demand means you often can't get your hands on their latest tech even if you have the cash.
- Pricing: Premium and not at all transparent. Good luck finding a public price list on their site; neither the product pages nor the pricing pages show one. You’ll either buy their hardware inside a larger server or rent time on it through the cloud.

2. AMD

- Description: AMD is NVIDIA's biggest rival in the high-performance GPU space. Their Instinct series, especially the MI300X, is a real challenger, offering comparable performance for both training and inference. For any business that doesn't want to be tied to a single supplier, AMD is a pretty compelling option.
- Use Cases: High-performance computing (HPC), data center AI, and cloud computing.
- Pros: Delivers performance that can go toe-to-toe with NVIDIA, often with a friendlier price tag.
- Cons: Their ROCm software platform isn't as widely used as NVIDIA's CUDA. This could mean a steeper learning curve and a few more headaches for your development team.
- Pricing: Just like NVIDIA, AMD sells to big enterprise customers, so you won't find a price list on their website. The hardware is usually more affordable than similar NVIDIA chips, but you'll have to talk to sales to get a quote.

3. Groq

- Description: Groq is laser-focused on one thing: making AI inference ridiculously fast. They ditched GPUs and built a custom chip called an LPU™ (Language Processing Unit). It’s designed from the ground up to run large language models (LLMs) with almost zero delay, which means you get answers from your AI practically instantly.
- Use Cases: Real-time apps, chatbots, copilots, and anything else where the speed of the AI's response is the most important thing.
- Pros: Ludicrously fast inference speed, predictable performance, and a simple API that developers can pick up quickly.
- Cons: It's a specialist's tool. Groq's LPUs are only for running AI models, not for training them.
- Pricing: Refreshingly transparent. Groq offers pay-as-you-go access through their cloud API, priced per million tokens. This makes it easy to estimate your costs and scale up or down whenever you need to.

| Model | Input Price (per 1M tokens) | Output Price (per 1M tokens) |
|---|---|---|
| Llama 3.1 8B Instant | $0.05 | $0.08 |
| Llama 3.3 70B Versatile | $0.59 | $0.79 |
| Qwen3 32B | $0.29 | $0.59 |
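Per-token pricing turns a cost estimate into simple arithmetic. Here is a rough Python sketch that uses the prices from the table above. The `estimate_cost` helper and the workload figures (50,000 chats a month, about 500 input and 200 output tokens each) are hypothetical assumptions for illustration, and published prices can change, so treat the result as a ballpark.

```python
# USD per 1M tokens as (input, output), copied from the table above.
# These are a snapshot and may be out of date.
GROQ_PRICES = {
    "Llama 3.1 8B Instant": (0.05, 0.08),
    "Llama 3.3 70B Versatile": (0.59, 0.79),
    "Qwen3 32B": (0.29, 0.59),
}


def estimate_cost(model, input_tokens, output_tokens):
    """Estimated spend in USD for a given number of input and output tokens."""
    input_price, output_price = GROQ_PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical monthly workload (assumed numbers, not real usage data).
chats_per_month = 50_000
input_tokens = chats_per_month * 500   # ~500 prompt tokens per chat
output_tokens = chats_per_month * 200  # ~200 response tokens per chat

cost = estimate_cost("Llama 3.3 70B Versatile", input_tokens, output_tokens)
print(f"Estimated monthly cost: ${cost:.2f}")
```

Swapping the model name or the token counts shows quickly how sensitive the bill is to model size and response length.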

4. AWS (Amazon Web Services)

- Description: As the world's biggest cloud provider, AWS figured it would be cheaper to just build its own custom chips. They offer Trainium chips for training AI models and Inferentia chips for inference. It’s a complete, in-house solution for companies already living in the AWS world.
- Use Cases: Best for businesses that are all-in on the AWS ecosystem and want to get their AI spending under control.
- Pros: Seamless integration with AWS services like SageMaker. It can be a lot cheaper than renting NVIDIA GPUs on the same platform.
- Cons: It’s a classic case of vendor lock-in. You can't buy their chips or use them anywhere but the AWS cloud.
- Pricing: You’re billed based on your usage of AWS cloud instances. There’s no separate price for the chips; it's all rolled into the hourly or monthly cost of whatever service you’re using.

5. Google Cloud

- Description: Google was one of the first tech giants to design its own AI hardware, creating Tensor Processing Units (TPUs) to power things like Search and Google Translate. Now you can rent them on Google Cloud Platform, and they are absolute beasts at training massive AI models.
- Use Cases: Training and running enormous AI models, especially if they're built with Google's own TensorFlow or JAX frameworks.
- Pros: Unbelievable performance for huge training jobs and tight integration with Google's AI tools.
- Cons: They really prefer Google's own software, which makes them less flexible than all-purpose GPUs.
- Pricing: You can only get them through Google Cloud Platform on a pay-per-use basis. Their pricing is clear and broken down by the hour, with discounts if you commit for the long haul.

| TPU Version (us-central1 region) | On-Demand Price (per chip-hour) | 3-Year Commitment (per chip-hour) |
|---|---|---|
| TPU v5e | $1.20 | $0.54 |
| Trillium | $2.70 | $1.22 |
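To see what "discounts if you commit" means in practice, here is a small Python sketch that works out the discount implied by the table above and the cost of a sample job. The helper names and the job size (8 chips for 24 hours) are hypothetical assumptions, and the prices are a snapshot that may have changed.

```python
# USD per chip-hour as (on-demand, 3-year commitment), copied from the table above.
TPU_PRICES = {
    "TPU v5e": (1.20, 0.54),
    "Trillium": (2.70, 1.22),
}


def commitment_discount(on_demand, committed):
    """Fraction saved per chip-hour by committing instead of paying on demand."""
    return 1 - committed / on_demand


def run_cost(chips, hours, price_per_chip_hour):
    """Total cost in USD for a job that uses `chips` chips for `hours` hours."""
    return chips * hours * price_per_chip_hour


for version, (on_demand, committed) in TPU_PRICES.items():
    discount = commitment_discount(on_demand, committed)
    # Hypothetical job: 8 chips running for 24 hours.
    print(
        f"{version}: {discount:.0%} cheaper with a 3-year commitment; "
        f"8 chips x 24 h costs ${run_cost(8, 24, on_demand):.2f} on demand "
        f"vs ${run_cost(8, 24, committed):.2f} committed"
    )
```

Both rows work out to a discount of roughly 55%, so the real question is whether your workload is steady enough to justify a three-year commitment.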

6. Intel

- Description: Intel has owned the CPU market for ages and is now trying to muscle into the AI accelerator game with its Gaudi series. The Gaudi 3 chip is being sold as a powerful, open-standard alternative to NVIDIA for big businesses doing generative AI.
- Use Cases: Enterprise AI training and inference, particularly for companies that like open software and don't want to be stuck with one vendor.
- Pros: They’re claiming strong performance that can compete with the top dogs, at what should be an appealing price, all while focusing on open software.
- Cons: They are playing catch-up in a big way. Intel has a tough fight ahead to build a software ecosystem and user base that can truly challenge the leaders.
- Pricing: Like its main competitors, Intel’s pricing is for large enterprise buyers and isn’t listed publicly. Their whole pitch is to offer a more cost-effective option than NVIDIA.

7. Cerebras

- Description: Cerebras has a wild strategy: they build one single, gigantic chip the size of a dinner plate. Their WSE-3 has trillions of transistors, a design that gets rid of the communication delays that happen when you try to link thousands of smaller chips together.
- Use Cases: Training foundational AI models from scratch, complex scientific research, and advanced simulations.
- Pros: It packs an unmatched amount of computing power onto a single chip, which can make the process of training the world's biggest AI models a lot simpler.
- Cons: This is a very specialized and incredibly expensive piece of kit. It’s really only for a handful of well-funded AI labs and tech giants.
- Pricing: You can buy a full Cerebras system or access it through their cloud service, which offers transparent, usage-based pricing. A "Developer" tier starts at just $10, but enterprise plans will require a custom quote.


Is custom hardware the right path? A look at software-first Rebellions AI alternatives

For the people building the next ChatGPT, investing in the hardware we just covered is a no-brainer. But let's be honest, that's not most of us. What about the 99% of businesses that just want to use AI to solve everyday problems, like automating customer support, answering internal questions, or clearing out IT tickets?

For them, the hardware-first path is a long, expensive, and painful road. You're looking at:

- A huge upfront investment: We're talking millions for the hardware, plus all the infrastructure needed to house and power it.
- Finding niche talent: You need a team of highly paid, hard-to-find AI and machine learning engineers to build and maintain everything.
- Waiting forever: It can easily take months, if not years, to go from buying the hardware to actually launching something that helps your business.

There’s a much smarter way. Instead of building the whole engine from scratch, you can use a ready-made AI platform that plugs right into the tools you use every day. This software-first approach is where platforms like eesel AI come in. They give you the power of a world-class AI system in a simple package that starts solving problems on day one.

Why eesel AI is a smarter choice than other Rebellions AI alternatives

Instead of getting bogged down in which chip to buy, think about what problem you actually want to solve. eesel AI is built to be the fastest path from problem to solution, skipping all the cost and complexity of building your own AI stack.

Go live in minutes, not months

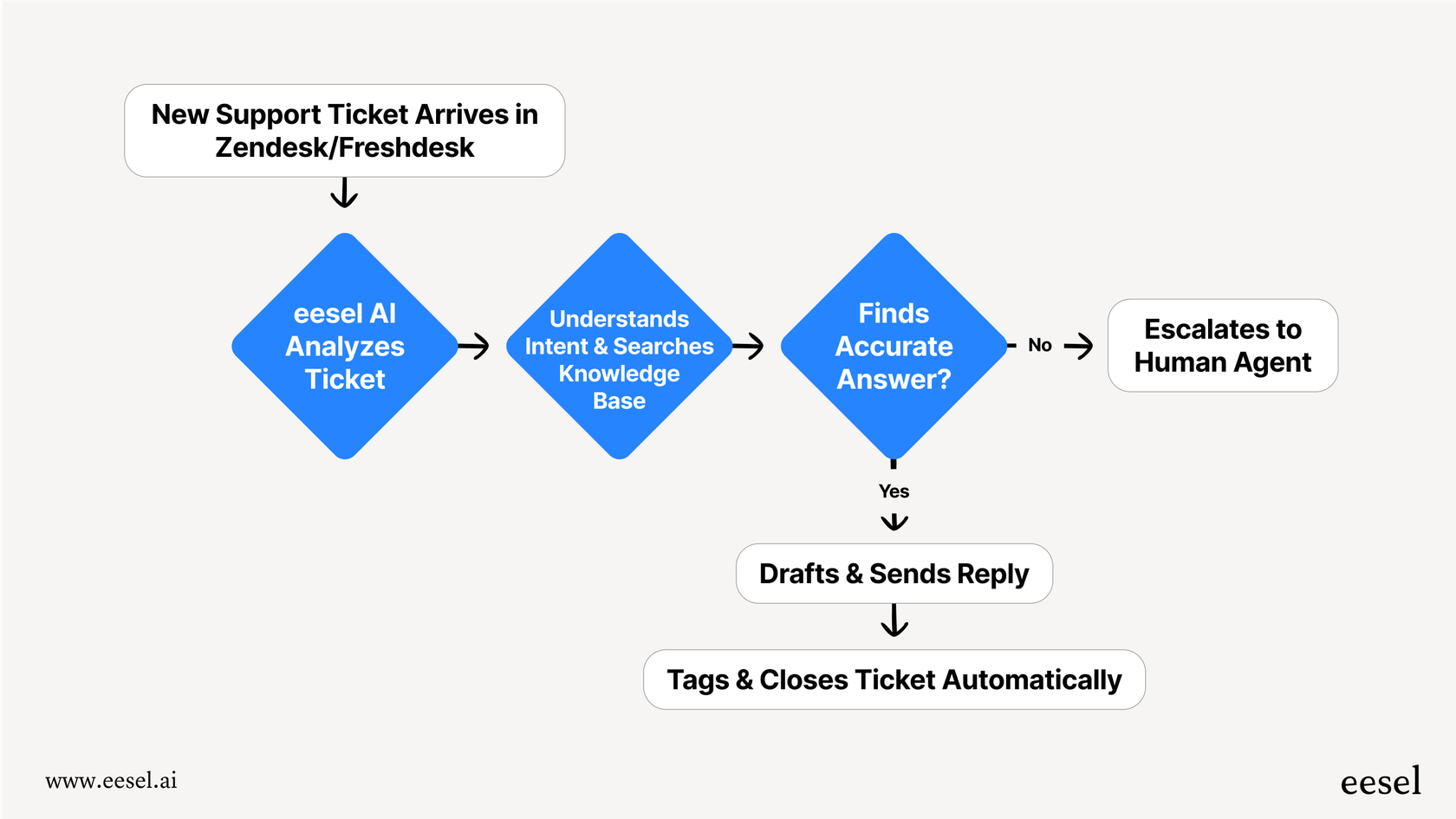

While an engineering team spends months setting up an AI hardware cluster, eesel AI connects to your helpdesk (like Zendesk, Freshdesk, or Intercom) and knowledge sources with simple, one-click integrations. You can have a fully working AI agent running in minutes, all by yourself. No need to sit through a mandatory sales call just to see if it's a good fit.

Unify your knowledge without a data science team

You don't need a team of engineers to prep your data and train a model. eesel AI automatically learns from your past support tickets, help center articles, and internal wikis (like Confluence or Google Docs). This lets it provide accurate, relevant answers that sound like they came directly from your team.

Test with confidence and zero financial risk

You can't exactly get a free trial on a multi-million dollar server. With eesel AI, you can use the powerful simulation mode to test your setup on thousands of your actual past tickets. You'll see exactly how it would have performed and get a clear picture of resolution rates and cost savings before you ever switch it on for a real customer.

Get predictable costs with transparent pricing

The AI hardware world is full of huge, confusing costs. eesel AI offers clear, predictable subscription plans with no per-resolution fees. You'll never get a surprise bill just because you had a busy month. It's a simple, flat rate that you can actually build a budget around.

Choose the right Rebellions AI alternatives path for your business

The AI landscape really comes down to two paths. The hardware path, led by companies like Rebellions AI and its alternatives, is for the teams building the fundamental technology of artificial intelligence.

But the software path, led by platforms like eesel AI, is for every other business that wants to use that power to be more efficient, cut costs, and make customers happier. For the vast majority of companies, the faster, smarter, and more cost-effective way to get results with AI is to start with a software solution that works with the tools you already have.

Ready to see how a software-first AI approach can transform your support operations?

Frequently asked questions

Most businesses don't need to build AI models from scratch. A software-first approach allows companies to rapidly deploy AI for practical problems like customer support automation, avoiding the massive investment and specialized talent requirements of custom hardware.

Hardware-centric alternatives demand significant upfront investment, specialized talent, and long development cycles for deployment. Software platforms, conversely, offer quick integration, simplified knowledge management, and predictable costs, getting you to results much faster.

Hardware-based alternatives typically involve huge upfront costs, high power consumption, and ongoing maintenance, leading to unpredictable expenses. Software solutions usually offer clear, predictable subscription pricing without per-resolution fees, making budgeting simpler and more transparent.

Deploying hardware-focused alternatives requires highly specialized AI and machine learning engineers, who are difficult and expensive to find. Software platforms simplify implementation, allowing existing teams to integrate and manage AI solutions without deep technical expertise.

Hardware-based alternatives like those from NVIDIA or Groq are primarily designed for foundational AI model training, large-scale inference for cutting-edge applications, or highly specialized tasks requiring ultra-low latency. They are best suited for those pushing the boundaries of AI development.

Scaling hardware-based alternatives can be complex and costly, often requiring more hardware purchases or extensive cloud resource management. Software-first platforms typically offer more flexible scaling options through subscription tiers, adjusting easily to changing business needs without major infrastructure changes.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.