If you're building anything with OpenAI's models, you’ve probably had a moment where you just wanted one thing: for the AI to give you the same answer twice. Getting predictable, repeatable outputs is a huge deal for testing, quality control, and just delivering a user experience that isn't all over the place. OpenAI gave us the "seed" parameter and the "system_fingerprint" response field to try to help with this, promising a way to get more deterministic AI responses.

The problem is, a lot of developers are finding that these tools don't always work as advertised. The result is often frustration and some seriously unpredictable AI behavior. This guide will break down what the OpenAI System Fingerprint is, why it’s supposed to be a big deal, and the frustrating reality of using it. More importantly, we'll walk through a better way to build and deploy reliable AI agents, even when the tools underneath them are a bit shaky.

What is the OpenAI System Fingerprint?

The OpenAI System Fingerprint is basically a unique ID for the specific backend setup that OpenAI used to generate a response. Think of it less like a version number for the model and more like one for the entire system running it at that exact moment. According to OpenAI's own documentation, it's meant to be used alongside the "seed" parameter.

Here's the basic idea:

- Prompt: This is the input you give the model.
- Seed: This is just a number you pick to give the model a starting point for its random-ish process. Using the same seed should, in theory, kick things off from the same spot every time.
- System Fingerprint: This is a code OpenAI sends back to you. It's supposed to confirm that the underlying hardware and software configuration haven't changed.

When you make an API call with the same prompt and the same seed, and you get back the same system fingerprint, you should get a mostly identical output. OpenAI describes this as a best-effort behavior and does not guarantee determinism. This is the whole idea behind "reproducible outputs."
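As a rough illustration of the flow above, here is a minimal Python sketch. It assumes `client` is an instance of `OpenAI()` from the official `openai` package; the helper names `ask_with_seed` and `same_conditions`, the default seed, and the default model are illustrative choices, not anything prescribed by OpenAI.

```python
def ask_with_seed(client, prompt, seed=12345, model="gpt-4o"):
    """Send one chat request with a fixed seed and return (text, fingerprint)."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        seed=seed,       # reuse the same seed on every call
        temperature=0,   # also reduce sampling randomness where possible
    )
    # system_fingerprint can be None for some models, so callers should check it.
    return response.choices[0].message.content, response.system_fingerprint


def same_conditions(first, second):
    """True when two (text, fingerprint) results share a non-empty fingerprint."""
    return first[1] is not None and first[1] == second[1]


# Usage with the real SDK (needs the `openai` package and an API key):
#   from openai import OpenAI
#   client = OpenAI()
#   first = ask_with_seed(client, "Summarize our refund policy.")
#   second = ask_with_seed(client, "Summarize our refund policy.")
#   if same_conditions(first, second):
#       print("Outputs identical:", first[0] == second[0])
```

Comparing outputs only when the fingerprints match keeps the check honest: a different fingerprint means the backend changed, so a different answer tells you nothing about your own setup.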

The promise of the OpenAI System Fingerprint: Why consistent AI answers are a big deal

For any business plugging AI into its daily operations, especially something like customer support, an unpredictable model isn't just a quirky feature; it's a genuine risk. The ability to get the same answer for the same question is vital for a few key reasons.

Confident testing and QA

If you're going to let an AI talk to your customers, you have to be able to test it thoroughly. Reproducible outputs let you set a stable baseline. You can run your tests over and over, knowing that any changes you see are because of your tweaks (like a new prompt or an updated knowledge source), not because the model just felt like saying something different. Without that, you're trying to hit a moving target, which makes it nearly impossible to know if you're actually making things better.

A consistent customer experience

Picture this: a customer asks a simple question, gets an answer, and then asks the exact same question an hour later only to get a completely different response. That kind of thing kills trust and just creates confusion. Deterministic AI makes sure that a specific question gets a specific answer, every single time. This is non-negotiable for things like company policies, factual info, or step-by-step guides.

Easier debugging and fixes

When an AI gives a weird or just plain wrong answer, your team has to jump in and figure out why. If the output changes every time they try to recreate the problem, debugging becomes a total nightmare. When you can get the same bad answer every time, your team can pin down the exact problem, analyze the output, and figure out how to fix the prompt or knowledge base that's causing the issue.

The reality: Why the OpenAI System Fingerprint often misses the mark

While the logic behind the "seed" and "system_fingerprint" makes sense, actually using it has been a letdown for many. If you look around the OpenAI community forums, you'll find plenty of developers reporting that the system fingerprint changes constantly and without warning, even when every other setting is locked down.

One user even ran an experiment with 100 trials and found that the "gpt-4-turbo" model gave back four different fingerprints. None of them appeared in a majority of the trials.

This instability pretty much defeats the whole purpose of the feature. If the one thing that's supposed to guarantee a stable result is itself unstable, you can't really count on it to get consistent outputs.
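If you want to run a similar check on your own traffic, a small tally like the one below is enough. This is only a sketch: `summarize_fingerprints` is a hypothetical helper, and the fingerprint strings in the usage comment are made-up placeholders, not values from the forum experiment.

```python
from collections import Counter


def summarize_fingerprints(fingerprints):
    """Count how often each fingerprint appeared and flag whether one dominates."""
    counts = Counter(fingerprints)
    total = len(fingerprints)
    top, top_count = counts.most_common(1)[0] if counts else (None, 0)
    return {
        "distinct": len(counts),
        "counts": dict(counts),
        "most_common": top,
        # A majority means strictly more than half of all trials.
        "has_majority": total > 0 and top_count > total / 2,
    }


# Usage with placeholder data (100 trials, four invented fingerprints):
#   observed = ["fp_a"] * 40 + ["fp_b"] * 30 + ["fp_c"] * 20 + ["fp_d"] * 10
#   summarize_fingerprints(observed)["has_majority"]
```

Feeding it the `system_fingerprint` value from each response gives you a quick picture of how many backend configurations your requests are actually hitting.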

What causes the OpenAI System Fingerprint instability?

OpenAI says that the fingerprint can change when they update their backend systems. That's fair enough, but these changes seem to happen way more often than anyone expected. It suggests that API requests might be getting sent to different server setups or that small, unannounced tweaks are rolling out all the time.

The end result is that even with a fixed seed, you can get different answers to the same prompt just minutes apart. This makes it incredibly tough to build the kind of reliable, testable AI workflows that businesses need. You wouldn't build a house on shifting sand, and you can't build a solid support automation system on an unpredictable foundation.
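One way to keep this from wasting debugging time is to store the fingerprint next to every baseline answer and check it before blaming your own changes. The sketch below assumes each record is a plain dict with "fingerprint" and "text" keys; the function name and the four labels it returns are illustrative, not part of any OpenAI tooling.

```python
def compare_to_baseline(baseline, current):
    """Classify a rerun against a stored baseline.

    Both arguments are dicts with "fingerprint" and "text" keys.
    """
    if baseline["fingerprint"] is None or current["fingerprint"] is None:
        return "unknown_backend"      # no fingerprint returned, nothing to compare
    if baseline["fingerprint"] != current["fingerprint"]:
        return "backend_changed"      # a difference may not come from your edits
    if baseline["text"] == current["text"]:
        return "match"
    return "drift_same_backend"       # same fingerprint, yet the text still moved
```

A "backend_changed" result is a cue to re-record the baseline rather than hunt for a bug in your prompt, while "drift_same_backend" is the case worth investigating.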

The business impact of an unreliable OpenAI System Fingerprint

When the OpenAI System Fingerprint doesn't deliver, it has real-world consequences:

- Wasted developer time: Teams can sink hours trying to debug "inconsistent" AI behavior that's actually caused by backend changes they have no control over.
- A lack of deployment confidence: How can you feel good about automating 30% of your support tickets if you can't be sure the AI will act the same way tomorrow as it did in your tests today?
- Skewed analytics: It's impossible to measure the ROI of your AI or the impact of your improvements if you can't even establish a stable baseline for how it performs.

This is where you have to change your strategy. Instead of fighting for perfect consistency from a tool that can't provide it, you need a platform that gives you a solid way to test and deploy around the model's natural variability.

A better approach: Test with confidence using simulation

Since you can't totally force the model to be deterministic, the next best thing is to have a great way to predict its behavior and de-risk putting it in front of customers. This is where a dedicated AI support platform like eesel AI becomes so valuable. Instead of crossing your fingers for a stable OpenAI System Fingerprint, you can take charge with a solid simulation and rollout engine.

Simulate before you activate

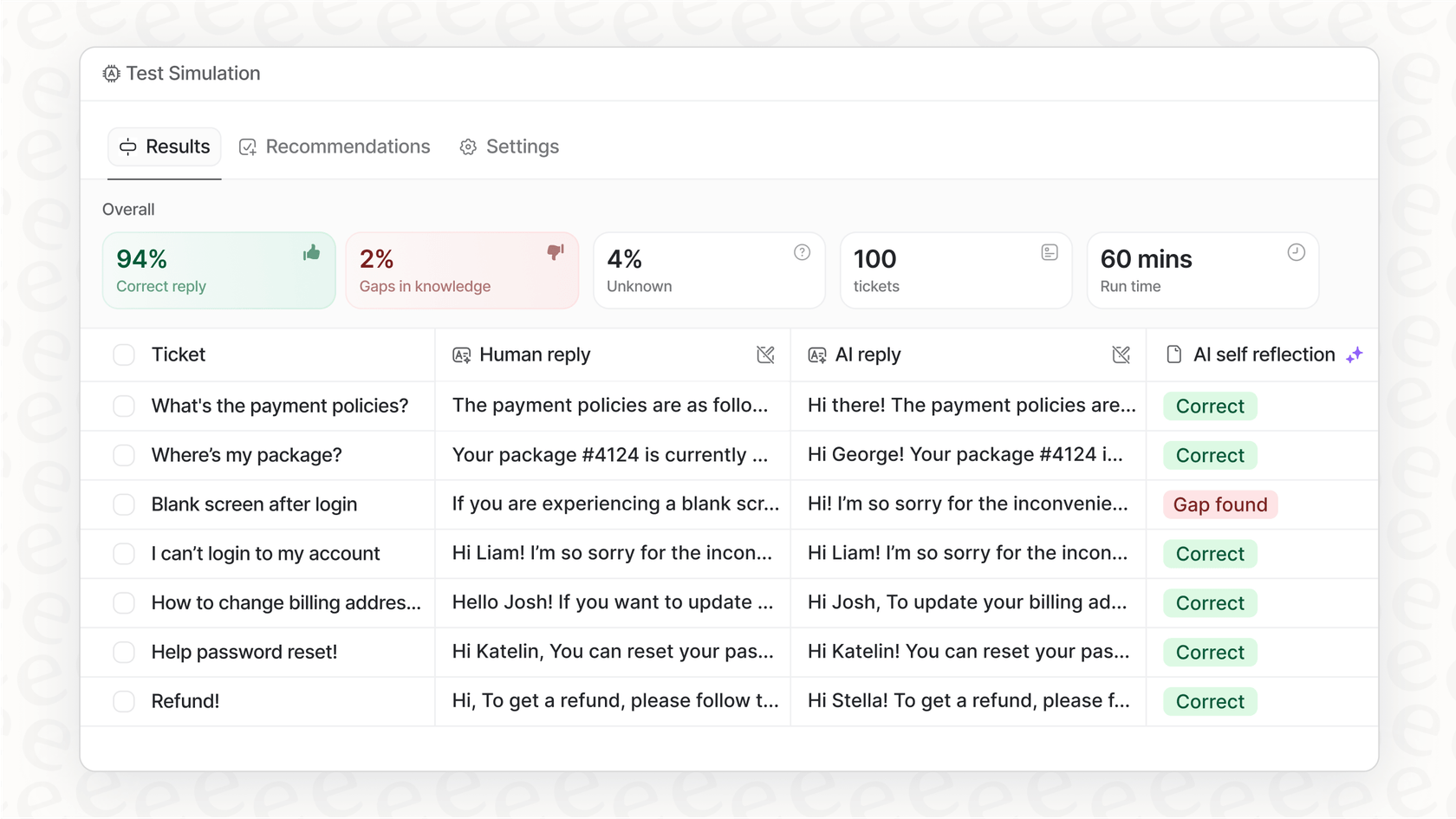

The biggest fear with an unpredictable AI is what it might say to a real customer. The simulation mode in eesel AI tackles this head-on. It lets you test your AI agent on thousands of your actual past tickets in a safe, offline space.

- Forecast your automation rate: See exactly how many tickets your AI would have been able to resolve on its own.
- Check every single response: You can go through the AI-generated replies to make sure they're accurate and match your company's tone.
- Spot knowledge gaps: The simulation dashboard points out the questions the AI stumbled on, showing you exactly where your knowledge base needs a little love.

This kind of risk-free testing gives you a level of confidence that you just can't get from other platforms that lack this kind of deep simulation. You'll know exactly how your agent is likely to behave before it ever interacts with a single customer.
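To make the arithmetic behind a forecast like this concrete, here is a small sketch of how an automation rate and a list of knowledge gaps could be computed from simulated results. It is not eesel AI's implementation; the record shape (a "topic" string and a "resolved_by_ai" flag per ticket) and the function name are assumptions made for illustration.

```python
from collections import Counter


def summarize_simulation(results):
    """Summarize simulated tickets.

    Each result is a dict with a "topic" string and a boolean "resolved_by_ai".
    """
    total = len(results)
    resolved = sum(1 for r in results if r["resolved_by_ai"])
    # Topics the AI failed on, most frequent first, point at knowledge gaps.
    gaps = Counter(r["topic"] for r in results if not r["resolved_by_ai"])
    return {
        "automation_rate": resolved / total if total else 0.0,
        "knowledge_gaps": gaps.most_common(),
    }
```

The automation rate is simply resolved tickets divided by all simulated tickets, so three resolved tickets out of four would forecast 75%.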

Roll out slowly and with total control

Once you’re happy with how it did in the simulation, you don't have to just flip a switch and pray. eesel AI gives you fine-grained control over how you go live.

- Be selective with automation: You can start by letting the AI handle only certain types of simple, repetitive questions.
- Scope its knowledge: You can limit the AI to specific knowledge sources to make sure it doesn't try to answer questions it's not ready for.
- Create clear escape hatches: Set up simple rules for when the AI should hand a conversation over to a human agent.

This method lets you build trust in your automation over time. You can expand its duties as you see how it performs in the real world, all while your team stays completely in the driver's seat.
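The selective-automation and escape-hatch rules can be pictured as a simple routing function. The sketch below is a generic illustration, not how eesel AI is configured: the topic labels, handoff keywords, confidence score, and threshold are all hypothetical, and it leaves knowledge scoping aside.

```python
ALLOWED_TOPICS = {"password_reset", "order_status"}  # hypothetical topic labels
HANDOFF_KEYWORDS = ("refund", "legal", "cancel")      # hypothetical escalation triggers


def route_ticket(topic, message, confidence, min_confidence=0.8):
    """Return "ai" or "human" for one incoming ticket."""
    text = message.lower()
    if any(word in text for word in HANDOFF_KEYWORDS):
        return "human"   # escape hatch: sensitive wording always goes to a person
    if topic not in ALLOWED_TOPICS:
        return "human"   # selective automation: only approved topics are automated
    if confidence < min_confidence:
        return "human"   # low-confidence answers are handed over
    return "ai"
```

Starting with a short allow-list and a high threshold, then loosening both as real-world results come in, mirrors the gradual rollout described above.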

OpenAI System Fingerprint: API pricing overview

The "seed" request parameter and the "system_fingerprint" response field are available across a range of OpenAI models, from GPT-3.5 Turbo all the way to GPT-4o. API pricing is typically based on how many tokens you use, with different costs for input (your prompt) and output (the model's completion).

| Plan/Model | Typical Pricing Structure | Key Features for Developers |
|---|---|---|
| Free | Limited access to explore capabilities. | Access to GPT-5, web search, custom GPTs. |
| Plus/Pro | Monthly subscription ($20-$200/month). | Extended/unlimited access to latest models, higher limits. |
| Business/Enterprise | Per-user, per-month fee or custom pricing. | Dedicated workspace, enhanced security, admin controls. |

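Just a heads-up: The plans in this table are ChatGPT subscriptions, which are billed separately from token-based API usage. For the latest pricing info, it's always best to check the official ChatGPT pricing page.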

While you can access this feature on most paid plans, its unreliability means that paying more for a fancier model doesn't fix the underlying consistency problem. The real risk isn't in the API bill; it's in the operational cost of deploying an agent you can't fully trust.

OpenAI System Fingerprint: Focus on what you can control

Look, getting a large language model to be 100% predictable is still a tough nut to crack, and the OpenAI System Fingerprint has turned out to be a less-than-perfect tool for the job. For businesses that rely on consistency, this is more than just a small annoyance; it's a real roadblock to automating with confidence.

Instead of getting bogged down trying to force determinism out of the model itself, the smarter move is to build a solid operational process around the AI. By using a platform that gives you powerful simulation, gradual rollout options, and detailed controls, you can manage the risks that come with a bit of unpredictability and deploy AI agents that are both helpful and trustworthy.

Ready to stop guessing and start testing? With eesel AI, you can simulate your AI agent on your own historical data and see exactly how it will perform in just a few minutes. Start your free trial today and build your support automation on a foundation you can actually trust.

Frequently asked questions

The OpenAI System Fingerprint is a unique identifier returned with each API response, indicating the specific backend configuration used to generate that output. Its primary goal, alongside the "seed" parameter, is to help achieve reproducible AI responses for the same input.

Despite its intended purpose, the OpenAI System Fingerprint has been reported by many developers to change frequently and unpredictably, even with consistent inputs and "seed" values. This instability makes it difficult to rely on for consistent AI outputs.

An unstable OpenAI System Fingerprint leads to wasted developer time debugging inconsistent behavior, a lack of confidence in deploying AI agents, and skewed analytics when trying to measure performance improvements. It undermines the ability to establish a stable baseline for testing.

Theoretically, if the OpenAI System Fingerprint remained stable, it would allow developers to reproduce specific problematic outputs, making debugging easier. However, its current unreliability often prevents it from consistently delivering this benefit, turning debugging into a greater challenge.

OpenAI states that the OpenAI System Fingerprint can change due to backend system updates. Developers suspect that requests might be routed to different server configurations or that small, unannounced tweaks are frequently rolled out, leading to perceived instability.

Instead of relying solely on the OpenAI System Fingerprint for determinism, a better approach involves using dedicated AI support platforms. These platforms offer robust simulation environments and controlled rollout capabilities to predict and manage AI behavior confidently.

Yes, the OpenAI System Fingerprint and the "seed" parameter are generally available across a range of OpenAI models, including GPT-3.5 Turbo and GPT-4o, regardless of the specific paid API plan. However, its availability does not guarantee its reliability.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.