If you're a developer, you've probably seen what models like GPT-4o can do and thought about building something with them. The main entry point is the OpenAI API, but working with it directly means writing a lot of boilerplate HTTP requests. That's where OpenAI SDKs (Software Development Kits) come in. They're basically toolkits for different programming languages that make calling the API a whole lot easier.

But here's the thing: while the SDKs are great for getting started, building a full-blown, production-ready app for something like customer support is a different beast entirely. It’s not just about a few API calls. You have to think about conversation history, pulling in data from different places, and a ton of testing. That all adds up to a lot of engineering time. So, let’s walk through what the OpenAI SDKs are, what you can do with them, and some of the real-world complexities to keep in mind. We'll also look at a way to get powerful AI agents up and running much faster.

What are the OpenAI SDKs?

At its heart, the OpenAI API is a RESTful service you talk to over HTTP. You could build those requests yourself, but it’s a pain and easy to mess up. OpenAI SDKs are official libraries that do the heavy lifting for you. They give you simple functions and classes to use the API right from your favorite programming language.

Think of an SDK as a friendly wrapper. Instead of sweating the details of auth headers, request formatting, and parsing responses, you can just make a straightforward function call like "client.responses.create()".
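To make the "friendly wrapper" idea concrete, here is a minimal sketch of what you would assemble by hand for a single request, next to the equivalent SDK call. It assumes the "https://api.openai.com/v1/responses" endpoint and the "openai" Python package; build_raw_request and ask_with_sdk are illustrative names, not part of any library.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/responses"


def build_raw_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Assemble the HTTP request by hand: URL, auth header, JSON body."""
    body = json.dumps({"model": "gpt-4o", "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_with_sdk(prompt: str) -> str:
    """The same call through the SDK (requires `pip install openai`)."""
    # Imported lazily so this sketch loads even without the package installed.
    from openai import OpenAI

    client = OpenAI()  # picks up OPENAI_API_KEY from the environment
    response = client.responses.create(model="gpt-4o", input=prompt)
    return response.output_text
```

The hand-built version still leaves you to send the request, check the status code, retry on failure, and parse the JSON reply, which is the part the SDK takes off your plate.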

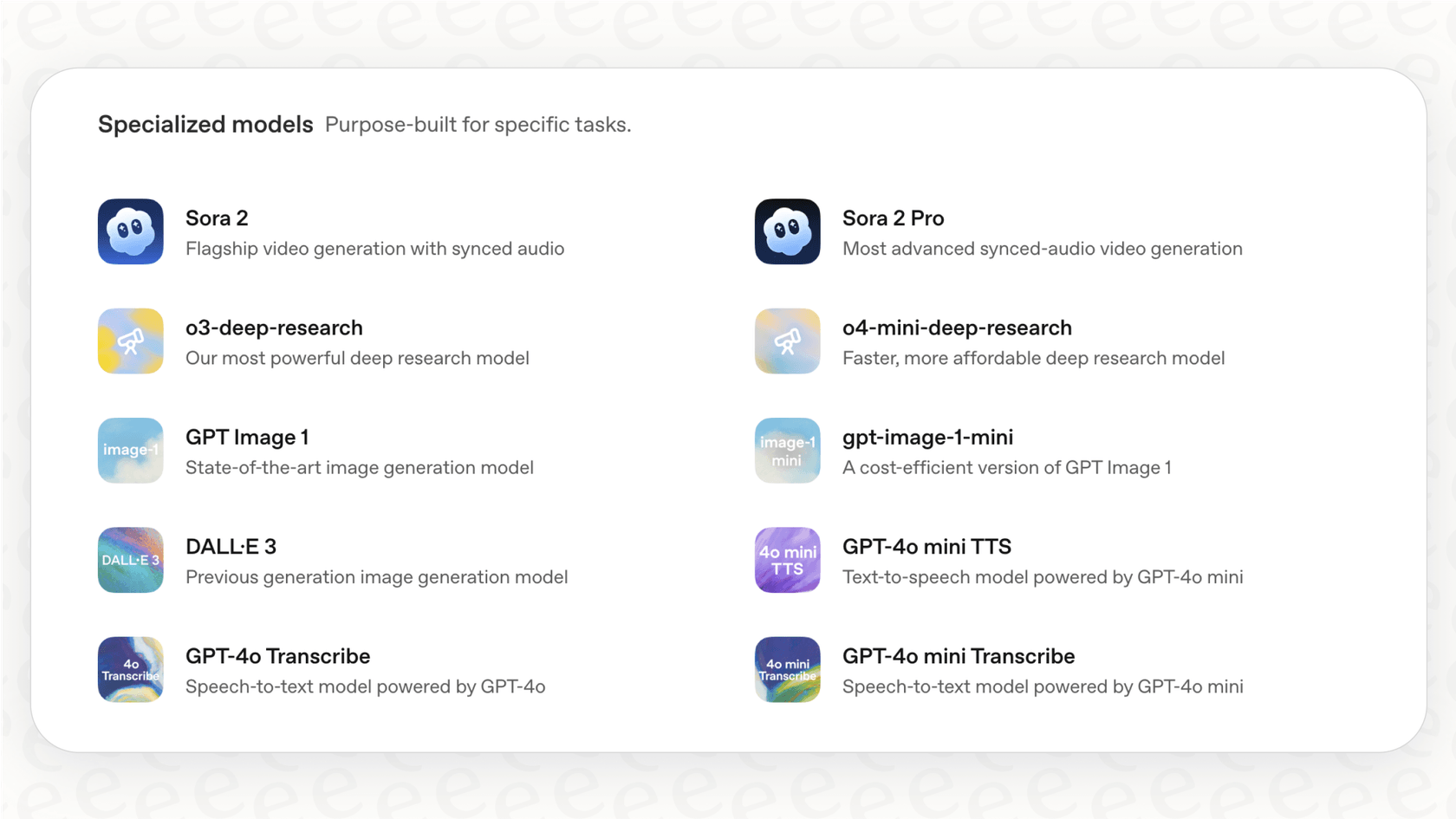

OpenAI has official SDKs for a bunch of popular languages:

- Python: The go-to for most AI and machine learning work.
- TypeScript / JavaScript (Node.js): Perfect for web apps and backend services.
- .NET: For anyone working in the C# and Microsoft world.
- Java: A solid choice for bigger, enterprise-style applications.
- Go: Great if you need speed and good concurrency.

And if your language of choice isn't on that list, the community has probably built a library for it. The general idea is always the same: install the SDK, grab your secret API key from your OpenAI dashboard, and you can start calling the models from your code.
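For Python, the setup usually amounts to running "pip install openai" and exporting the key as the OPENAI_API_KEY environment variable, which the SDK reads by default. The helper below is a small sketch of failing early when the key is missing; require_api_key is a hypothetical name chosen for this example.

```python
import os


def require_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or fail with a clear message."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set. Export it in your shell instead of "
            "hard-coding the key in your source code."
        )
    return key
```

Keeping the key in the environment rather than in the code means it never ends up in version control by accident.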

Key features and use cases for the OpenAI SDKs

Once you're set up, the SDKs open the door to a lot of different capabilities. Here are the main things people build with them.

Text and response generation

This is the bread and butter. Using the responses.create method, you can send a prompt to a model like "gpt-4o" and get a text reply. This is the foundation for everything from a simple content generator to a full-on conversational chatbot. To give the model context, you can pass a series of messages in your request, which helps it keep track of the conversation.
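Here is one way the "series of messages" idea might look in Python. This is a sketch rather than a recommended design: add_turn, trim_history, and reply are made-up helper names, and the 20-message cap is an arbitrary assumption to keep prompts from growing forever.

```python
from typing import Dict, List

Message = Dict[str, str]
ALLOWED_ROLES = {"user", "assistant", "system", "developer"}


def add_turn(history: List[Message], role: str, content: str) -> List[Message]:
    """Return a new history with one more message appended."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unexpected role: {role}")
    return history + [{"role": role, "content": content}]


def trim_history(history: List[Message], max_messages: int = 20) -> List[Message]:
    """Keep only the most recent messages so the prompt stays bounded."""
    if max_messages <= 0:
        return []
    return history[-max_messages:]


def reply(history: List[Message]) -> str:
    """Send the conversation so far and return the model's next message."""
    # Imported lazily so this sketch loads even without the package installed.
    from openai import OpenAI

    client = OpenAI()
    response = client.responses.create(model="gpt-4o", input=trim_history(history))
    return response.output_text
```

Each call sends the prior turns again, so longer conversations mean more input tokens per request.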

Multimodality (image, audio, and file analysis)

Newer models like GPT-4o aren't limited to just text; they're multimodal. The OpenAI SDKs let you include different kinds of content in your prompts:

- Images: You can pass an image URL or upload one directly and ask the model to tell you what's in it, answer questions about it, or even pull text out of it.
- Audio: The models can transcribe audio files into text.
- Files: You can upload documents like PDFs and have the model summarize them or answer questions based on their content.
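As an illustration of the image case, the sketch below builds a single user message that pairs a question with an image, either by URL or as inline base64 data. The "input_text" and "input_image" content types follow the Responses API message format; image_message is a hypothetical helper name, and the PNG default is an assumption.

```python
import base64
from typing import Any, Dict, Optional


def image_message(
    question: str,
    *,
    url: Optional[str] = None,
    image_bytes: Optional[bytes] = None,
    mime_type: str = "image/png",
) -> Dict[str, Any]:
    """Build one user message that pairs a text question with an image."""
    if (url is None) == (image_bytes is None):
        raise ValueError("pass exactly one of url or image_bytes")
    if image_bytes is not None:
        # Local images are sent inline as a base64 data URL.
        encoded = base64.b64encode(image_bytes).decode("ascii")
        url = f"data:{mime_type};base64,{encoded}"
    return {
        "role": "user",
        "content": [
            {"type": "input_text", "text": question},
            {"type": "input_image", "image_url": url},
        ],
    }
```

The returned dictionary would go inside the list you pass as the input argument of client.responses.create.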

Extending models with tools (function calling)

This is where things get really interesting. The "tools" feature (which used to be called function calling) lets you give the model a set of custom functions it can ask to use. For example, you could define a function called get_weather. If a user asks, "What's the weather in Paris?", the model won't just make something up. It will return a JSON object saying it wants to call your get_weather function with the parameter location: "Paris".

Your code then runs the function, fetches the actual weather data, and feeds that information back to the model. The model then uses this data to give the user a natural-sounding answer. This is how you build apps that can interact with the outside world.
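The get_weather round trip could be sketched like this. The tool definition follows the function-tool shape used by the Responses API; the get_weather body is a placeholder that returns canned data, and run_function_call and LOCAL_FUNCTIONS are names invented for this example.

```python
import json
from typing import Any, Callable, Dict

# Description of the function that is sent to the model alongside the prompt.
GET_WEATHER_TOOL = {
    "type": "function",
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}


def get_weather(location: str) -> Dict[str, Any]:
    """Placeholder: a real version would query a weather service."""
    return {"location": location, "temperature_c": 18, "conditions": "cloudy"}


# Maps the function names the model may request to local Python callables.
LOCAL_FUNCTIONS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_function_call(name: str, arguments_json: str, call_id: str) -> Dict[str, str]:
    """Run the function the model asked for and package the result to send back."""
    if name not in LOCAL_FUNCTIONS:
        raise KeyError(f"model requested unknown function: {name}")
    arguments = json.loads(arguments_json)  # the model sends arguments as a JSON string
    result = LOCAL_FUNCTIONS[name](**arguments)
    return {
        "type": "function_call_output",
        "call_id": call_id,
        "output": json.dumps(result),
    }
```

In a real loop you would pass tools=[GET_WEATHER_TOOL] to client.responses.create, look for output items whose type is "function_call", feed each item's name, arguments, and call_id into run_function_call, and send the results back in a follow-up request so the model can write its final answer.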

A quick tip: while you can build your own function calls to look up order data from your database, you have to build and host all that backend logic yourself. An alternative is a platform like eesel AI, which gives you pre-built AI Actions and a visual workflow builder. You can connect to tools like Shopify or internal databases without writing all the plumbing from scratch, saving a ton of dev time.

Building advanced agents with the Agents SDK

For more complex tasks, OpenAI has a specialized Agents SDK. This is for building "agentic" apps, where an AI can tackle multi-step problems and even coordinate with other AI agents. It's powerful stuff, but it's also a big step up in complexity.

The Agents SDK is based on a few main ideas:

- Agents: An LLM that has specific instructions and a toolkit of functions it can use.
- Handoffs: This lets one agent pass a task to another, more specialized agent. Think of a general triage agent handing off a bug report to a technical support agent.
- Guardrails: These are rules you set up to check an agent's work, making sure it stays on topic and doesn't go off the rails.
- Sessions: The SDK automatically manages the conversation history for you, even as tasks are passed between different agents.

Using the Agents SDK allows you to create some pretty sophisticated logic in Python or TypeScript. But it's a very low-level tool. Building something that's reliable enough for a business requires a deep understanding of agent design and solid software engineering.
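For a feel of what this looks like, here is a minimal sketch of the triage-to-technical-support handoff using the Python Agents SDK (installed with "pip install openai-agents"). The agent names and instructions are made up for this example, and guardrails and sessions are left out to keep it short.

```python
def build_triage_agent():
    """Create a triage agent that can hand off to a technical support agent."""
    # Imported lazily so this sketch loads even without the package installed.
    from agents import Agent

    technical_support = Agent(
        name="Technical support",
        instructions="Help the user diagnose and report software bugs.",
    )
    return Agent(
        name="Triage",
        instructions=(
            "Answer general questions yourself. "
            "Hand bug reports off to the technical support agent."
        ),
        handoffs=[technical_support],
    )


def run_once(message: str) -> str:
    """Run the triage agent on one message and return the final text output."""
    from agents import Runner

    result = Runner.run_sync(build_triage_agent(), message)
    return result.final_output
```

Calling run_once("The export button crashes the app") would need a valid OPENAI_API_KEY. Even in this small form, deciding what each agent is told and when it should hand off is a design problem you have to solve yourself.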

This is really where a purpose-built platform comes in handy. Piecing together a multi-agent support system yourself is a major undertaking. For business needs like customer service, a platform approach is almost always faster and easier to scale. For instance, eesel AI gives you a fully customizable workflow engine. You can define how your AI agents should behave, set up escalations (which are like handoffs), and choose your knowledge sources from a simple dashboard. This lets you go live in minutes, not months.

The hidden costs and challenges of using OpenAI SDKs

The SDKs make it seem easy to get started, but the path from a simple script to a production app has some bumps and hidden costs you should know about.

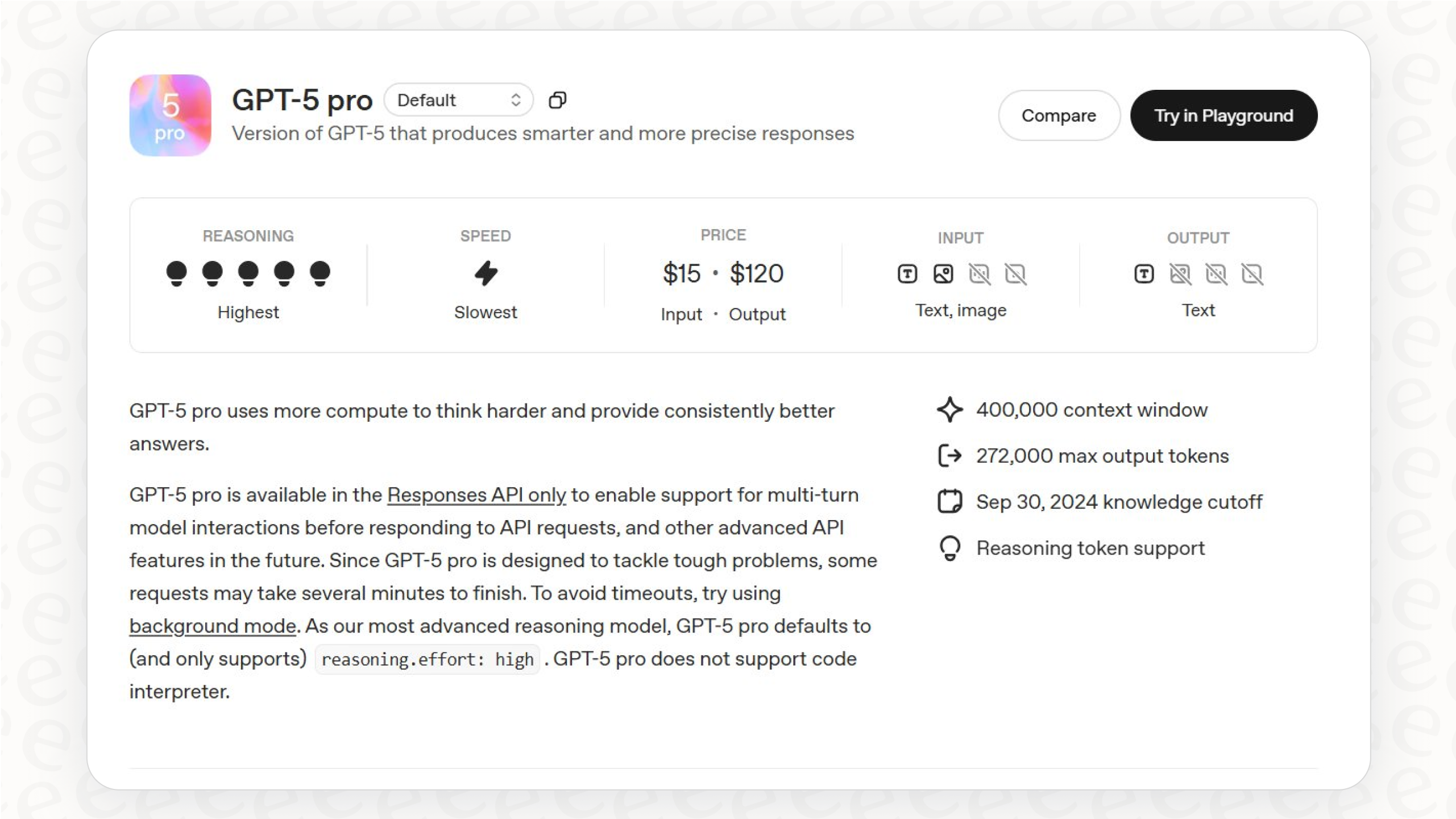

Understanding API pricing

OpenAI's pricing is mostly pay-as-you-go, based on "tokens" (chunks of text; as a rule of thumb, one token is about four characters, or roughly three-quarters of an English word). You pay for the tokens you send in your prompt (input) and the tokens the model sends back (output). These costs can sneak up on you, especially if you're sending long prompts or handling a lot of conversations.

Here's a quick look at the standard pricing for a few models (per 1 million tokens, as of late 2025):

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| gpt-4o | $2.50 | $10.00 |
| gpt-4o-mini | $0.15 | $0.60 |
| gpt-4.1 | $2.00 | $8.00 |
| gpt-5-mini | $0.25 | $2.00 |

Source: OpenAI Pricing. Prices are for the standard tier and might change.
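To see how these rates turn into a bill, here is a small estimator built on the prices in the table above. The traffic figures in the note that follows are invented purely for illustration, and estimate_cost and monthly_estimate are hypothetical helper names.

```python
# USD per 1M tokens as (input, output), copied from the table above.
# Standard tier; prices are subject to change.
PRICES_PER_MILLION = {
    "gpt-4o": (2.50, 10.00),
    "gpt-4o-mini": (0.15, 0.60),
    "gpt-4.1": (2.00, 8.00),
    "gpt-5-mini": (0.25, 2.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost for a given number of input and output tokens."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def monthly_estimate(
    model: str,
    conversations: int,
    input_tokens_each: int,
    output_tokens_each: int,
) -> float:
    """Scale a per-conversation estimate up to a month of traffic."""
    return estimate_cost(
        model,
        conversations * input_tokens_each,
        conversations * output_tokens_each,
    )
```

Assuming 10,000 conversations a month at 1,500 input and 400 output tokens each, this works out to about $4.65 on gpt-4o-mini and about $77.50 on gpt-4o. The same traffic costs very different amounts depending on the model, and the total moves with every change in prompt length.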

And it's not just the models. Other features have costs too:

- Tools: Using built-in tools like Code Interpreter or File Search adds extra fees per session or based on storage.
- Fine-Tuning: If you train a custom model, you'll pay for the training process and for using the model.

This per-token pricing can make your monthly bill unpredictable, which isn't great for budgeting. In contrast, services like eesel AI offer clear, predictable pricing based on a fixed number of AI interactions. You know exactly what you're paying for, which makes forecasting costs a lot easier.

The engineering overhead of building a complete solution

An API call is just one tiny part of a real application. A genuinely helpful AI support agent needs a whole lot of infrastructure behind it, all of which you have to build and maintain.

- Knowledge Management (RAG): For an AI to answer questions accurately, it needs context from your company's documents. You'll have to build a Retrieval-Augmented Generation (RAG) system. This involves breaking up your documents, creating embeddings, setting up a vector database, and building a way to retrieve the right information.
- Integrations: Your AI needs to talk to your helpdesk (Zendesk, Intercom), your internal knowledge bases (Confluence, Google Docs), and other business tools (Shopify, Jira). Each of those connections is its own mini-project.
- Testing and Validation: How do you know if your AI is actually helping or making things worse? You need to build a testing framework to run it against your past support tickets to see how well it performs.
- Maintenance and Improvement: Models get updated, your internal documents change, and your business needs evolve. A custom solution needs constant care and feeding to stay effective.
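To give a sense of the moving parts in the RAG item, here is a toy sketch of the chunk, embed, retrieve, and prompt steps in plain Python. It deliberately swaps in word counts for a real embedding model and an in-memory list for a vector database, so treat it as an illustration of the flow rather than something to deploy; all function names are made up.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, max_words: int = 40) -> List[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return the top_k chunks most similar to the question, best first."""
    query = embed(question)
    scored = [(cosine(query, embed(chunk)), chunk) for chunk in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]


def build_prompt(question: str, chunks: List[str]) -> str:
    """Put the retrieved context in front of the question for the model."""
    context = "\n".join(f"- {text}" for _, text in retrieve(question, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

A production version would replace embed with calls to an embedding model, store the vectors in a real database, and keep everything in sync as documents change, which is where most of the engineering effort goes.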


These are exactly the headaches that platforms like eesel AI are built to solve. eesel connects to all your knowledge sources with one-click integrations, takes care of the entire RAG pipeline for you, and even has a powerful simulation mode to test your AI agent on thousands of real tickets before a single customer ever talks to it.

A simpler path to production-ready AI

OpenAI SDKs are fantastic developer tools. They are the essential building blocks for anyone doing deep, custom AI work.

But for specific business problems, like automating customer support, streamlining IT helpdesks, or setting up an internal Q&A bot, building from the ground up is often a slow, expensive, and risky journey. The question isn't just about what's technically possible; it's about how quickly you can get value, how reliable the final product will be, and what the total cost will be over time.

Why spend months reinventing the wheel when a dedicated platform can give you a more powerful, integrated, and battle-tested solution in a fraction of the time? By handling the messy parts like data pipelines, integrations, and agent logic, platforms like eesel AI let your team focus on what really matters: creating a great experience for your customers and employees.

If your goal is to solve a support problem today, not to kick off a long-term R&D project, then a platform-based approach is probably the right call.

Go live with your AI agent in minutes

Ready to see what a production-grade AI support agent can do for you? With eesel AI, you can connect your helpdesk and knowledge bases in one click, simulate performance on your real data, and roll out a fully customized AI agent, copilot, or chatbot. All without writing a single line of code.

Start your free trial today and see for yourself.

Frequently asked questions

OpenAI SDKs are official libraries for various programming languages that act as a wrapper around the OpenAI API. They handle low-level details like HTTP requests, authentication, and response parsing, enabling developers to make API calls with simple, language-specific function calls.

OpenAI provides official SDKs for several popular programming languages, including Python, TypeScript/JavaScript (Node.js), .NET (C#), Java, and Go. These SDKs cater to a wide range of development environments for building different types of applications.

The OpenAI SDKs enable multimodal capabilities by allowing you to include various content types such as image URLs, uploaded images, audio files, and documents directly within your prompts. This allows models like GPT-4o to analyze and respond to diverse inputs beyond just text.

Yes, OpenAI SDKs fully support the "tools" feature (formerly function calling), which allows you to define custom functions that the model can request to use. When the model determines a function is relevant to a user's request, it will return a JSON object specifying the function and its parameters for your code to execute.

Key challenges include significant engineering overhead for implementing knowledge management (RAG), building and maintaining custom integrations, and developing robust testing frameworks. These aspects demand substantial development time and ongoing maintenance for a production-ready solution, in addition to API pricing.

The specialized Agents SDK is designed for building sophisticated agentic applications, providing features like agent handoffs, guardrails for controlled behavior, and automatic session management for conversation history. It facilitates a structured approach for multi-step problem-solving and coordinating multiple AI agents, simplifying complex agent logic.

While OpenAI SDKs are excellent for custom and deep AI development, dedicated platforms like eesel AI often offer a faster and more efficient path to production for specific business solutions. These platforms abstract away complex infrastructure, data pipelines, and integrations, significantly reducing engineering overhead and speeding up deployment.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.